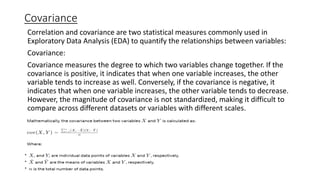

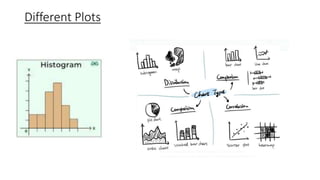

Exploratory Data Analysis (EDA) is an initial step in the data analysis process aimed at understanding the structure, relationships, and patterns within a dataset. Through EDA, analysts employ techniques like summary statistics, visualization, and dimensionality reduction to uncover insights, identify anomalies, and form hypotheses. Common EDA techniques include examining summary statistics, univariate and bivariate distributions, and relationships between variables through graphical and non-graphical methods. The goals of EDA are to gain insights, detect anomalies, and generate hypotheses for further investigation.