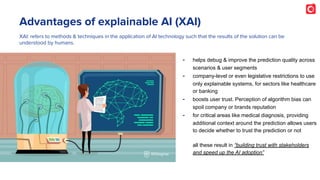

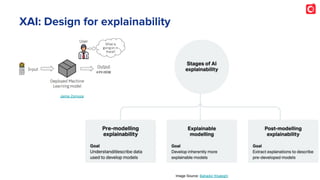

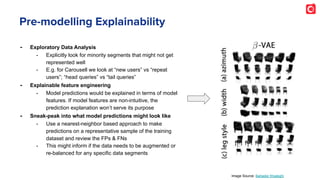

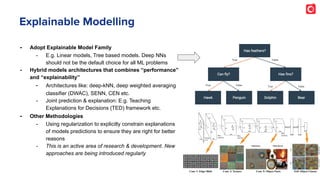

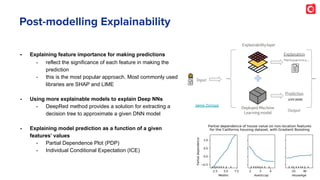

The document discusses the necessity of AI for enhancing personalization and the importance of building explainable AI in-house, highlighting Carousell's growth as a leading classifieds marketplace. It outlines strategies for developing explainable AI, including pre-modeling, explainable modeling, and post-modeling techniques, to foster user trust and mitigate algorithm bias. The document emphasizes that while sophisticated AI models may offer accuracy, explainability is crucial for user acceptance and effective decision-making.

![AI led Matchmaking](https://image.slidesharecdn.com/explainableaipoweredpersonalisedcontentfeeds-201117093001/85/Explainable-ai-powered-personalised-content-feeds-14-320.jpg)

FINE: match

AI provides you the variety of colors & brushes to create a beautiful painting. (Image Source: PNGGuru)

Overview of COUPLENET model architecture illustrating the computation of similarity score for two users. [Image: courtesy of Yi Tay]

… algorithm was able to spot what mutual interests between users it thought suggested a good match... The researchers write that “we believe ‘hidden’ (latent) patterns (such as emotions and personality) of the users are being learned and modeled in order to make recommendations.” ... their method likely overcomes issues that crop up on dating sites, like deceptive self-presentation, harassment, and bots.

- article link

DEEP CONTENT UNDERSTANDING

COMPREHENSIVE USER UNDERSTANDING

ABILITY TO MAKE A FINE MATCH