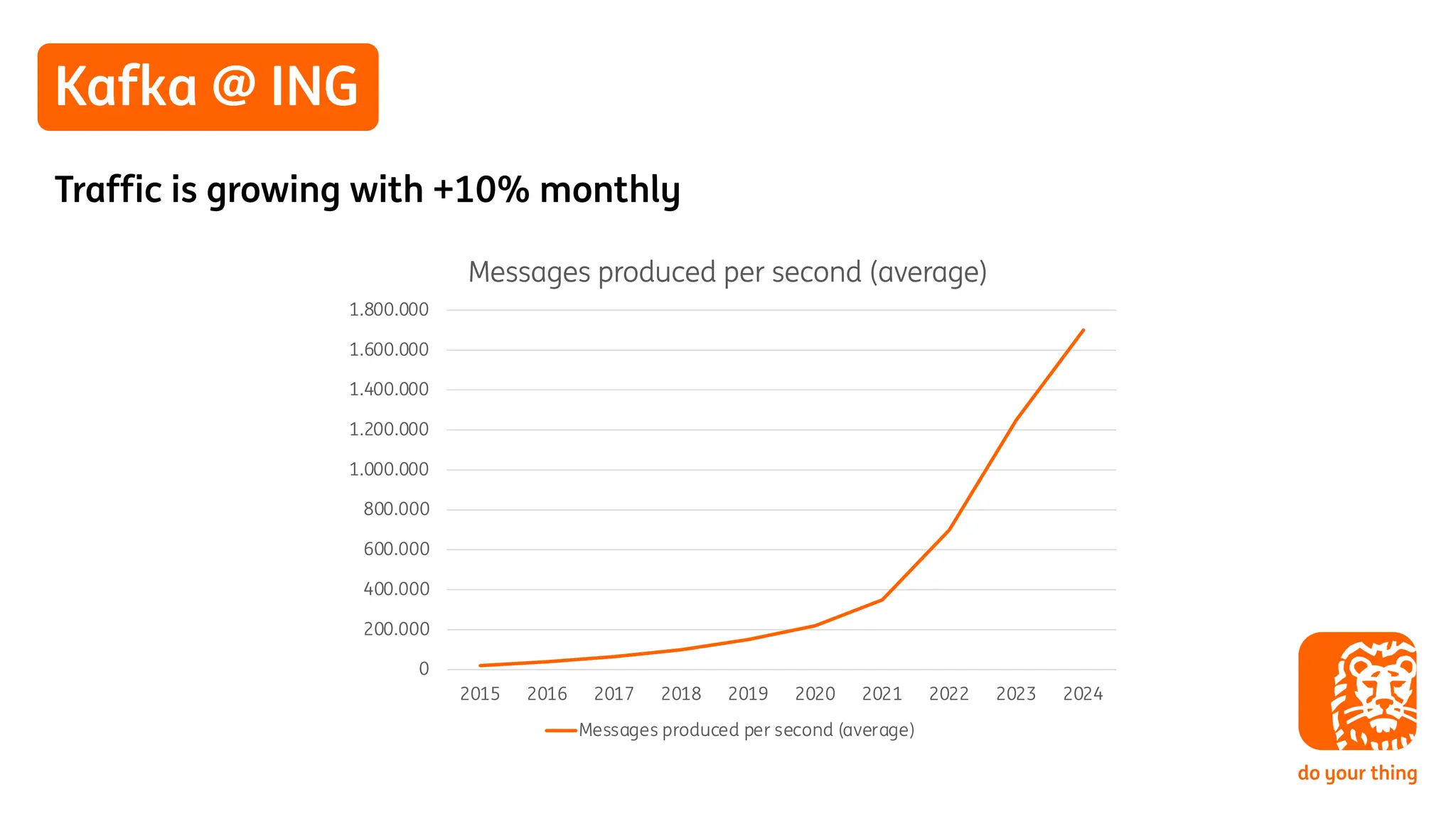

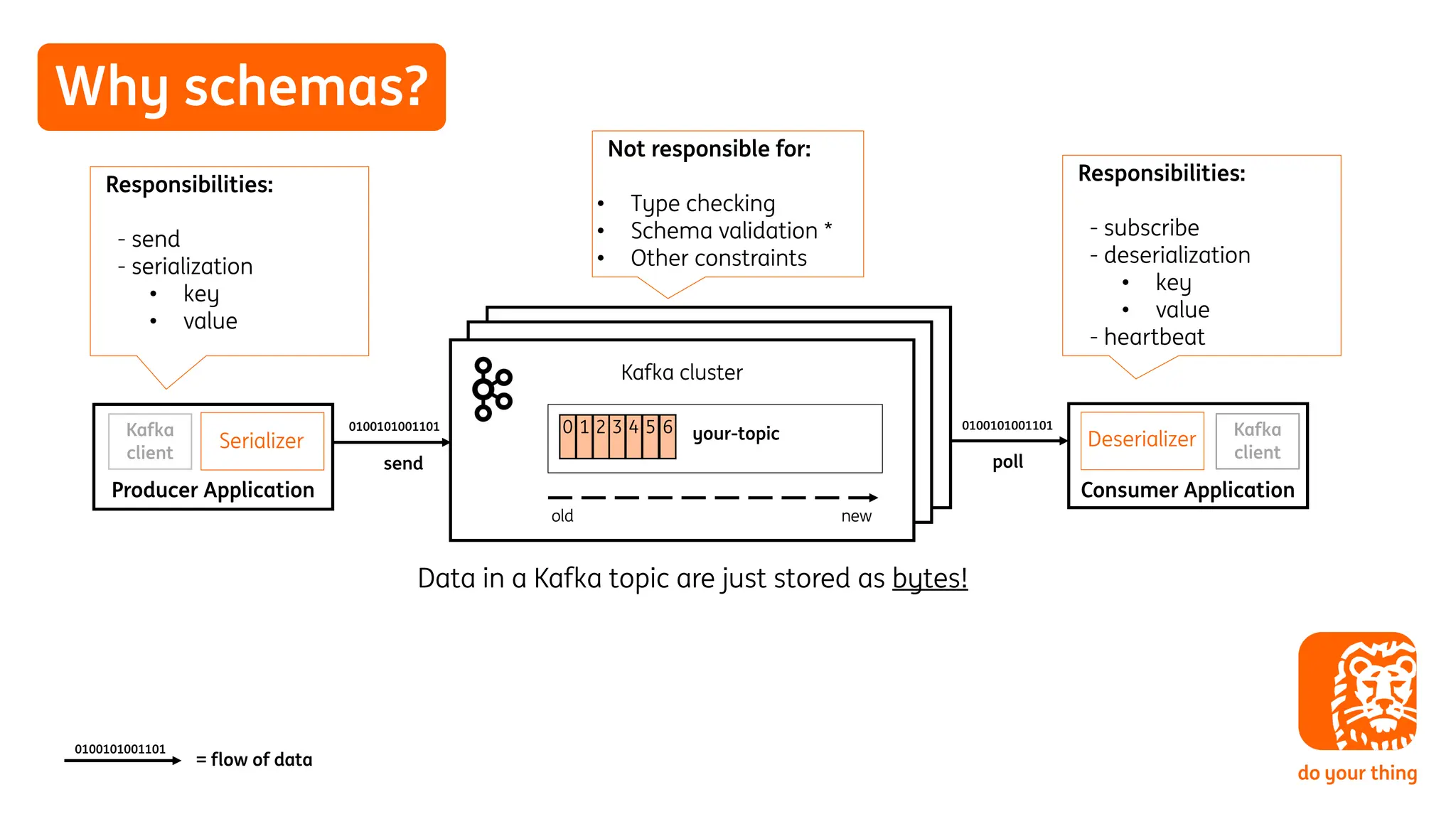

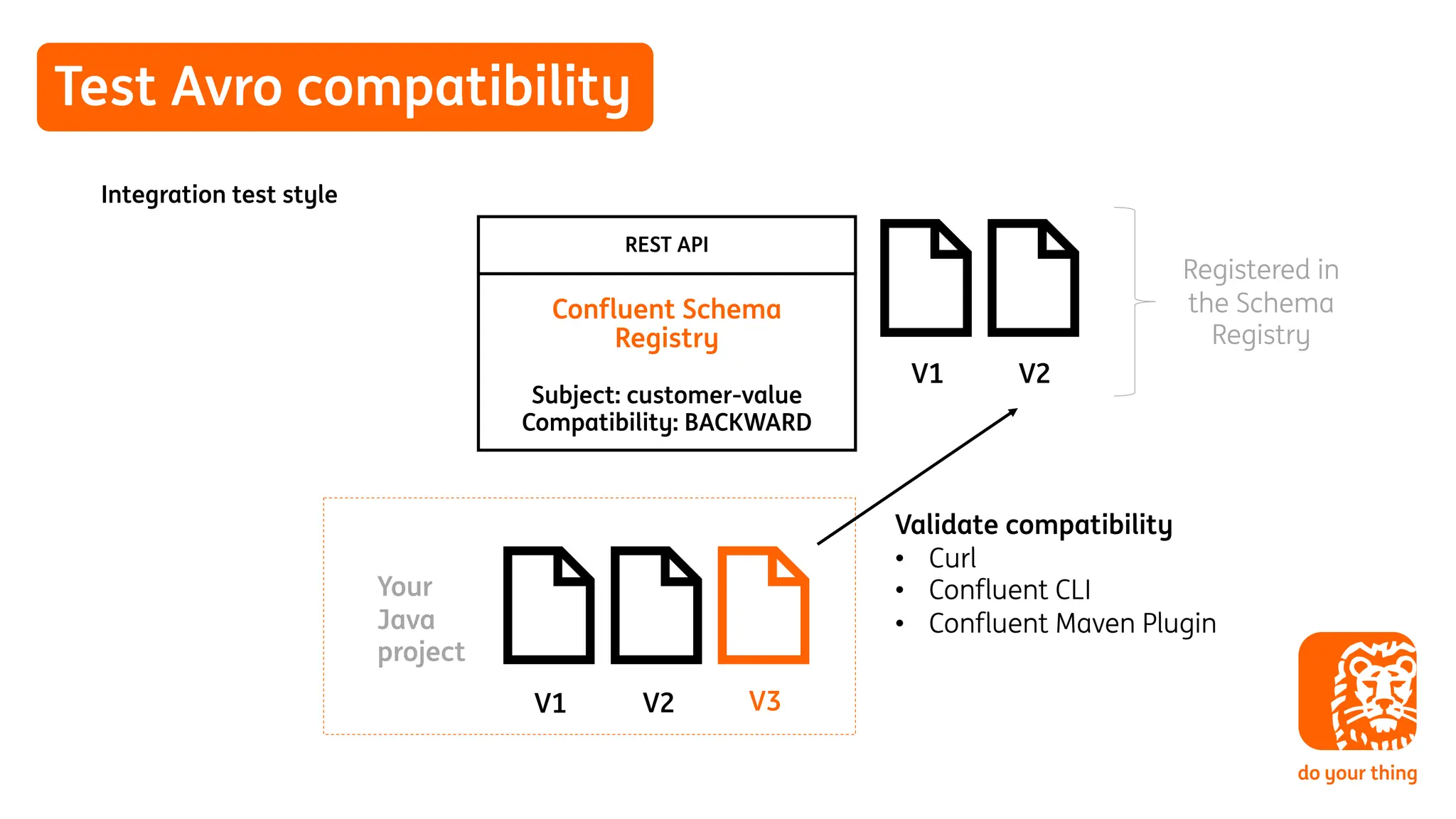

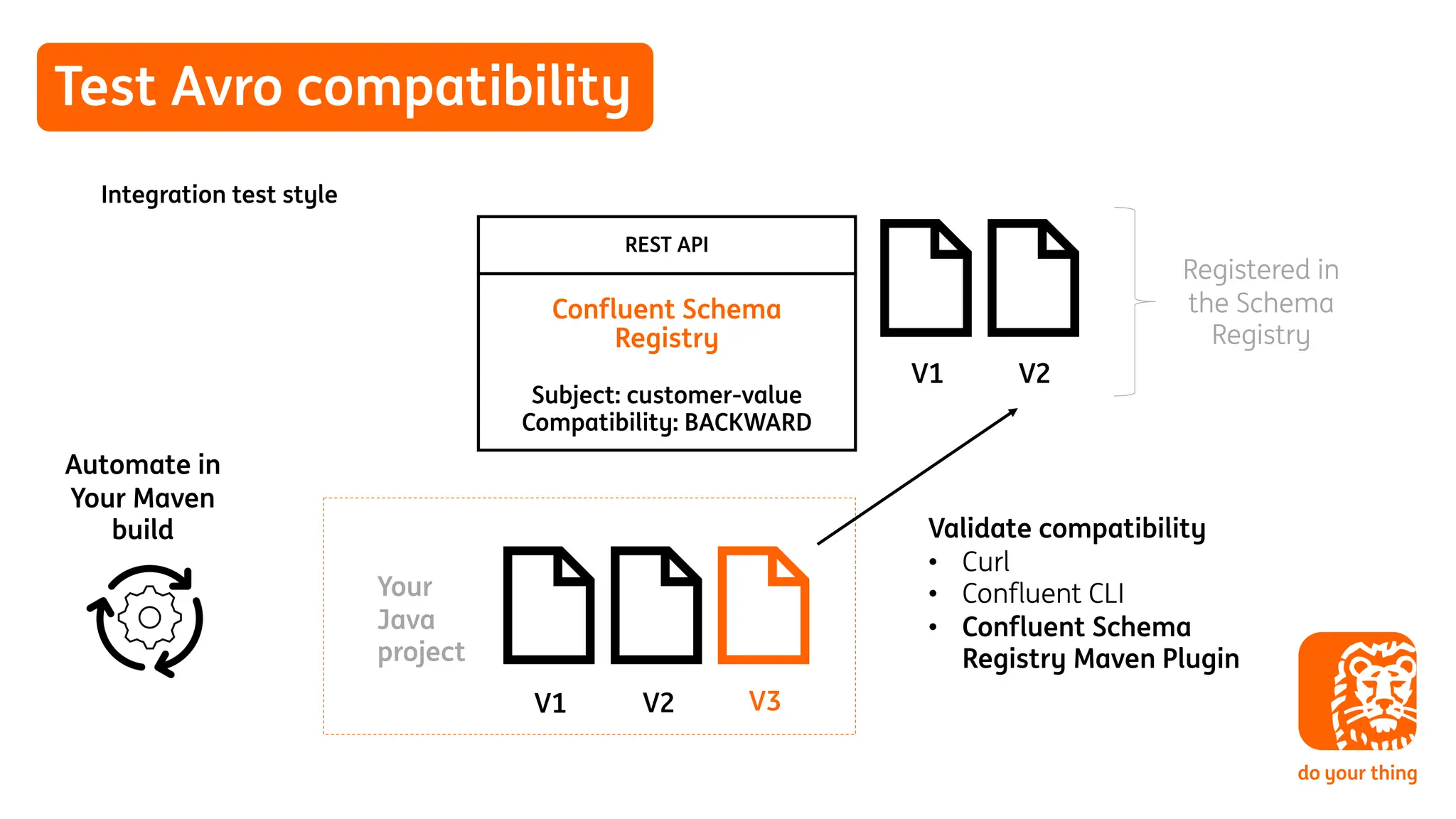

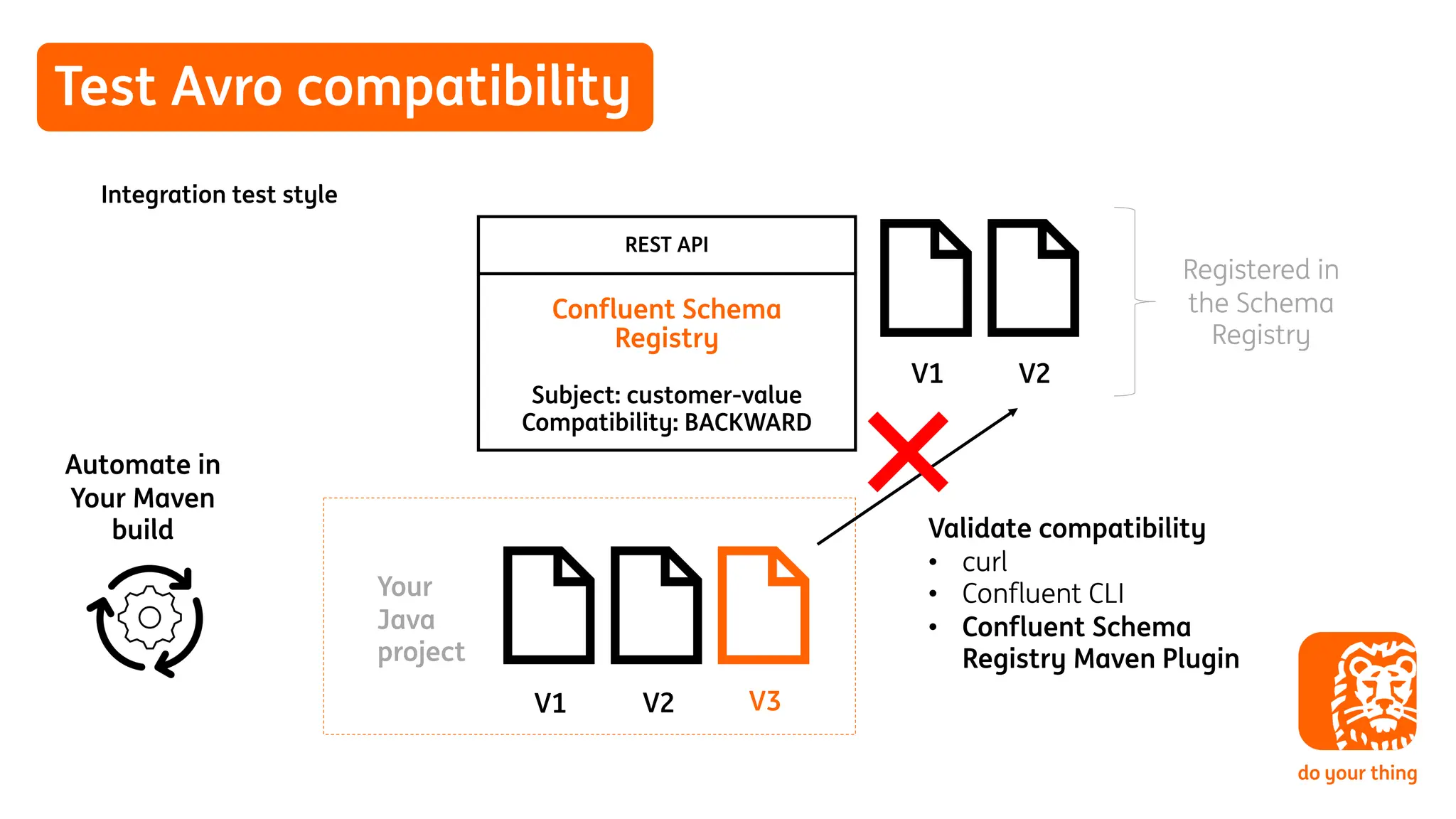

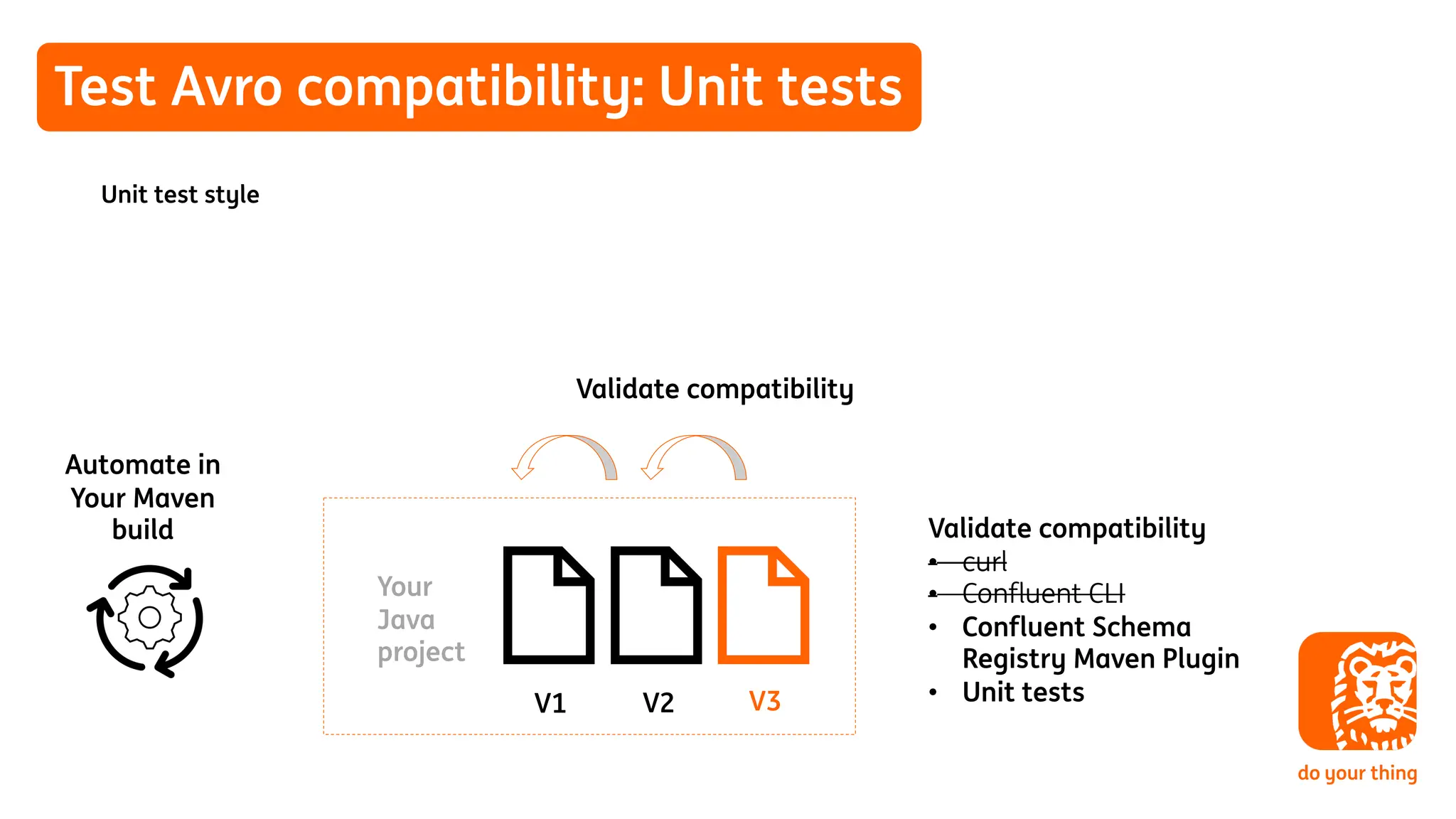

The document discusses the importance of Avro schema compatibility in Kafka, emphasizing the role of schemas in managing evolving data structures within streaming applications. It covers topics such as schema registration, compatibility levels, and how to implement changes without causing disruptions to existing services. Additionally, it provides insights on generating Java classes from Avro schemas and automated testing strategies.

![Avro

• At ING we prefer Avro

• Apache Avro™ is a data serialization system that offers rich data structures and uses a compact binary format.

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "isJointAccountHolder", "type": "boolean" }

]

}

{

"name": "Jack",

"isJointAccountHolder": true

}](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-25-2048.jpg)
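
To make the "compact and binary" claim concrete, here is a hand-written sketch of how the Jack record above is laid out on the wire. It follows the binary encoding rules in the Avro specification:

- lengths are zig-zag varints;
- a boolean takes one byte;
- fields appear in schema order, with no field names.

It uses only the JDK, and the class and method names are hypothetical. This is not the Avro library's encoder; in practice you would use `GenericDatumWriter` or generated classes.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical sketch of Avro's binary encoding for the Customer record
// {name: string, isJointAccountHolder: boolean}; not the Avro library API.
final class AvroBinarySketch {

    // int and long values are written as zig-zag encoded variable-length integers.
    static void writeLong(ByteArrayOutputStream out, long n) {
        long v = (n << 1) ^ (n >> 63);               // zig-zag: -1 -> 1, 1 -> 2, 2 -> 4, ...
        while ((v & ~0x7FL) != 0) {
            out.write((int) ((v & 0x7F) | 0x80));    // 7 data bits plus a continuation bit
            v >>>= 7;
        }
        out.write((int) v);                          // last byte, continuation bit clear
    }

    // A string is its UTF-8 byte length (as a long) followed by those bytes.
    static void writeString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.UTF_8);
        writeLong(out, bytes.length);
        out.write(bytes, 0, bytes.length);
    }

    // A record is the concatenation of its fields in schema order: no names, no separators.
    static byte[] encodeCustomer(String name, boolean isJointAccountHolder) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, name);
        out.write(isJointAccountHolder ? 1 : 0);     // a boolean is a single byte
        return out.toByteArray();
    }
}
```

For `{"name": "Jack", "isJointAccountHolder": true}` this yields 6 bytes: `0x08` (length 4), `J a c k`, then `0x01`. The payload carries no field names, so a consumer needs the writer's schema to decode it. Supplying that schema is one of the roles Schema Registry plays in Kafka.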

![Avro field types

• Primitive types (null, boolean, int, long, float, double, bytes, and string)

• Complex types (record, enum, array, map, union, and fixed)

• Logical types (decimal, uuid, date, …)

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "isJointAccountHolder", "type": "boolean" },

{ "name": "country", "type": { "name": "Country", "type": "enum", "symbols" : ["US", "UK", "NL"] } },

{ "name": "dateJoined", "type": { "type": "long", "logicalType": "timestamp-millis" } }

]

}

{

"name": "Jack",

"isJointAccountHolder": true,

"country": "UK",

"dateJoined": 1708944593285

}](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-26-2048.jpg)
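
The enum and logical-type fields carry more meaning than their wire values. The minimal JDK-only sketch below assumes the Country symbols and the dateJoined value from this slide:

- an enum is written as the zero-based position of its symbol;
- a `timestamp-millis` long counts milliseconds since the Unix epoch.

The `logicalType` attribute belongs inside the field's type object, as in `{"type": "long", "logicalType": "timestamp-millis"}`. Placed next to `"type"` at field level, it is not applied to the type. The class and method names are hypothetical.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;
import java.util.List;

// Hypothetical helpers illustrating how the Country enum and the
// timestamp-millis dateJoined field map to Java values; not the Avro API.
final class CustomerFieldTypesSketch {

    // Symbols in schema order: ["US", "UK", "NL"].
    static final List<String> COUNTRY_SYMBOLS = List.of("US", "UK", "NL");

    // An Avro enum value is serialized as the zero-based index of its symbol.
    static int countryIndex(String symbol) {
        int index = COUNTRY_SYMBOLS.indexOf(symbol);
        if (index < 0) {
            throw new IllegalArgumentException("Not a Country symbol: " + symbol);
        }
        return index;
    }

    // timestamp-millis annotates a long counting milliseconds since 1970-01-01T00:00:00Z.
    static Instant dateJoined(long timestampMillis) {
        return Instant.ofEpochMilli(timestampMillis);
    }

    // Calendar date of the timestamp in UTC.
    static LocalDate dateJoinedUtc(long timestampMillis) {
        return dateJoined(timestampMillis).atZone(ZoneOffset.UTC).toLocalDate();
    }
}
```

For the sample record, "UK" is written as index 1, and 1708944593285 corresponds to 2024-02-26T10:49:53.285Z.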

![Maps

Note: the "values" type applies to the values in the map; the keys are always strings.

Example Java Map representation:

Map<String, Long> customerPropertiesMap = new HashMap<>();

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "isJointAccountHolder", "type": "boolean" },

{ "name": "country", "type": { "name": "Country", "type": "enum", "symbols": ["US", "UK", "NL"]}},

{ "name": "dateJoined", "type": { "type": "long", "logicalType": "timestamp-millis" }},

{ "name": "customerPropertiesMap", "type": { "type": "map", "values": "long" }}

]

}

{

"name": "Jack",

"isJointAccountHolder": true,

"country": "UK",

"dateJoined": 1708944593285,

"customerPropertiesMap": {"key1": 1708, "key2": 1709}

}](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-27-2048.jpg)
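
On the wire, an Avro map is written in blocks: a count, then that many key/value pairs, then a final zero count. Keys are always strings, which is why `Map<String, Long>` is the natural Java shape. The JDK-only sketch below encodes `customerPropertiesMap` as a single block under those rules. The class name is hypothetical, and the varint helper mirrors the Avro specification rather than any library API.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.Map;

// Hypothetical sketch of Avro's binary map encoding for {"type": "map", "values": "long"}.
final class AvroMapSketch {

    // Zig-zag variable-length encoding used for Avro int and long values.
    static void writeLong(ByteArrayOutputStream out, long n) {
        long v = (n << 1) ^ (n >> 63);
        while ((v & ~0x7FL) != 0) {
            out.write((int) ((v & 0x7F) | 0x80));
            v >>>= 7;
        }
        out.write((int) v);
    }

    // Map keys are always Avro strings: length prefix plus UTF-8 bytes.
    static void writeKey(ByteArrayOutputStream out, String key) {
        byte[] bytes = key.getBytes(StandardCharsets.UTF_8);
        writeLong(out, bytes.length);
        out.write(bytes, 0, bytes.length);
    }

    // Writes all entries as one block, then the zero-count block that ends the map.
    static byte[] encodeLongMap(Map<String, Long> map) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (!map.isEmpty()) {
            writeLong(out, map.size());
            for (Map.Entry<String, Long> entry : map.entrySet()) {
                writeKey(out, entry.getKey());
                writeLong(out, entry.getValue());   // values follow the "values" schema: long
            }
        }
        writeLong(out, 0);
        return out.toByteArray();
    }
}
```

Entries are written in the map's iteration order. A `LinkedHashMap` holding `key1 -> 1708, key2 -> 1709` therefore always produces the same 16 bytes, whereas a `HashMap` gives no ordering guarantee.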

![Fixed

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "isJointAccountHolder", "type": "boolean" },

{ "name": "country", "type": { "name": "Country", "type": "enum", "symbols": ["US", "UK", "NL"]}},

{ "name": "dateJoined", "type": { "type": "long", "logicalType": "timestamp-millis" }},

{ "name": "customerPropertiesMap", "type": { "type": "map", "values": "long" }, "doc": "Customer properties"},

{ "name": "annualIncome", "type": ["null", {"name": "AnnualIncome", "type": "fixed", "size": 32}], "doc": "Annual income of the Customer.", "default": null}

]

}

{

"name": "Jack",

"isJointAccountHolder": true,

"country": "UK",

"dateJoined": 1708944593285,

"customerPropertiesMap": {"key1": 1708, "key2": 1709},

"annualIncome": [64, -9, 92, …]

}](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-28-2048.jpg)
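
`annualIncome` combines two encoding rules:

- a union is written as the index of the chosen branch;
- a `fixed` value is exactly `size` raw bytes, with no length prefix.

The JDK-only sketch below assumes the `["null", AnnualIncome]` union with `"size": 32` from this slide. The class name is hypothetical.

```java
import java.io.ByteArrayOutputStream;

// Hypothetical sketch of the binary encoding of ["null", {"type": "fixed", "size": 32}].
final class AnnualIncomeSketch {

    static final int SIZE = 32;   // "size": 32 from the AnnualIncome fixed type

    static byte[] encode(byte[] annualIncome) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (annualIncome == null) {
            out.write(0);          // union branch 0 ("null"): zig-zag(0) = 0, no payload follows
            return out.toByteArray();
        }
        if (annualIncome.length != SIZE) {
            throw new IllegalArgumentException(
                    "AnnualIncome must be exactly " + SIZE + " bytes, got " + annualIncome.length);
        }
        out.write(2);              // union branch 1 (AnnualIncome): zig-zag(1) = 2
        out.write(annualIncome, 0, SIZE);   // fixed: raw bytes, no length prefix
        return out.toByteArray();
    }
}
```

Under the Avro schema-resolution rules, two fixed types only match when both name and size agree. Changing `size` later is therefore not a compatible change.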

![Unions

• Unions are represented using JSON arrays

• For example, ["null", "string"] declares a schema which may be either a null or a string.

• Question: Who thinks this is a valid definition?

{

….

"fields": [

{

"name": "firstName",

"type": ["null", "string"],

"doc": "The first name of the Customer."

}

,…]

{

….

"fields": [

{

"name": "firstName",

"type": ["null", "string", "int"],

"doc": "The first name of the Customer."

}

,…]

org.apache.kafka.common.errors.SerializationException: Error serializing Avro message…

…

Caused by: org.apache.avro.UnresolvedUnionException: Not in union

["null","string","int"]: true](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-29-2048.jpg)
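
Both definitions are valid schemas. The exception is raised at serialization time because the value being written (a boolean) matches none of the union branches.

The JDK-only sketch below models that branch selection. It uses a hypothetical Java-to-Avro type mapping that covers only a few primitives. Avro's own resolution logic (for example `GenericData.resolveUnion`) handles many more cases.

```java
import java.util.List;

// Hypothetical model of picking a union branch for a Java value.
final class UnionResolutionSketch {

    // Simplified mapping from a Java value to the Avro primitive it would be written as.
    static String avroTypeOf(Object value) {
        if (value == null) return "null";
        if (value instanceof CharSequence) return "string";
        if (value instanceof Integer) return "int";
        if (value instanceof Long) return "long";
        if (value instanceof Boolean) return "boolean";
        if (value instanceof Double) return "double";
        throw new IllegalArgumentException("Unsupported value type: " + value.getClass());
    }

    // Returns the index of the branch the value is written with; the message on
    // failure is modeled on the UnresolvedUnionException shown on the slide.
    static int resolveUnion(List<String> branches, Object value) {
        int index = branches.indexOf(avroTypeOf(value));
        if (index < 0) {
            throw new IllegalArgumentException("Not in union " + branches + ": " + value);
        }
        return index;
    }
}
```

With the branches `["null", "string", "int"]`, the values `null`, `"Jack"` and `42` resolve to indexes 0, 1 and 2. The value `true` is rejected, just as in the stack trace.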

![Aliases

{

….

"fields": [

{

"name": "customerName",

"aliases": [

"name"

],

"type": ["null", "string"],

"doc": "The name of the Customer.",

"default": null

}

,…]

{

….

"fields": [

{

"name": "name",

"type": ["null", "string"],

"doc": "The name of the Customer.",

"default": null

}

,…]

• Named types and fields may have aliases

• Aliases function by re-writing the writer's schema using aliases from the reader's schema.

Consumer

Producer](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-30-2048.jpg)
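
A reader field is matched to a writer field by exact name first, then by its aliases. This is how the consumer's `customerName` picks up the producer's `name`. The JDK-only sketch below models only that lookup. The record and method names are hypothetical, and types and defaults are ignored.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Hypothetical model of alias-based field matching during Avro schema resolution.
final class AliasResolutionSketch {

    record ReaderField(String name, List<String> aliases) {}

    // Returns the writer field that supplies this reader field, if any:
    // exact name first, then the reader field's aliases in declaration order.
    static Optional<String> matchingWriterField(ReaderField field, Set<String> writerFields) {
        if (writerFields.contains(field.name())) {
            return Optional.of(field.name());
        }
        return field.aliases().stream().filter(writerFields::contains).findFirst();
    }
}
```

Without the alias, the reader's `customerName` would find no counterpart in the producer's data. The reader would then have to fall back on a default value.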

![BACKWARD

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "1",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Producer 1: V1

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "1",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Consumer 1 read: V1

Consumer

Producer

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "2",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Consumer 2 read: V2 (Delete field)](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-32-2048.jpg)

![BACKWARD

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "2",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Producer 1: V2

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "2",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Consumer 1 read: V2

Consumer

Producer

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "2",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Consumer 2 read: V2 (Delete field)

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "3",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" },

{ "name": "annualIncome", "type": ["null", "int"], "default": null }

]

}

Consumer 3 read: V3 (Add optional field)](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-33-2048.jpg)
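
Under BACKWARD, the new schema is the reader and the previous version is the writer. Deleting a field is fine, because the reader simply skips it. A new field needs a default.

Below is a simplified, JDK-only model of that check. It assumes fields are reduced to a name plus whether a default is declared; types, aliases and nested records are ignored. The Schema Registry performs the real check using Avro's schema-resolution rules.

The versions in the test follow the slides' story. V2 deletes a field (hypothetically, `occupation`), and V3 adds the optional `annualIncome`.

```java
import java.util.List;

// Simplified, hypothetical model of the BACKWARD compatibility rule.
final class BackwardCheckSketch {

    // A field reduced to its name and whether it declares a default value.
    record Field(String name, boolean hasDefault) {}

    // A reader can decode a writer's data if every reader field either exists in the
    // writer schema or has a default; writer-only fields are skipped by the reader.
    static boolean canRead(List<Field> reader, List<Field> writer) {
        return reader.stream().allMatch(r ->
                r.hasDefault() || writer.stream().anyMatch(w -> w.name().equals(r.name())));
    }

    // BACKWARD: consumers on the new schema must read data written with the previous one.
    static boolean isBackwardCompatible(List<Field> newSchema, List<Field> previous) {
        return canRead(newSchema, previous);
    }
}
```

Adding `annualIncome` without a default would fail this check. Data written with V2 has no value for it, and the reader would have nothing to fall back on.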

![BACKWARD TRANSITIVE

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "1",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Producer: V1

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "2",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" }

]

}

Consumer read: V2 (Delete field)

Consumer

Producer

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "3",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" },

{ "name": "annualIncome", "type": ["null", "int"], "default": null }

]

}

Consumer read: V3 (Add optional field)

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "…n",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" },

{ "name": "annualIncome", "type": ["null", "int"], "default": null }

]

}

Consumer read: V…n (Delete field)

Compatible](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-34-2048.jpg)
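
BACKWARD and BACKWARD_TRANSITIVE differ only in which versions the candidate is checked against. BACKWARD checks the latest version; BACKWARD_TRANSITIVE checks all of them.

The JDK-only sketch below uses a simplified field model (name plus whether a default exists; types are ignored). It is a hypothetical model, not the registry's implementation. The test uses three hypothetical versions, and the candidate passes BACKWARD but fails BACKWARD_TRANSITIVE.

```java
import java.util.List;

// Simplified, hypothetical model of BACKWARD vs BACKWARD_TRANSITIVE.
final class BackwardTransitiveSketch {

    record Field(String name, boolean hasDefault) {}

    // Every reader field must exist in the writer schema or declare a default.
    static boolean canRead(List<Field> reader, List<Field> writer) {
        return reader.stream().allMatch(r ->
                r.hasDefault() || writer.stream().anyMatch(w -> w.name().equals(r.name())));
    }

    // BACKWARD: the candidate (as reader) is checked against the latest version only.
    static boolean backward(List<Field> candidate, List<List<Field>> history) {
        return canRead(candidate, history.get(history.size() - 1));
    }

    // BACKWARD_TRANSITIVE: the candidate must read data written with every earlier version.
    static boolean backwardTransitive(List<Field> candidate, List<List<Field>> history) {
        return history.stream().allMatch(previous -> canRead(candidate, previous));
    }
}
```

In the test, V2 adds `occupation` with a default, and V3 drops that default. Data written with V2 always contains `occupation`, so BACKWARD passes. Data written with V1 does not contain it, so only the transitive check catches the problem.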

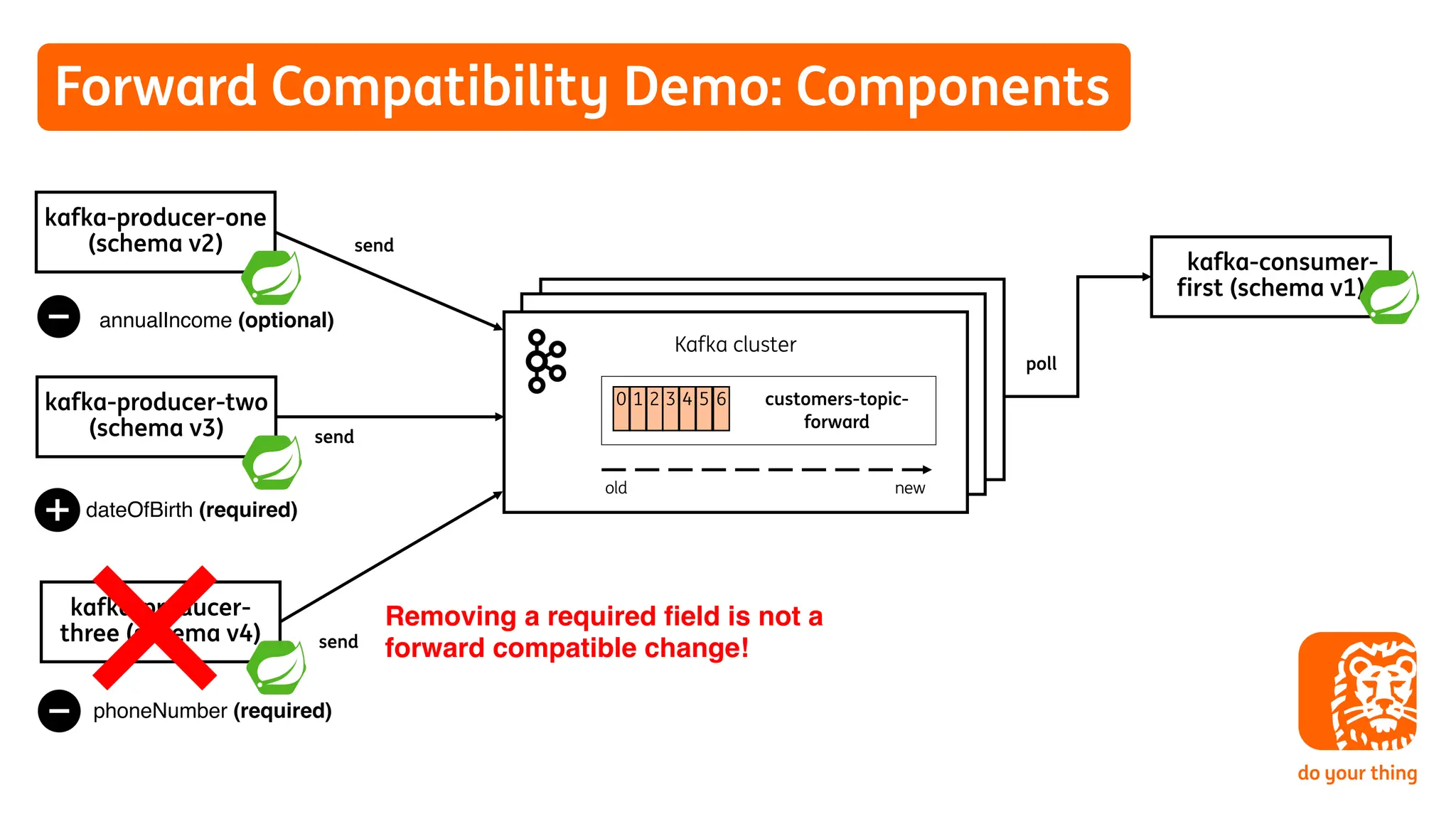

![FORWARD

Consumer

Producer

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "1",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "annualIncome", "type": ["null", "double"], "default": null }

]

}

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "1",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "annualIncome", "type": ["null", "double"], "default": null }

]

}

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "2",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "annualIncome", "type": ["null", "double"], "default": null }

]

}

Producer write: V2 (Delete Optional Field)

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "3",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "annualIncome", "type": ["null", "double"], "default": null },

{ "name": "dateOfBirth", "type": "string", "doc": "The date of birth for the Customer." }

]

}

Producer write: V3 (Add Required Field)

Consumer read: V1

Producer write: V1](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-35-2048.jpg)
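
FORWARD flips the roles: data written with the new schema must still be readable by consumers on the previous schema. The simplified model below is hypothetical and JDK-only; it reduces a field to a name plus whether it has a default, and ignores types. In this model:

- deleting a field is only safe when the old reader has a default for it;
- adding a required field is safe, because old readers skip it.

Both outcomes match the V2 and V3 changes on this slide.

```java
import java.util.List;

// Simplified, hypothetical model of the FORWARD compatibility rule.
final class ForwardCheckSketch {

    record Field(String name, boolean hasDefault) {}

    // Every reader field must exist in the writer schema or declare a default.
    static boolean canRead(List<Field> reader, List<Field> writer) {
        return reader.stream().allMatch(r ->
                r.hasDefault() || writer.stream().anyMatch(w -> w.name().equals(r.name())));
    }

    // FORWARD: consumers still on the previous schema must read data written with the new one.
    static boolean isForwardCompatible(List<Field> newSchema, List<Field> previous) {
        return canRead(previous, newSchema);
    }
}
```

Deleting a field that the old reader declares without a default fails the check. That is why only optional fields can be removed under FORWARD.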

![FORWARD TRANSITIVE

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "3",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" },

{ "name": "annualIncome", "type": ["null", "int"], "default": null }

]

}

Producer: V2 (Delete Optional Field)

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "3",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" },

{ "name": "annualIncome", "type": ["null", "int"], "default": null },

{ "name": "dateOfBirth", "type": "string" }

]

}

Producer: V3 (Add Field)

Consumer

Producer

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "3",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" },

{ "name": "annualIncome", "type": ["null", "int"], "default": null }

]

}

Consumer read: V1

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "…n",

"fields": [

{ "name": "name", "type": "string" },

{ "name": "occupation", "type": "string" },

{ "name": "annualIncome", "type": ["null", "int"], "default": null },

{ "name": "dateOfBirth", "type": "string" },

{ "name": "phoneNumber", "type": "string" }

]

}

Producer: V…n (Add Field)

Compatible](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-36-2048.jpg)
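
FORWARD_TRANSITIVE extends the FORWARD check to consumers on every registered version, not only the latest. The JDK-only sketch below uses a simplified, hypothetical field model (name plus whether a default exists; types ignored). The test uses three hypothetical versions, and the candidate passes FORWARD but fails FORWARD_TRANSITIVE.

```java
import java.util.List;

// Simplified, hypothetical model of FORWARD vs FORWARD_TRANSITIVE.
final class ForwardTransitiveSketch {

    record Field(String name, boolean hasDefault) {}

    // Every reader field must exist in the writer schema or declare a default.
    static boolean canRead(List<Field> reader, List<Field> writer) {
        return reader.stream().allMatch(r ->
                r.hasDefault() || writer.stream().anyMatch(w -> w.name().equals(r.name())));
    }

    // FORWARD: consumers on the latest registered version must read the candidate's data.
    static boolean forward(List<Field> candidate, List<List<Field>> history) {
        return canRead(history.get(history.size() - 1), candidate);
    }

    // FORWARD_TRANSITIVE: consumers on every registered version must read it.
    static boolean forwardTransitive(List<Field> candidate, List<List<Field>> history) {
        return history.stream().allMatch(previous -> canRead(previous, candidate));
    }
}
```

In the test, V1 declares `occupation` without a default, V2 gives it a default, and V3 removes it. V2 consumers can fill in the default. V1 consumers cannot, so only the transitive check rejects V3.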

![FULL

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "1",

"fields": [

{ "name": "name", "type": ["null", "string"], "default": null },

{ "name": "occupation", "type": ["null", "string"], "default": null },

{ "name": "annualIncome", "type": ["null", "int"], "default": null },

{ "name": "dateOfBirth", "type": ["null", "string"], "default": null }

]

}

Producer: V1

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"version": "2",

"fields": [

{ "name": "name", "type": ["null", "string"], "default": null },

{ "name": "occupation", "type": ["null", "string"], "default": null },

{ "name": "annualIncome", "type": ["null", "int"], "default": null },

{ "name": "dateOfBirth", "type": ["null", "string"], "default": null },

{ "name": "phoneNumber", "type": ["null", "string"], "default": null }

]

}

Consumer read: V2

Consumer

Producer

NOTE:

• The default values apply only on the consumer side.

• On the producer side you need to set a value for the field](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-37-2048.jpg)
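
FULL requires BACKWARD and FORWARD at the same time, which is why every field on this slide is optional with a default. A simplified, hypothetical JDK-only model follows; it reduces a field to a name plus whether it has a default, and ignores types.

```java
import java.util.List;

// Simplified, hypothetical model of the FULL compatibility rule.
final class FullCheckSketch {

    record Field(String name, boolean hasDefault) {}

    // Every reader field must exist in the writer schema or declare a default.
    static boolean canRead(List<Field> reader, List<Field> writer) {
        return reader.stream().allMatch(r ->
                r.hasDefault() || writer.stream().anyMatch(w -> w.name().equals(r.name())));
    }

    // FULL: new readers read old data (BACKWARD) and old readers read new data (FORWARD).
    static boolean isFullyCompatible(List<Field> newSchema, List<Field> previous) {
        return canRead(newSchema, previous) && canRead(previous, newSchema);
    }
}
```

Adding `phoneNumber` with a default passes in both directions. Without a default, the BACKWARD half fails: a V2 reader has no value for `phoneNumber` in data written with V1.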

![package com.example.avro.customer;

/** Avro schema for our customer. */

@org.apache.avro.specific.AvroGenerated

public class Customer extends

org.apache.avro.specific.SpecificRecordBase implements

org.apache.avro.specific.SpecificRecord {

private static final long serialVersionUID = 1600536469030327220L;

public static final org.apache.avro.Schema SCHEMA$ = new org.apache.avro.Schema.Parser().parse("{\"type\":\"record\",\"name\":\"CustomerBackwardDemo\",\"namespace\":\"com.example.avro.customer\",\"doc\":\"Avro schema for our customer.\",\"fields\":[{\"name\":\"name\",\"type\":{\"type\":\"string\",\"avro.java.string\":\"String\"},\"doc\":\"The name of the Customer.\"},{\"name\":\"occupation\",\"type\":{\"type\":\"string\",\"avro.java.string\":\"String\"},\"doc\":\"The occupation of the Customer.\"}],\"version\":1}");

…

}

{

"namespace": "com.example.avro.customer",

"type": "record",

"name": "Customer",

"version": 1,

"doc": "Avro schema for our customer.",

"fields": [

{

"name": "name",

"type": "string",

"doc": "The name of the Customer."

},

{

"name": "occupation",

"type": "string",

"doc": "The occupation of the Customer."

}

]

}

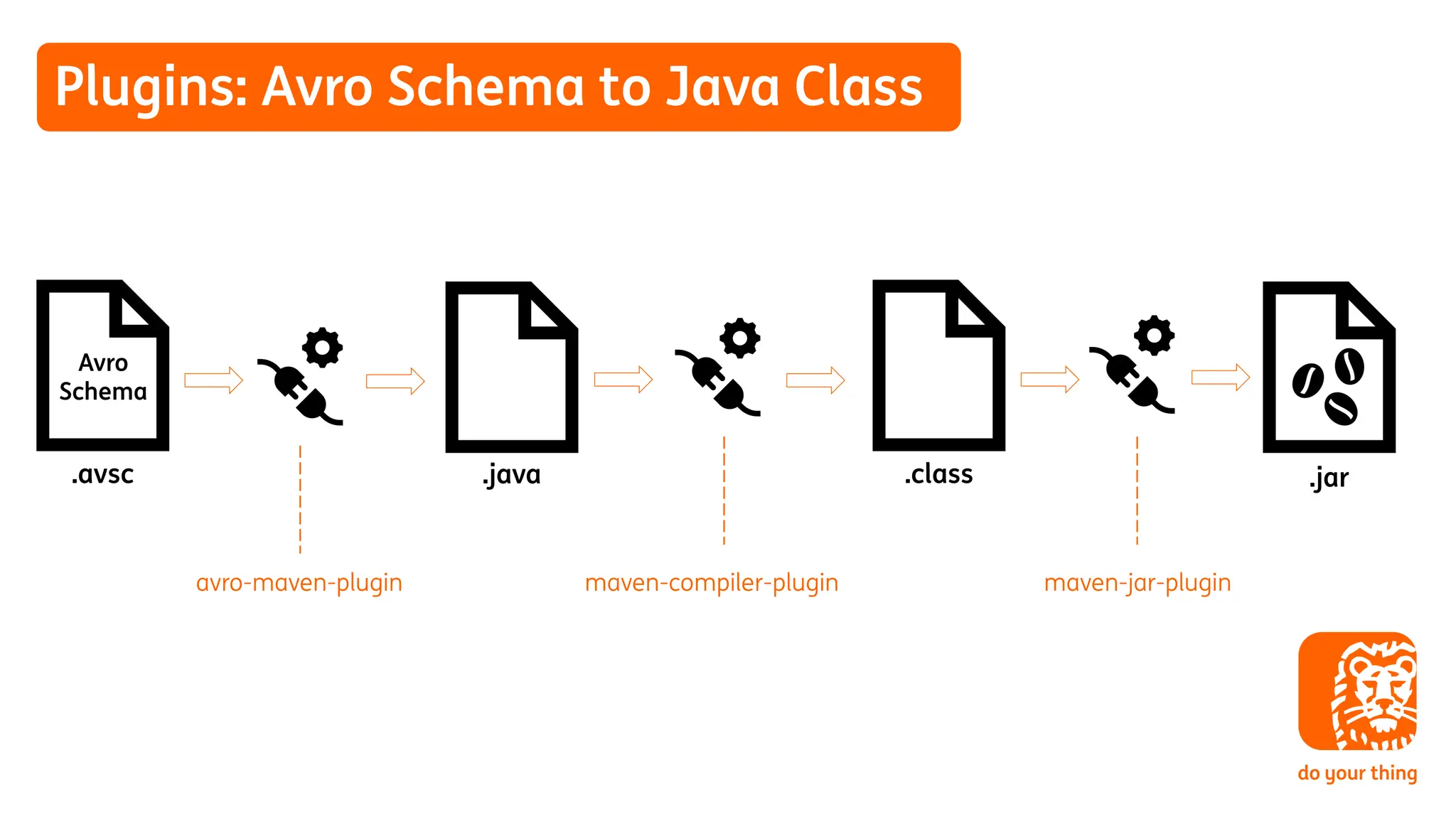

Plugins: Avro Schema to Java Class

Customer.avsc Customer.java](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-45-2048.jpg)

![package com.example.avro.customer;

/** Avro schema for our customer. */

@org.apache.avro.specific.AvroGenerated

public class Customer extends org.apache.avro.specific.SpecificRecordBase implements

org.apache.avro.specific.SpecificRecord {

private static final long serialVersionUID = 1600536469030327220L;

public static final org.apache.avro.Schema SCHEMA$ = new org.apache.avro.Schema.Parser().parse("{\"type\":\"record\",\"name\":\"CustomerBackwardDemo\",\"namespace\":\"com.example.avro.customer\",\"doc\":\"Avro schema for our customer.\",\"fields\":[{\"name\":\"name\",\"type\":{\"type\":\"string\",\"avro.java.string\":\"String\"},\"doc\":\"The name of the Customer.\"},{\"name\":\"occupation\",\"type\":{\"type\":\"string\",\"avro.java.string\":\"String\"},\"doc\":\"The occupation of the Customer.\"}],\"version\":1}");

…

}

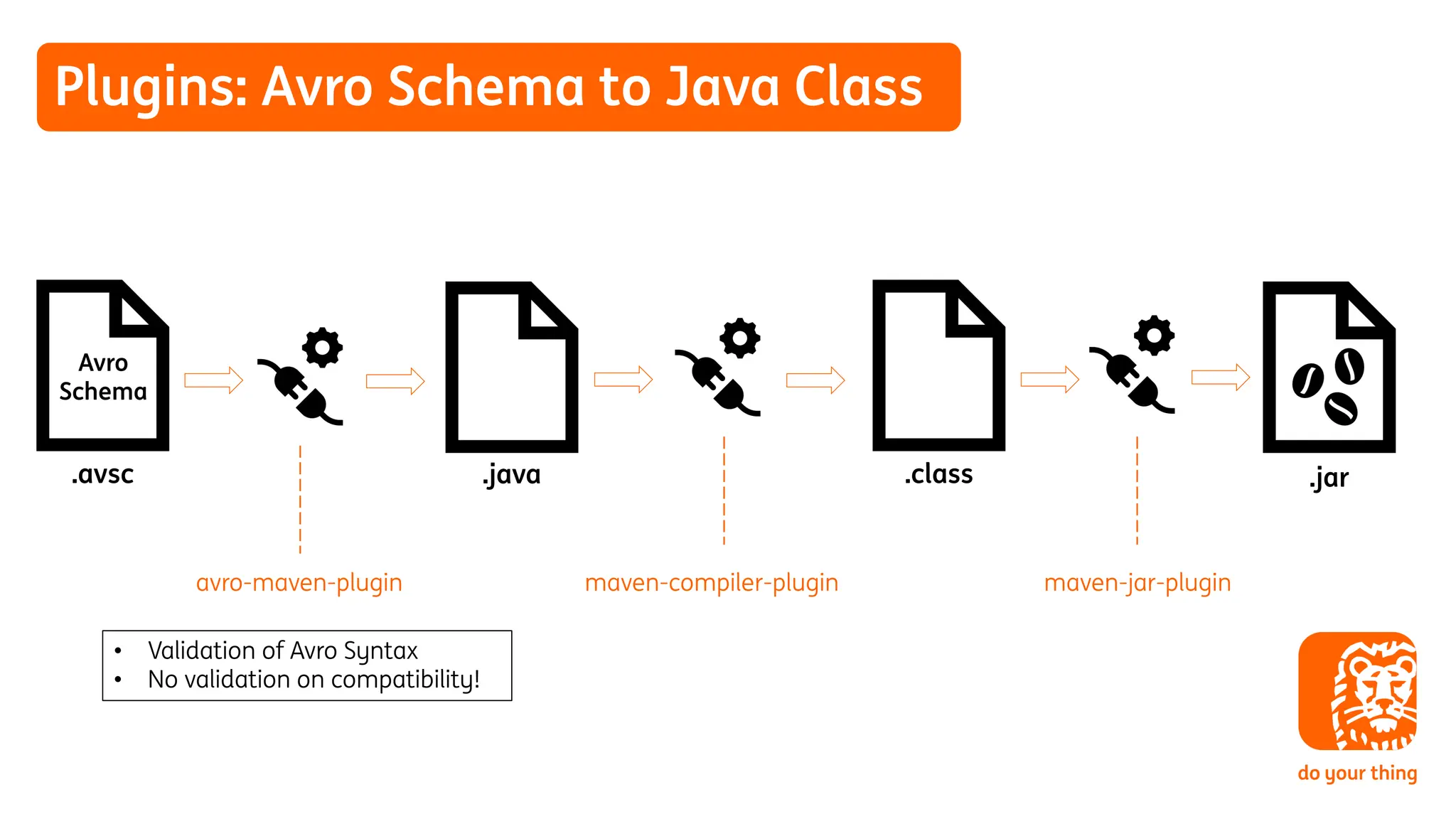

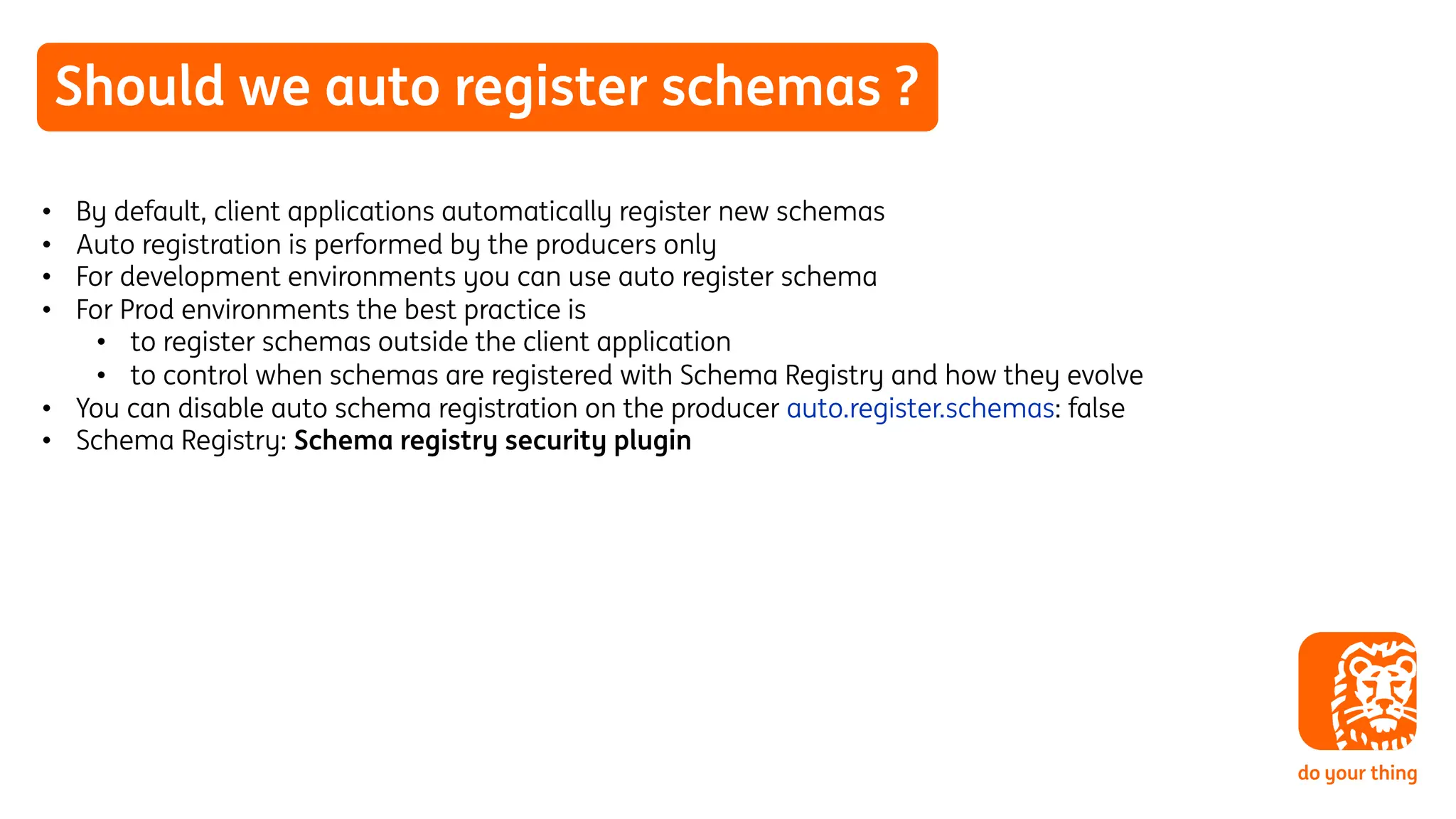

Auto register schema lessons learned

• Maven Avro plugin: additional information (such as "avro.java.string") is appended to the schema embedded in the generated Java code

• Producer (KafkaAvroSerializer): auto.register.schemas: false

• When serializing, the Avro schema is derived from the Customer Java object

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"fields": [

{

"name": "name",

"type": "string",

"doc": "The name of the Customer."

}

]

}

Mismatch in schema comparison

Avro Schema (avsc) registered in Schema Registry

Avro Schema (Java) in producer](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-53-2048.jpg)

![Auto register schema lessons learned

• If you are using the Avro Maven Plugin, it is recommended to set this property (KafkaAvroSerializer):

avro.remove.java.properties: true

Note:

There is an open issue for this in the Avro Maven Plugin: AVRO-2838

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"fields": [

{

"name": "name",

"type": "string",

"doc": "The name of the Customer."

}

]

}

{

"type": "record",

"namespace": "com.example",

"name": "Customer",

"fields": [

{

"name": "name",

"type": "string",

"doc": "The name of the Customer."

}

]

}

No mismatch in schema comparison

Avro Schema (avsc) Avro Schema (as Java String)](https://image.slidesharecdn.com/bs05-20240319-ksl24-ing-timvanbaarsenandkostachuturkov-240402152349-ea2a9581/75/Evolve-Your-Schemas-in-a-Better-Way-A-Deep-Dive-into-Avro-Schema-Compatibility-and-Schema-Registry-54-2048.jpg)
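
Putting the two lessons together, a producer configuration might look like the sketch below. It only builds a `java.util.Properties` object, so it compiles without Kafka on the classpath.

- **Taken from the slides:** `auto.register.schemas` and `avro.remove.java.properties`.
- **Standard Kafka / Confluent settings:** `bootstrap.servers`, `schema.registry.url` and the serializer class names.
- **Assumptions:** the helper's name and the placeholder values.

The resulting properties would be passed to a `KafkaProducer` constructor.

```java
import java.util.Properties;

// Hypothetical helper; the keys are Kafka / Confluent serializer settings.
final class AvroProducerConfigSketch {

    static Properties producerProperties(String bootstrapServers, String schemaRegistryUrl) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        props.setProperty("schema.registry.url", schemaRegistryUrl);
        // Assumes schemas are registered ahead of time, not by the producer at runtime.
        props.setProperty("auto.register.schemas", "false");
        // Drop Java-specific properties (such as avro.java.string) added by the
        // Maven plugin, so the derived schema matches the registered .avsc.
        props.setProperty("avro.remove.java.properties", "true");
        return props;
    }
}
```

Example call: `producerProperties("localhost:9092", "http://localhost:8081")`. Both addresses are placeholders for your own cluster and registry.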