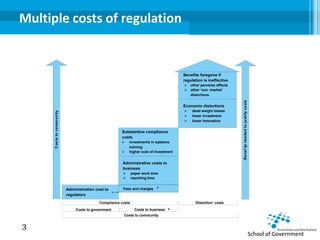

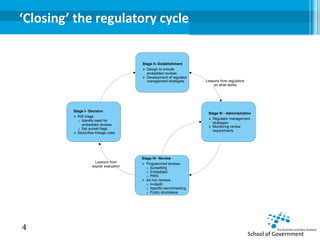

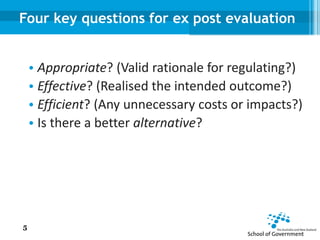

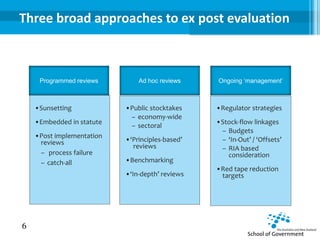

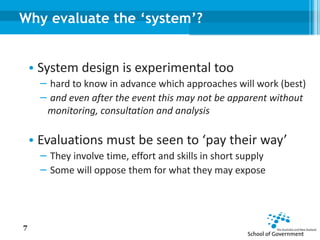

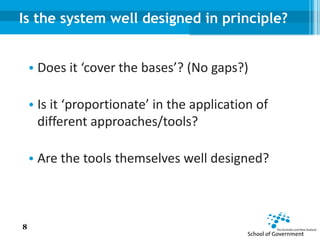

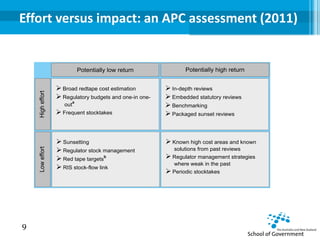

The document presents an overview of ex post evaluation in regulatory performance, emphasizing the need to assess regulations that may be ineffective or may have outlived their usefulness. It outlines a structured approach to evaluation through both programmed and ad hoc reviews, and highlights key questions for judging whether regulations remain appropriate and efficient. It also draws practical lessons from past evaluations, stressing the importance of institutional roles and of the design of the regulatory system.