This document is a student's dissertation submitted as part of their master's degree program. It examines the use of fingerprint verification on contactless smart cards for physical access control systems. Specifically, it evaluates the security advantages and limitations of a "match on card" system by considering potential attacks an insider attacker could perform. It discusses low-cost attacks such as spoofing the fingerprint sensor, replay attacks across the contactless interface, and template extraction attacks. It also covers countermeasures like template protection through cryptographic techniques and feature transformation with salting and non-invertible functions. The goal is to assess the overall security of match on card verification given resource constraints on smart cards and a generic system architecture.

![List of Figures

2.1 A typical access control system [12] . . . . . . . . . . . . . . . . . 10

2.2 Diagram of an ID-1 smart card [62] . . . . . . . . . . . . . . . . . 12

2.3 Processing steps for enrollment, verification and identification [40] 15

2.4 FMR vs FNMR (extracted from [76]) . . . . . . . . . . . . . . . . 16

2.5 Three Strategies for Fingerprint Verification (extracted from [44]) 18

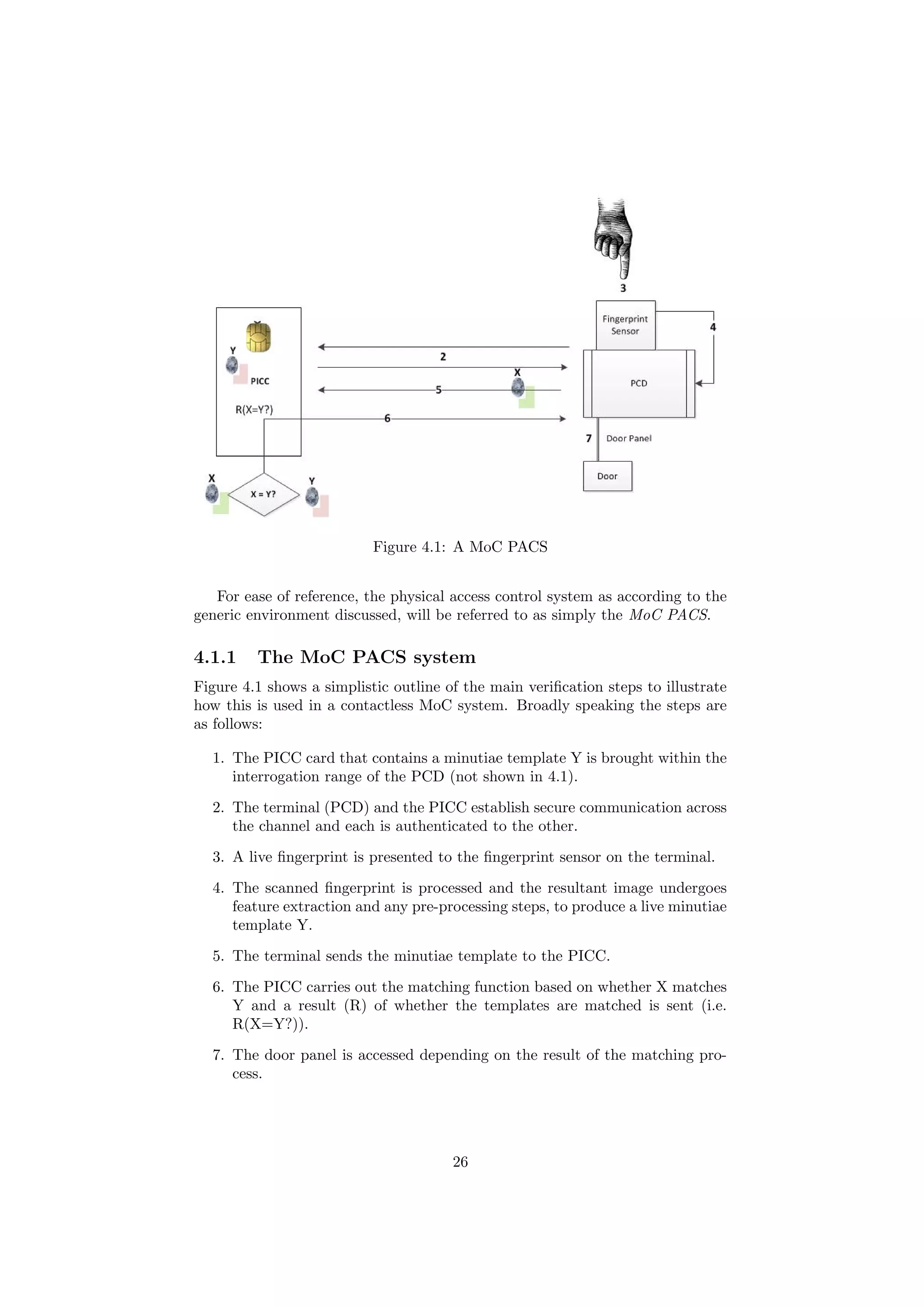

4.1 A MoC PACS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

4.2 Hill Climbing Attack System [150] . . . . . . . . . . . . . . . . . 36

4.3 Template Protection . . . . . . . . . . . . . . . . . . . . . . . . . 39

4.4 Authentication Process Using Feature Transformation [80] . . . . 40

6.1 Challenge-Response Protocol [28] . . . . . . . . . . . . . . . . . . 54

6.2 Application Specific Transformation Function [31] . . . . . . . . . 55

Chapter 1

Introduction

This chapter describes the motivation behind this topic and how the subject area can add further value to the wider field of access control. It includes a brief introduction to the topic as a lead-in to the main body of the project.

1.1 Motivation

This project discusses the use of fingerprint verification on contactless (proximity) cards with microprocessors for use within Physical Access Control Systems (PACS). It evaluates the potential security advantages and limitations of such a system by considering a range of potential attacks, given a specific attacker profile and a generic match on-card architecture typical of the constrained embedded systems currently on the market.

As physical access control concerns the management of direct access to an area or building, it is an essential element in the overall protection of critical assets. Such systems are found wherever access must be regulated, in locations ranging from private organisations, public attractions and transportation facilities to high security government buildings. They are not generally considered catch-all solutions, and are usually deployed alongside other first line perimeter security controls such as wire fences, security guards, time controlled door locks and surveillance equipment. In practice, electronic PACS regulate access on the basis of predefined access profiles or access control lists (ACLs). Once a user has been positively identified, these ACLs can support a given security policy by authorising access to one or more locations.

Contactless tokens are commonly used within PACS because they offer greater speed, robustness and convenience: the card does not need to be closely positioned or oriented to communicate with its reader. This technology also seems to resolve some of the problems of contamination or degradation of contact parts that affect contact-based smart cards, which may also be damaged by electrostatic discharge [22]. With the exception of close-coupling RFID systems [48], these cards are used at greater distances from the reader, the magnitude of which varies according to the type of system used. This permits users to establish access through an entry point quickly (see [69]), an advantage reinforced by the higher transmission speeds supported by contactless card standards, which reduce the time required for communication between an external terminal and the smart card.

However, one issue with the majority of PACS is that they tend to rely on single-factor, token-based authentication. This may be acceptable in low-security environments, but it is a clear weakness where access must be carefully restricted on the basis of identity. It has long been recognised that a token can be “lost, stolen, forgotten or misplaced”[27], which can become a significant security risk in such an environment. The alternative, “two-factor” authentication, combines an identity token (something you have) representing a claimed identity with a second factor. This second factor has traditionally been a memorable credential such as a password, passphrase or PIN (something you know). However, passwords are frequently forgotten; to counter this, users often make them simple and predictable, and their overall management can be expensive [75]. Passwords that are stored electronically are vulnerable to brute-force and dictionary attacks which, depending on password length, can succeed with relative ease and without particularly advanced hardware or computationally intensive processing [88]. Moreover, these factors only partly answer the question “is someone who they claim to be?”, which is the essence of identification: possession or knowledge is an unreliable and circumstantial indicator of identity.
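The dependence on length can be made concrete with a little arithmetic. The Python sketch below computes the size of the search space for exhaustive guessing and the worst-case time at an assumed guessing rate. The rate of 10^9 guesses per second and the example password policies are hypothetical values chosen only for illustration. A dictionary attack against a predictable password typically needs far fewer guesses than this bound.

```python
# Hypothetical offline guessing rate: real rates depend heavily on the hardware
# and on how the stored password is hashed.
ASSUMED_GUESSES_PER_SECOND = 1e9


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of distinct secrets of exactly `length` symbols."""
    return alphabet_size ** length


def worst_case_seconds(alphabet_size: int, length: int,
                       rate: float = ASSUMED_GUESSES_PER_SECOND) -> float:
    """Time to try every candidate (exhaustive brute force) at `rate` guesses/s."""
    return keyspace(alphabet_size, length) / rate


if __name__ == "__main__":
    # Illustrative policies: (alphabet size, length)
    examples = {
        "4-digit PIN": (10, 4),
        "8 lowercase letters": (26, 8),
        "12 letters and digits": (62, 12),
    }
    for label, (size, length) in examples.items():
        print(f"{label}: {keyspace(size, length):.2e} candidates, "
              f"about {worst_case_seconds(size, length):.2e} s")
```

Each extra symbol multiplies the search space by the alphabet size, which is why length matters so much. By the same arithmetic, a 4-digit PIN is only reasonable as a second factor when guesses are rate-limited.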

Combining tokens, or “object-based authenticators”, with an “ID-based authenticator”[119] may add a further level of security by identifying someone on the basis of their unique biological traits[67]. The perceived advantage of this approach is that biometric credentials are difficult to lose, forge, forget, share or acquire; unless the biometric authentication subsystems are manipulated, only an enrolled person can be verified[78]. Advances in general communication technologies, and the frequency with which people travel between physical locations, have prompted the use of biometrics as a way of establishing identity automatically and conveniently. Biometric authentication is one potential way of avoiding the need to hold large databases of stored PINs or password hashes, since an enrolled template can be stored locally instead. Furthermore, biometric authentication has been considered a reliable, trusted means of binding the owner of an identity record to that record [84]. The degree to which this is achieved within a PACS depends on the additional credentials or protection mechanisms stored on a card[43], and on whether the biometric sample originated from the person who was present at the time of verification.

Owing to the maturity [27] of its usage and the relative uniqueness and permanence of fingerprints, fingerprint authentication is considered one of the more reliable and better understood forms of biometric authentication. Fingerprints satisfy the “7 Pillars of biometric wisdom” in particular because they are mature, well understood, reasonably resistant to change and unique[27]. Fingerprint sensors are also relatively cheap compared with other biometric sensors, and fingerprints have been used in high security environments; in the United States Department of Defense (DoD) they are the dominant biometric authenticator[118]. Although some have questioned the efficacy and scientific foundation of fingerprints as a method of uniquely identifying people[121][34], it is indisputable that fingerprinting has been widely studied as a biometric modality, and it has been used as a contemporary means of identification within automatic identification applications [122]. High levels of accuracy have been demonstrated for this mode of biometric authentication [96], and it was the first type of biometric introduced into true match on-card technology [21]. This provides a strong justification for choosing this biometric, above others, as the focus of this discussion.

Of the various configurations used for verification with a card, match on-card (MoC) appears to offer the best balance between protecting the biometric template on the card and working within the resource limitations of current cards. In this configuration, the tamper-resistant environment of the card is used both to store the template and to perform the matching function. This reduces the attack surface: the reference template never leaves the card, only the live template is sent to it, and only the result of the match is returned. Storing the template on the card also removes the overhead of maintaining large databases of templates, which may themselves be insecure.
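The separation of duties described above can be illustrated with a toy model. In the Python sketch below, a hypothetical `MatchOnCard` class holds the reference template and exposes only a `verify` method that returns a yes/no result. The template representation (a set of quantised minutiae), the overlap-based score and the 0.8 threshold are all simplifying assumptions, not a real matching algorithm. Python's leading underscore is only a naming convention, whereas a real card relies on its tamper-resistant hardware and operating system to keep the template inaccessible.

```python
from dataclasses import dataclass, field

# Toy template element: a quantised minutia (x, y, angle). Real MoC templates use
# standardised formats and tolerant matchers; this exact-overlap model is illustrative only.
Minutia = tuple[int, int, int]


@dataclass
class MatchOnCard:
    """Hypothetical card applet: the reference template is stored here and never returned."""
    _reference: frozenset[Minutia] = field(repr=False)  # kept out of repr() output
    threshold: float = 0.8

    def verify(self, live: frozenset[Minutia]) -> bool:
        """Compare a live template sent by the terminal; reply with a decision only."""
        if not self._reference:
            return False
        score = len(self._reference & live) / len(self._reference)
        return score >= self.threshold


# Terminal side: it sends the live template and learns only True/False.
card = MatchOnCard(frozenset({(10, 20, 0), (30, 40, 90), (50, 60, 180),
                              (70, 80, 270), (90, 100, 45)}))
print(card.verify(frozenset({(10, 20, 0), (30, 40, 90), (50, 60, 180), (70, 80, 270)})))
print(card.verify(frozenset({(10, 20, 0)})))
```

The terminal never holds the reference, so compromising it yields live templates and match results but not the enrolled template itself.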

However, the resources available to a card impose several constraints. Among them are the power and clock frequency available to the card, as well as limits on RAM, ROM, EEPROM and transmission speed that distinguish it from conventional PC architectures. The architecture and implementation of such a solution can therefore vary, and the potentially insecure contactless interface may be exploited. All of these aspects have a direct bearing on the time taken and the reliability of verification, and therefore on the end-to-end access control process when fingerprint verification is performed on a smart card. Furthermore, both the contactless communication interface and the biometric system components possess vulnerabilities that may be exploited.
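The effect of transmission speed on verification time can be estimated with simple arithmetic. ISO/IEC 14443 bit rates are derived from the 13.56MHz carrier (fc/128, roughly 106 kbit/s, with optional higher rates of fc/64, fc/32 and fc/16). The Python sketch below estimates the raw transfer time for a template. The 500-byte template size and the overhead factor are hypothetical, and real exchanges add framing, protocol and processing delays on top of this figure.

```python
CARRIER_HZ = 13.56e6  # ISO/IEC 14443 carrier frequency (fc)


def bit_rate(divisor: int = 128) -> float:
    """Bit rate in bit/s for fc/divisor (ISO/IEC 14443 uses divisors 128, 64, 32, 16)."""
    if divisor not in (128, 64, 32, 16):
        raise ValueError("unsupported divisor")
    return CARRIER_HZ / divisor


def transfer_ms(payload_bytes: int, divisor: int = 128, overhead: float = 1.0) -> float:
    """Raw time in ms to send payload_bytes; overhead > 1 roughly accounts for framing."""
    return payload_bytes * 8 * overhead / bit_rate(divisor) * 1000.0


if __name__ == "__main__":
    template_bytes = 500  # hypothetical template size, for illustration only
    for d in (128, 64, 32, 16):
        print(f"fc/{d}: {bit_rate(d) / 1000:.0f} kbit/s -> "
              f"{transfer_ms(template_bytes, d):.1f} ms")
```

Transfer time scales linearly with template size and inversely with bit rate, so both template compactness and the negotiated bit rate affect how quickly a user passes through the door.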

At the time of writing, several major developments that combine these aspects are under way.

1. Within the US, several developments are occurring:

• The U.S. Department of Defense (DoD) has taken an interest in this area since the issuing of Homeland Security Presidential Directive 12 (HSPD-12) [153]. Personal Identity Verification (PIV) cards, which are required to comply with the follow-on U.S. standard FIPS 201, are one particular example of where development in this area is taking place.

• The contactless version of the Common Access Card (CAC) [117], issued by the DoD to the DoD community, is another example of a development combining on-card verification with contactless technology. Early tests focused mainly on template on-card verification, but it has since been demonstrated that these cards can perform match on-card across an encrypted channel [136], and support for match on-card over a contactless interface exists within the next-generation cards.

2. One of the most pertinent examples of where this combination of technological factors is being researched is within the European Union, under the multi-million pound funded “Turbine” Project [51]. The objective of this project is to research and ultimately develop e-identity solutions that incorporate fingerprint-based biometric authentication.

With these developments in mind, the principal aim of this project is to highlight some of the restrictions involved in incorporating fingerprint verification onto a proximity card (PICC), and to show how the compromises made to the architecture in order to balance cost with practicality can introduce vulnerabilities into PACS. To this end, the specific areas of focus will be the attacks that exploit these vulnerabilities, particularly those which are low cost and unique to match on-card, and the various ways in which these may be mitigated.

1.2 Structure of the dissertation

This project closely follows the objectives set out below, beginning in Chapter 2 with the key concepts behind a contactless MoC implementation within a PACS. That chapter lays out the concepts and technologies behind the various components of generic contactless access control and biometric systems.

Chapter 3 discusses the resource limitations of embedded smart card systems and how match on-card offers a balanced approach that takes these into account, given the challenges of integrating fingerprint verification onto a card. It also details the compromises made in such a system.

Chapter 4 discusses a range of attacks that can be conducted against a generic solution by a specific attacker profile, as well as the countermeasures that can be implemented against them. This includes an analysis of how realistic these attacks are in relation to both the robustness of the system and the resources of the attacker. Various assumptions and exclusions will be applied to scope this environment.

The final chapter, Chapter 5, concludes with a summary evaluation of the relative levels of security offered by match on-card implementations, their practicality for access control environments, and some of the activities under way across industry to harmonise and standardise efforts to develop this area.

1.3 Statement of Objectives

1. To discuss the main resource constraints on microprocessor proximity cards and how these can lead to vulnerabilities.

2. To discuss a range of low-cost attacks that are applicable to the environment being considered.

3. To discuss the various countermeasures to the above attacks and potential ways to secure templates.

4. To evaluate the potential security advantages or disadvantages of MoC implementations.

Chapter 2

Key Concepts

This chapter presents background on the underlying concepts and technologies behind a physical access control system using fingerprint-based verification on a contactless smart card. The concepts covered in this chapter are:

1. Access Control Systems.

2. Smart cards - what they are, their properties, and the types of smart card.

3. Proximity Coupling - the technology most frequently used by contactless access tokens.

4. Biometric Authentication - including the subtypes identification and verification.

5. Errors in Biometric Authentication.

6. Fingerprint-based Authentication.

7. Verification Strategies.

2.1 Physical Access Control Systems

In Chapter 1, it was briefly explained that access control is the automated process of authorising and granting access to a physical location. To build on this definition, reference should be made to the international standard ISO/IEC 10181 part 3[72], which specifies a security framework for access control in open systems. It defines access control as “The process of determining which uses of resources within an Open System environment are permitted and, where appropriate, preventing unauthorized access”. As the scope of this project is physical access control, this definition is applied here to the narrower remit of physical access control environments.

In general, this process starts when a user presents an authenticator to a reading device mounted adjacent to, or more usually directly at, the access point. This reading device is responsible for capturing information from the authenticator, typically a chip card, and passing it on to a portal∗. During this process, the smart card may or may not trust the reading device, so further validation or authentication mechanisms may be required. The portal then communicates with the other access control subsystems to authorise access; the setup for this may vary. In many access control systems, portals are connected to host computers, which are themselves connected to back-end databases or access control servers (usually containing encrypted and/or hash-protected data) against which a live credential is compared. Alternatively, the reader itself may have enough logic to authenticate the presented credentials. This matching function is normally carried out at the application layer by matching software resident either on the host computer or within an embedded/smart card operating system, depending on whether more enhanced authentication mechanisms are used.

∗The term portal is an alternative to control panel, as per the definition within [32]

Once there has been a positive identification match, the embedded device or terminal communicates with the portal, a signal is sent to a door-release mechanism or “door strike”, and an audible tone may be produced to indicate that access has been granted (or, in some cases, denied). Figure 2.1 illustrates this process.

In contactless physical access control systems incorporating microprocessor cards, the door reader and card communicate via radio frequency (RF) technology, most commonly proximity coupling, as described in 2.5. In this case, the contactless card is presented within the reader's RF field, which both powers the card and carries its communication with the reader. Any additional factors, such as a PIN or biometric, may be used in combination and tend to be transmitted over a separate interface, commonly a serial interface.

Regardless of whether or not authentication is performed within the closed environment of an embedded system, access to each physical location can be defined and enforced by specific access control lists (ACLs), a type of access control scheme used to enforce a system specific policy (SSP). Such a policy is often derived from an overarching enterprise information security policy (EISP) or another high-level security policy, depending on the type of organisation[26].

Higher security environments often employ formal access control frameworks supported by granular ACLs, which should be kept under strict control so that access privileges are updated, restricted or revoked when necessary. Within the framework specified in [72], access control mechanisms use access control information (ACI), including ACLs, to reach access decisions: an access control decision function (ADF) makes each decision, and an access control enforcement function (AEF) enforces it. Although these components are logically separate, they may be part of the same component within an access control system. For example (as in this case), the system may be configured so that the AEF and ADF are on the control panel and the ACI/ACL information exists on the smart card or back-end server.
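The division of roles in [72] can be sketched in a few lines. In the Python example below, a hypothetical ACL keyed by door acts as the ACI, an `adf` function makes the access decision and an `aef` function enforces it by releasing or holding the door strike. The door names, card identifiers and the `verified` flag (standing in for a successful match on-card result) are illustrative assumptions; a real deployment would split these functions across the card, reader, control panel and back-end as described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    card_id: str  # identity asserted by the card
    door: str     # physical location being requested


# Hypothetical ACI: an ACL mapping each door to the card IDs authorised to open it.
ACL: dict[str, frozenset[str]] = {
    "server-room": frozenset({"card-001"}),
    "main-entrance": frozenset({"card-001", "card-002"}),
}


def adf(request: AccessRequest, acl: dict[str, frozenset[str]]) -> bool:
    """Access control Decision Function: decide using the ACI (here, the ACL)."""
    return request.card_id in acl.get(request.door, frozenset())


def aef(request: AccessRequest, acl: dict[str, frozenset[str]], verified: bool) -> str:
    """Access control Enforcement Function: act on the ADF's decision at the door."""
    if verified and adf(request, acl):
        return "release door strike"
    return "keep door locked"


print(aef(AccessRequest("card-002", "main-entrance"), ACL, verified=True))
print(aef(AccessRequest("card-002", "server-room"), ACL, verified=True))
```

Keeping the decision logic in `adf` separate from the door-facing `aef` mirrors the framework's point that the two functions can live in different components even when, as here, they run together.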

2.2 What is a Smart Card?

Smart cards are currently used across several industries and have been adopted for many purposes, in particular as tokens for authentication and access control. A generalised definition of a smart card is “a plastic card the size of an ordinary credit card with a chip that holds a microprocessor and a data-storage unit”[68], which is a useful but simplistic description.

Figure 2.1: A typical access control system [12]

Smart cards have been around for some time; they trace their origins to the “Diners Club” card, a payment card used in the travel and entertainment industry[124].

Smart cards can be broadly grouped into two major categories. The first is known as the “dumb” smart card because of its limited processing capabilities. This category includes the “memory card”, an early type of chip card, which contains only memory modules and practically no CPU power. A key component of the memory card architecture is the security logic, which mediates access to memory (ROM and EEPROM). As its name suggests, it provides additional security within the chip; its functions range from simple write protection to basic encryption in more advanced designs.[124]

The second group comprises the “true” smart cards, which can also perform processing functions using an embedded smart card processor (CPU)[49]. State-of-the-art microprocessor cards have far greater processing capacity and more memory than dumb cards, and these levels are advancing. The greater memory and processing capability can support many advanced features, such as multiple applications and high-level programming languages and APIs, which in turn support specialised applications including match on-card implementations; dumb smart cards cannot support these. Advanced microprocessor smart cards also include fairly advanced coprocessors, “utilized for accelerating the computation of time-critical procedures relieving the systems microprocessor from these application parts”[53]. Many examples of these exist[110], including cryptographic coprocessors, which have been developed to support both symmetric and public key cryptography [48]. In the latter case, the coprocessor carries out the performance-intensive modular exponentiations required by cryptographic algorithms such as RSA [65]. These cards run operating systems that are multi-threaded and capable of multitasking, ensuring that data stored on the cards need not leave the card itself.[22]
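The modular exponentiation that such coprocessors accelerate can be illustrated in software. The Python sketch below implements left-to-right binary (square-and-multiply) exponentiation and checks it against a textbook-sized RSA key. It is purely illustrative: real coprocessors work on operands of 2048 bits or more, often rely on dedicated techniques such as Montgomery multiplication, and must resist side-channel attacks, whereas this loop's behaviour depends on the exponent bits.

```python
def mod_exp(base: int, exponent: int, modulus: int) -> int:
    """Compute base**exponent % modulus by left-to-right square-and-multiply."""
    if exponent < 0 or modulus <= 0:
        raise ValueError("need exponent >= 0 and modulus > 0")
    if modulus == 1:
        return 0
    result = 1
    base %= modulus
    for bit in bin(exponent)[2:]:               # exponent bits, most significant first
        result = (result * result) % modulus    # square once per bit
        if bit == "1":
            result = (result * base) % modulus  # multiply only when the bit is set
    return result                               # NB: not constant-time; leaks bit pattern


# Toy RSA key (p = 61, q = 53): n = 3233, e = 17, d = 2753. Far too small for real use.
n, e, d = 3233, 17, 2753
message = 65
ciphertext = mod_exp(message, e, n)
assert mod_exp(ciphertext, d, n) == message     # decryption recovers the message
assert ciphertext == pow(message, e, n)         # agrees with Python's built-in pow
```

Each bit of the exponent costs one squaring and, if set, one extra multiplication, so a 2048-bit private-key operation needs thousands of large modular multiplications; offloading these to a coprocessor is what makes public key operations practical on a card.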

Match on-card implementations can only be supported by these advanced cards, because of the resources they require within what are generally constrained processing environments. Such limits, and their effects on on-card verification, are discussed in 3.1.

Generally speaking, a smart card should have the following qualities [103]:

1. It can participate in an automated electronic transaction.

2. It is used primarily to add security.

3. It is not easily forged or copied.

4. It can store data securely.

5. It can host/run a range of security algorithms and functions.

2.3 Contactless Cards

Within the category of true smart cards are “Contact” and “Contactless” cards, both of which require a smart card terminal or reader to transfer data to and from the host and to power the smart card chip. In general, contact cards have 8 electrical contacts (C1 to C8), each with a specific purpose as defined in the standard ISO/IEC 7816 part 2. These cards are so called because they must be inserted into an external smart card reader, whereupon the electrical contacts within the reader physically touch the aligned contacts on the smart card module. For the card to be read correctly, it must also be correctly oriented.

Contactless cards, by contrast, contain an additional communications interface, most commonly a Radio Frequency (RF) interface. This uses electronic devices attached to objects or hosts, which communicate data using either RF or magnetic field variations [56][48]. The main components that carry out the communication are an external reading device, containing a control unit and an RF module, and a transponder, which carries the data and contains a microchip. Both components have coupling elements that communicate across an RF channel, so that data can be transferred in both directions.

Significantly, RF identification in the context of RFID tags differs from the use of RF technology in PACS (smart) tokens [13]. The latter should typically be consistent with the definition “intelligent read-write devices capable of storing different kinds of data and operate at different ranges” given in [12]. Simple contactless tokens tend to be little more than state machines but, as stated, cards used for match on-card verification have operating systems and advanced processing capabilities.

A further, long-held assumption is that smart cards predominantly fall into the ID-1 card format family, which has dimensions of 85.6mm x 53.98mm x 0.76mm (see figure 2.2)[62], as defined by the international standard ISO 7810-1 [71]. Other, smaller card formats also exist, including the ID-000 format, which at the lower end of the scale can be 25mm x 15mm, and the ID-00 card, whose dimensions are intermediate between the two [87]. However, the most widely used access control and identity tokens to date still fall within the ID-1 format. The physical format and properties of the card are significant because they affect the overall availability of resources within a card (see 3.1).

Figure 2.2: Diagram of an ID-1 smart card [62]

2.4 Principal Contactless Card Standards

There are three main international standards for contactless systems: (1) ISO/IEC 14443 - Proximity Coupling, (2) ISO/IEC 15693 - Vicinity Coupling and (3) ISO/IEC 18092 - Near Field Communication, all of which operate in the high frequency band at 13.56MHz.† The signal interfaces, protocols and operating ranges of these systems are specified in each of the standards, which also have the following features:

• Read/write capability, including the capacity to store biometric templates and PINs

• Capability to add security features (although not explicitly designated), including cryptographic algorithms and digital signatures

• Support for multiple technologies and interfaces

• Authentication between the contactless reader and the contactless card

†Close-contact cards are not covered here, as they require precise orientation and are not in the HF range

2.5 ISO 14443 - Proximity Coupling

Of all these standards, ISO/IEC 14443[1] is the most widely used for contactless systems [142] and has been described as “the standard of choice for e-passports, credit cards and most access control systems.”[103]. In practice, however, compliance with this standard is most often obtained for card readers, owing to the sheer variety and volume of contactless cards that are produced.

Part 1 of the standard defines the physical properties of the card, including physical tolerances and environmental stresses; its dimensions follow the ID-1 format specified in ISO/IEC 7810 part 1[71].

Part 2 [2] specifies the RF power and signal interface, including details of data modulation and bit representation (coding). Part 3 specifies the initialisation of communication, the use of commands and the data byte format of frames, as well as anticollision methods. Finally, Part 4 [4] defines the transmission protocols.

These systems operate at a range of 0 to 10cm and consist of Proximity Integrated Circuit Cards (PICCs) and Proximity Coupling Devices (PCDs), which transfer data using “proximity coupling” [48]. Under the standard, PICCs are passive tokens: they derive their power and clock from the alternating high-frequency electromagnetic field generated by the PCD, and this field also serves as the channel for communication. The field is produced when current is applied to the looped coils of wire in the PCD antenna (consisting of 3-6 windings). When the PICC is within range of the PCD's field, power is transferred to the PICC's transponder coil by magnetic flux (inductive) coupling. The transponder coil and a capacitor within the PICC form a resonant circuit, tuned to the transmission frequency of the PCD. This sets up a carrier channel between the PCD and PICC, along which the PCD transfers data by direct modulation of the carrier. In the reverse direction, the PICC uses load modulation: by switching a load in its own circuit, it alters the impedance seen at the PCD antenna, which the PCD detects as an amplitude modulation of its antenna voltage.∗
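The resonance condition described above follows the standard LC (Thomson) formula f = 1/(2π√(LC)), which is general circuit theory rather than something stated in the source. The Python sketch below computes the tuning capacitance needed for a coil to resonate at 13.56MHz. The 1 µH coil inductance is a hypothetical value, and a real PICC's tuning also includes the chip's input capacitance and parasitic effects.

```python
import math

CARRIER_HZ = 13.56e6  # ISO/IEC 14443 operating frequency


def resonant_frequency(inductance_h: float, capacitance_f: float) -> float:
    """Thomson formula: f = 1 / (2 * pi * sqrt(L * C)) for an ideal LC circuit."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def tuning_capacitance(inductance_h: float, frequency_hz: float = CARRIER_HZ) -> float:
    """Capacitance that makes an ideal coil of inductance_h resonate at frequency_hz."""
    omega = 2.0 * math.pi * frequency_hz
    return 1.0 / (omega ** 2 * inductance_h)


if __name__ == "__main__":
    coil_h = 1e-6  # hypothetical 1 uH transponder coil
    c_f = tuning_capacitance(coil_h)
    print(f"C = {c_f * 1e12:.1f} pF; "
          f"resonance at {resonant_frequency(coil_h, c_f) / 1e6:.2f} MHz")
```

At a fixed frequency the required capacitance is inversely proportional to the inductance, so doubling the coil inductance halves the capacitance needed.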

The exact approach to data modulation differs between the two signalling interfaces specified in Part 2 of the standard: Type A and Type B. Type A is used for the majority of contactless smart cards and differs from Type B in three main areas [2]:

• The exact method for modulating the magnetic field

• The bit/byte coding

• The anticollision method

The last of these, anticollision, is another important aspect of the standard, covered in Part 3. A collision occurs when more than one PICC is present within the interrogation field of a PCD and their data blocks interfere with one another. This is clearly undesirable, as corrupted data can significantly affect verification times and the authentication of PICCs to the PCD. Anticollision measures ensure that individual PICCs can be selected for communication as necessary; such multi-access is essential in an access control environment where many PICCs may be present. See 6.2 for more details.

∗See 6.1 for further details.
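The principle of singling out one card among several can be illustrated with a simplified binary-tree search, in the spirit of the bit-oriented anticollision used by Type A (Type B uses a different method). In the Python sketch below, each card is represented only by a UID bit string. The reader queries a prefix, all cards whose UID starts with it reply, and the first bit position where the replies disagree marks a collision, so the reader descends into both branches. The UIDs are hypothetical, and the real ISO/IEC 14443-3 procedure (cascade levels, select commands, frame formats) is omitted.

```python
def singulate(uids: list[str]) -> list[str]:
    """Return UIDs in the order a simplified binary-tree anticollision selects them.

    Each UID is a distinct bit string, and all UIDs have the same length.
    """
    if len(set(uids)) != len(uids) or len({len(u) for u in uids}) > 1:
        raise ValueError("UIDs must be distinct and of equal length")
    selected: list[str] = []
    pending = [""]                                # prefixes still to be queried
    while pending:
        prefix = pending.pop()
        responders = [u for u in uids if u.startswith(prefix)]
        if not responders:
            continue                              # no card in this branch
        if len(responders) == 1:
            selected.append(responders[0])        # single reply: no collision, select it
            continue
        pos = len(prefix)
        while len({u[pos] for u in responders}) == 1:
            pos += 1                              # replies agree on this bit
        common = responders[0][:pos]              # bits before the first collision
        pending.append(common + "1")              # pushed first, so explored second
        pending.append(common + "0")
    return selected


cards_in_field = ["1100", "0101", "0110"]         # hypothetical 4-bit UIDs
print(singulate(cards_in_field))
```

Each card is selected exactly once, which is the property the access control environment needs when several PICCs are in the field at the same time.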

2.6 Biometric Authentication

Biometric authentication is the process of identifying a person according to individual behavioural or physical (physiological) characteristics [105], and is carried out by a “biometric system”. A biometric system acquires raw data from a person using a sensor (data acquisition), converts it into digitised data and then processes it further to generate a template representing a distinctive feature set, a process known as feature extraction [79]. A template extracted at the time of authentication is referred to as a live template.

During enrollment, the template may undergo processing to ensure that its quality is of an acceptable saliency∗ before it is stored, either in a storage subsystem such as a database or in a smart token (as is the case for the MoC solution being considered). This stored template is often described as a reference template. The authentication process then takes place, and one or more reference templates are compared with a single live template using a biometric feature matcher [76].

The outcome of the matching stage is either a matching score, a quantifiable measure of the degree of similarity between templates, or a binary decision (access granted or denied). In general, there are five major subsystems (modules) in a generic biometric authentication system responsible for these steps: (1) sensor, (2) feature extractor, (3) storage subsystem, (4) matching module and (5) decision module [80].

The two subtypes of authentication are identification, which involves a 1:n comparison, and verification, a 1:1 comparison [57]. In other words, the former compares a live template with several stored reference templates (commonly used in law enforcement when attempting to identify an individual from a pool of credentials), while the latter compares it with a single reference template. Of these types, it is a verification system that will be the focus of this project.
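The difference between verification (1:1) and identification (1:n), and the step from a matching score to a binary decision, can be sketched in a few lines of Python. This is a toy under stated assumptions: a template is modelled as a set of hypothetical quantised feature codes and the matcher is a Jaccard similarity score standing in for a real fingerprint matcher; only the shape of the two comparisons reflects the text.

```python
from typing import Callable, Optional

# Hypothetical matcher type: takes two templates, returns a score in [0, 1].
Matcher = Callable[[frozenset, frozenset], float]


def jaccard(a: frozenset, b: frozenset) -> float:
    """Toy matcher returning a similarity score in [0, 1]."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def verify(live: frozenset, reference: frozenset, threshold: float,
           match: Matcher = jaccard) -> bool:
    """1:1 verification: one comparison against the claimed identity's reference."""
    return match(live, reference) >= threshold


def identify(live: frozenset, gallery: dict[str, frozenset], threshold: float,
             match: Matcher = jaccard) -> Optional[str]:
    """1:n identification: compare against every enrolled reference, keep the best."""
    best_id, best_score = None, -1.0
    for user_id, reference in gallery.items():
        score = match(live, reference)
        if score > best_score:
            best_id, best_score = user_id, score
    return best_id if best_score >= threshold else None


gallery = {"alice": frozenset({1, 2, 3, 4}), "bob": frozenset({5, 6, 7, 8})}
live = frozenset({1, 2, 3, 9})
print(verify(live, gallery["alice"], 0.5))  # True  (score 3/5 = 0.6)
print(identify(live, gallery, 0.5))         # alice
```

Verification costs one comparison regardless of how many users are enrolled, which is one reason it suits a card that holds a single reference template.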

Figure 2.3 is a simplified diagram of a generic biometric authentication system, showing the basic steps that apply to both verification and identification. It illustrates that during verification an identity is claimed (such as by a PIN) and a single live template representing the claimed identity is compared with a stored template corresponding to the genuine identity. In the example given in figure 2.3, the reference template is stored in a database, although where biometric verification using a smart card is employed, this reference template is typically stored on the card.∗

∗preprocessing also applies to generation of a live template

∗In 2.9 various types of on-card verification strategy will be discussed

Figure 2.3: Processing steps for enrollment, verification and identification [40]

2.7 Errors in Biometric Authentication

Biometric authentication based on physiological features is wide-ranging and includes fingerprint, iris, retinal, facial, vein-pattern and hand geometry recognition among the most popular methods. Regardless of the feature, or biometric mode, there are performance considerations that have to be taken into account, especially when designing a system. In any biometric system, genuine live samples are unlikely to be consistently identical, as they are affected by a range of issues [75], particularly those resulting from inconsistent presentation of the trait and from background noise or distortion. Each system is therefore designed with a particular tolerance threshold, below which a sample is rejected. By its very nature, the biometric matching process is probabilistic [23] and results in errors, which are affected by the threshold levels that are set. This gives rise to two major types of error: false match and false non-match errors. The probabilities of these occurrences are respectively expressed as the “false match rate” (FMR) and the “false non-match rate” (FNMR) [79].∗

Both rates are influenced by the threshold of the system: as the threshold decreases, more false matches are tolerated, so the false match rate increases while the false non-match rate decreases; raising the threshold has the opposite effect. Various attempts have thus been made to formulate consistent methods to calculate error rates [152] and assess overall system performance [96]. One way in which this type of assessment is illustrated is by the use of a Receiver Operating Characteristic (ROC) curve, which plots the FMR against the FNMR at different operating points [77]. The error rate at the threshold where both of these rates are equal is known as the Equal Error Rate (EER), and is a useful indication of the accuracy of a biometric system, as illustrated in figure 2.4.
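The relationship between the threshold, FMR, FNMR and EER can be worked through numerically. The sketch below assumes that a comparison is accepted when its score is at or above the threshold, uses small hypothetical score lists, and approximates the EER by sweeping the observed scores as candidate thresholds; real evaluations use far larger score sets and interpolate between operating points.

```python
def error_rates(genuine: list[float], impostor: list[float], threshold: float):
    """Return (FMR, FNMR); a comparison is accepted when score >= threshold."""
    fmr = sum(score >= threshold for score in impostor) / len(impostor)
    fnmr = sum(score < threshold for score in genuine) / len(genuine)
    return fmr, fnmr


def equal_error_rate(genuine: list[float], impostor: list[float]):
    """Approximate the EER by sweeping the observed scores as candidate thresholds."""
    def gap(t: float) -> float:
        fmr, fnmr = error_rates(genuine, impostor, t)
        return abs(fmr - fnmr)

    candidates = sorted(set(genuine) | set(impostor))
    best = min(candidates, key=gap)
    fmr, fnmr = error_rates(genuine, impostor, best)
    return best, (fmr + fnmr) / 2


genuine = [0.9, 0.8, 0.75, 0.6, 0.4]    # hypothetical genuine-user scores
impostor = [0.1, 0.2, 0.3, 0.5, 0.65]   # hypothetical impostor scores
for t in (0.3, 0.6, 0.8):
    print(t, error_rates(genuine, impostor, t))
print(equal_error_rate(genuine, impostor))  # (0.6, 0.2)
```

Raising the threshold from 0.3 to 0.8 moves the (FMR, FNMR) pair from (0.6, 0.0) to (0.0, 0.6), which is the trade-off described above; at 0.6 both rates are 0.2, giving the EER for this toy data.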

The trade-off between FMR and FNMR is an important consideration for the Match on-Card solution, since any successful attempts made by an impostor to gain access will warrant an increase in the tolerance threshold of the system. This could reduce the convenience of the access control system if an unacceptable number of false non-matches results from such a change.

∗There are other kinds of error: failure to enroll (FTE) and failure to acquire (FTA) [26], [99].

Figure 2.4: FMR vs FNMR (extracted from [76])

2.8 Fingerprint-based Authentication

Fingerprint-based authentication concerns the recognition of fingerprints, the unique features displayed on the epidermis of a digit (finger), which are formed during early fetal development [18]. The features of the digit include papillary ridge and furrow (valley) patterns, from which singularities and more specific features of the ridges (minutiae) are derived, among them ridge endings, bifurcations and lakes [74].

The process of fingerprint authentication involves the same generic steps and

subsystems as in 2.6. A basic overview of this process shall be described in this

section.

The processes of both enrollment and authentication involve similar basic

steps. During fingerprint image data acquisition, a finger is presented to a fingerprint sensor, which reads the biometric feature and converts it to raw data (an image). There are numerous sensing technologies (as will be discussed in 4.3), the majority of which fall within the optical and solid-state families, each using distinct techniques to capture fingerprint images.

Prior to feature extraction, an optional quality assessment module may be

used to assess the image for variations in quality such as broken or unseparated

ridges, image contrast, ridge aberrations and other varying conditions [80][79]. In many cases this module assigns a quality metric between 0 and 1 [27], with higher values indicating better quality. Quality assessment subsystems are more common in traditional AFAS systems than in embedded systems, again due to resource constraints.

Once a fingerprint has been scanned by a fingerprint sensor, the image is typically first digitised and then binarised, with a low-pass filter used to smooth high-frequency image regions, thereby improving clarity and suppressing noisy areas of the fingerprint and background [154]. This can be performed by one of many mechanisms, for example those based on normalisation (to a “pre-specified mean and variance”), local orientation and frequency variations, or contextual filtering [79]. The image can then be further refined by thinning it into a skeleton one pixel wide, from which features can be extracted [109][44].
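Normalisation to a pre-specified mean and variance can be illustrated with the widely used pixel-wise formula G = M0 + sqrt(V0 * (I - M)^2 / V) for pixels above the image mean and G = M0 - sqrt(V0 * (I - M)^2 / V) otherwise, where M and V are the image's mean and variance and M0, V0 the desired values. The sketch follows it with a simple global-threshold binarisation. It is not the method of any cited system: images are plain nested lists, the targets M0 = V0 = 100 are arbitrary, and practical systems binarise with local or adaptive thresholds after orientation-aware filtering rather than with one global threshold.

```python
import math


def normalise(image: list[list[float]], m0: float = 100.0, v0: float = 100.0):
    """Map an image to a pre-specified mean m0 and variance v0, pixel by pixel."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    var = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    if var == 0:
        return [[m0 for _ in row] for row in image]  # flat image: nothing to stretch
    out = []
    for row in image:
        new_row = []
        for p in row:
            dev = math.sqrt(v0 * (p - mean) ** 2 / var)
            # Pixels above the global mean move above m0; the rest move below it.
            new_row.append(m0 + dev if p > mean else m0 - dev)
        out.append(new_row)
    return out


def binarise(image: list[list[float]], threshold: float) -> list[list[int]]:
    """Global-threshold binarisation: dark (ridge) pixels become 1, others 0."""
    return [[1 if p < threshold else 0 for p in row] for row in image]


raw = [[10, 20], [30, 40]]               # hypothetical 2x2 grey-level patch
norm = normalise(raw)
print(binarise(norm, threshold=100.0))   # [[1, 1], [0, 0]]
```

Because every pixel's deviation from the mean is scaled by the same factor sqrt(V0 / V), the output has exactly mean M0 and variance V0, which makes a fixed binarisation threshold meaningful across images captured under different contrast conditions.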

The template signal is next passed to a feature extraction subsystem, which extracts features and renders the signal into a format suitable for matching. Representations are generally based on the spatial location, orientation and type of each minutia [77][109], as used in point matching. However, there are alternative approaches, such as algorithms that act on the number of ridges per unit distance (ridge density), pattern class, rotation and shift [128]. Novel approaches have also been proposed which use characteristic features neighbouring the minutiae, such as adjacent feature vectors [146], which are invariant to global transformations such as rotation and translation. The majority of these approaches tend to be based on proprietary algorithms.

During enrollment, the selected minutiae points (usually between one and

twenty) are stored within an enrolled template [108], which can then be used

for comparison in the matching module during the authentication stage. The resulting decision is made by a decision-making subsystem, and is often based on the proportion of minutiae points that are accurately matched. One way this can be done is by dividing the number of successfully correlated points by the total number in a template. This gives a metric between 0 and 1 (as previously described), where 1 is a 100% match; a threshold is chosen within this range, below which access is denied.
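The point-matching score described above (matched minutiae divided by the total in the template) can be sketched as follows. Every parameter is a hypothetical assumption: the two templates are already aligned, so rotation and translation are ignored; a pair matches when the minutiae share a type and lie within 10 pixels and 20 degrees of each other; pairing is greedy first-fit; and the decision threshold of 0.6 is illustrative. Real matchers, including the proprietary ones mentioned above, align the templates first and use more robust pairing.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Minutia:
    x: int          # position in pixels
    y: int
    angle: float    # local ridge direction in degrees
    kind: str       # "ending" or "bifurcation"


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two directions, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def match_score(reference: list[Minutia], live: list[Minutia],
                dist_tol: float = 10.0, angle_tol: float = 20.0) -> float:
    """Fraction of reference minutiae paired with a distinct, nearby live minutia."""
    used = set()
    matched = 0
    for r in reference:
        for i, q in enumerate(live):
            if i in used or q.kind != r.kind:
                continue
            if (math.hypot(r.x - q.x, r.y - q.y) <= dist_tol
                    and angle_diff(r.angle, q.angle) <= angle_tol):
                used.add(i)      # each live minutia may be paired only once
                matched += 1
                break
    return matched / len(reference) if reference else 0.0


reference = [Minutia(10, 10, 30, "ending"), Minutia(50, 40, 90, "bifurcation"),
             Minutia(80, 20, 200, "ending")]
live = [Minutia(12, 11, 35, "ending"), Minutia(52, 38, 85, "bifurcation"),
        Minutia(140, 90, 10, "ending")]
score = match_score(reference, live)
print(round(score, 3), score >= 0.6)   # 0.667 True
```

Two of the three reference minutiae find a partner, so the score is 2/3 and the sample is accepted at the illustrative threshold of 0.6.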

2.9 On-card Verification Strategies

Three dominant strategies exist for verification using a smart card [44], differing in how the template is used and where the matching process takes place, as illustrated in figure 2.5:

• (i) Template on-Card (ToC), otherwise known as storage on-card.

• (ii) Match on-Card (MoC).

• (iii) System on-Card (SoC).

Figure 2.5: Three Strategies for Fingerprint Verification (extracted from [44])

2.9.1 Template on-Card

Template on-Card (ToC), also known as storage on-card, is a form of biometric verification whereby the smart card acts simply as a storage device that holds the template. In this configuration, the matching is not done within the smart card; the template is instead transmitted to an external device that performs all the functions required for verification, i.e. image scanning, feature extraction and matching.

Where a positive match has been made by the terminal within a PACS, the terminal will securely pass the result to the other subsystems. Hence, beyond the transmission of the template, the card (PICC) is no longer involved in that instance of verification.

Consequently, this is the least secure of the three strategies: because the template leaves the card, it is exposed to interception. Across a potentially insecure RF channel, this requires cryptographic protection or the use of digital signatures to secure the template during communication. Cards used for this process tend to be low-cost state machines, which makes such protection difficult to provide. Furthermore, verification must take place in a device attached to a database, server or network, which are potential points of vulnerability [21]. This method is therefore not ideal for use in a secure PACS.
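The kind of cryptographic protection a ToC template needs in transit can be illustrated with a message authentication code from Python's standard library. This minimal sketch assumes a key already shared by card and terminal (key provisioning is out of scope) and provides integrity and authenticity only: it does not encrypt the template, which an eavesdropper could still read, and it does not by itself stop a captured message from being replayed. The function names are hypothetical.

```python
import hashlib
import hmac
import os

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag


def protect(template: bytes, key: bytes) -> bytes:
    """Append an HMAC-SHA256 tag so the receiver can detect tampering."""
    return template + hmac.new(key, template, hashlib.sha256).digest()


def check(message: bytes, key: bytes) -> bytes:
    """Verify the tag in constant time and return the template, or raise."""
    template, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, template, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("template failed integrity check")
    return template


key = os.urandom(32)              # assumed to be pre-shared by card and terminal
template = bytes(range(16))       # placeholder for a minutiae template
message = protect(template, key)
assert check(message, key) == template
```

Even this modest computation has to run on the card side, which is exactly what the low-cost state-machine cards described above struggle to do.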

2.9.2 Match on-card

Unlike ToC, Match on-card (MoC) verification does not require the stored template, or any representation of it, to leave the card at any stage. Instead, the matching stage takes place within the smart card itself.

In this case, a master template is generated during enrollment, as well as

other identifiable information associated with the template, which are stored

on a card instead of a database subsystem. The sensor is located within the terminal, where a live (query) template, or a representation of it, is produced and passed on to the card, where the matching algorithm is executed. Consequently, any card used in this system requires a microprocessor and an operating system to invoke the matching algorithm, which may also carry out feature extraction prior to the matching process [44]. Cards used for MoC implementations can have advanced microprocessors and smart card operating systems that support this; these operating systems can also carry out authentication and cryptographic operations.

Once the matching process has occurred within the card, a result derived

from the matching process is transferred across the interface between the card

and reader. Security is enhanced in this system, as the tamper-resistant environment of the smart card protects the template, which can only be accessed with considerable effort and resources. A useful example of the kind of architecture used for this process is given in [120], which illustrates how a matching algorithm stored within the chip (as native code) performs the match before the result of a successful match is securely passed up to the application layer.

However, as will be discussed, there are still points of attack that exist in

this system.
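The essential property of MoC, that only a result crosses the interface, can be shown with a toy model of a card object. Everything here is an assumption for illustration: the class and method names are hypothetical, the set-overlap matcher stands in for a real on-card minutiae matcher, and the try counter is included only to show how a status word can carry the result. The status words follow ISO/IEC 7816-4 conventions (9000 for success, 63CX for a failed verification with X tries remaining, 6983 for a blocked authentication method).

```python
class ToyMoCCard:
    """Hypothetical model of a MoC card: the reference template stays inside."""

    SW_OK = 0x9000        # normal processing
    SW_BLOCKED = 0x6983   # authentication method blocked

    def __init__(self, reference: frozenset, threshold: float, max_tries: int = 3):
        self._reference = reference      # stands in for the template in EEPROM
        self._threshold = threshold
        self._max_tries = max_tries
        self._tries_left = max_tries

    def _score(self, live: frozenset) -> float:
        # Toy on-card matcher; a real card would run a minutiae matcher.
        union = self._reference | live
        return len(self._reference & live) / len(union) if union else 1.0

    def verify(self, live: frozenset) -> int:
        """Receive a live template; return only a status word, never a template."""
        if self._tries_left == 0:
            return self.SW_BLOCKED
        if self._score(live) >= self._threshold:
            self._tries_left = self._max_tries
            return self.SW_OK
        self._tries_left -= 1
        return 0x63C0 | self._tries_left  # 63CX: verification failed, X tries left


card = ToyMoCCard(frozenset({1, 2, 3, 4}), threshold=0.5)
print(hex(card.verify(frozenset({1, 2, 3, 9}))))  # 0x9000
print(hex(card.verify(frozenset({7, 8}))))        # 0x63c2
```

The terminal only ever sees a two-byte status word; the reference template and the score remain inside the card object.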

2.9.3 System on-card

In a system on-Card (SoC) verification system, the data acquisition, feature

extraction and matching processes all take place within the smart card, as the

fingerprint sensor is present within the card. Cards using this type of verification

system must be capable of high performance and their components are likely to

be expensive.

Potentially the most secure implementation of these strategies is SoC verification. However, such systems would require investment in state-of-the-art microcontrollers, including embedded co-processors and additional processing components [50], which, within a high-volume PACS, may not always be cost-effective. Even processors up to and including 32-bit RISC do not provide enough processing capacity to cope with feature extraction or image processing computations, at least not without significant investment in additional memory and components to speed up this process [44]. Although enhancements are certainly progressing in this area and various modified architectures have been proposed [50], SoC-based cards are not widely used, particularly among microprocessing cards with proximity interfaces.

This leaves MoC as the most widely accepted form of biometric verification involving a template held on a smart card, offering a suitable compromise between capability and security.

tests specified therein, in order that they are suitably flexible to avoid damage, as per ISO/IEC 10373-1 [73]; in addition they must adhere to a reasonably small

form factor. This significantly limits the smart card die and capacitor size, and

the specific components included within the smart card microcontroller (i.e.

memory and processor).

3.2 Resources on the Microcontroller

A microprocessing smart card will, by its very nature, contain a microcontroller (located beneath the electrical contacts), within which the main functional resources are contained. It will contain the following components as a minimum [46]:

• CPU

• Memory: RAM, ROM and EEPROM.

These components are the basic limiting factors with regard to the overall performance of a smart card and its ability to perform functions efficiently. Their functions are summarised below.

CPU: the processing unit within the microcontroller of a smart chip. It is responsible for all of the card’s logic and activities, is closely associated with the memory modules within the card, and is directly affected by the clock speed (signal).

ROM: the memory module that has data written to it once during the lifetime of a smart card, after which the contents become read-only. This data is identical across a production batch. In the more advanced smart cards ROM contains the elementary parts of an operating system, as EEPROM can be used to load any additional data or code.

RAM: the memory module containing temporary, volatile data (i.e. working memory) used for run-time processing.

EEPROM: programmable memory, which performs the important task of extending the functional capabilities of a chip card. This is particularly useful when the smart card needs to be updated, for example when adding fingerprint minutiae matching application code to a card, as this can be done after the manufacturing process.

In addition to these three types of on-board chip memory, flash memory has been integrated into some of the latest chip cards, as it has faster write capability, generally ages better than EEPROM, and allows configurations to be soft-loaded. However, because of security concerns about flash memory and its higher cost, it is at present rarely used for on-card verification within PICCs.

3.2.1 Processing Capability and Clock signal

The vast majority of smart cards do not generate their own clock signal, but are

reliant on the clock signal of an external terminal, from which the internal clock

signal (that powers the logic of the microprocessor) is derived. The resultant

internal clock signal of a processor is normally around half that of the external

clock signal generated from the oscillators of a reading device, which further

affects the ability of the processor to perform instructions.

Under the latest revision of ISO/IEC 7816-3 [70], the thresholds for the “VCC” contact of an ISO-compliant smart card, covering supply voltage and supply current, are specified for card classes A, B and C. The specified supply voltage ranges from a minimum of 1.62V to a maximum of 5.5V, and the maximum supply current ranges from 30 to 60 mA, depending on the class.

The standard also specifies thresholds for the CLK contact, which receives the clock signal. The recommended minimum frequency while the clock is active is 1MHz and the maximum is 5MHz, although in practice the clock tends to be set at 3.57MHz [70]. This is low, and becomes a particularly limiting factor for the ID-1 standard card. It contrasts with state-of-the-art conventional Personal Computers, whose clock signals commonly run in the GHz range.

These constraints can be overcome by the use of additional circuitry to control the internal clock frequency, including phase-locked loops (PLLs) [60]. These circuits sit functionally between the external and internal clocks, and have been used in embedded systems as a means of boosting the frequency of the internal clock signal [112] derived from that of the external clock (frequency multiplication). The latest version of ISO/IEC 7816-3 (2006) allows for a maximum clock frequency of 20MHz, which would support this.
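The effect of these clock figures on matching time is simple arithmetic: time equals cycles divided by internal clock frequency. The sketch assumes a hypothetical workload of five million CPU cycles for one match and a hypothetical PLL multiplication factor of 4; neither figure comes from the cited sources, and the 0.5 factor models the "around half" internal clock described at the start of 3.2.1.

```python
def internal_clock_hz(external_hz: float, factor: float = 0.5) -> float:
    """Internal clock derived from the terminal's clock.

    factor=0.5 models an internal clock at about half the external one;
    a factor above 1 models PLL frequency multiplication (hypothetical value).
    """
    return external_hz * factor


def run_time_s(cycles: int, clock_hz: float) -> float:
    """Time to execute a given number of CPU cycles at a given clock rate."""
    return cycles / clock_hz


CYCLES = 5_000_000  # hypothetical cycle count for one on-card match
for label, factor in (("divided by 2", 0.5), ("PLL x4", 4.0)):
    f = internal_clock_hz(3.57e6, factor)
    print(f"{label}: {f / 1e6:.3f} MHz -> {run_time_s(CYCLES, f):.2f} s")
```

With these assumptions the same match takes roughly 2.8 s at 1.785 MHz but about 0.35 s at 14.28 MHz, which illustrates why clock multiplication matters for entry times at a door.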

In modern smart cards, these components are included to resolve power and clock issues during the design phase of contact-based smart cards, for security purposes [59] in biometric-capable embedded systems [11], and as a method of efficient power management.∗ However, this is not always ideal, as clock multipliers can interfere with the clock signal in RF-based systems [90], and they have no bearing on EEPROM read/write cycles.

Dedicated crypto-coprocessors have been used to support cryptographic processing at a low level, an essential requirement for sufficient security over a contactless interface [36]. Their implementation is no longer considered one of the main factors influencing the overall processing time of a smart card [100]. In the current market, RISC architecture-based chips typically contain these; an example is the FameXE coprocessor within the P5CD036 chip by NXP [116], which supports advanced cryptosystems.

3.2.2 Memory

The memory modules are all limited in capacity compared to those within PCs.

The ROM, while the most efficient in terms of packing density, allows software to be written only once. It is therefore typical for the operating system to be loaded into it, where it remains permanently written. It is not used for any transient storage or dynamic data, although, depending on the smart card ROM mask, it can be designed to contain the matching code (during manufacturing) in order to reduce the storage burden on the rest of the memory on the microcontroller. However, adding specialised functionality to ROM lengthens the manufacturing process, contributing to increased production costs. Regardless, with improvements in storage capacity in current generations of smart cards (particularly EEPROM), advanced smart card operating systems and platforms have been developed that support multiple applications (and programming interfaces) beyond previous resource limitations.

∗A PLL can also offset power consumption and regulate the clock signal during heavy-duty processing otherwise carried out by any coprocessor within the microcontroller

EEPROM is the next most useful in terms of dynamic storage and overall

packing density. EEPROM does age, however, and is limited to a typical write cycle threshold of “between 10 000 and 100 000 for EEPROM cells” [48] per lifetime. EEPROM is important in the context of MoC systems as it is used as non-volatile long-term storage for the matching code and/or reference template of the enrolled user. In microprocessing cards such as the Java Card, applets written to the Java Card API [21] are stored in EEPROM.

Writing to EEPROM requires a write voltage of 12V, whereas the card is supplied with only 3V or 5V (as constrained by ISO/IEC 7816). Moreover, the power that the PCD can provide to the card is restricted by the field strength limits of ISO/IEC 14443, with a maximum of 7.5 A/m [94]. The extra voltage is provided by a cascaded charge pump, integrated as standard into the smart card microcontroller, which can generate up to 25V.

RAM is the least densely packed memory and is at a premium compared with the other memory modules within the microcontroller. It is also a crucial element of the on-card matching process, as it is used to store dynamic, volatile data and therefore influences the overall runtime environment. This includes any session data present, as well as any results required in computations, i.e. matching results. RAM is also the fastest form of memory to write to, approximately 1000 times faster than EEPROM [120].

Historically, and until recently, memory has been a restrictive factor for smart cards, with a direct bearing on computations, which themselves impose more complex processing requirements, both in running matching algorithms and in any cryptographic security mechanisms incorporated. Nonetheless, improvements in this space are certainly being seen.

One memory-related constraint for PICCs is that templates must be much smaller than the images stored within conventional online databases. Biometric templates are therefore short, tending to be around 512 bytes [30], yet even so they tend to be transmitted within multiple APDU structures [44].

3.3 Data Transmission

Transmission speeds are naturally among the most influential aspects in terms

of the performance times for on-card verification.

As specified in part 3 of ISO/IEC 7816 [70], data at the physical layer are sent as bit streams over an asynchronous half-duplex connection. The equivalent protocol for contactless transmission is referred to as T=CL [48]. Any aggregated blocks of data must therefore be framed by synchronisation and termination bits. Timing must be coordinated between the reading device and the smart card, and this process is largely dependent on the clock signal of the microcontroller. The more commonly deployed 32-bit RISC processors are being used alongside increased quantities of RAM and EEPROM, so this constraint is increasingly tolerated. In contactless systems, type A PICCs support higher communication rates of up to 848 kbit/s [2]. This supports faster data transfers than the serial interfaces used in many match on-card implementations.
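A rough lower bound on how long the contactless link needs to carry a template follows from the bit rate alone. The sketch below uses the four ISO/IEC 14443 bit rates rounded to nominal values and a 512-byte template (the size cited in 3.2.2); it deliberately ignores start and end bits, CRCs, frame waiting times and card processing, all of which lengthen real transfers.

```python
# ISO/IEC 14443 bit rates (fc/128, fc/64, fc/32, fc/16), rounded to nominal values.
BIT_RATES = {"106 kbit/s": 106_000, "212 kbit/s": 212_000,
             "424 kbit/s": 424_000, "848 kbit/s": 848_000}


def transfer_time_ms(payload_bytes: int, bit_rate: float, bits_per_byte: int = 8) -> float:
    """Raw serialisation time for a payload, ignoring framing and protocol overhead.

    Set bits_per_byte=9 to account for a per-byte parity bit, such as the one
    Type A framing adds at 106 kbit/s.
    """
    return payload_bytes * bits_per_byte / bit_rate * 1000


for label, rate in BIT_RATES.items():
    print(f"512-byte template at {label}: {transfer_time_ms(512, rate):.2f} ms")
```

Under these assumptions the raw transfer falls from roughly 39 ms at 106 kbit/s to under 5 ms at 848 kbit/s, so at the higher rates the block-size and buffer limits discussed next, rather than the raw bit rate, tend to dominate.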

Within a smart card, the transport and application layer message block sizes are limited. This is also the case for contactless cards using the T=CL protocol, where the APDUs that fit within the frames are bounded by an upper limit of 255 bytes. These limits are set by the specifications of ISO/IEC 14443, which has resulted in a fragmented system of transmission, despite the allowance for chaining of blocks under the message protocol. In addition, the I/O buffer sizes are limited, which significantly influences the timing of communications [85]. The buffers can be enlarged to tolerate larger message sizes; however, this affects the runtime environment and demands greater RAM capacity. As RAM is at a premium in smart cards, even to an extent in current-generation microprocessors, this increases cost.
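Command chaining is how a template longer than one short APDU is delivered despite the 255-byte limit. The sketch below splits a payload across several short command APDUs using the ISO/IEC 7816-4 chaining convention, in which CLA bit b5 (0x10) is set on every command except the last. The function name is hypothetical, the INS value 0x21 and the zero P1/P2 bytes are illustrative only (the instruction and parameters an actual MoC card expects are defined by its application), and the card's response APDUs are omitted.

```python
def chain_apdus(cla: int, ins: int, p1: int, p2: int, data: bytes,
                max_chunk: int = 255) -> list[bytes]:
    """Split data across short command APDUs using ISO/IEC 7816-4 command chaining.

    Every APDU except the last has CLA bit b5 (0x10) set, telling the card that
    more data belonging to the same command follows.
    """
    if not data:
        raise ValueError("no data to send")
    if not 1 <= max_chunk <= 255:
        raise ValueError("short APDUs carry at most 255 data bytes")
    chunks = [data[i:i + max_chunk] for i in range(0, len(data), max_chunk)]
    apdus = []
    for n, chunk in enumerate(chunks):
        is_last = n == len(chunks) - 1
        header = bytes([cla if is_last else cla | 0x10, ins, p1, p2, len(chunk)])
        apdus.append(header + chunk)  # CLA INS P1 P2, Lc, then the data field
    return apdus


template = bytes(512)  # placeholder for a 512-byte minutiae template
for apdu in chain_apdus(0x00, 0x21, 0x00, 0x00, template):
    print(apdu[:5].hex(), len(apdu) - 5)
```

A 512-byte template therefore needs three commands (255 + 255 + 2 data bytes), each adding its own header, frame overhead and round trip.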

3.4 Impact of Constrained Resources

These restrictions all affect how quickly verification can be performed on a smart card. In practice, this means that extra expense is required to ensure that each of the microcontroller components is sufficiently advanced for verification to be carried out within a suitable length of time. State-of-the-art specifications do exist at present; for example, the most recent version of Gemalto’s .NET card (which can be used with .NET match on-card application software for match on-card verification) is the .NET v2+ card (chip model SLE88CFX4000P). This card has 400kB EEPROM and 16kB RAM, as well as an internal clock at 66MHz, an external clock of up to 10MHz and an operating voltage between 1.52 and 5.5V [55].

However, this type of advanced processing is still relatively uncommon in PACS used within large user communities. Each hardware component incorporated at the design phase adds cost, and these costs multiply across the large number of cards a PACS must support. In practice, compromises are always made, even within biometric-based systems, depending on whether speed of entry or the level of security is the priority.

4.1.2 The Attacker Profile

In the context of this project, the attacker by definition will be an intelligent

collusive adversary; in other words, an adversary with the ability to collude with personnel at the site of the PACS to gain “insider” knowledge, or an insider themselves. For convenience, the attacker will frequently be referred to as the profiled attacker.

The equipment available to them will only be of limited cost and sophistica-

tion, but given the attacker’s level of intelligence, they are potentially capable

of creating some additional components of their own, mirroring those of the sys-

tem. This would include rogue cards, external terminal devices and synthetic

gummy fingers, all of which can be covered under a moderate budget.

The discussion will focus on the main points of vulnerability inherent in, and unique to, a MoC PACS using a contactless interface. As such,

it will exclude any detailed discussions of the generic types of attacks that can

be performed against smart cards (PICCs). The attacks discussed will not focus

on those involving the manipulation or analysis of the PICC, which is mainly

concerned with deriving key information that could be used to compromise

the template stored within. Instead, it will be assumed that the storage of

the template itself is trusted, although in practice this may not be the case.

This is an important exclusion, since a vast number of potential categories of

attack against a card (and therefore the stored template itself) exist as well as

respective countermeasures. Many of these are well covered within [15], [14],

chapter 8.2 of [124] and chapter 9 of [103]. ∗

One reason for this exclusion is that such an adversary is less likely to dedicate their limited time and resources to reverse engineering components of a smart card or analysing the effect of random power or timing fluctuations, preferring instead to carry out low-cost attacks that exploit very apparent vulnerabilities in such systems. Consequently, it has been assumed that the attacker’s profile will reflect this, so that the main attacks of concern can be discussed.

∗Although attacks on the storage subsystem are not covered, template security will be partly addressed in [20]

4.1.3 The Generic System

The focus will be refined to reflect the attacker profile described, and a reasonably generic set of components for the PICC and access terminal (reading) device. This will provide the basis of a framework within which various applicable attacks and countermeasures can be discussed.

In that respect, some assumptions can be made:

• The same basic PACS components as in figure 4.1 will be used.

• The terminal device is assumed to be physically robust, regularly maintained and free of any operational defects.

• The sensor and feature extraction functions are both contained within the same subsystem.

• It is assumed that the terminal will not permanently store any card image or template data, but rather that it loads a live image into RAM for the signal processing/feature extraction steps. The RAM will be flushed periodically as part of normal system housekeeping.

• Any components used as part of access control decision making (as per

2.1) are assumed to be secure.

• The PICC will store and match ISO/IEC 19794-2 format minutiae templates.

• The type of fingerprint sensor shall not be pre-defined explicitly; it is assumed to be a reasonably middle-market optical or capacitive sensor.

• The terminal device is free of any malware and on a hardened network.

The component-level features of the system shall not be further defined.

4.2 Applicable attack routes

Jain et al. [80] identified two broad categories of failure in a biometric system. The first is an “intrinsic failure” and the second a system failure resulting from an adversarial attack. It has already been assumed that the terminal is free of operational defects; in other words, the system is assumed to operate within its intended parameters. Hence intrinsic failures are not of concern in the scope of this discussion. However, this does not preclude the possibility that attackers can exploit various weaknesses inherent in terminals (in particular their sensors), which is of course the principal topic of focus.

Within a standard biometric verification system, there are various points of

compromise that can be realised. In [126], eight distinct points of compromise applicable to such generic biometric systems are highlighted, namely the following points of attack:

1. At the point of the sensor - i.e. by presentation of a fake biometric.

2. Along the communications interface between the sensor and feature ex-

traction subsystems.

3. Within the feature extraction subsystem.

4. Between the feature extraction subsystem and matching subsystems.

5. Within the matching subsystem.

6. Within a template storage subsystem, at the level of the stored template.

7. Along the channel between the storage and matching subsystems.

8. Between the matching subsystem and application device.

This is useful, to an extent, as a framework for discussion. However, when it is mapped onto the model of the MoC PACS shown in figure 4.1, some of these attack points do not apply, both because the subsystems concerned sit in different logical locations and because of the specific scope of this project.

Attack point 3 involves overriding the feature extractor, which would in theory require malicious software (both to override the feature set and to select arbitrary features within the system), allowing the system to be compromised later. Cleverly crafted software, such as a Trojan horse, would allow an attacker to inject such variations at will. However, the infrastructure assumed for the attacks under consideration includes a hardened network and a malware-free terminal, so this attack point is excluded.

Because the sensor and the feature extraction subsystem are both contained within the terminal device (and are assumed here to form a single subsystem), replay attacks on the link between them (attack point 2) are out of scope. As discussed in 4.1.2, the smart card is assumed to be trusted; since template storage and matching both take place on the card in a MoC system, this also excludes attack points 5, 6 and 7.

Hence the attack points applicable within this model are 1, 4 and 8, and each is discussed in the following sections.

It is also worth noting that, in this MoC implementation, attack point 4 lies across the contactless interface. The same interface would also carry a further attack point, between the matching subsystem and the decision-making subsystem (attack point 8).

4.3 Spoofing attacks on the sensor

The sensor subsystem (attack point 1) remains a major point of vulnerability in a biometric system, and various attacks can be targeted against it in a MoC system.

A fingerprint sensor is a device that reads the surface characteristics of a finger, in particular its ridges and valleys. The vast majority of sensors fall into one of two categories, optical or solid-state, and the most common solid-state sensors (and the most widely used sensors overall) are capacitive. Optical sensors generally detect differences in reflected light, while capacitive sensors detect differences in capacitance between friction ridges and valleys [141]. Both types are commonly used in MoC systems, for example the capacitive sensor used by Precise Biometrics' BioAccess 200 fingerprint scanner [24] and the optical sensor within the MorphoAccess 120 PIV card [130]. Several examples of both types of sensor are given in Chapter 2 of [79].

The simplest attacks require no fabricated artefact from an impostor; instead, they exploit latent fingerprints already left on the sensor. Early attacks of this kind are documented in [93], where fingerprint readers with capacitive sensors (albeit built into a desktop mouse) were fooled simply by breathing on the sensor surface while cupping a hand around it. Provided sufficient fatty residue had been left behind, this was shown to reactivate the latent fingerprint and fool the system.

It has also been shown [45] that attacks can be carried out by developing latent fingerprints with printing toner and then lifting them with tape, as well as by producing wax moulds to present to a sensor.

A ground-breaking study by Matsumoto et al [102] showed that artificial fingerprints can be synthesised as gelatinous “gummy” sheaths designed to fit around the fingertips of impostors. In these cases, the artificial fingers fooled 11 state-of-the-art sensors of both the optical and capacitive categories.

4.3.1 Anti-spoofing countermeasures and exploitability

A wide variety of liveness detection mechanisms have been developed and built into sensors; the main types are covered here. Since the vast majority of sensors are optical or solid-state, including those assumed in the generic environment under consideration, most spoofing techniques target the liveness detection mechanisms built into these types. Other types of sensor do exist, such as ultrasonic sensors, which emit high-frequency signals and capture the resulting echoes from the fingerprint layer. For example, high-frequency ultrasonic pulses reflecting off the fingerprint surface can measure the difference in acoustic impedance between surface features and the valleys (i.e. air) to produce an image of the fingerprint [134]. However, these components are currently relatively expensive, so the focus here remains on optical and solid-state sensors.

Various studies on liveness detection have tried to establish exactly which vulnerabilities artificial fingerprints exploit, and which fingerprint properties these relate to. A useful categorisation [52] summarises three major categories of these properties:

• Analysis of skin details.

• Static properties of the finger, e.g. temperature.

• Dynamic properties of a finger.

The detection mechanisms discussed below relate to these categories.

Skin Details:

The coarseness of the skin surface can be measured and used to differentiate between a live and an artificial finger, as the latter is generally coarser. In [107] this was done by treating the coarseness as white noise superimposed on the ridge features and removing it using wavelets, so that the amount of noise removed indicates how coarse the surface is. Other skin features have also been measured, including sweat pores, which can be detected at high resolution. This became necessary because studies had shown that these features could easily be reproduced in artificial fingers [102].
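To illustrate the wavelet idea, the following is a minimal sketch, not the method of [107]. It assumes a single 1-D intensity profile sampled across the ridges (a real implementation would apply a multi-level 2-D transform to the whole image), applies a one-level Haar transform, and estimates the white-noise level as the median absolute finest-scale detail coefficient divided by 0.6745, a standard robust estimator. The function names, the synthetic profiles and the noise levels are hypothetical.

```python
import math
import random
import statistics


def haar_details(profile):
    """One-level orthonormal Haar transform: return only the detail (high-pass) coefficients.

    Each pair of neighbouring samples (a, b) gives (a - b) / sqrt(2). Smooth ridge
    structure produces small values; pixel-to-pixel roughness produces large ones.
    """
    return [(profile[i] - profile[i + 1]) / math.sqrt(2)
            for i in range(0, len(profile) - 1, 2)]


def coarseness(profile):
    """Estimate the standard deviation of white noise superimposed on a ridge profile.

    Robust estimate: sigma ~= median(|d|) / 0.6745 over the finest-scale detail
    coefficients d (0.6745 is the median of |X| for a standard normal X).
    """
    return statistics.median(abs(d) for d in haar_details(profile)) / 0.6745


def ridge_profile(n=400, period=40.0, noise_sigma=0.0, seed=0):
    """Hypothetical test signal: sinusoidal ridges/valleys plus optional Gaussian noise."""
    rng = random.Random(seed)
    return [128 + 50 * math.sin(2 * math.pi * i / period) + rng.gauss(0, noise_sigma)
            for i in range(n)]


live_like = ridge_profile(noise_sigma=0.0)   # smooth surface (illustrative only)
fake_like = ridge_profile(noise_sigma=10.0)  # coarser surface, modelled as white noise
print(f"live-like: {coarseness(live_like):.2f}  fake-like: {coarseness(fake_like):.2f}")
```

The noisier (coarser) profile yields the larger estimate, so a threshold on this value could separate the two. Even the noise-free profile gives a non-zero estimate, because part of the ridge signal leaks into the finest detail band; in this sketch that leakage shrinks as each ridge period spans more samples, i.e. at higher scan resolution.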

Static Properties of the Finger:

Capacitance and reflectance, as described above, also belong to this category, and using the attack methods described in 4.3 they can be fooled by latent lifts and by artificial moulds or gelatinous fingers of various sorts. In the former case, a capacitive sensor can be fooled simply by moistening the lift with saliva or water, which raises its conductivity towards that of live skin. In the latter, optical sensors (which measure reflected light) can be fooled by gelatinous artificial fingers, or by a thin silicone layer, whose optical properties are similar to those of an enrolled user’s finger [79].

The thermal properties of the finger are another static property factored into simple liveness detection mechanisms, on the basis that a gelatinous finger would normally be a couple of degrees cooler than a live finger. Solid-state scanners that implement this check measure the temperature of the presented finger and accept it only if it falls within a preset range [151]. However, finger temperature is irregular, not only because body temperature can vary to a small degree but also because of external influences. Moreover, the differences between live and artificial fingers are small, so it is difficult (and counterproductive) to restrict verification to any meaningful temperature range. As a result, artificial fingers and gelatinous sheets will fool several of these detection mechanisms: the former can be warmed incrementally in a plastic bag, and the latter simply placed on a finger until it is verified [93].

Dynamic Properties of a Finger:

Probably the most effective detection mechanisms are those that rely on characteristics unique to live fingers, i.e. those that vary over time and are dynamic. There are several types of dynamic characteristic, and they are not covered exhaustively here.

Blood pressure and pulse oximetry (the oxygenation of haemoglobin) can be detected by optical scanners that are augmented to image the subdermal layers of the finger on the basis of several characteristics. One example uses chromatic texture, as seen under different wavelengths of light, to differentiate between spoofed and live fingers [114].

More recently, multispectral analysis has been extended to combine other methods, such as contactless imaging with polarisation [9].
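Optical pulse detection, the principle behind pulse oximetry, relies on the small, heartbeat-synchronous variation in the light absorbed by perfused tissue, which an artificial finger does not produce. The sketch below is not the chromatic-texture method of [114]; it is a simpler, swapped-in illustration that assumes the sensor can report the mean reflected intensity of successive frames at a known frame rate, and it searches a plausible heart-rate band for a periodic component using normalised autocorrelation. The function name `detect_pulse`, the 40 to 180 bpm band and the 0.5 threshold are all hypothetical.

```python
import math


def detect_pulse(samples, fs, min_bpm=40.0, max_bpm=180.0, threshold=0.5):
    """Hypothetical liveness check: look for a heartbeat-like periodic component.

    samples: mean reflected intensity of successive sensor frames (assumed input)
    fs:      frame rate in Hz
    Returns (detected, estimated_bpm), with estimated_bpm None when not detected.
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]         # remove the static (DC) light level
    energy = sum(v * v for v in x)
    if energy == 0.0:                       # perfectly constant signal: nothing pulsing
        return False, None

    # Only examine lags (in frames) that correspond to plausible heart rates.
    min_lag = max(1, int(fs * 60.0 / max_bpm))
    max_lag = min(n - 1, int(fs * 60.0 / min_bpm))

    best_lag, best_r = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Normalised autocorrelation: near 1 if the signal repeats every `lag` frames.
        r = sum(x[i] * x[i + lag] for i in range(n - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r

    if best_lag is None or best_r < threshold:
        return False, None
    return True, 60.0 * fs / best_lag


fs = 50.0                                   # assumed frame rate (Hz)
# Synthetic live finger: small 1.2 Hz (72 bpm) ripple on a constant level, 10 s long.
live = [100 + 2 * math.sin(2 * math.pi * 1.2 * i / fs) for i in range(500)]
# Synthetic artificial finger: no blood flow, so no ripple at all.
fake = [100.0] * 500
print(detect_pulse(live, fs))
print(detect_pulse(fake, fs))
```

With the synthetic 72 bpm signal the detector should report a pulse close to that rate, while the constant signal is rejected. The sketch also needs several seconds of contact (500 frames at 50 Hz is 10 s), which hints at the usability cost of dynamic checks discussed in 4.3.2.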

Skin elasticity is another property that can be used to distinguish live from artificial fingers: when a finger is, for example, compressed and rotated against a sensor, its deformation can be used to create a distinctive image [16].

Another dynamic feature exploited in novel detection mechanisms is odour: the odourants emitted by a finger are detected electronically and used to profile it. Electronic noses capable of detecting such emissions have been built [19].

4.3.2 Feasibility of Spoofing Attacks

Given the broad variety of attacks and defences described above, how effective these attacks and countermeasures can be depends on the relative resources of the system owner and the attacker. With only a moderate investment, it can be taken as given that some basic liveness detection mechanisms will be included, and these will stop attacks that use latent or moulded fingerprints; indeed, this is the case with most current MoC implementations. The exception is artificial fingers developed from such latent prints, as in [131]. This attack is potentially one of the more serious, as it is available to the profiled attacker without any involvement from an enrolled user. In general, liveness detection mechanisms that examine skin details and static properties of the finger are prone to spoofing: given enough time, the resources available to the profiled attacker will counter such measures with reasonable ease.

Some recent methods analyse static features statistically and fuse the results [33]. However, it is questionable whether building in these detection mechanisms is cost-effective or scalable. Spoof detection based on the dynamic features of a finger is generally more effective than the other methods but, as with any new implementation, it can also be expensive.
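The specific statistical method of [33] is not reproduced here. As a minimal sketch of the general idea of fusing several liveness measures, the code below assumes that each detector outputs a score between 0 (artificial) and 1 (live) and combines them with a weighted sum, a common score-level fusion rule. The `LivenessScore` class, the detector names, the scores and the 0.5 threshold are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class LivenessScore:
    """Output of one hypothetical liveness detector."""
    name: str
    score: float   # 0.0 = certainly artificial, 1.0 = certainly live
    weight: float  # relative trust placed in this detector


def fuse_liveness(scores, threshold=0.5):
    """Weighted-sum (sum-rule) fusion of detector scores; returns (fused, accept)."""
    total_weight = sum(s.weight for s in scores)
    if total_weight <= 0:
        raise ValueError("at least one detector needs a positive weight")
    fused = sum(s.weight * s.score for s in scores) / total_weight
    return fused, fused >= threshold


# Illustrative attempt with a warmed artificial finger: it passes the temperature
# check, but the other (hypothetical) static measures disagree.
attempt = [
    LivenessScore("temperature", 0.9, 1.0),
    LivenessScore("surface coarseness", 0.1, 1.0),
    LivenessScore("capacitance", 0.2, 1.0),
]
print(fuse_liveness(attempt))
```

Here the warmed artificial finger defeats the temperature check but is still rejected, so an attacker must defeat several detectors at once. Each additional detector, however, adds hardware or processing cost, which is the cost-benefit question raised above.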

In addition, a major hurdle to operating effective liveness detection controls is the usability of the system. Sensors that detect elasticity, such as the one described previously, would in practice require users to undergo some training. Moreover, they would become prone to failure-to-enrol (FTE) errors (as in 2.7) because they are