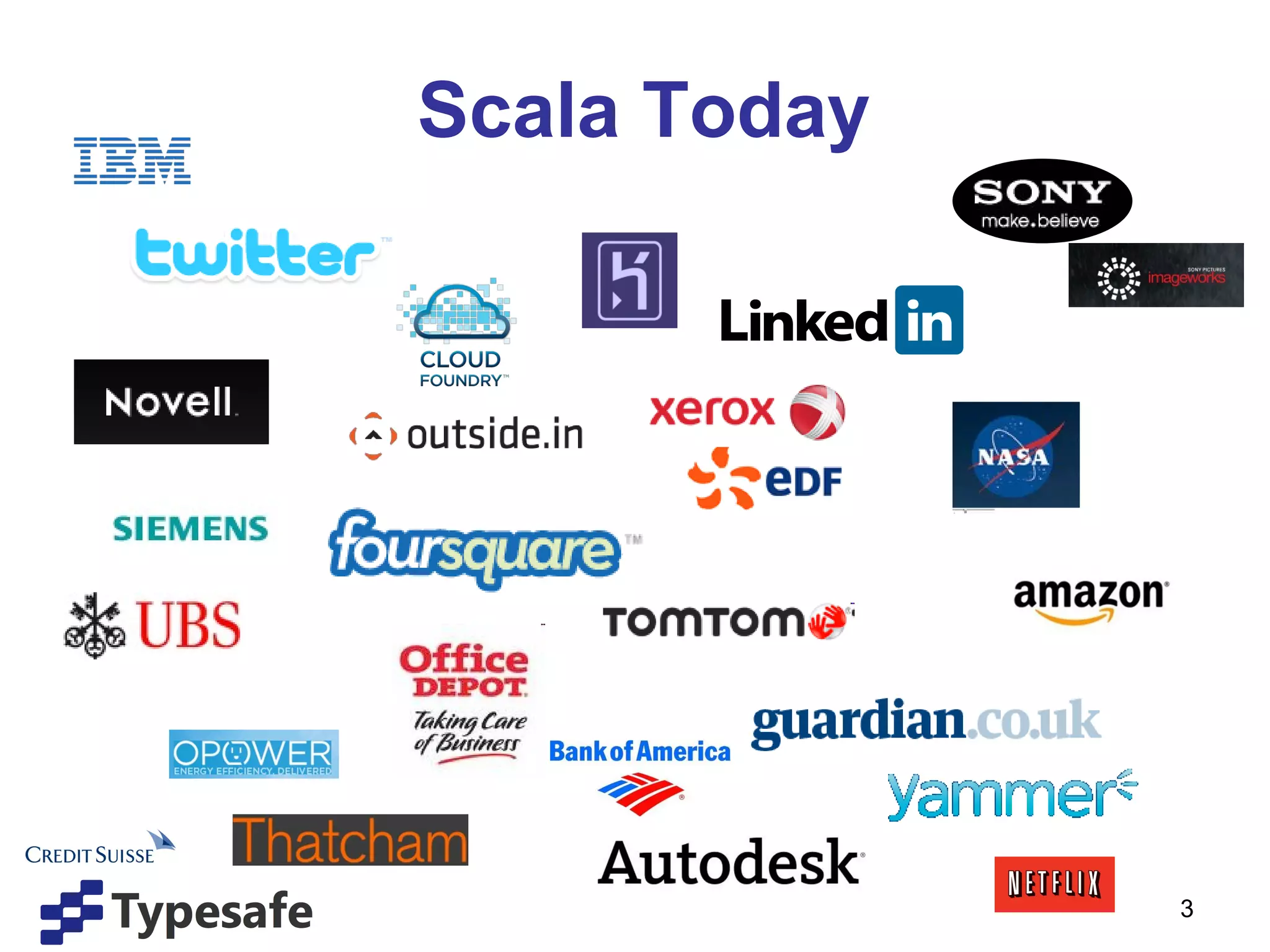

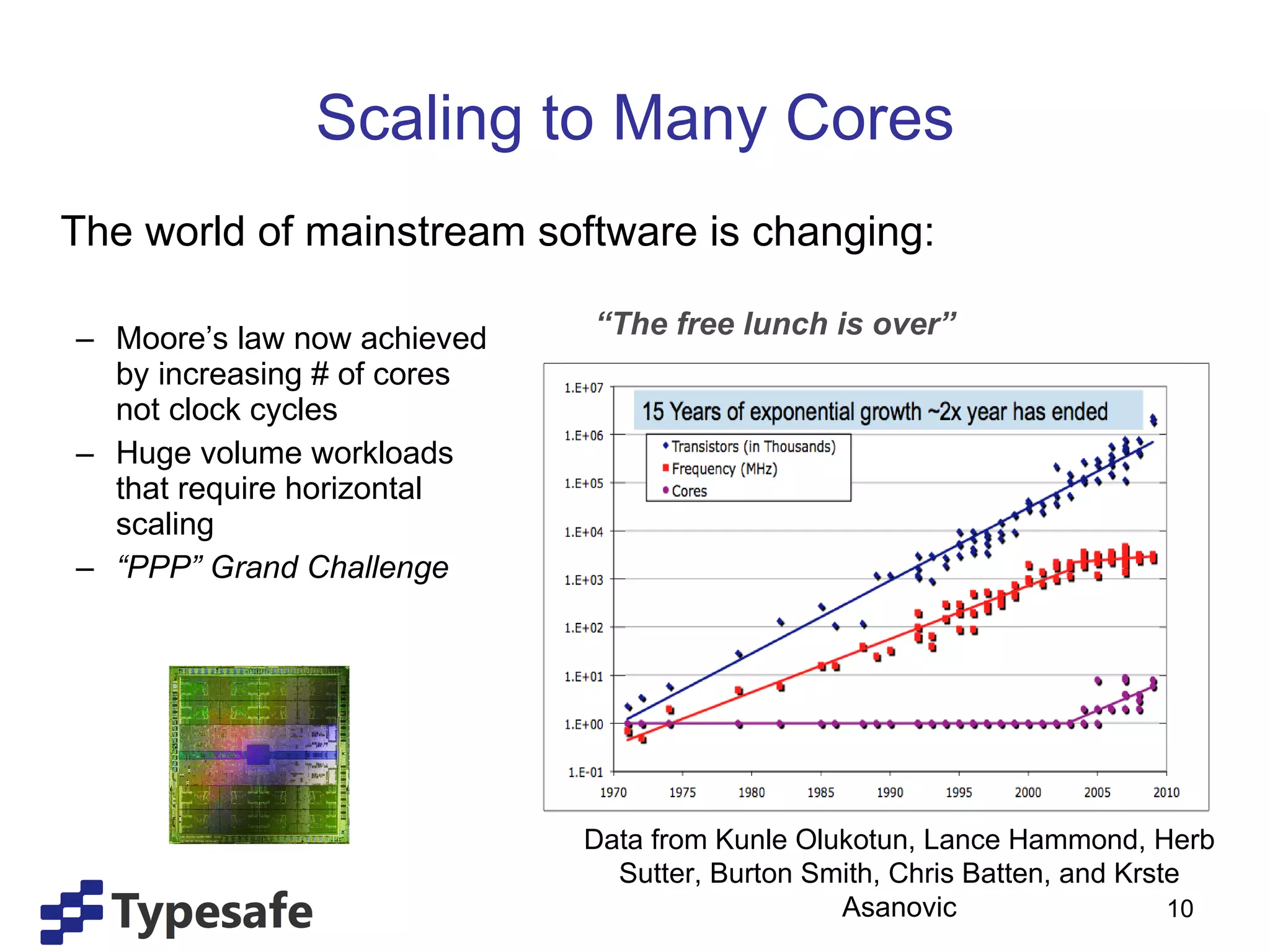

- Scala is being adopted for web platforms, trading platforms, financial modeling, and simulation. Scala 2.9 includes improvements to parallel and concurrent computing libraries as well as faster compilation.

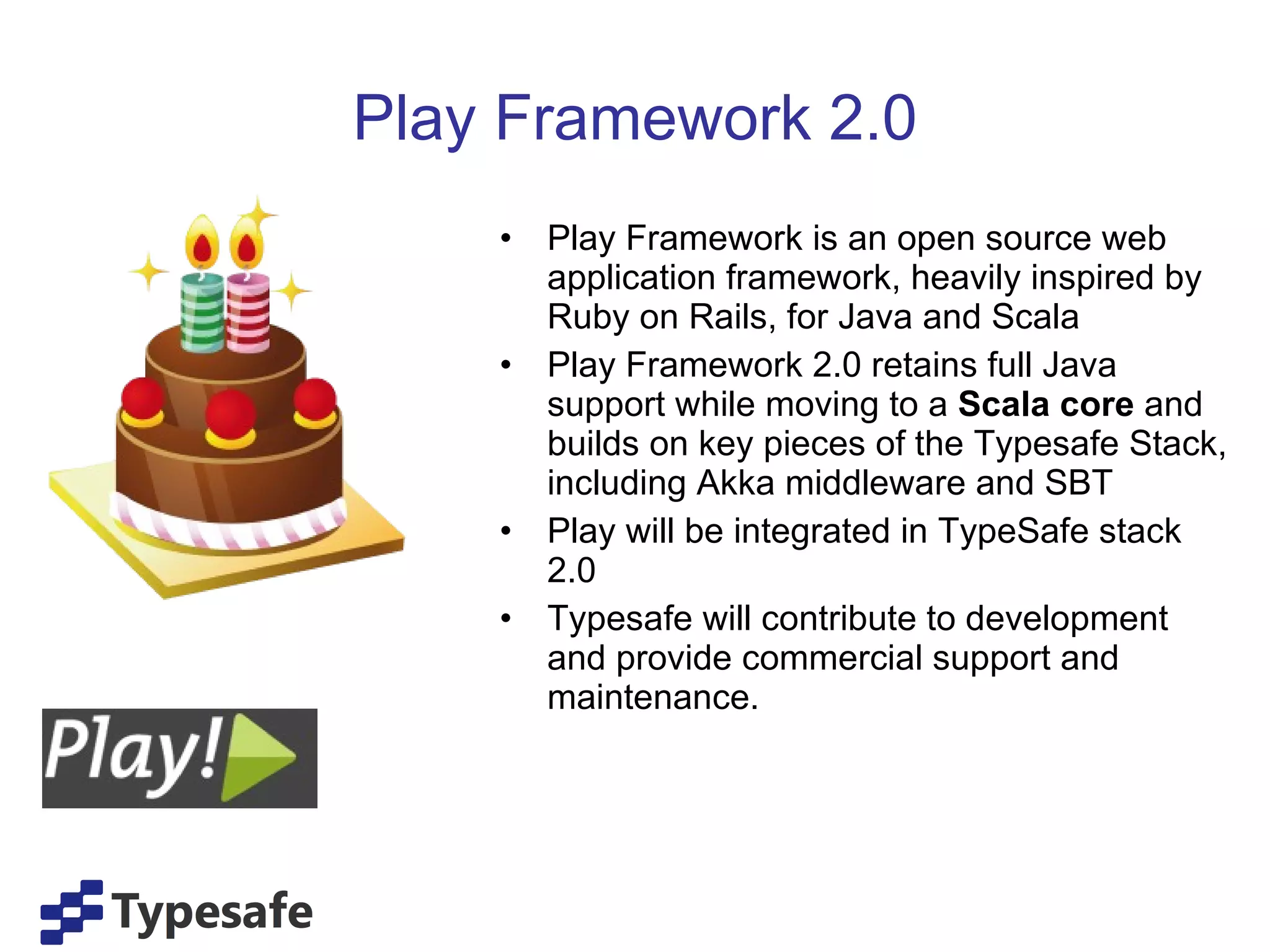

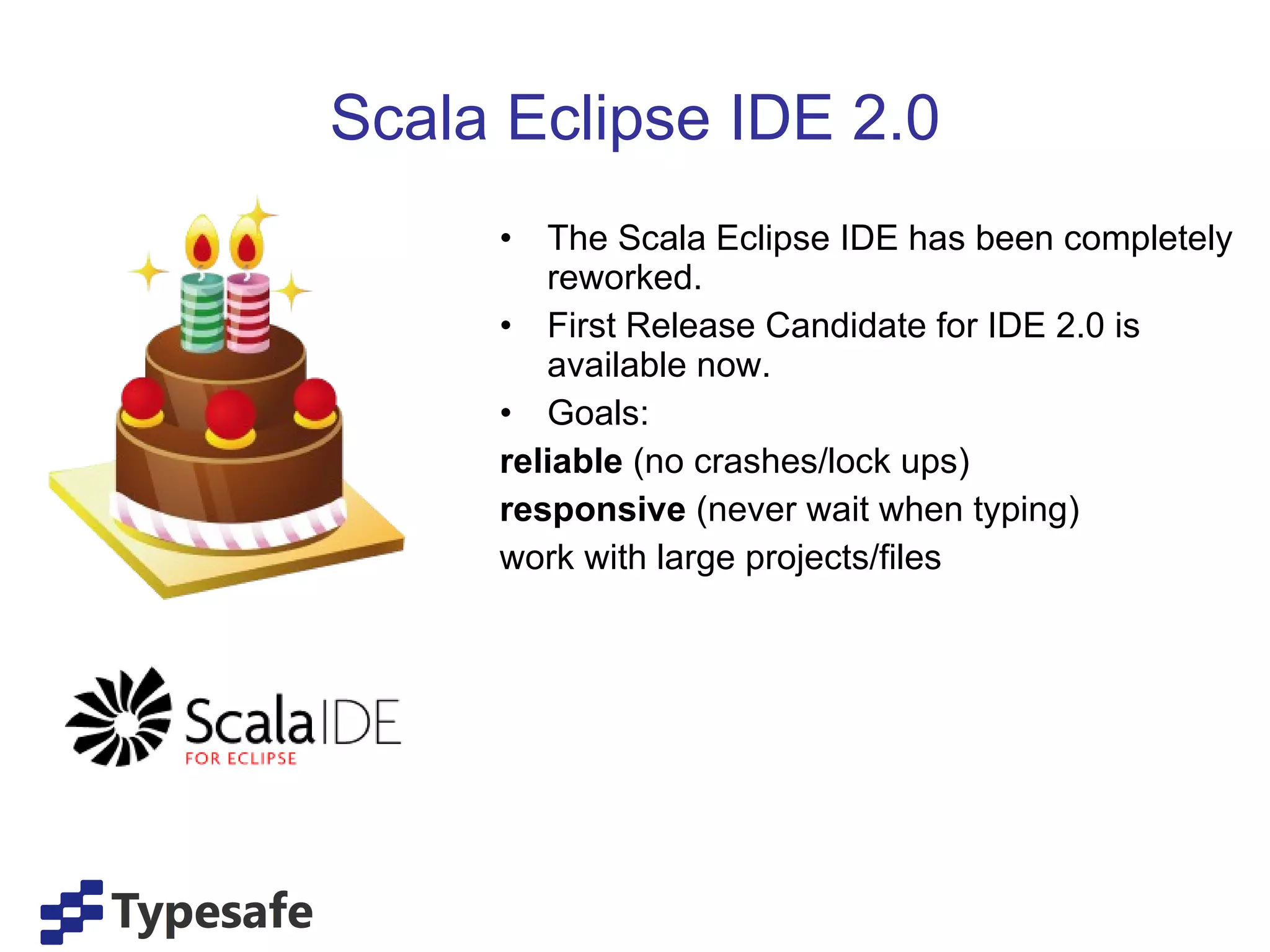

- Play Framework 2.0 will move to a Scala core while retaining Java support. The Scala IDE for Eclipse has been reworked for better reliability and responsiveness.

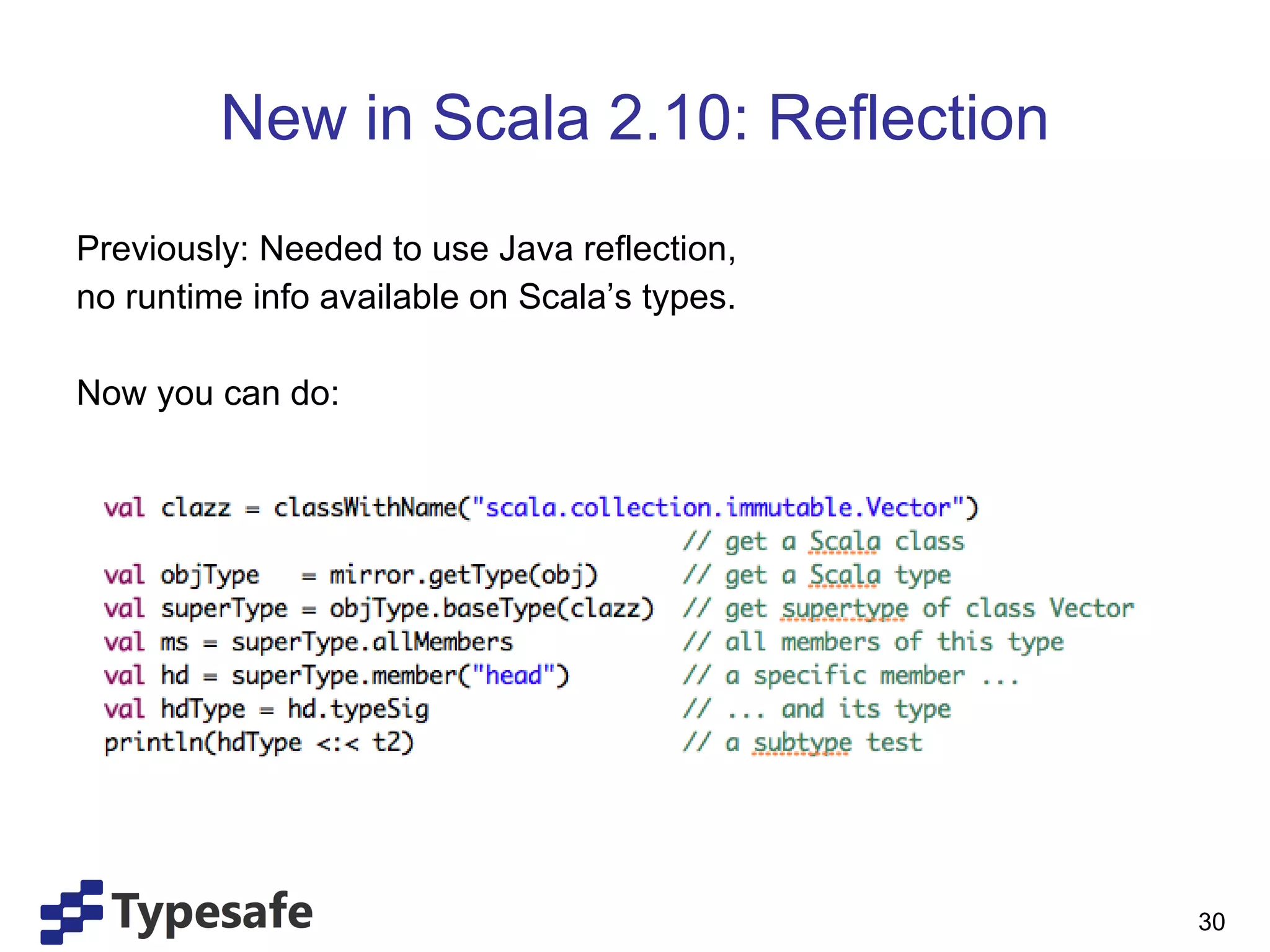

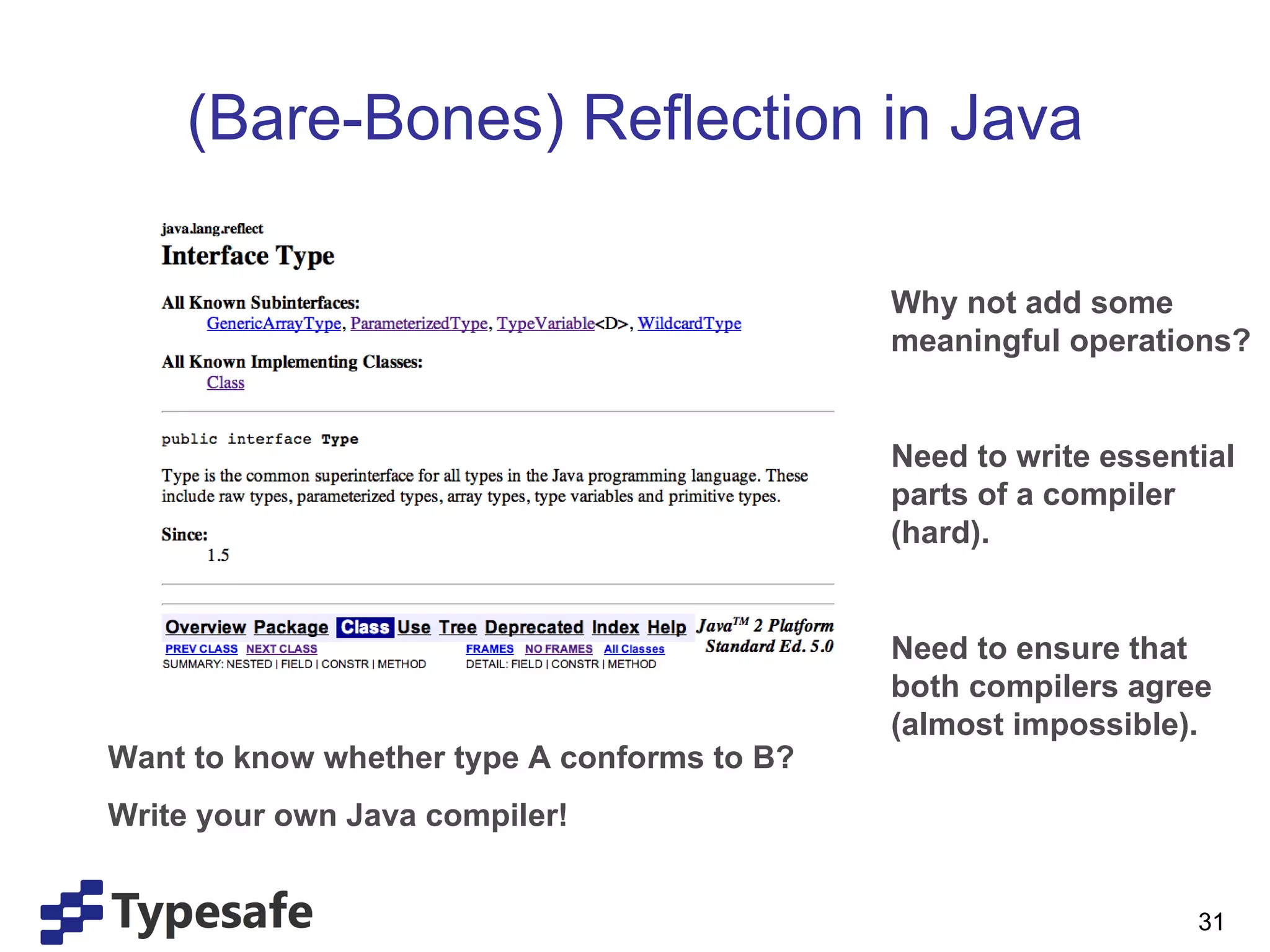

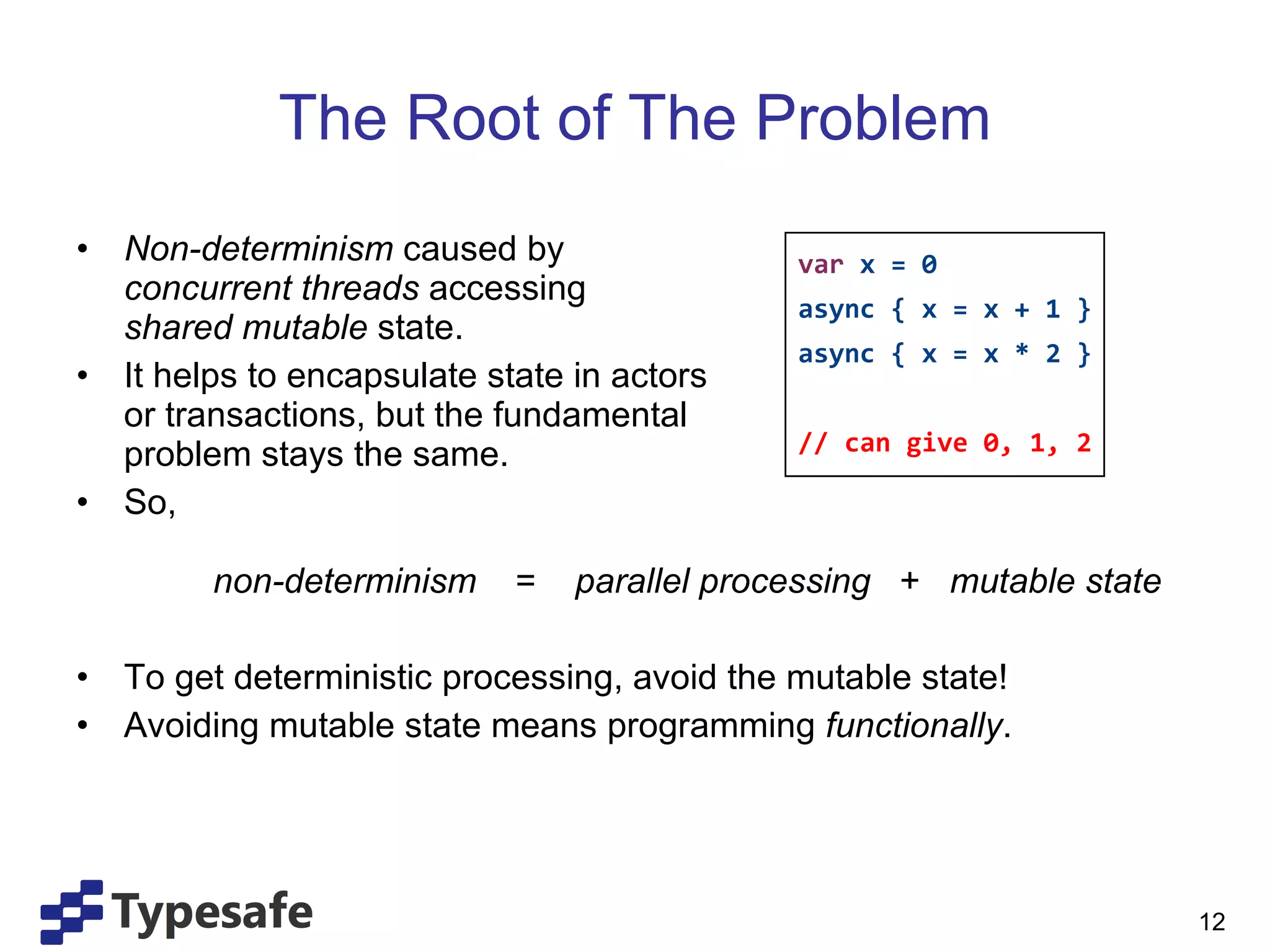

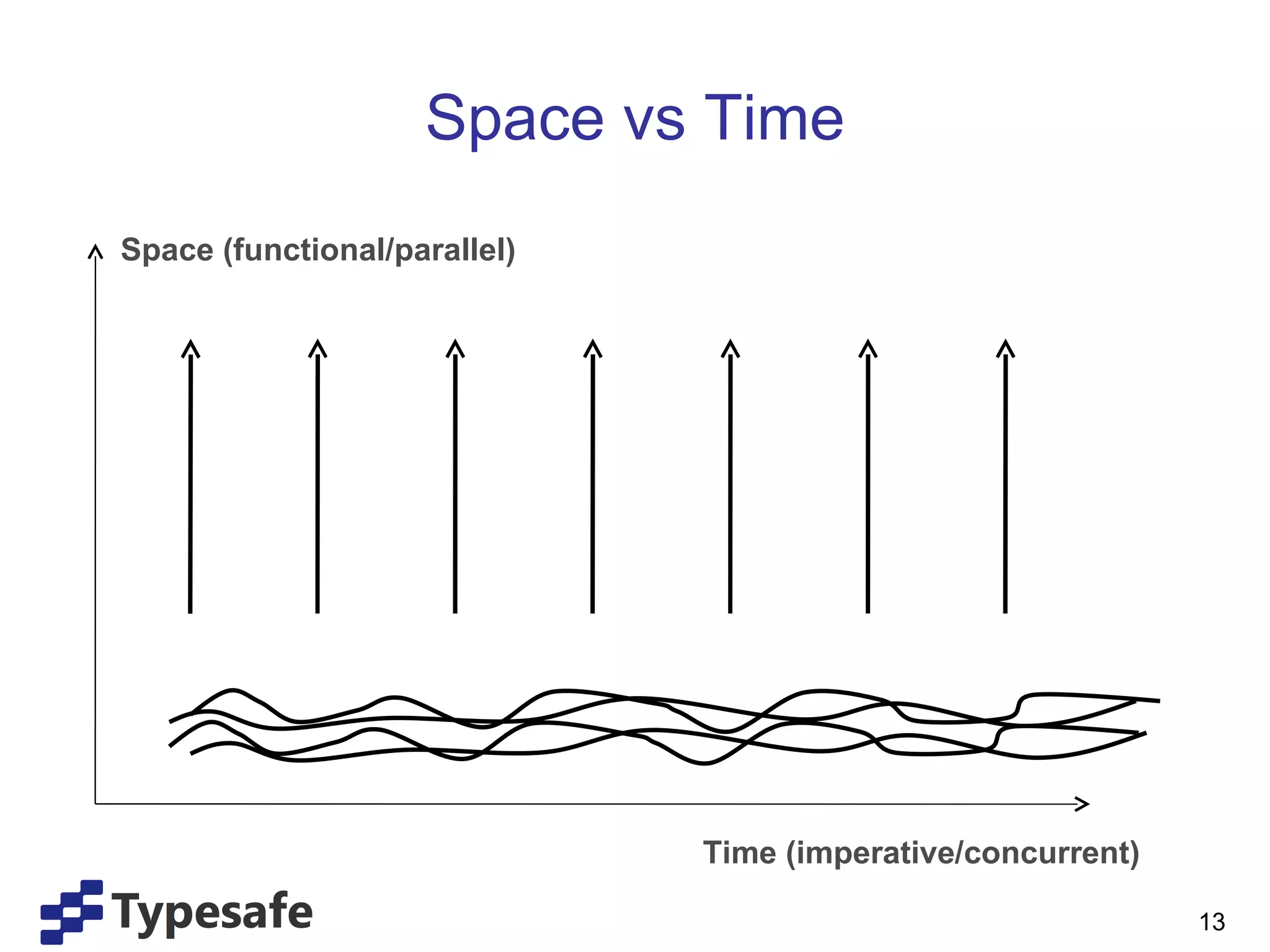

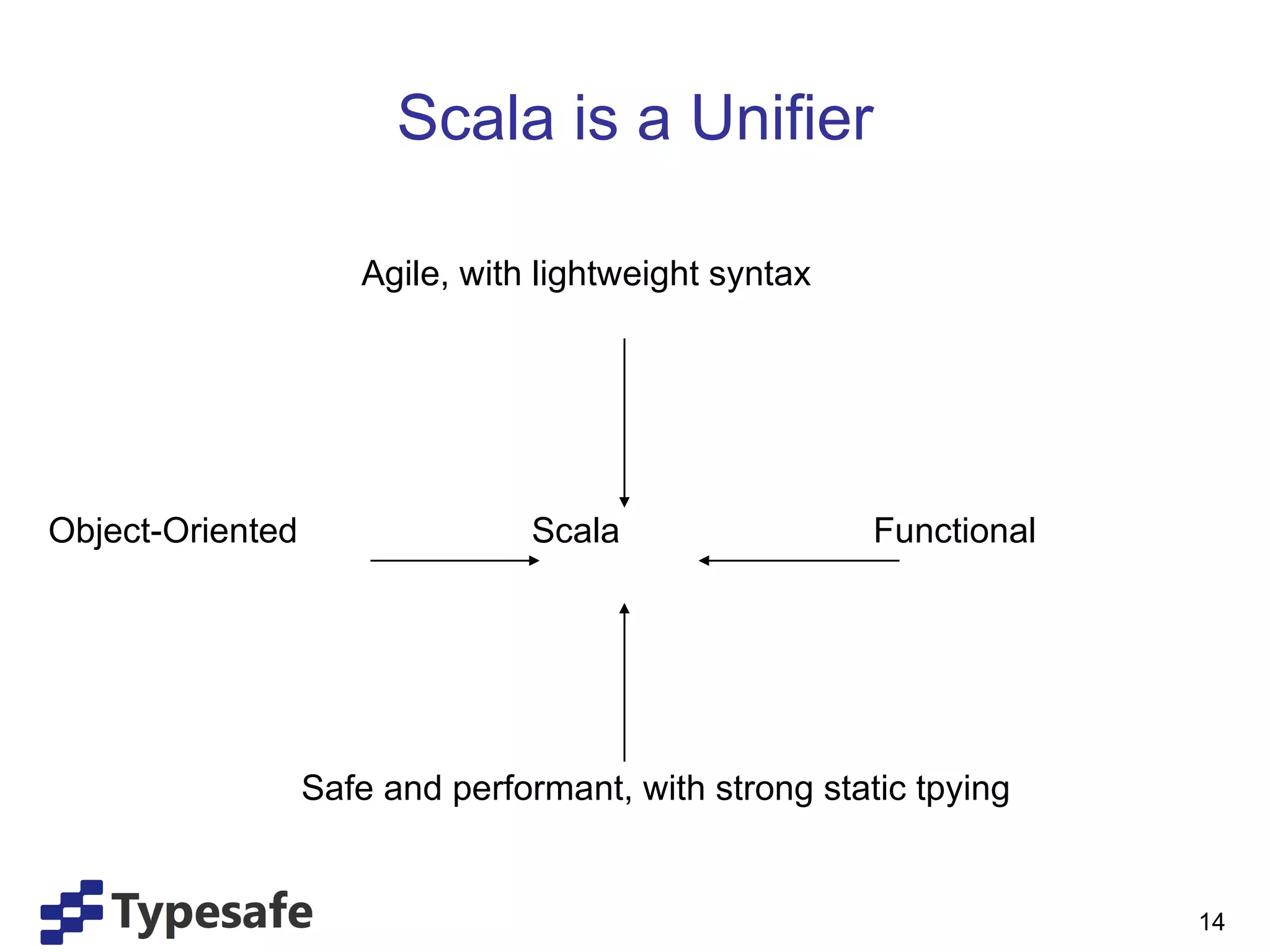

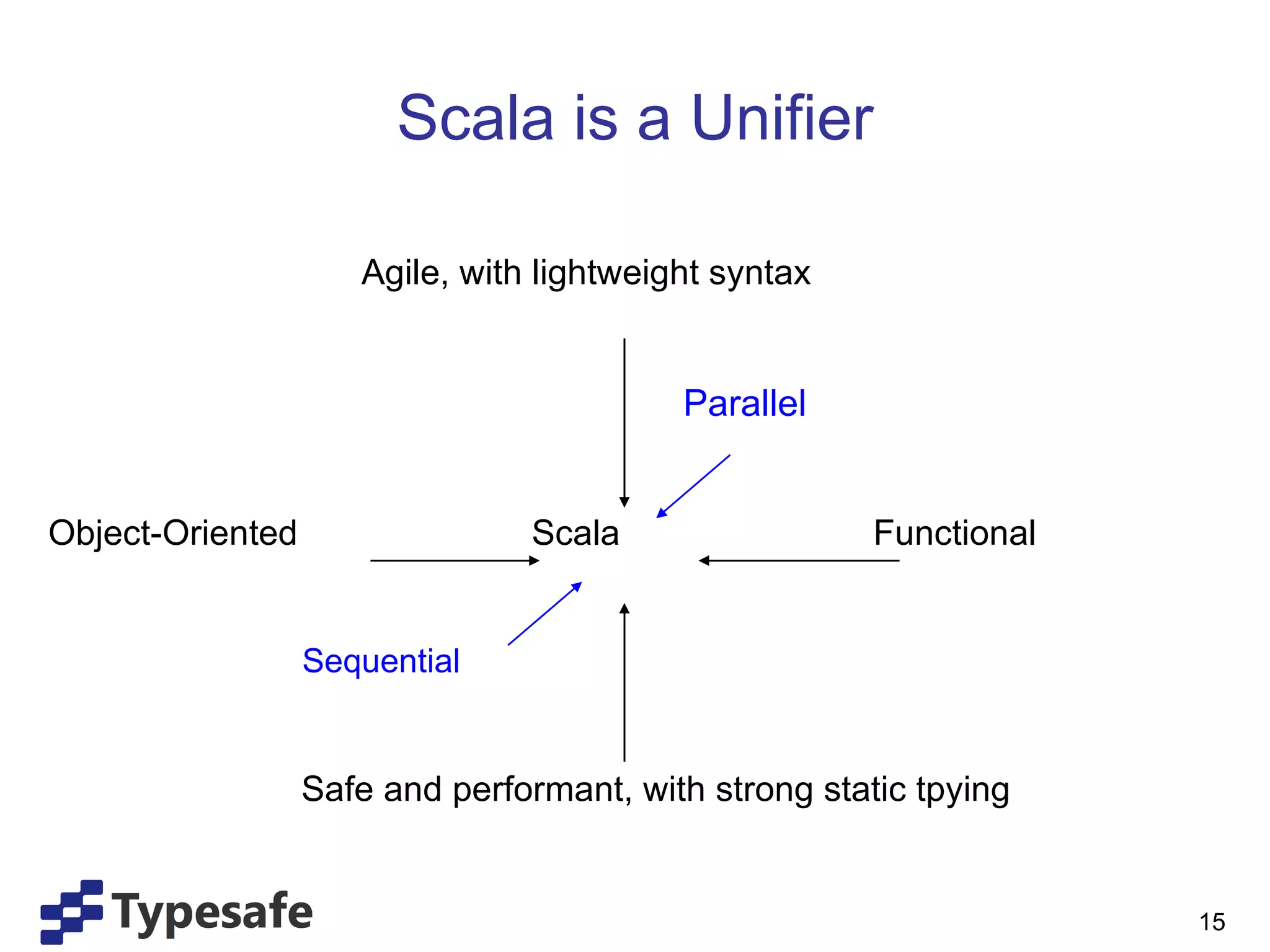

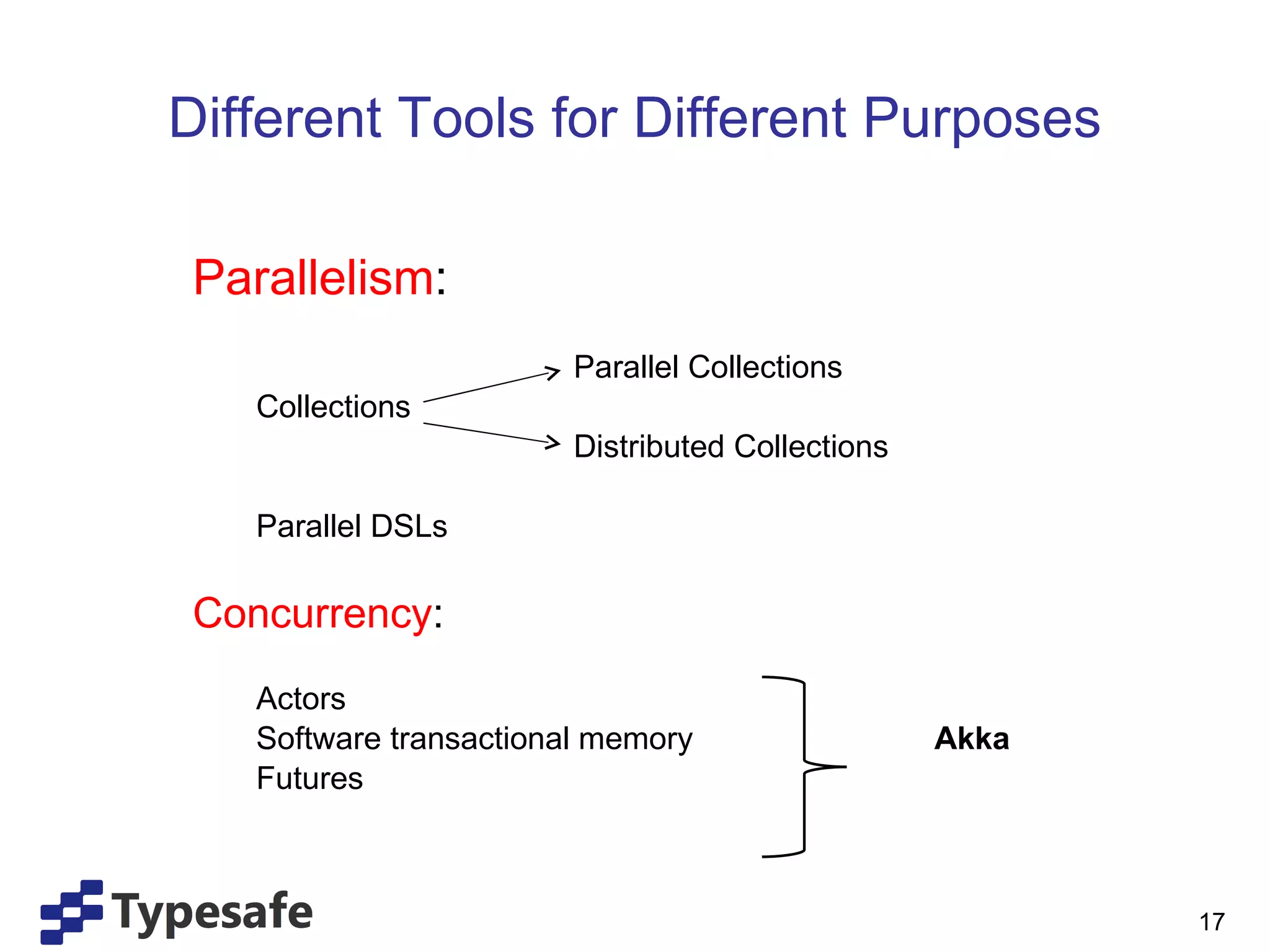

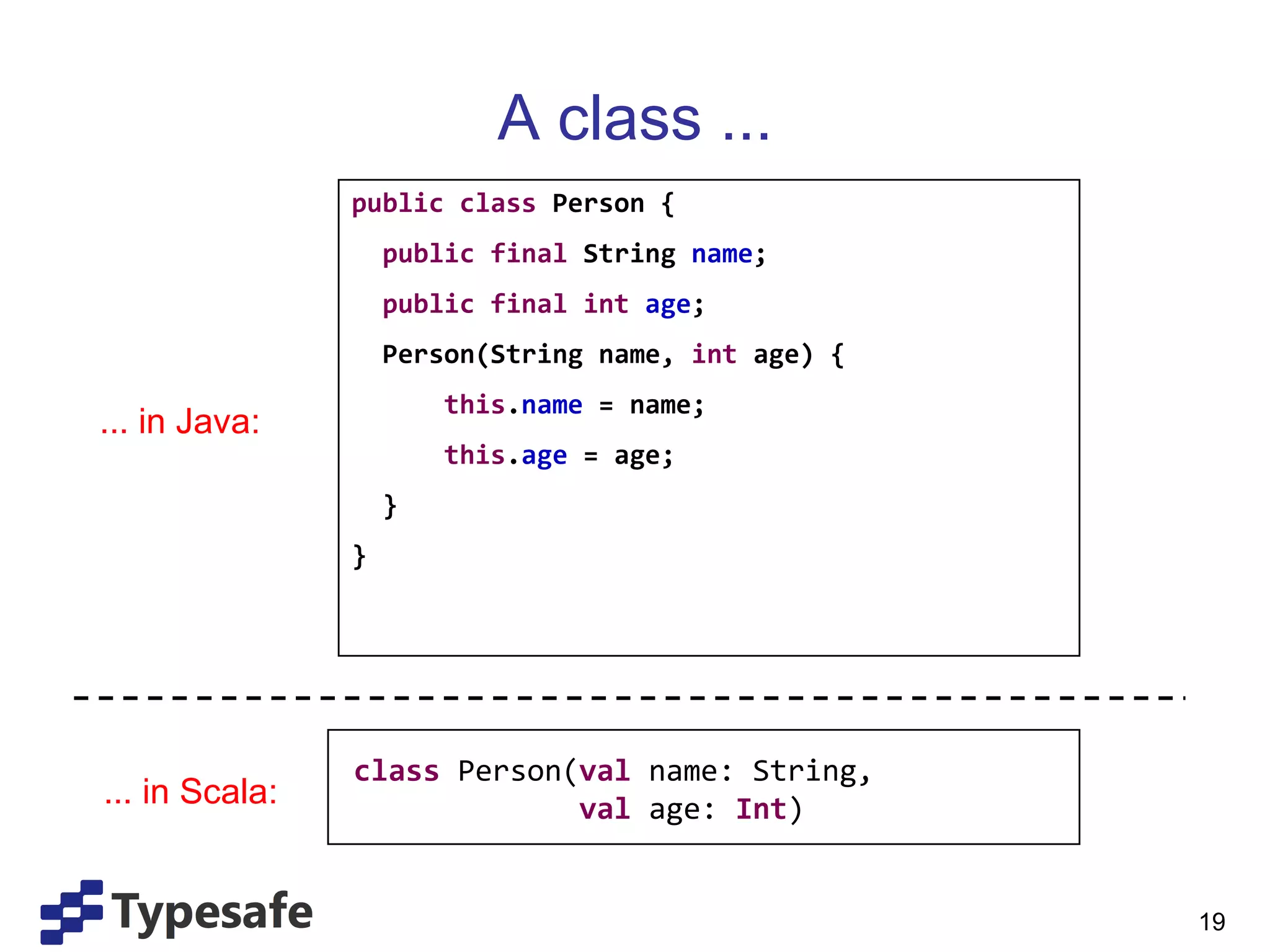

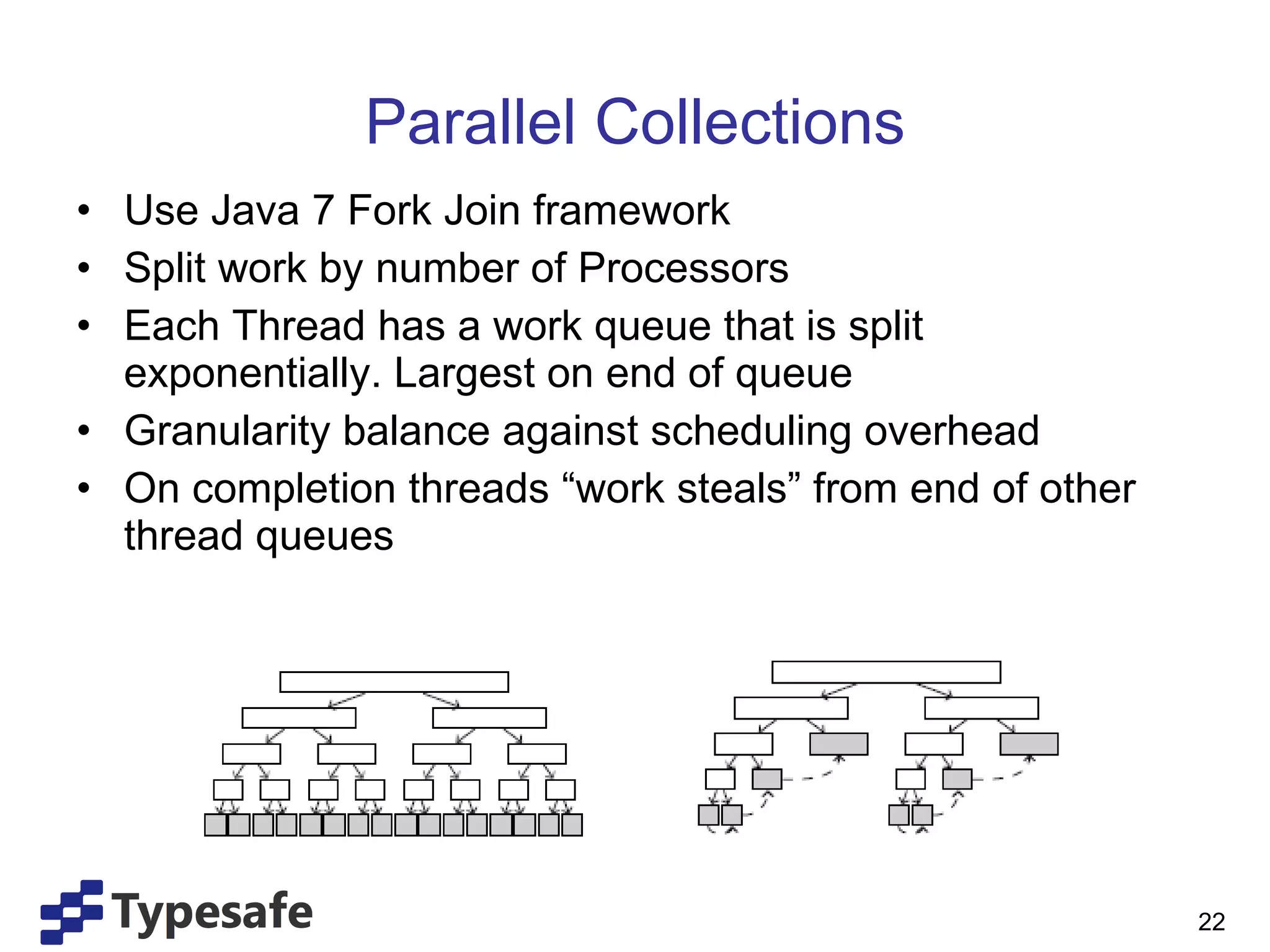

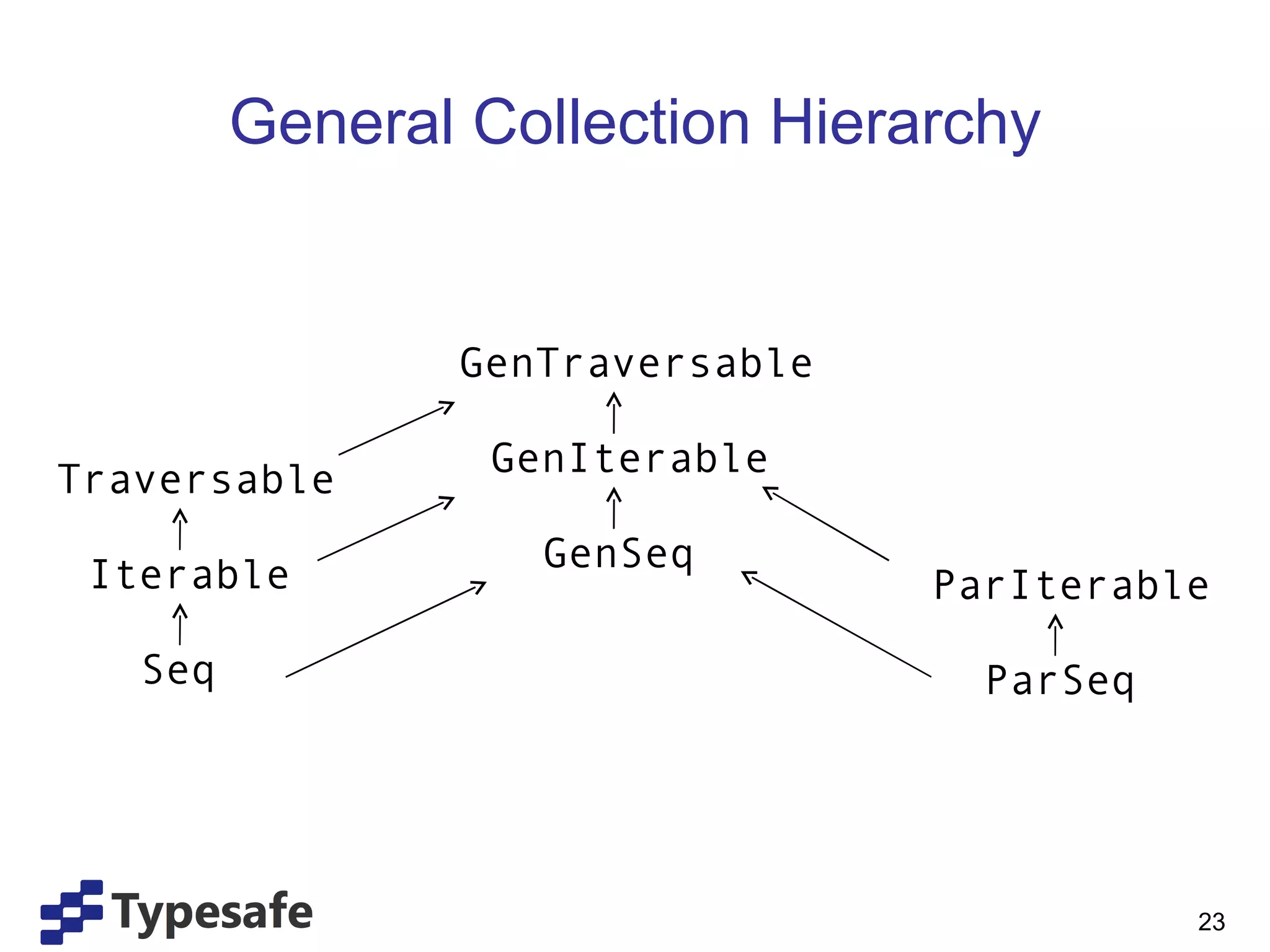

- Scala 2.10 will include a new reflection framework as well as IDE improvements. Avoiding mutable state makes both functional and parallel programming practical. Scala supports parallelism and concurrency through tools such as parallel collections and actors.

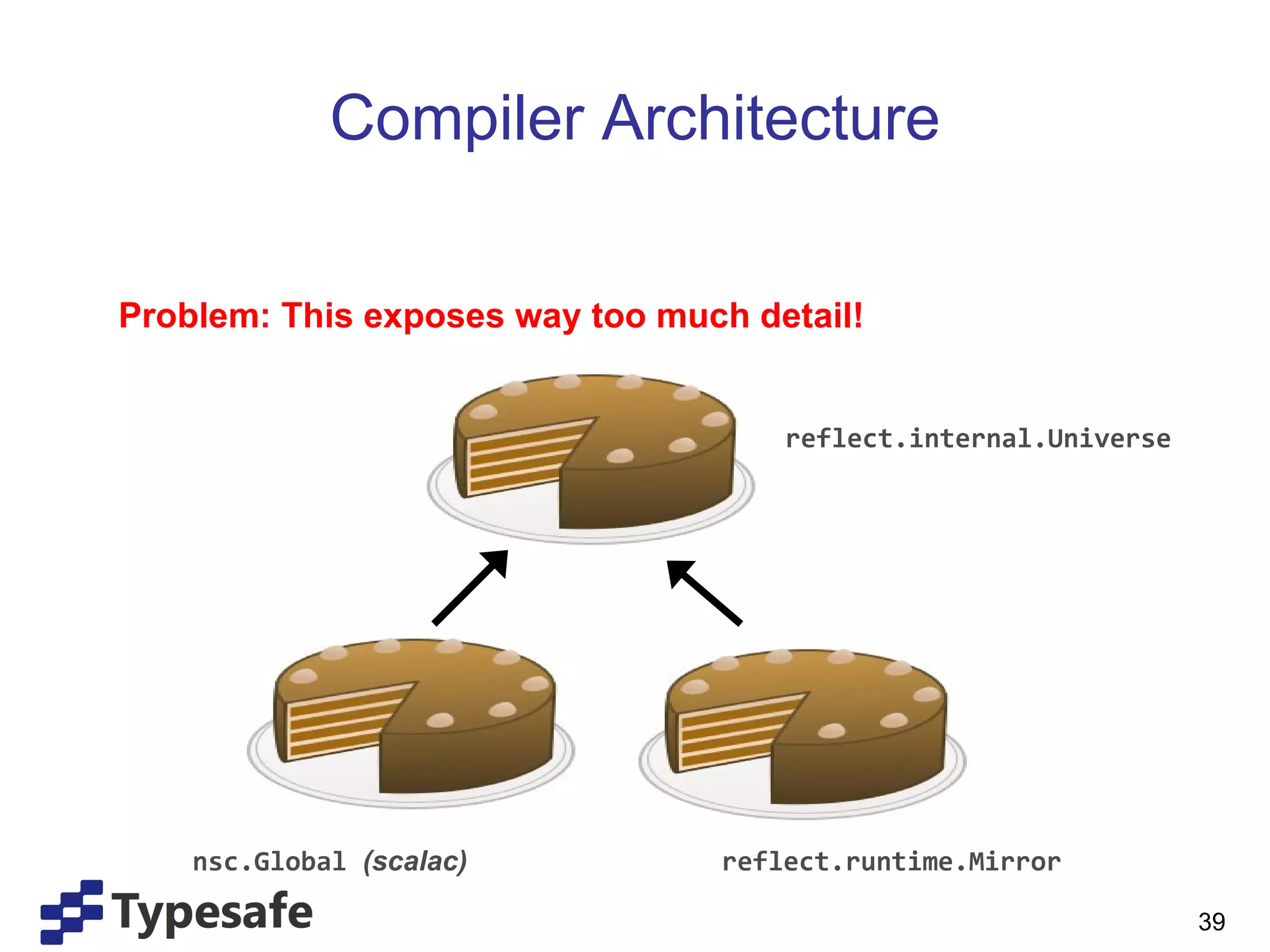

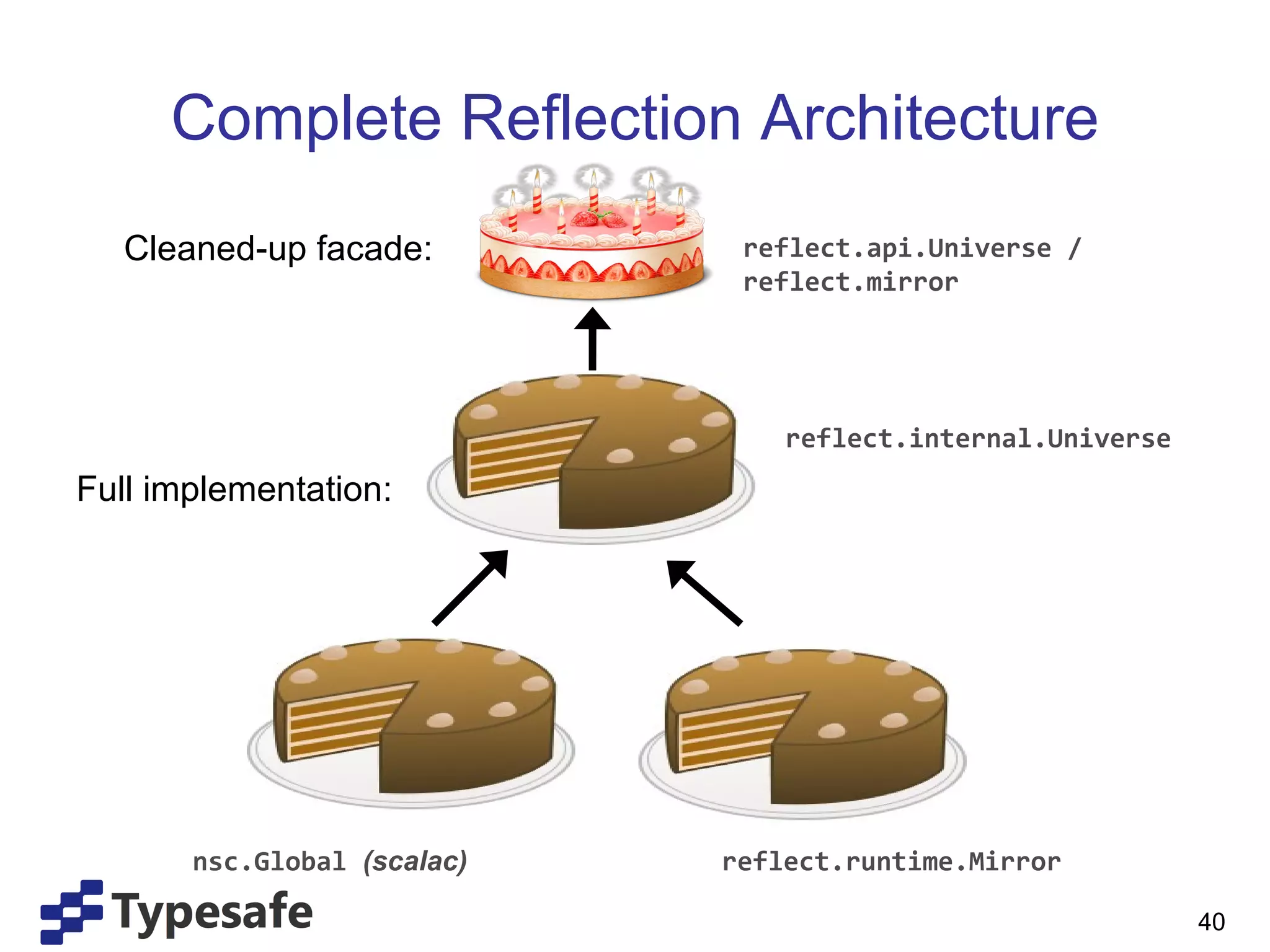

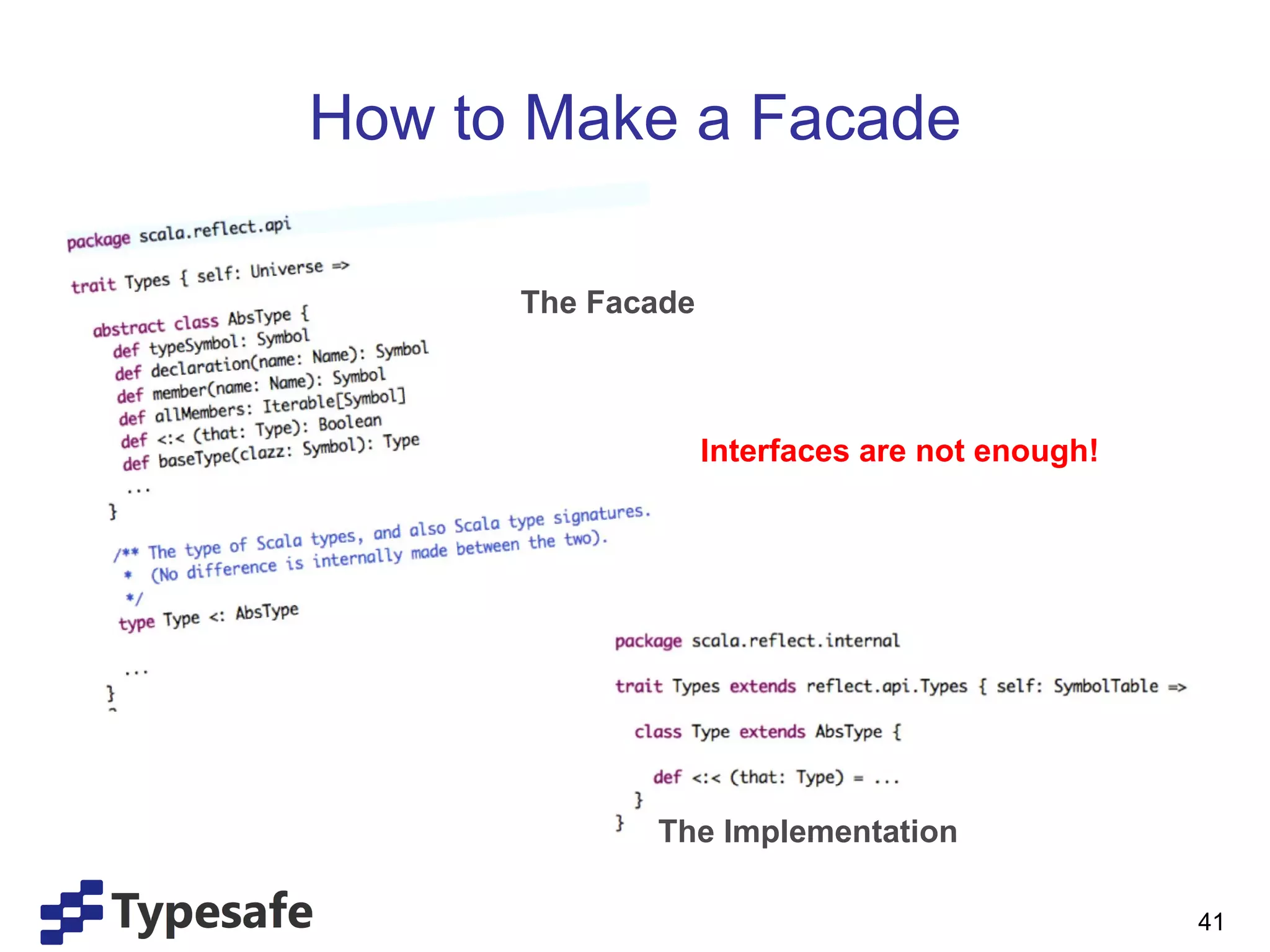

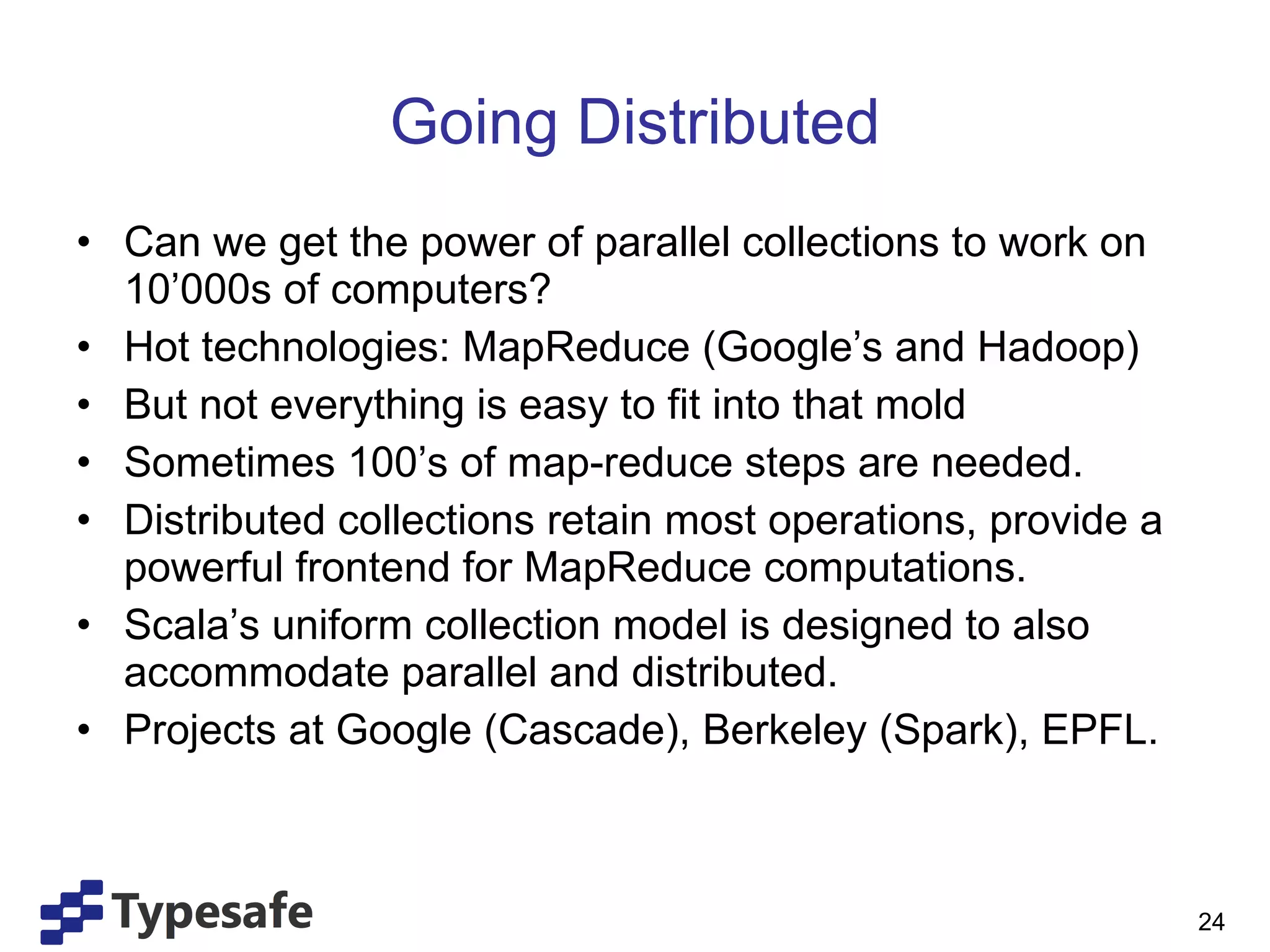

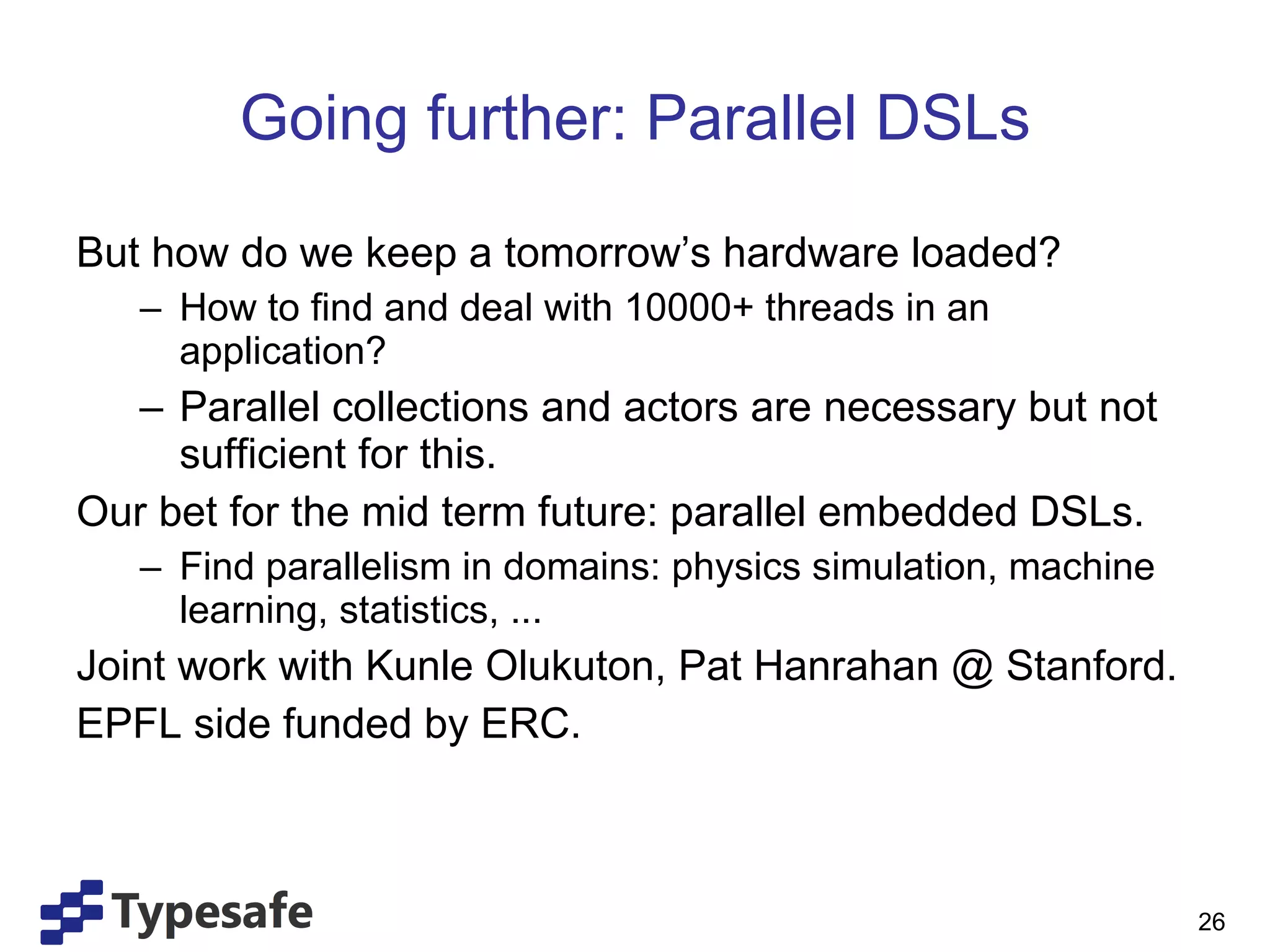

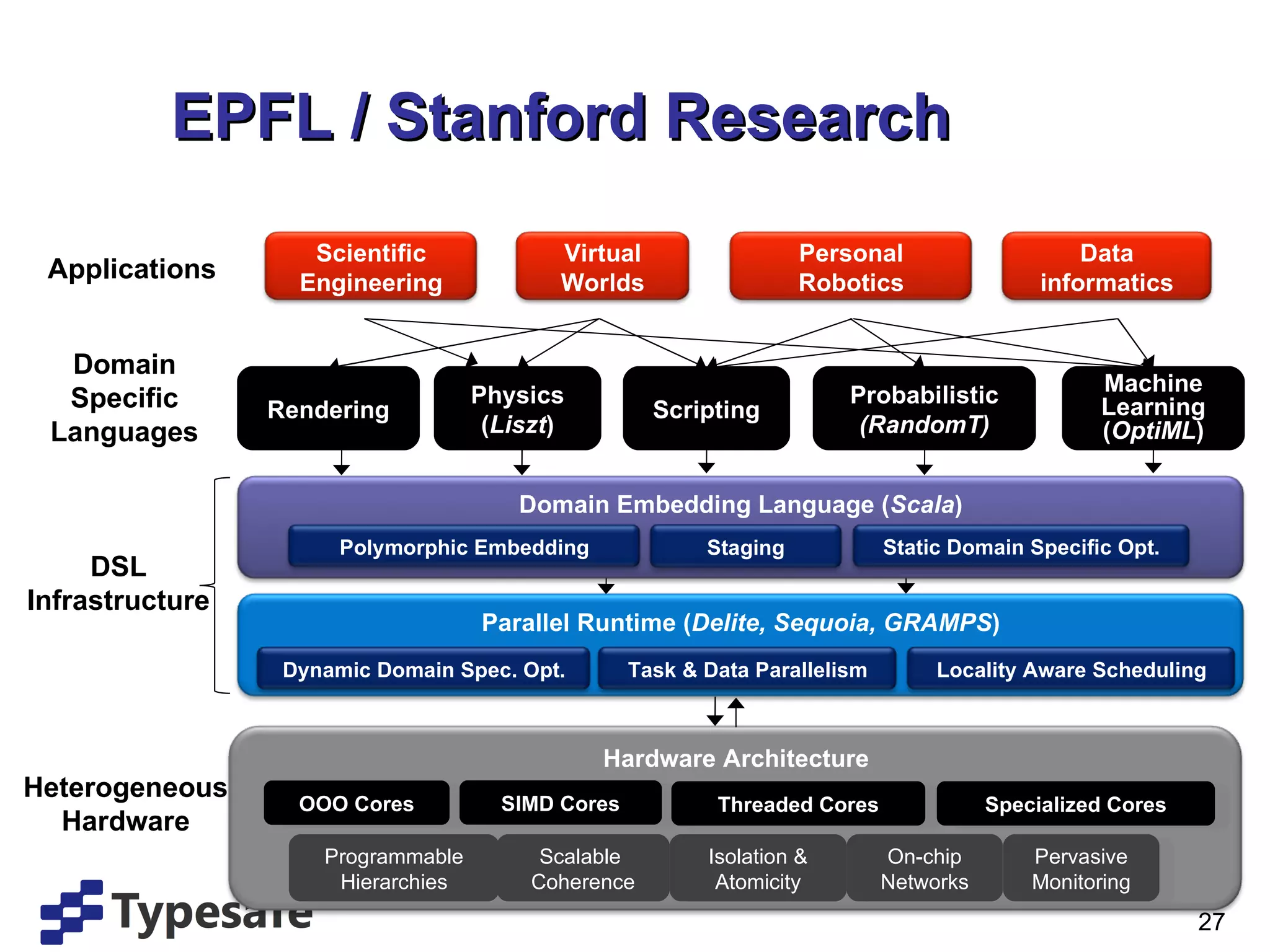

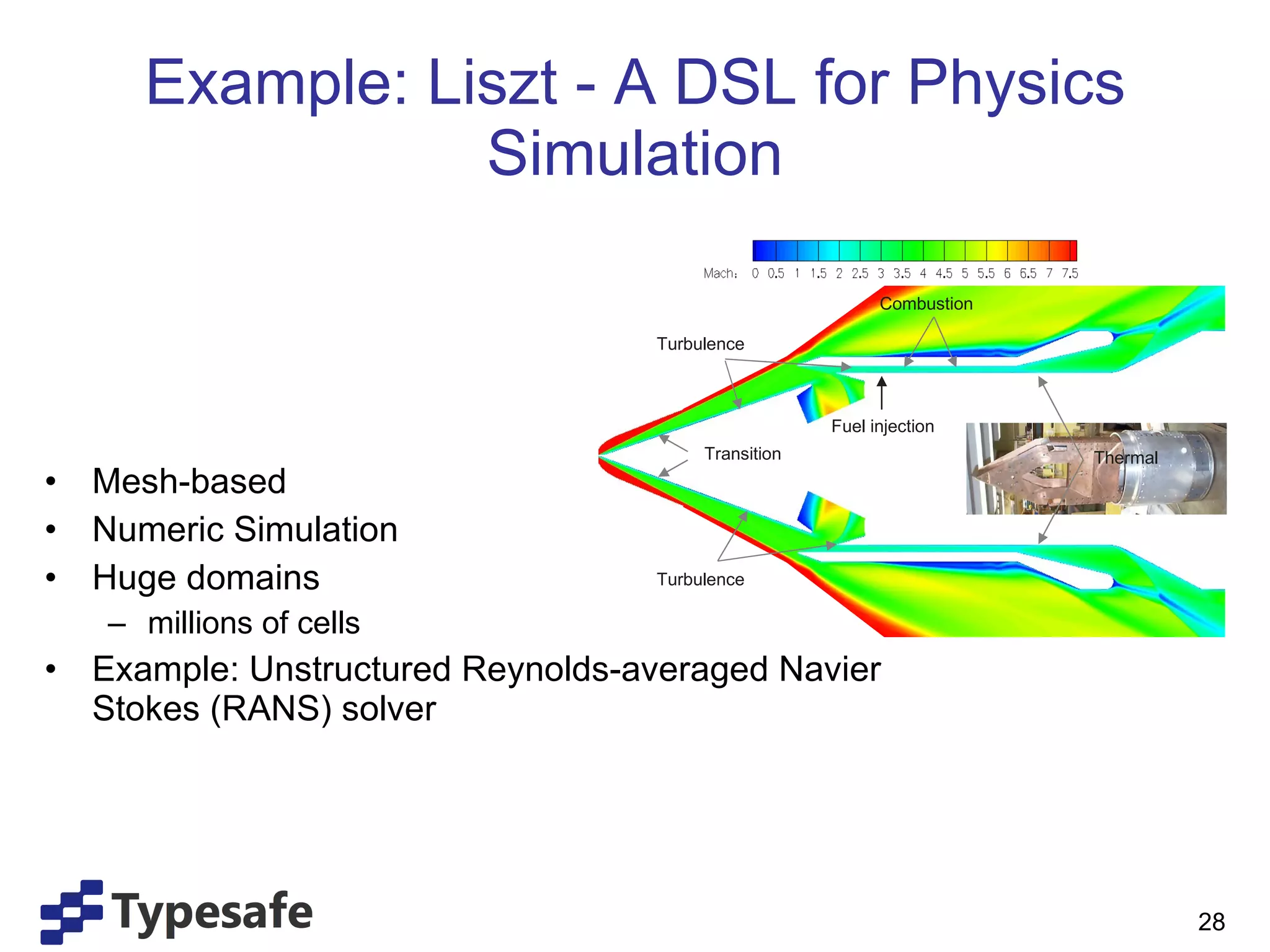

- Future work includes distributed collections, parallel domain-specific languages, and unifying the Scala compiler and reflection APIs.

![... and its usage import java.util.ArrayList; ... Person[] people ; Person[] minors ; Person[] adults ; { ArrayList<Person> minorsList = new ArrayList<Person>(); ArrayList<Person> adultsList = new ArrayList<Person>(); for ( int i = 0; i < people . length ; i++) ( people [i]. age < 18 ? minorsList : adultsList) .add( people [i]); minors = minorsList.toArray( people ); adults = adultsList.toArray( people ); } ... in Java: ... in Scala: val people: Array [Person] val (minors, adults) = people partition (_.age < 18) A simple pattern match An infix method call A function value](https://image.slidesharecdn.com/devoxx-111119085409-phpapp01/75/Devoxx-20-2048.jpg)
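The Scala half of the slide above can be written out as a small runnable sketch. The `Person` case class, its `name` field, and the sample data are assumptions made for illustration; the slide only shows that `Person` has an `age`.

```scala
// Hypothetical Person type: the slide only shows that it has an `age` field.
final case class Person(name: String, age: Int)

object PartitionExample {
  // Sample data, invented for this sketch.
  val people: Array[Person] = Array(
    Person("Ann", 12), Person("Bob", 34), Person("Cid", 17), Person("Dee", 18)
  )

  // `partition` is called in infix form with a function value as its argument.
  // It returns a pair, and the pattern on the left binds both halves at once.
  val (minors, adults) = people partition (_.age < 18)

  def main(args: Array[String]): Unit = {
    println(minors.map(_.name).mkString(", ")) // prints "Ann, Cid"
    println(adults.map(_.name).mkString(", ")) // prints "Bob, Dee"
  }
}
```

Compared with the Java version on the slide, no temporary lists or index loop are needed, and neither result is ever observed in a half-built state.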

![Going Parallel ? (for now) ... in Java: ... in Scala: val people: Array [Person] val (minors, adults) = people .par partition (_.age < 18)](https://image.slidesharecdn.com/devoxx-111119085409-phpapp01/75/Devoxx-21-2048.jpg)
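On the slide, the only change is the added `.par`. That call is built into the standard library in Scala 2.9 through 2.12; from Scala 2.13 on it lives in the separate `scala-parallel-collections` module. To stay runnable with the standard library alone, the sketch below does not use `.par`. Instead it imitates the idea with `Future`s: split the input into chunks, partition each chunk concurrently, and concatenate the results in order. The helper `parPartition`, its `chunkSize` parameter, and the `Person` type are hypothetical names introduced here, and this is not how parallel collections are actually implemented.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

// Hypothetical Person type: the slide only shows that it has an `age` field.
final case class Person(name: String, age: Int)

object ParallelPartitionSketch {
  // Hypothetical helper, not part of any Scala library.
  def parPartition[A](xs: Vector[A], chunkSize: Int)(p: A => Boolean): (Vector[A], Vector[A]) = {
    require(chunkSize > 0, "chunkSize must be positive")
    // Each chunk is partitioned in its own Future; no mutable state is shared.
    val chunks: Vector[Future[(Vector[A], Vector[A])]] =
      xs.grouped(chunkSize).toVector.map(chunk => Future(chunk.partition(p)))
    // Future.sequence preserves chunk order, so the result equals xs.partition(p).
    val all = Await.result(Future.sequence(chunks), 10.seconds)
    (all.flatMap(_._1), all.flatMap(_._2))
  }

  def main(args: Array[String]): Unit = {
    val people = Vector(Person("Ann", 12), Person("Bob", 34), Person("Cid", 17), Person("Dee", 18))
    val (minors, adults) = parPartition(people, chunkSize = 2)(_.age < 18)
    println(minors.map(_.name).mkString(", ")) // prints "Ann, Cid"
    println(adults.map(_.name).mkString(", ")) // prints "Bob, Dee"
  }
}
```

The predicate is a pure function and every chunk produces its own immutable result, which is why the work can be split without locks. That is the property the `.par` one-liner relies on as well.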

![Liszt as Virtualized Scala val // calculating scalar convection (Liszt) val Flux = new Field[Cell,Float] val Phi = new Field[Cell,Float] val cell_volume = new Field[Cell,Float] val deltat = .001 ... untilconverged { for(f <- interior_faces) { val flux = calc_flux(f) Flux(inside(f)) -= flux Flux(outside(f)) += flux } for(f <- inlet_faces) { Flux(outside(f)) += calc_boundary_flux(f) } for(c <- cells(mesh)) { Phi(c) += deltat * Flux(c) /cell_volume(c) } for(f <- faces(mesh)) Flux(f) = 0.f } AST Hardware DSL Library Optimisers Generators … … Schedulers GPU, Multi-Core, etc](https://image.slidesharecdn.com/devoxx-111119085409-phpapp01/75/Devoxx-29-2048.jpg)