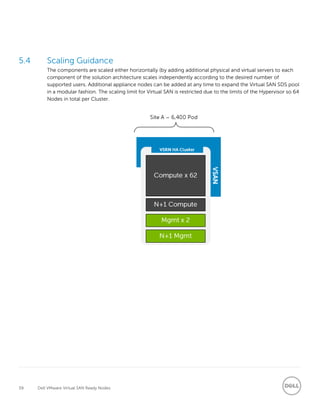

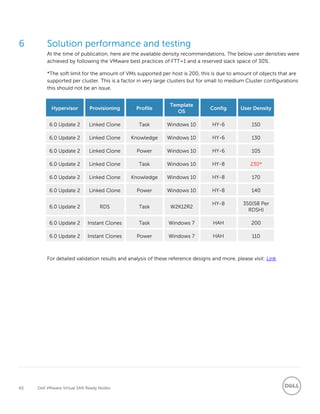

This document provides a reference architecture for implementing a Virtual SAN Ready Node environment using Dell hardware and VMware software. It describes the physical and logical architecture, including the networking, storage, and server node components, and recommends specific hardware such as Dell R730 servers and Dell networking switches. The architecture supports VMware Horizon, including hybrid deployments with Horizon Air.