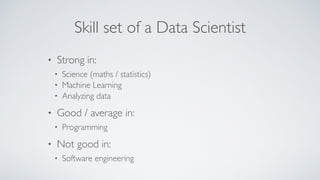

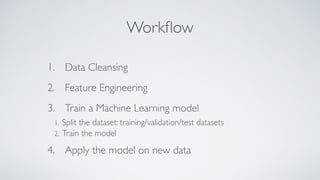

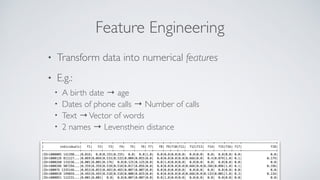

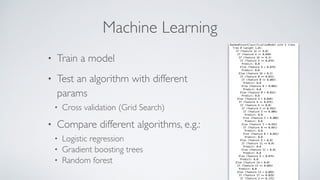

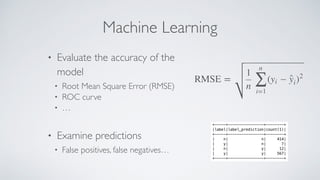

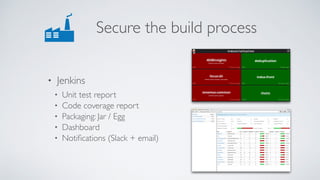

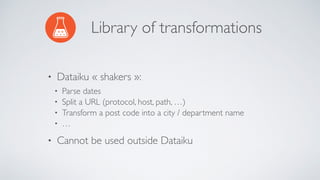

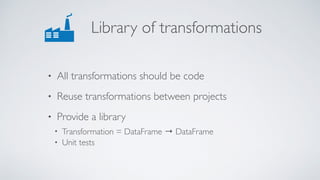

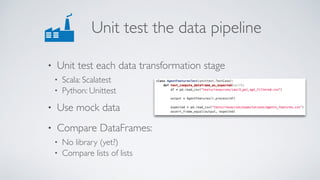

The document outlines the intersection of data science and software development within a data innovation lab at a large insurance company, emphasizing the roles and skills of data scientists and software developers. It details the workflow of a typical data science project, including data cleansing, feature engineering, and machine learning model training, while presenting strategies for effective collaboration and code management. Key recommendations include utilizing a centralized data store, adopting version control practices, automating processes, and implementing testing and packaging to enhance productivity and reliability.