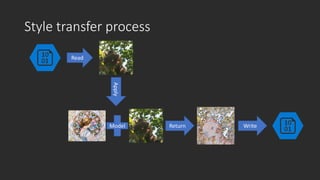

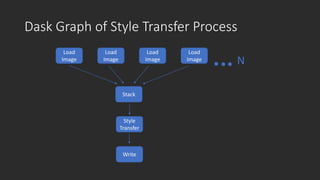

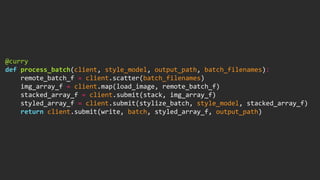

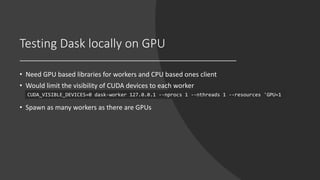

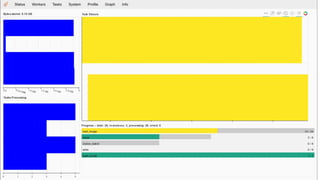

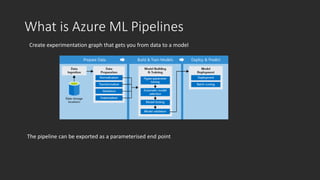

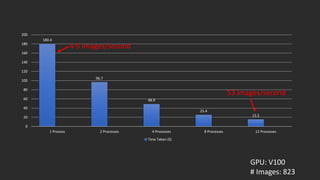

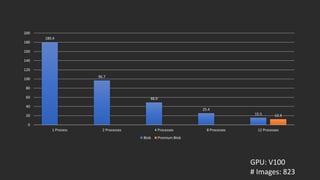

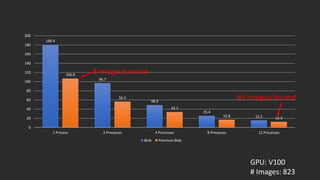

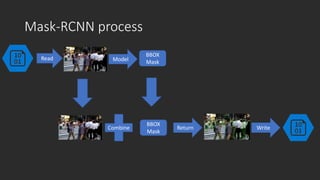

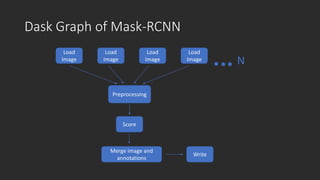

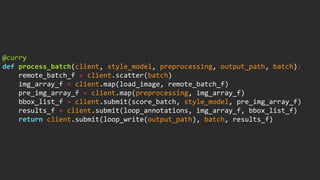

This document describes how to use Dask for GPU-based batch scoring of computer vision workloads. It covers two use cases: style transfer, and Mask R-CNN for object detection and segmentation. With Dask, the same Python code runs locally or distributed across a cluster, and Dask handles data parallelism and orchestration without additional code. In performance tests using multiple GPUs with data in Azure Blob storage, Dask processed over 50 images per second for style transfer and over 8 images per second for Mask R-CNN. The document also demonstrates deploying Dask on Kubernetes and running it within an Azure Machine Learning pipeline to batch score computer vision models at scale.
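
To make the "same code locally or on a cluster" idea concrete, here is a minimal sketch using `dask.delayed`. It is not the code from the slides: `score_image`, `score_batch`, `batch_score`, and the image paths are hypothetical placeholders, and the "model" is a trivial stand-in for GPU inference such as style transfer or Mask R-CNN.

```python
import dask
from dask import delayed


def score_image(path):
    # Hypothetical placeholder for model inference on one image.
    # A real version would load the image and run it through a GPU model.
    return {"path": path, "score": len(path) % 7}


def score_batch(paths):
    # Score one batch; each batch becomes a single Dask task.
    return [score_image(p) for p in paths]


def batch_score(paths, batch_size=4, scheduler="threads"):
    # Split the inputs into fixed-size batches.
    batches = [paths[i:i + batch_size] for i in range(0, len(paths), batch_size)]
    # Build one lazy task per batch; nothing runs until compute() is called.
    tasks = [delayed(score_batch)(batch) for batch in batches]
    # Run the tasks. With scheduler=None, Dask picks its default, which is a
    # dask.distributed Client if one has been created in this process.
    per_batch = dask.compute(*tasks, scheduler=scheduler)
    # Flatten the per-batch results back into one list, in input order.
    return [result for batch in per_batch for result in batch]


image_paths = [f"images/img_{i:03d}.jpg" for i in range(10)]  # made-up paths
results = batch_score(image_paths)
print(len(results), results[0]["path"])
```

Here the tasks run on local threads. To run the same function on a cluster, one would typically create a `dask.distributed.Client` pointed at the scheduler and call `batch_score(image_paths, scheduler=None)`, leaving the scoring code unchanged.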