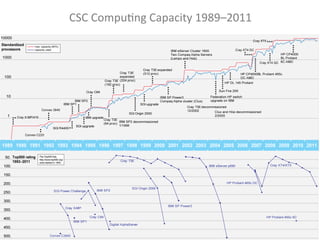

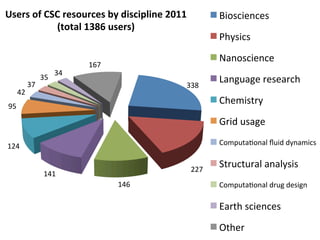

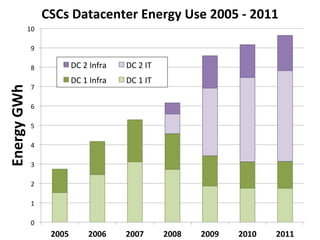

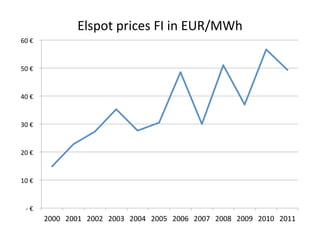

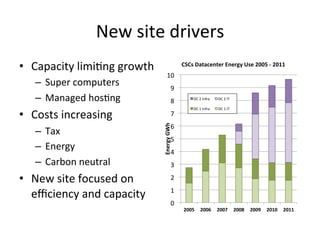

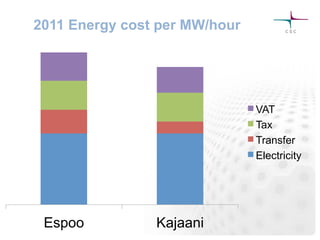

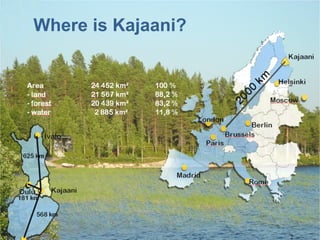

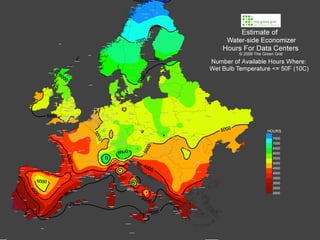

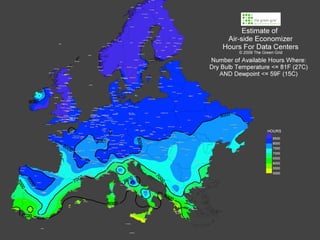

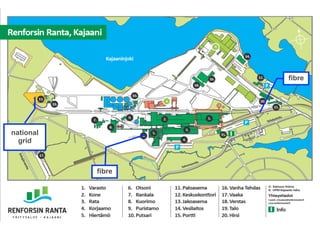

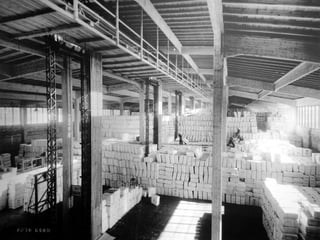

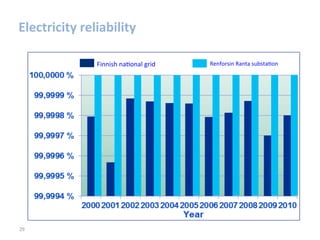

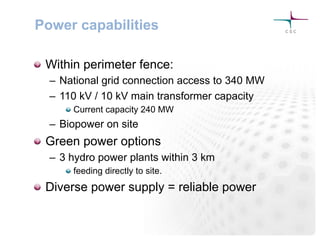

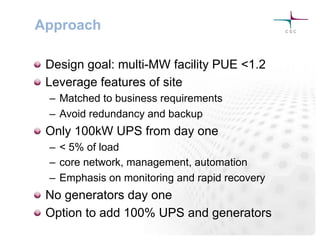

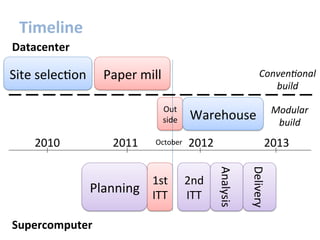

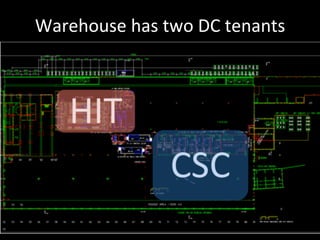

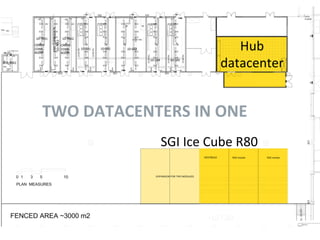

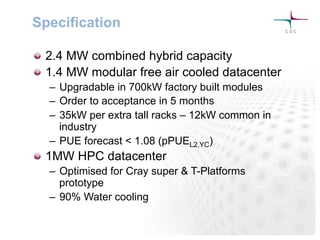

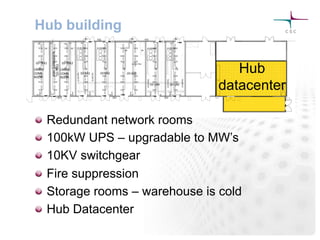

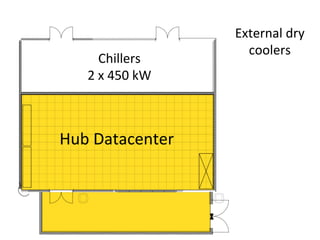

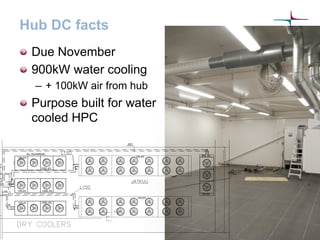

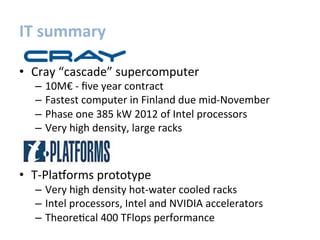

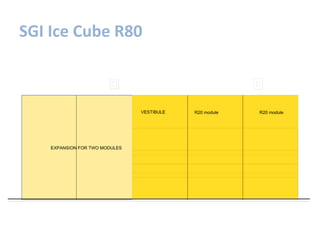

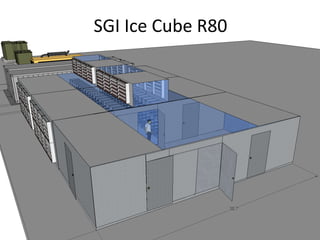

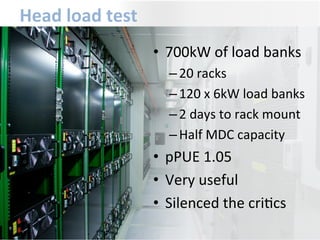

This document is a case study of CSC's Kajaani Datacenter project. To meet growing capacity needs, contain rising costs, and advance its sustainability goals, CSC built a new 1.4 MW modular datacenter and a 1 MW high-performance computing (HPC) datacenter in Kajaani, Finland. The project took advantage of the site's access to abundant, reliable renewable energy. Construction involved renovating a paper warehouse, installing prefabricated modular datacenter units, and adding a purpose-built liquid cooling system for high-density computing. The main lesson learned was the importance of thorough planning and of close integration across the technical systems.