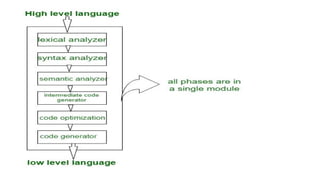

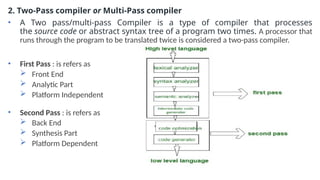

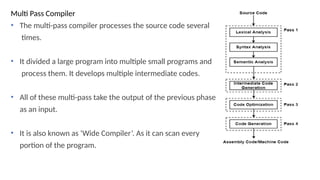

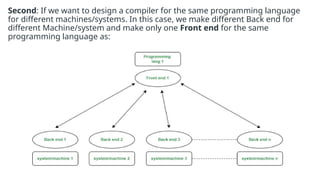

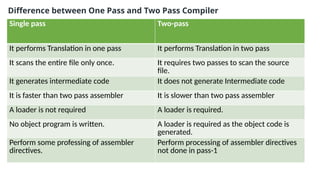

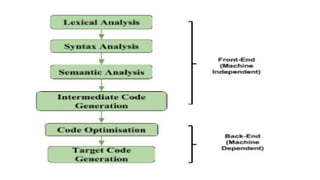

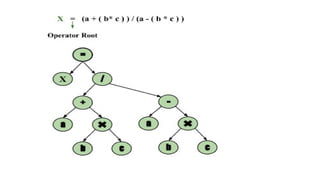

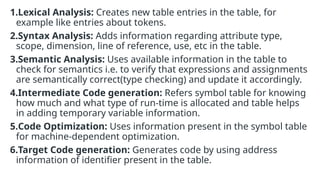

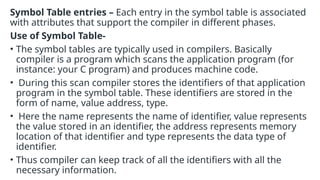

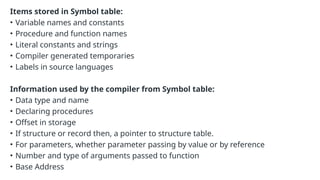

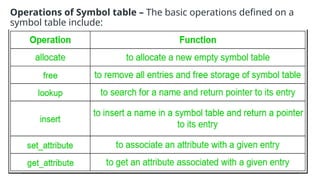

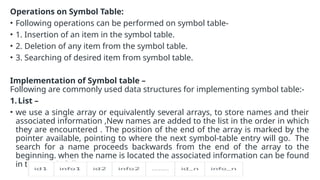

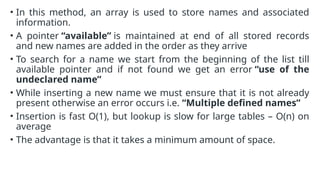

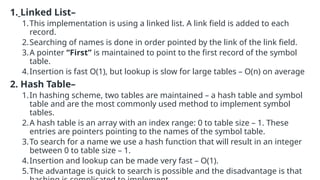

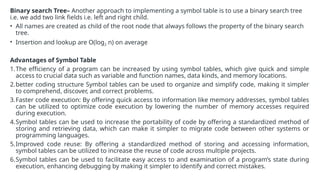

This document gives an overview of compiler construction. It covers the two kinds of compiler passes, single-pass and multi-pass, describing how each works along with its advantages and disadvantages. It then explains intermediate code generation, its benefits such as portability and easier optimization, and common intermediate representations such as three-address code and syntax trees. Finally, it discusses the role of the symbol table in a compiler: its structure, operations, advantages, and applications in error checking and code generation.
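
As a rough illustration of two ideas named in the summary, the sketch below lowers a small syntax tree into three-address code and uses a symbol table to reject undeclared identifiers. It is a minimal sketch under stated assumptions, not the slides' own method: the names `SymbolTable`, `to_three_address`, the tuple-based tree encoding, and the `t1`, `t2` temporary naming are all hypothetical choices made for this example.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class SymbolTable:
    """Hypothetical symbol table: maps identifier names to declared types."""
    entries: dict = field(default_factory=dict)

    def insert(self, name, type_):
        # Error checking: an identifier may be declared only once.
        if name in self.entries:
            raise NameError(f"redeclaration of {name!r}")
        self.entries[name] = type_

    def lookup(self, name):
        # Error checking: an identifier must be declared before use.
        if name not in self.entries:
            raise NameError(f"undeclared identifier {name!r}")
        return self.entries[name]


def to_three_address(tree, symbols):
    """Lower a syntax tree to a list of three-address instructions.

    Assumed tree encoding: an identifier is a str, a constant is a number,
    and a binary operation is a tuple (op, left, right).
    Returns (instructions, name_holding_the_result).
    """
    code = []
    temps = count(1)  # generates t1, t2, ...

    def visit(node):
        if isinstance(node, tuple):
            op, left, right = node
            lhs = visit(left)
            rhs = visit(right)
            temp = f"t{next(temps)}"
            # Each instruction has at most one operator and three addresses.
            code.append(f"{temp} = {lhs} {op} {rhs}")
            return temp
        if isinstance(node, str):
            symbols.lookup(node)  # raises NameError if undeclared
            return node
        return str(node)  # numeric constant

    result = visit(tree)
    return code, result


symbols = SymbolTable()
for name in ("a", "b", "c"):
    symbols.insert(name, "int")

# Syntax tree for the expression: a + b * c
tree = ("+", "a", ("*", "b", "c"))
code, result = to_three_address(tree, symbols)
for line in code:
    print(line)
```

For the expression `a + b * c`, the inner multiplication is emitted first into a temporary, and the addition then uses that temporary as an operand. Passing a tree that mentions an identifier missing from the table, such as `("+", "a", "x")`, raises `NameError`, which is one simple form of the error checking a symbol table supports.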