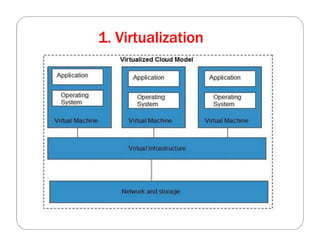

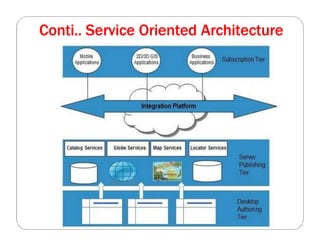

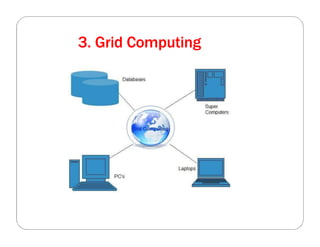

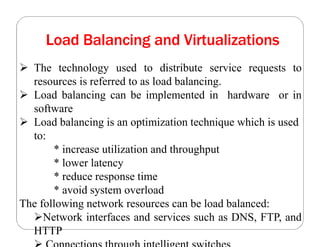

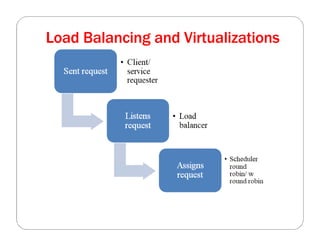

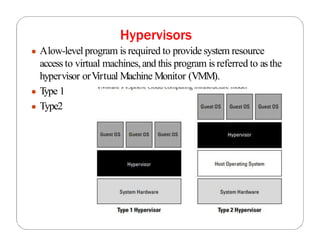

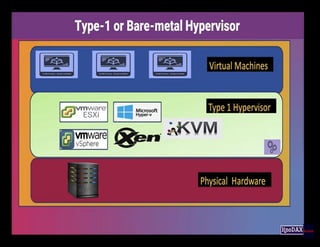

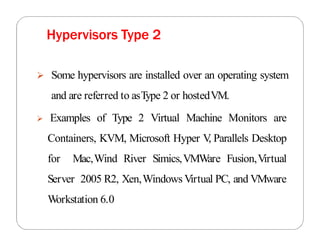

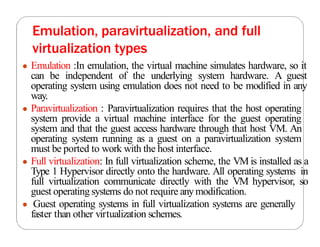

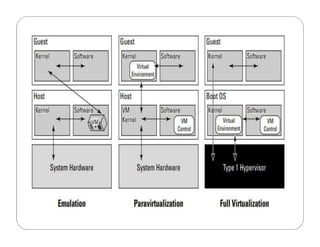

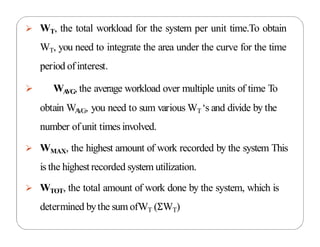

The document covers key concepts in cloud computing and virtualization, including service-oriented architecture (SOA), grid computing, and utility computing. It discusses the benefits of virtualization, such as resource efficiency and flexibility, and the roles of load balancing, hypervisors, and application delivery controllers in optimizing cloud environments. It also emphasizes the importance of application, data, and platform portability, along with capacity planning and the definition of performance metrics for cloud systems.