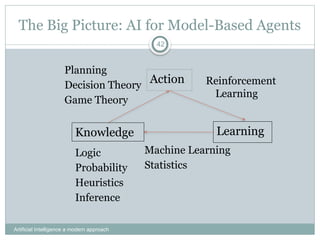

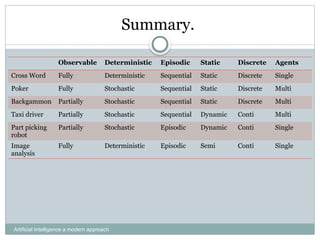

This document outlines concepts related to intelligent agents in artificial intelligence, focusing on their environments and types. It discusses the PEAS model (Performance measure, Environment, Actuators, Sensors) and explores various agent types including simple reflex, goal-based, and utility-based agents, as well as the importance of rationality in maximizing performance. The text further delves into the autonomy of agents and the characteristics of different environments they may operate in.

![Agents and environments

Artificial Intelligence a modern approach


• The agent function maps from percept histories to actions:

• [f: P* → A]

• The agent program runs on the physical architecture to produce f

• agent = architecture + program](https://image.slidesharecdn.com/chapter2-250206184759-b4124f9b/85/chapter2intelligence-AI-and-machine-learning-ML-pptx-5-320.jpg)
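As a minimal sketch of the distinction between the agent function and the agent program, the Python below wraps a function over whole percept histories (f: P* → A) in a program that receives one percept per step. The names `make_agent_program` and `describe_history` are hypothetical and chosen only for illustration; they do not come from the slides.

```python
from typing import Callable, Hashable, List, Sequence

Percept = Hashable
Action = str


def make_agent_program(
    agent_function: Callable[[Sequence[Percept]], Action],
) -> Callable[[Percept], Action]:
    """Turn an agent function f: P* -> A into a step-by-step agent program."""
    history: List[Percept] = []  # the percept history seen so far

    def program(percept: Percept) -> Action:
        # The program only ever receives the current percept; it stores the
        # history itself so that the agent function can see all of it.
        history.append(percept)
        return agent_function(tuple(history))

    return program


def describe_history(percepts: Sequence[Percept]) -> Action:
    """Hypothetical agent function used only to show what the program passes in."""
    return f"seen {len(percepts)}, latest {percepts[-1]}"


program = make_agent_program(describe_history)
first = program("p1")
second = program("p2")
```

Here the closure plays the role of the program and the Python interpreter stands in for the architecture; the same agent function could in principle be realized by many different programs.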

![Vacuum-cleaner world


Percepts: location and contents, e.g., [A,Dirty]

Actions: Left, Right, Suck, NoOp

Agent’s function → look-up table

For many agents this is a very large table

Demo With Open Source Code](https://image.slidesharecdn.com/chapter2-250206184759-b4124f9b/85/chapter2intelligence-AI-and-machine-learning-ML-pptx-6-320.jpg)
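The look-up table idea for the vacuum-cleaner world can be sketched as follows. This is an illustrative Python sketch, not the demo code the slide refers to: the table entries, the two-square layout (A and B), and the helper names `table_driven_agent` and `table_size` are assumptions. The table is keyed by the whole percept history, which is why it grows so quickly.

```python
# Partial look-up table keyed by percept history (a tuple of percepts).
# Only a handful of entries are listed; a complete table would need one
# entry for every possible history.
TABLE = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("B", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}


def table_driven_agent(table):
    """Return an agent program that looks up the full percept history."""
    history = []

    def program(percept):
        history.append(percept)
        # Histories missing from the partial table fall back to NoOp.
        return table.get(tuple(history), "NoOp")

    return program


def table_size(num_percepts, lifetime):
    """Number of entries needed to cover every history up to `lifetime` steps."""
    return sum(num_percepts ** t for t in range(1, lifetime + 1))


agent = table_driven_agent(TABLE)
actions = [agent(("A", "Dirty")), agent(("A", "Clean"))]
sizes = [table_size(4, t) for t in (1, 2, 3, 10)]
```

With 4 possible percepts (2 locations × 2 statuses), covering histories of up to 10 steps already takes 1,398,100 entries, which illustrates why the table is very large for many agents.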

![States: Beyond Reflexes

• Recall the agent function that maps from percept histories to actions:

[f: P* → A]

An agent program can implement an agent function by maintaining an internal state.

The internal state can contain information about the state of the external environment.

The state depends on the history of percepts and on the history of actions taken:

[f: P*, A* → S → A] where S is the set of states.](https://image.slidesharecdn.com/chapter2-250206184759-b4124f9b/85/chapter2intelligence-AI-and-machine-learning-ML-pptx-30-320.jpg)
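To illustrate an agent program that keeps an internal state instead of the full history, here is a small Python sketch for the two-square vacuum world. The state layout (a dict with the current location and the last known status of squares A and B), the assumption that Suck always cleans the current square, and the names `update_state`, `choose_action`, and `make_stateful_agent` are all hypothetical choices made for this example.

```python
def update_state(state, last_action, percept):
    """Compute the new internal state from the old state, last action, and percept."""
    location, status = percept
    new_state = dict(state)
    # Assumption: a Suck action always leaves the square it was applied to clean.
    if last_action == "Suck" and state["location"] is not None:
        new_state[state["location"]] = "Clean"
    new_state["location"] = location
    new_state[location] = status
    return new_state


def choose_action(state):
    """Pick an action from the internal state alone."""
    here = state["location"]
    other = "B" if here == "A" else "A"
    if state[here] == "Dirty":
        return "Suck"
    if state[other] == "Clean":
        return "NoOp"  # both squares are known to be clean
    return "Right" if here == "A" else "Left"


def make_stateful_agent():
    """Agent program that remembers a state and its own last action."""
    memory = {"state": {"location": None, "A": None, "B": None}, "last_action": None}

    def program(percept):
        memory["state"] = update_state(memory["state"], memory["last_action"], percept)
        memory["last_action"] = choose_action(memory["state"])
        return memory["last_action"]

    return program


agent = make_stateful_agent()
trace = [agent(p) for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]]
```

On the final percept the agent returns NoOp because its state records that A is already clean; an agent reacting only to the current percept [B, Clean] would have no way to know that and would move Left.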