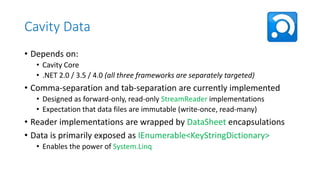

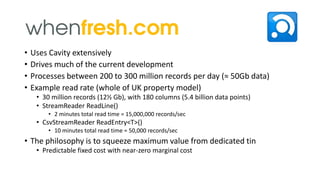

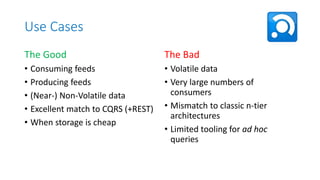

The document describes the Cavity open-source project, which was started in 2010 to avoid writing the same data-access code over and over. It comprises 43 solutions covering components such as Core, Data, Diagnostics, and Domain.

The Cavity Data component builds on the Core library and supports comma- and tab-separated files, which it reads as immutable streams. It exposes the data as enumerables, so records can be queried with LINQ. For one client, it processes hundreds of millions of records per day. The guiding philosophy is to optimize for high throughput over large, read-only data files.
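The streaming model above (read a delimited file forward once, expose each row as an immutable record, and query the sequence lazily) can be illustrated with a short sketch. Cavity itself is a .NET library queried with LINQ, and this is not its API. It is a Python analogue in which generators play the role of enumerables and generator expressions play the role of LINQ queries. The names `Record` and `read_records` and the three-column schema are hypothetical and serve only as illustration.

```python
import csv
import io
from typing import Iterator, NamedTuple, TextIO


class Record(NamedTuple):
    # Hypothetical row schema; NamedTuple instances are immutable.
    name: str
    city: str
    amount: int


def read_records(stream: TextIO, delimiter: str = ",") -> Iterator[Record]:
    """Lazily yield one immutable Record per data row (hypothetical helper).

    Rows are produced one at a time, so memory use does not grow with
    file size and a very large file can be scanned in one forward pass.
    """
    reader = csv.reader(stream, delimiter=delimiter)
    next(reader, None)  # skip the header row, if any
    for row in reader:
        if not row:
            continue  # ignore blank lines
        name, city, amount = row
        yield Record(name, city, int(amount))


# LINQ-style query: filter and aggregate in a single streaming pass.
sample = io.StringIO(
    "name\tcity\tamount\n"
    "Ada\tLondon\t120\n"
    "Bob\tLeeds\t40\n"
    "Cy\tLondon\t75\n"
)
london_total = sum(
    r.amount for r in read_records(sample, delimiter="\t") if r.city == "London"
)
print(london_total)  # prints 195
```

For a real file, open it with `open(path, newline="", encoding="utf-8")` and pass the handle to `read_records`. Because nothing is materialized until the query consumes it, the same pattern applies to files far larger than memory. This is the trade-off a read-only, high-throughput design favors over random access or in-place updates.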