Cassandra is a distributed database designed without single points of failure. It addresses this by employing a peer-to-peer architecture where data is distributed across homogeneous nodes. All nodes are equal - there is no master or slave. Nodes exchange state information using a gossip protocol to maintain awareness of the cluster. Clients can send read or write requests to any node, which will coordinate with other nodes as needed to fulfill the request based on how data is partitioned.

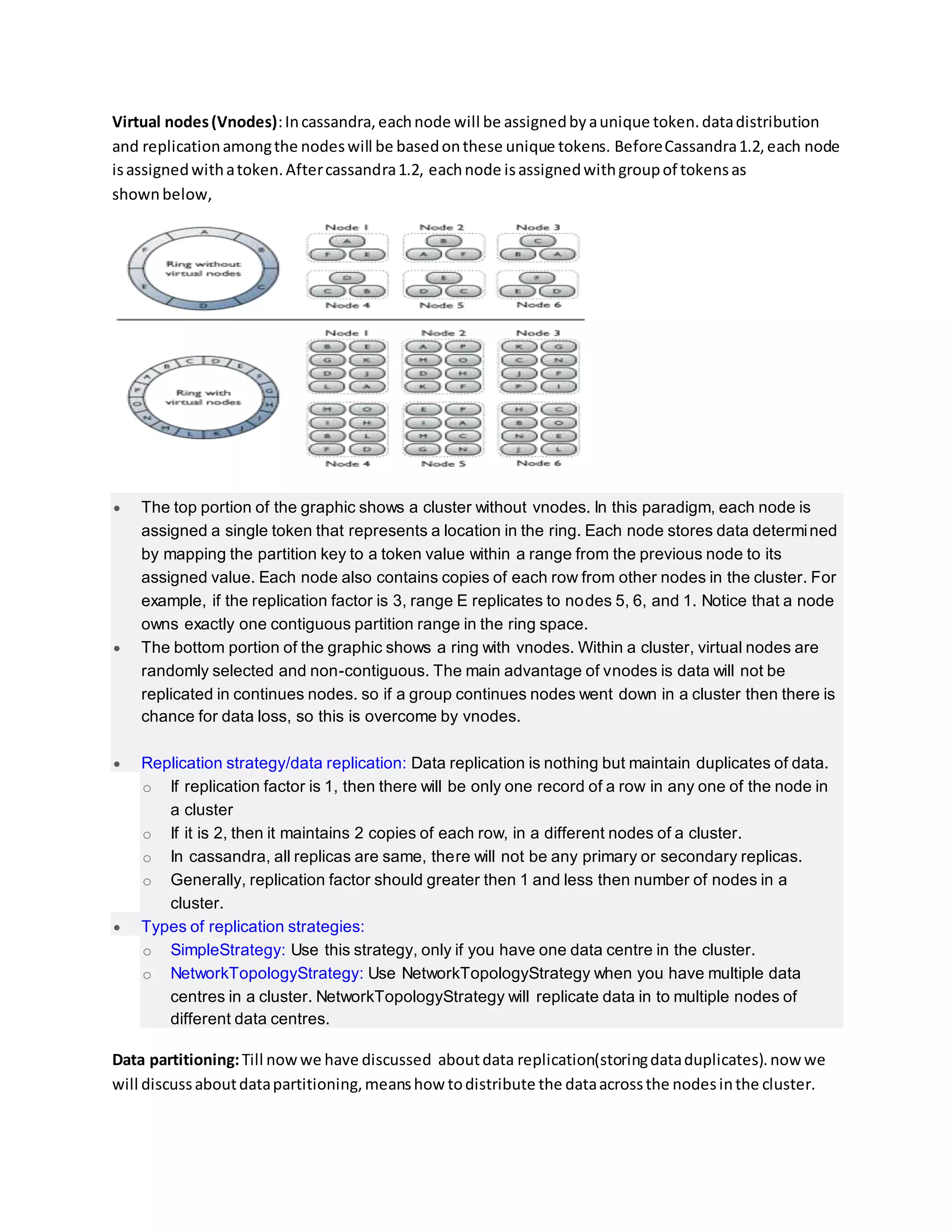

![Basically,apartitionerisahash functionforcomputingthe tokenof a partitionkey.There are 3 typesof

partitioner’sincassandra.

Murmur3Partitioner (default): Uniformlydistributesdataacrossthe clusterbasedonMurmurHash

hash values.

RandomPartitioner:Uniformlydistributesdataacrossthe clusterbasedon MD5 hash values.

ByteOrderedPartitioner:Keepsanordereddistributionof datalexicallybykeybytes.

Snitch: A snitchdefinesgroupsof machinesintodatacentresandracks (the topology) thatthe

replicationstrategyusestoplace replicas. The defaultSimpleSnitch doesnotrecognizedatacentre or

rack information.TheGossipingPropertyFileSnitch isrecommendedforproduction.The followingare

differenttypesof snitchs.

dynamicsnitching

simple snitching

RackInferringSnitch

PropertyFileSnitch

GossipingPropertyFileSnitch

Ec2Snitch

Ec2MultiRegionSnitch

GoogleCloudSnitch

CloudstackSnitch

This entry was posted in Cassandra and tagged AvailabilityCassandraConsistencyPartitionTolerance on January27, 2016 by Siva

Table of Contents [hide]

What is CAP Theorem?

o Consistency

o Availability

o Partition Tolerance

o Consistency – Partition Tolerance:

o Availability –Partition Tolerance:

o Consistency – Availability:

Share this:](https://image.slidesharecdn.com/cassandraarchitecture-181102095000/75/Cassandra-architecture-3-2048.jpg)

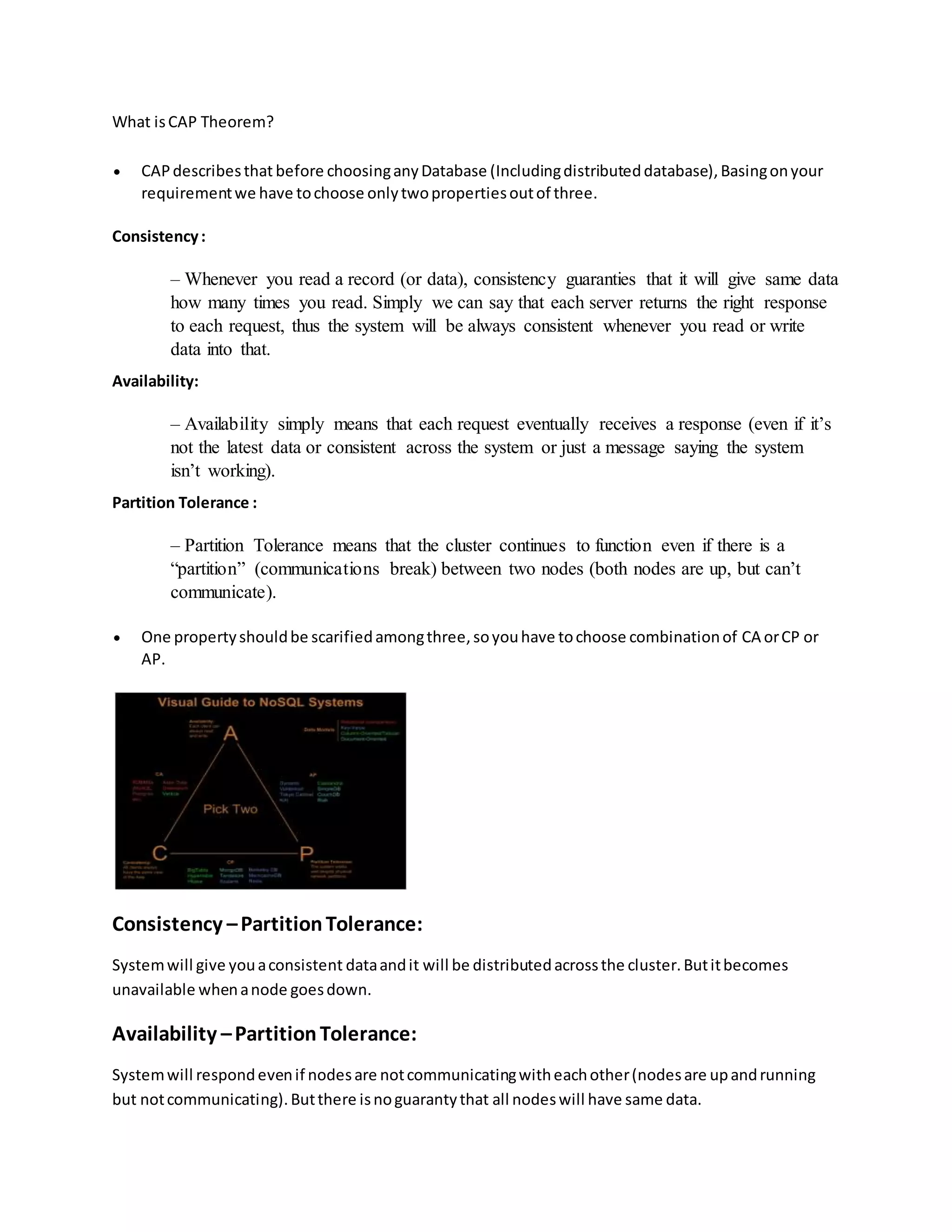

![Consistency –Availability:

Systemwill give youaconsistentdataandyoucan write/readdatato/fromanynode,butdata

partitioningwill notbe syncwhendevelopapartitionbetweennodes (RDBMSsystemssuchasMySQL

are of CA combinationsystems.)

Accordingto CAPtheorem, Cassandrawill fallintocategoryof AP combination,thatmeansdon’tthink

that Cassandrawill notgive a consistentdata.We can tune Cassandraas per ourrequirement togive

youa consistentresult.

Cassandra Interview Cheat Sheet:

his entry was posted in Cassandra on October 10, 2015 by Siva

Table of Contents [hide]

Cassandra

o

NoSQL

KeySpace

Partitioner

Snitch

Replication Factor

Partitioners

Share this:

Cassandra

Cassandra is a distributed database from Apache that is highly scalable and designed to manage

very large amounts of structured data. It provides high availability with no single point of failure.

NoSQL

The primary objective of a NoSQLdatabase is to have

simplicityof design,

horizontal scaling,and

finercontrol overavailability.](https://image.slidesharecdn.com/cassandraarchitecture-181102095000/75/Cassandra-architecture-5-2048.jpg)

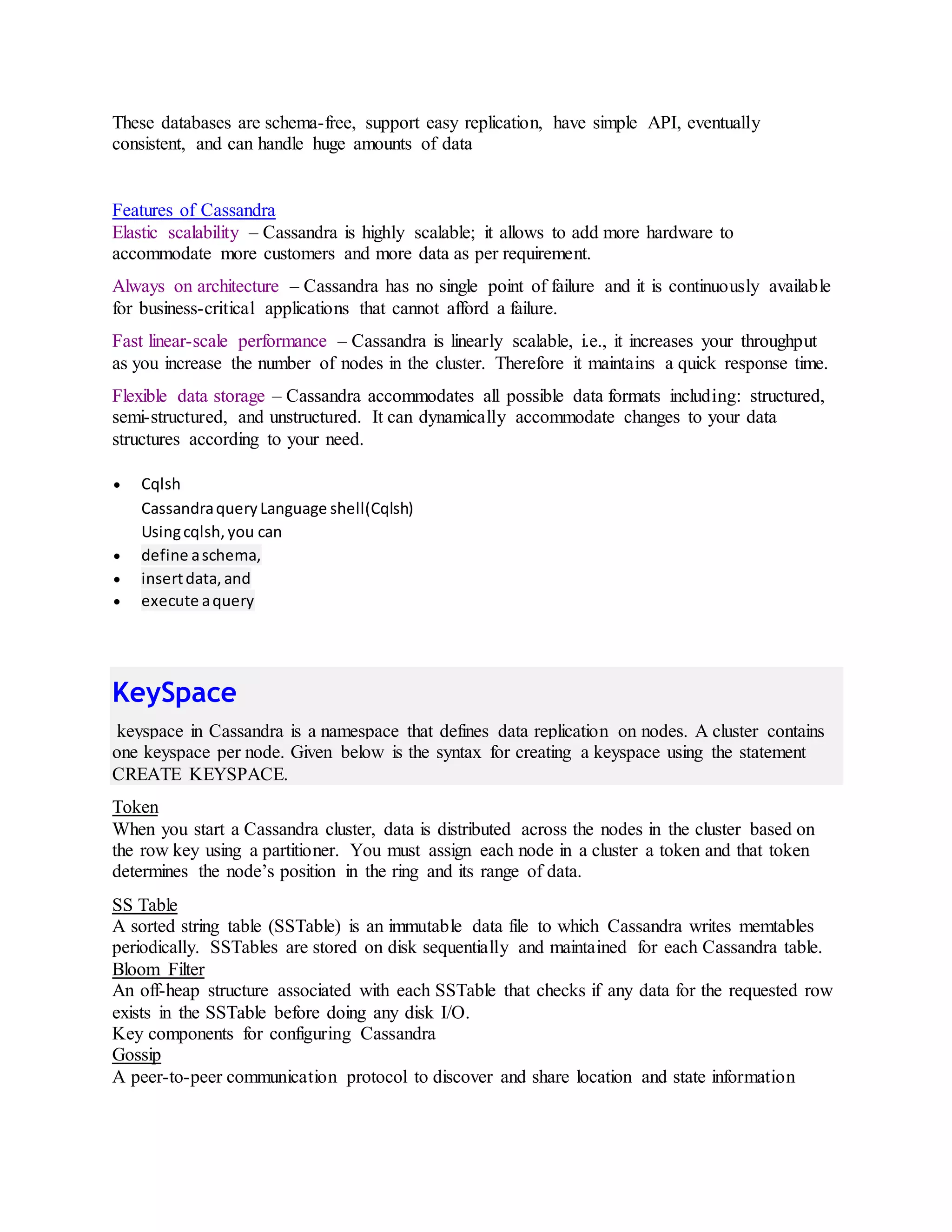

![TTL

Time-to-live. An optional expiration date for values inserted into a column

Consistency

consistency refers to how up-to-date and synchronized a row of data is on all of its replicas.

Backup and Restoring Data

Cassandra backs up data by taking a snapshot of all on-disk data files (SSTable files) stored in

the data directory.

You can take a snapshot of all keyspaces, a single keyspace, or a single table while the system is

online.

Cassandra Tools

The nodetool utility

A commandline interface forCassandraformanagingacluster.

Cassandrabulkloader(sstableloader)

The cassandra utility

Starts the CassandraJava serverprocess

.The cassandra-stresstool

A Java-basedstresstestingutilityforbenchmarkingandloadtestingaCassandracluster.

The sstablescrubutility

Scrub the all the SSTablesforthe specifiedtable.

The sstablesplitutilitysstablekeys

The sstablekeysutilitydumpstable keys.

The sstableupgrade tool

Upgrade the SSTablesinthe specifiedtable (orsnapshot)tomatchthe current versionof Cassandra.

Cassandra Overview:

This entry was posted in Cassandra on September 8, 2015 by Siva

Table of Contents [hide]

Cassandra Overview

o

Commit Log & MemTable

o Cassandra Key Components

](https://image.slidesharecdn.com/cassandraarchitecture-181102095000/75/Cassandra-architecture-8-2048.jpg)

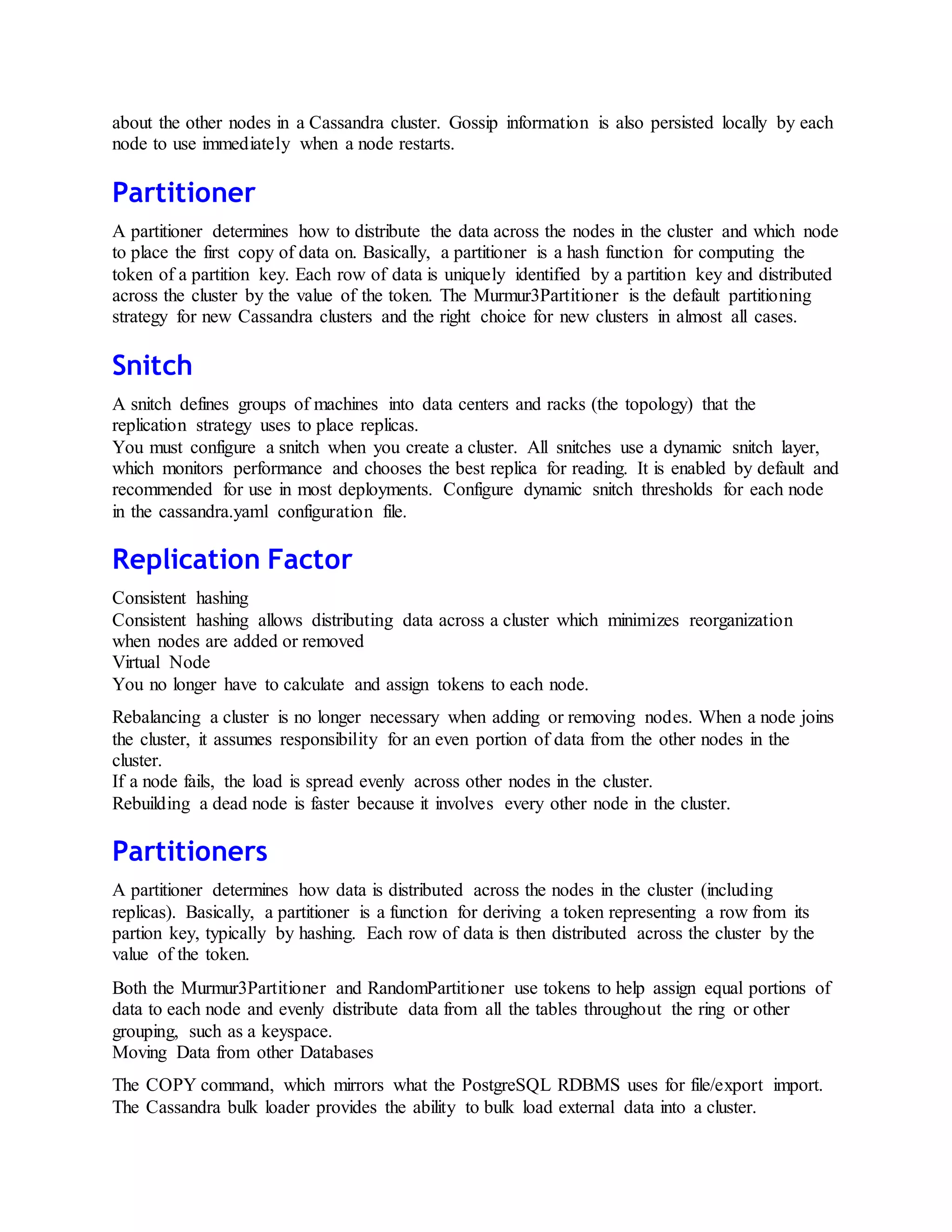

![There are manyvtypesof snitcheslike dynamicsnitching,simple snitching,RackInferringSnitch,

PropertyFileSnitch,GossipingPropertyFileSnitch,Ec2Snitch,Ec2MultiRegionSnitch,GoogleCloudSnitch,

CloudstackSnitch.

Cassandra is a partitioned row store database, where rows are organized into tables with a required

primary key.Clients read or write requests can be sent to any node in the cluster. When a client

connects to a node with a request, that node serves as the coordinator for that particular client

operation. The coordinator acts as a proxy between the client application and the nodes that o wn the

data being requested. The coordinator determines which nodes in the ring should get the request

based on how the cluster is configured

Cassandraproductionscenarios/issues

Thisentrywas postedinCassandra and tagged ConstantReconnectionPolicyDCAwareRoundRobinPolicy

DefaultRetryPolicydistributedcallsincassandraJDBCDowngradingConsistencyRetryPolicy

ExponentialReconnectionPolicyLoadBalancingPolicyReadReconnectionPolicyRetryPolicy

RoundRobinPolicyTokenAwarePolicyUnavailable Write onJanuary28, 2016 by Siva

Table of Contents[hide]

Production issue:

FewProductionconfigurationsincassandra

RetryPolicy

DefaultRetryPolicy

DowngradingConsistencyRetryPolicy

ReconnectionPolicy

ConstantReconnectionPolicy](https://image.slidesharecdn.com/cassandraarchitecture-181102095000/75/Cassandra-architecture-13-2048.jpg)

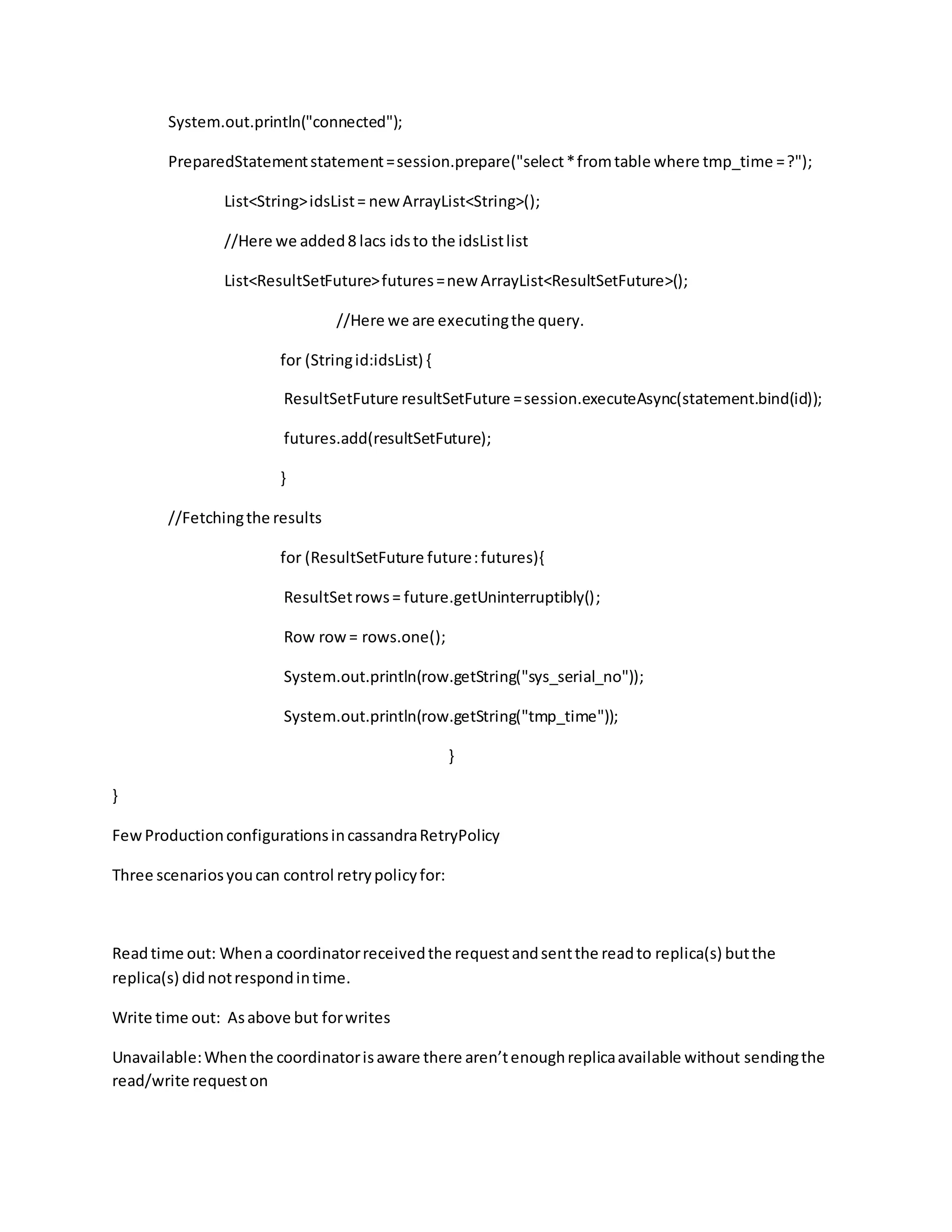

![importcom.datastax.driver.core.exceptions.NoHostAvailableException;

publicclassSelectCassandraDistrbuted{

publicstaticvoidmain(String[] args) {

Cluster cluster= null;

try{

cluster= Cluster.builder().addContactPoints("node1address/IP","node2address/IP","node3

address/IP").build();

cluster.getConfiguration().getSocketOptions().setReadTimeoutMillis(600000000);

Sessionsession=cluster.connect("keyspace_name");

System.out.println("connected");

PreparedStatementstatement=session.prepare("select*fromtable where tmp_time =?");

List<String>idsList= newArrayList<String>();

//Here we added8 lacs idsto the idsListlist

List<ResultSetFuture>futures=new ArrayList<ResultSetFuture>();

//Here we are executingthe query.

for (Stringid:idsList) {

ResultSetFuture resultSetFuture =session.executeAsync(statement.bind(id));

futures.add(resultSetFuture);

}

//Fetchingthe results](https://image.slidesharecdn.com/cassandraarchitecture-181102095000/75/Cassandra-architecture-15-2048.jpg)

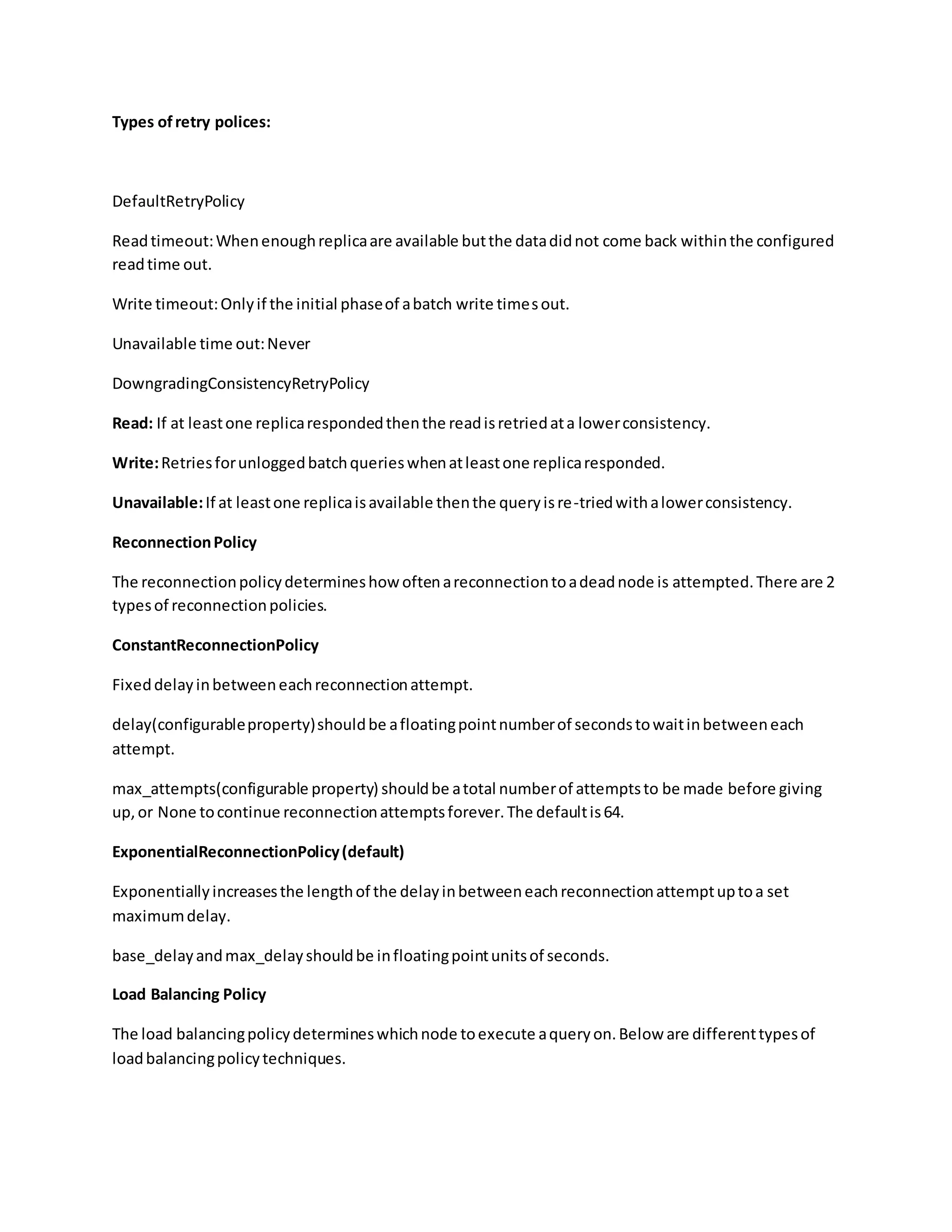

![for (ResultSetFuture future:futures){

ResultSetrows= future.getUninterruptibly();

Row row= rows.one();

System.out.println(row.getString("sys_serial_no"));

System.out.println(row.getString("tmp_time"));

}

}

importjava.net.InetSocketAddress;

importjava.util.ArrayList;

importjava.util.List;

importjava.util.Map;

importcom.datastax.driver.core.Cluster;

importcom.datastax.driver.core.PreparedStatement;

importcom.datastax.driver.core.ResultSet;

importcom.datastax.driver.core.ResultSetFuture;

importcom.datastax.driver.core.Row;

importcom.datastax.driver.core.Session;

importcom.datastax.driver.core.exceptions.NoHostAvailableException;

publicclassSelectCassandraDistrbuted{

publicstaticvoidmain(String[] args) {

Cluster cluster= null;

try{

cluster= Cluster.builder().addContactPoints("node1address/IP","node2address/IP","node3

address/IP").build();

cluster.getConfiguration().getSocketOptions().setReadTimeoutMillis(600000000);

Sessionsession=cluster.connect("keyspace_name");](https://image.slidesharecdn.com/cassandraarchitecture-181102095000/75/Cassandra-architecture-16-2048.jpg)

![RoundRobinPolicy

Distributesqueriesacross all nodesinthe cluster,regardlessof whatdatacentre the nodesmaybe in.

DCAwareRoundRobinPolicy

SimilartoRoundRobinPolicy,butprefershostsinthe local datacenterandonlyusesnodesinremote

datacentersasa lastresort.

TokenAwarePolicy(default)

A LoadBalancingPolicywrapperthataddstokenawarenesstoa childpolicy.Thisaltersthe childpolicy’s

behaviorsothat itfirstattemptsto sendqueriestoLOCALreplicas(asdeterminedbythe childpolicy)

basedon the Statement‘srouting_key.Once those hostsare exhausted,the remaininghostsinthe child

policy’squeryplanwill be used.

Cassandra write and read process:

This entry was posted in Cassandra and tagged Bloom filtercassandra

delete flowcassandra insert flowcassandra read flowcassandra update

flowcommit logCompactioncompression

offsetDateTieredCompactionStrategyHow data is written into

cassandraHow do write patterns effect reads in cassandraHow is data

deleted in cassandraHow is data read in cassandraHow is data updated

in cassandraLeveledCompactionStrategymemTablePartition

indexpartition keyPartition summaryrow

cacheSizeTieredCompactionStrategySSTable

Table of Contents [hide]

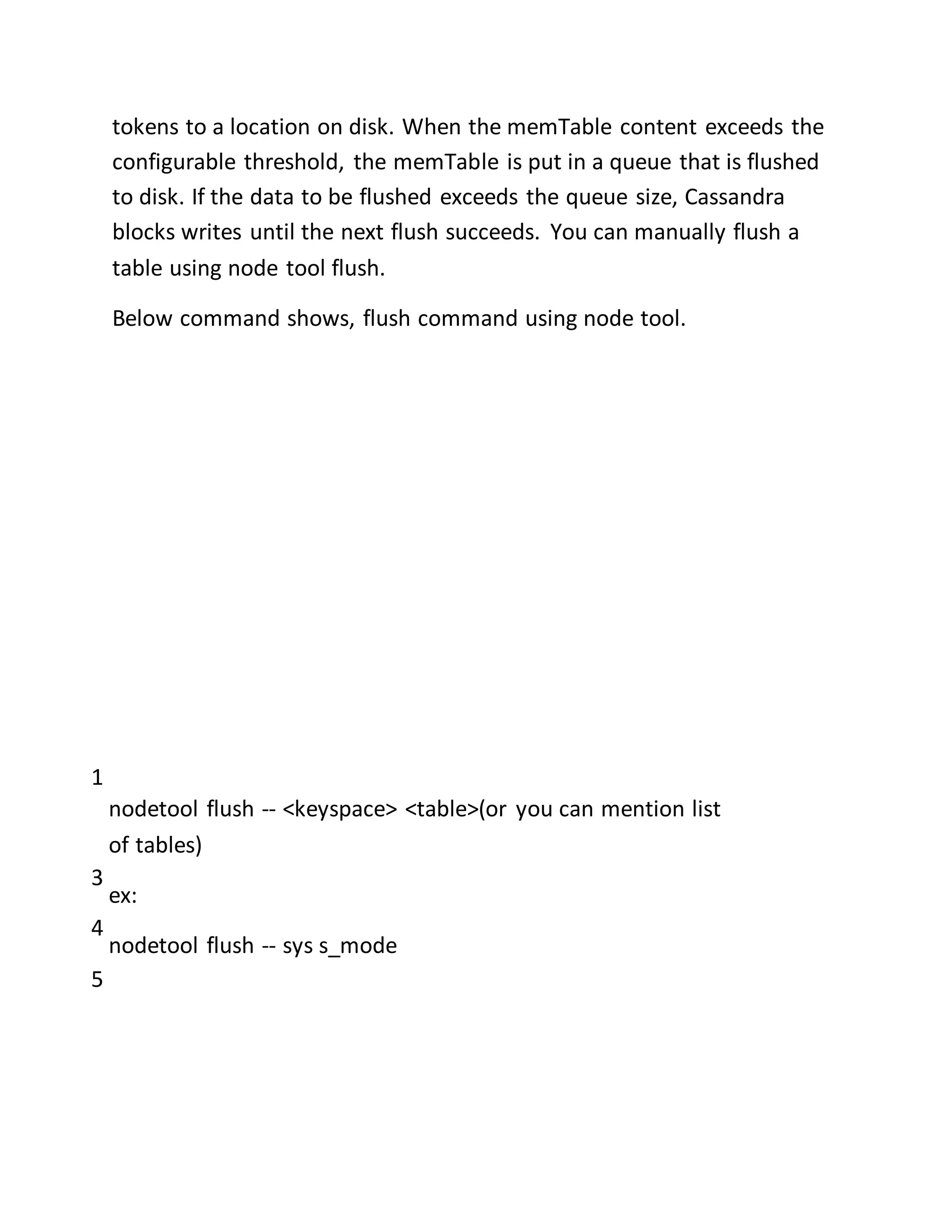

Storage engine

o How data is written?

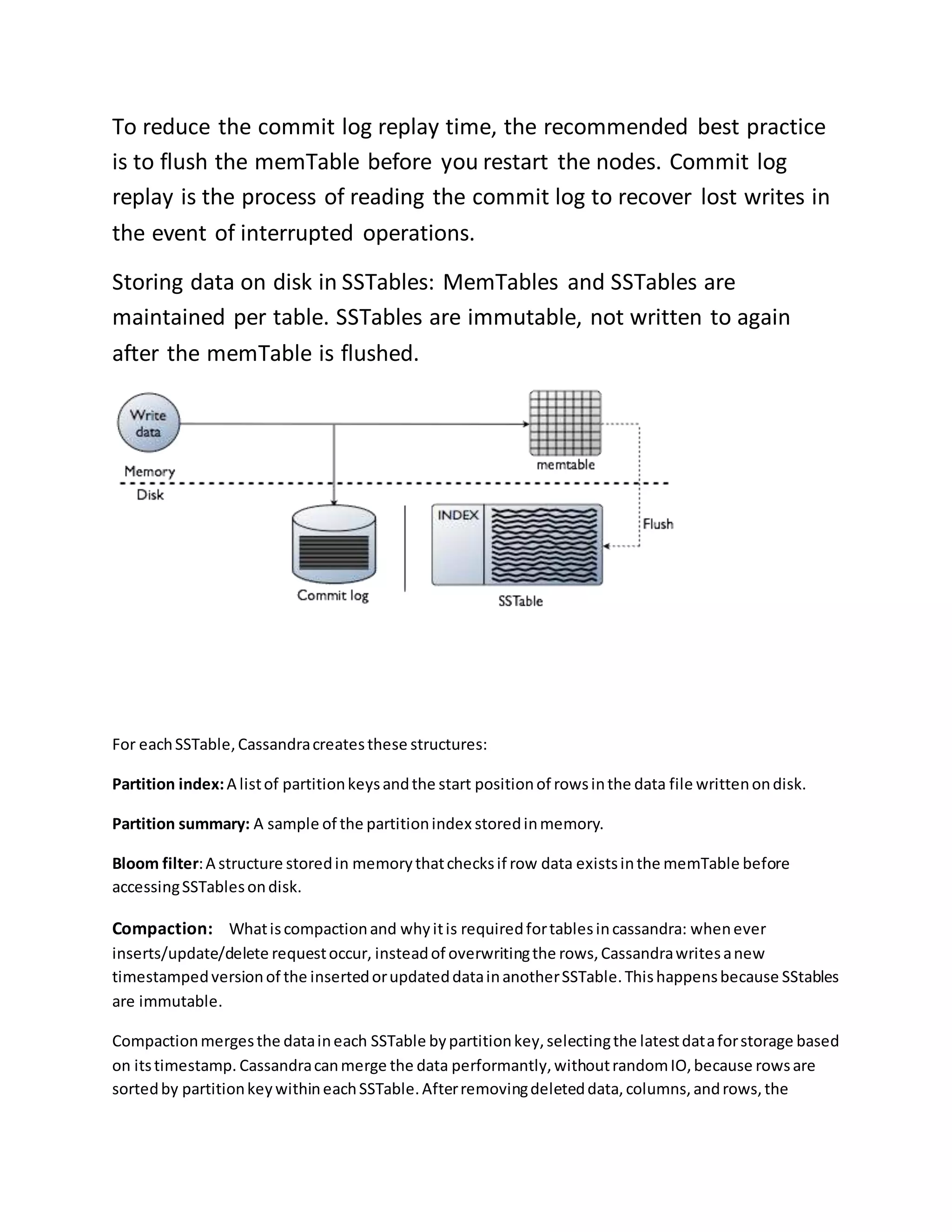

o Compaction:

o Types of compaction:

o How is data updated?

o How is data deleted?

o How is data read?

o How do write patterns effect reads?

o Share this:](https://image.slidesharecdn.com/cassandraarchitecture-181102095000/75/Cassandra-architecture-19-2048.jpg)