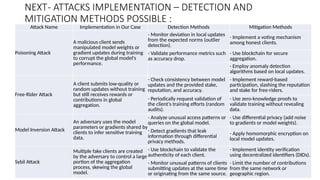

The document presents a literature survey on combating model poisoning attacks in blockchain-enabled federated learning for healthcare, highlighting methodologies that enhance security and privacy. Key approaches discussed include the MinVar algorithm for model updates, decentralized frameworks built on blockchain, and secure multi-party computation for encrypted model verification. The survey also notes open challenges: high computational complexity, increased storage demands, and limitations in existing defense mechanisms.

![Federated Learning Module: Setup](https://image.slidesharecdn.com/10-241107063645-c2697369/85/BLOCKCHAIN-FINAL-YEAR-PROJECT-PRESENTATION-6-320.jpg)

Dataset used: Breast Tumour dataset

3 classes: normal, benign, malignant

CNN model used: DenseNet121

Federated learning algorithm used: FedAvg

Initial experimentation: simple neural network in FedAvg

Algorithm:

    global_model <- SimpleNN(input_shape=(128, 128, 1), num_classes=3)
    for round in range(n_rounds):
        client_models <- [Train_Model(Copy_Model(global_model), Get_Batches(Load_Dataset(data), batch_size=16))
                          for data in client_datasets]
        client_data_sizes <- Get_Client_Data_Sizes(client_datasets)
        global_model <- Σ (client_model_i * client_data_sizes[i]) / Σ client_data_sizes  # Weighted FedAvg
    return global_model

Figure labels: normal, benign, malignant

• Model structure: 3 layers, 16384-128-64-3 neurons.

• Weight initialization: random initialization using PyTorch defaults.

• Forward pass: reshaped input processed with ReLU.
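
The weighted aggregation step in the algorithm above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the presenters' implementation: the `fedavg` helper, the flat-list parameter layout, and the toy parameter names are all hypothetical. In a PyTorch setup like the one on the slide, the same average would be taken over the tensors in each client model's `state_dict`.

```python
from typing import Dict, List

# Assumed layout: parameter name -> flattened list of weights.
Params = Dict[str, List[float]]


def fedavg(client_params: List[Params], client_sizes: List[int]) -> Params:
    """Average client parameters, weighting each client by its dataset size."""
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one dataset size per client model")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    averaged: Params = {}
    for name in client_params[0]:
        length = len(client_params[0][name])
        # Each entry is sum_i(w_i * n_i) / sum_i(n_i), as in the slide's formula.
        averaged[name] = [
            sum(p[name][j] * n for p, n in zip(client_params, client_sizes)) / total
            for j in range(length)
        ]
    return averaged


# Toy example (hypothetical values): two clients holding 10 and 30 samples.
clients = [
    {"fc1.weight": [1.0, 2.0], "fc1.bias": [0.0]},
    {"fc1.weight": [3.0, 6.0], "fc1.bias": [4.0]},
]
sizes = [10, 30]
global_params = fedavg(clients, sizes)
print(global_params)
```

Because the second client holds three times as much data, the aggregated values sit closer to its parameters than to the first client's. This size weighting is also what a poisoning client can exploit by over-reporting its dataset size, which is one reason the surveyed defenses verify updates before aggregation.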