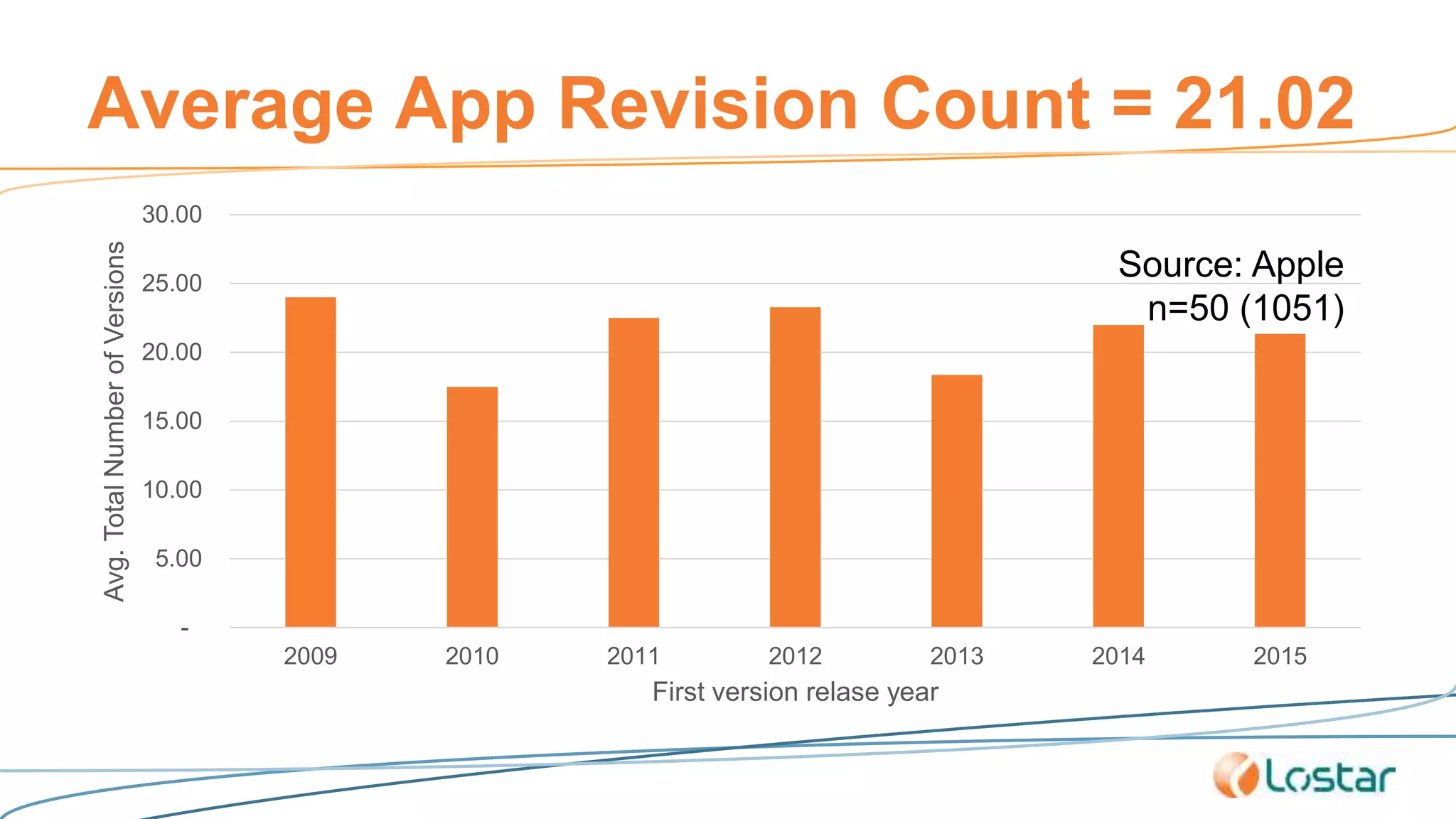

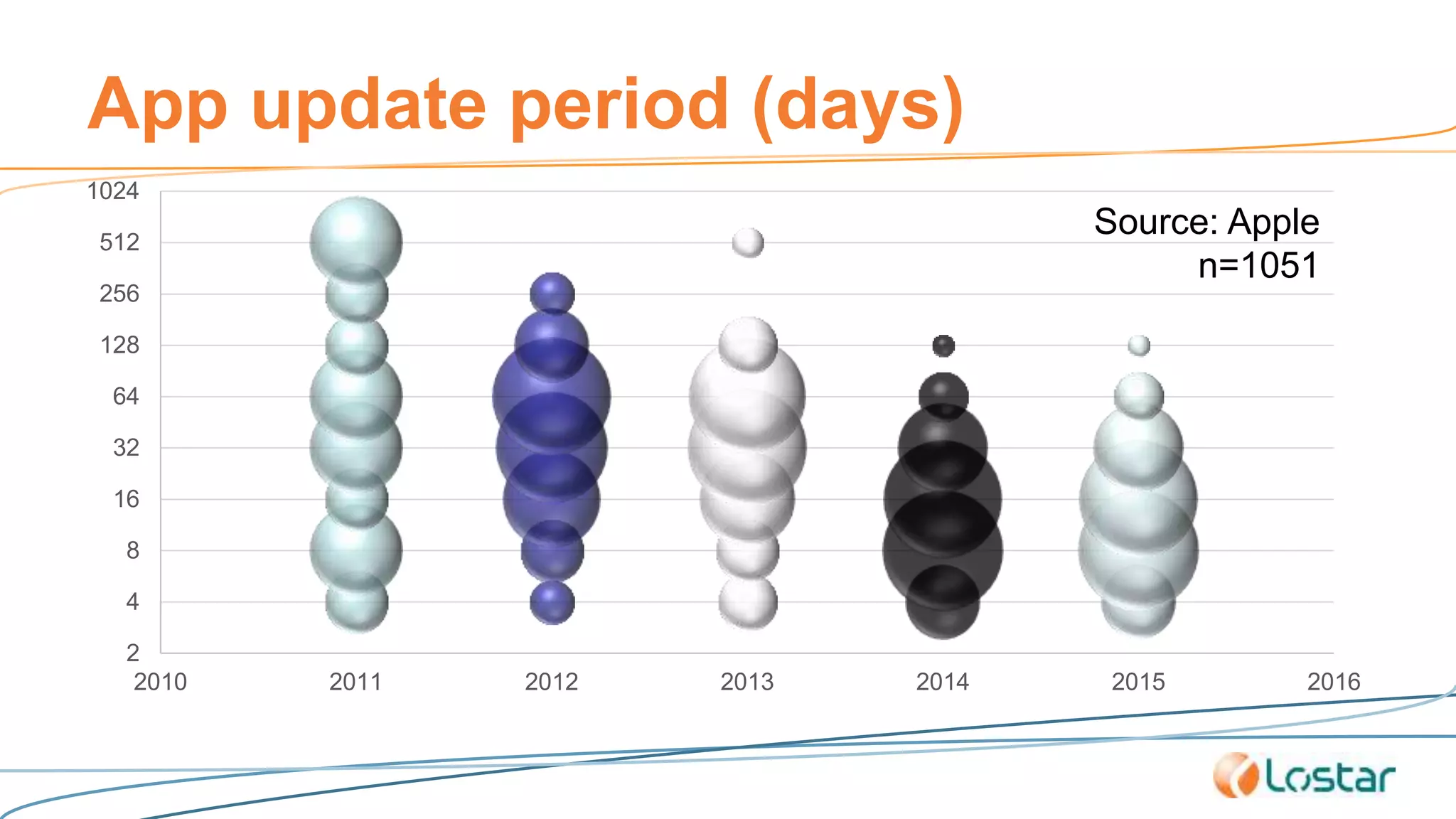

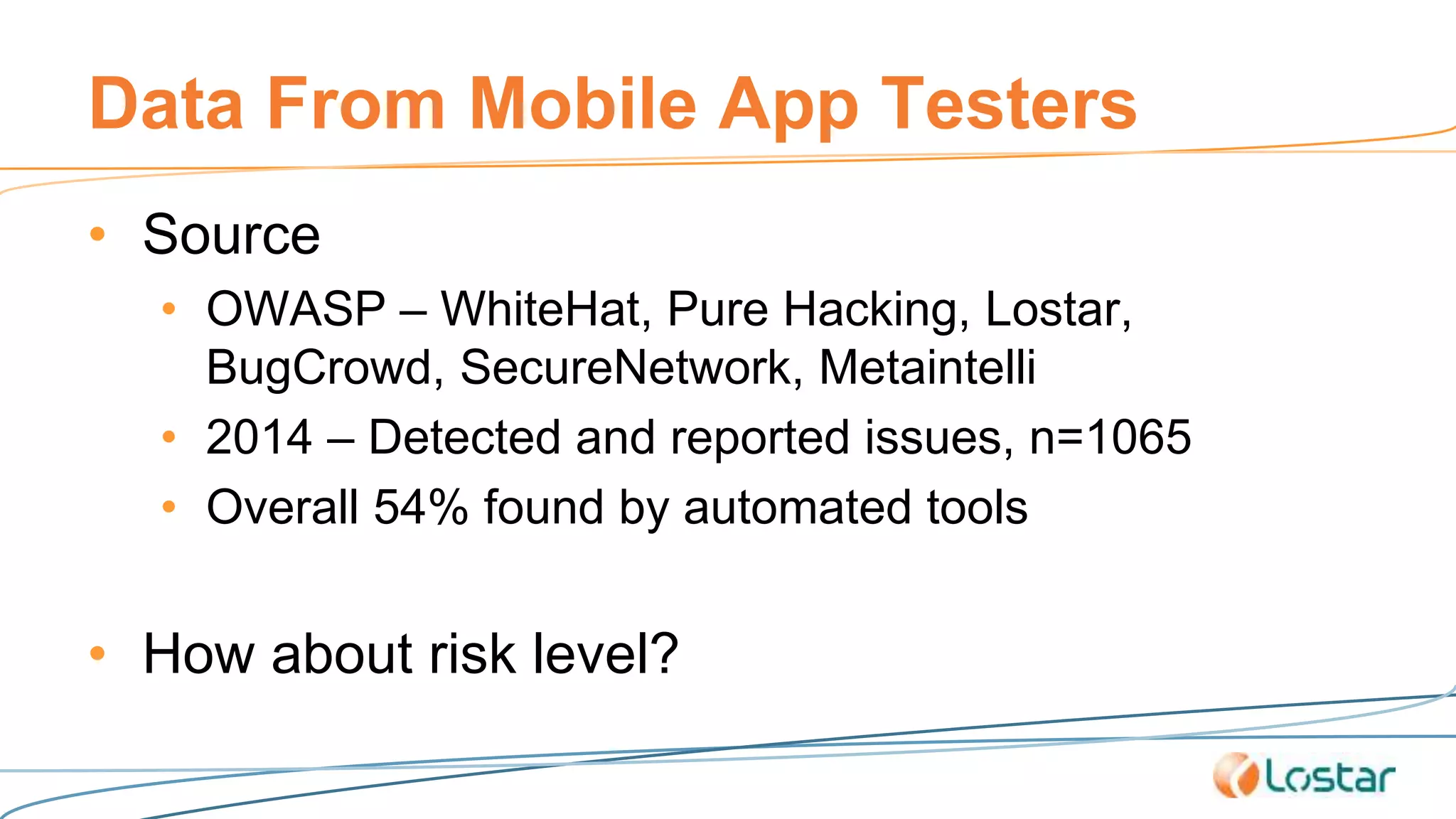

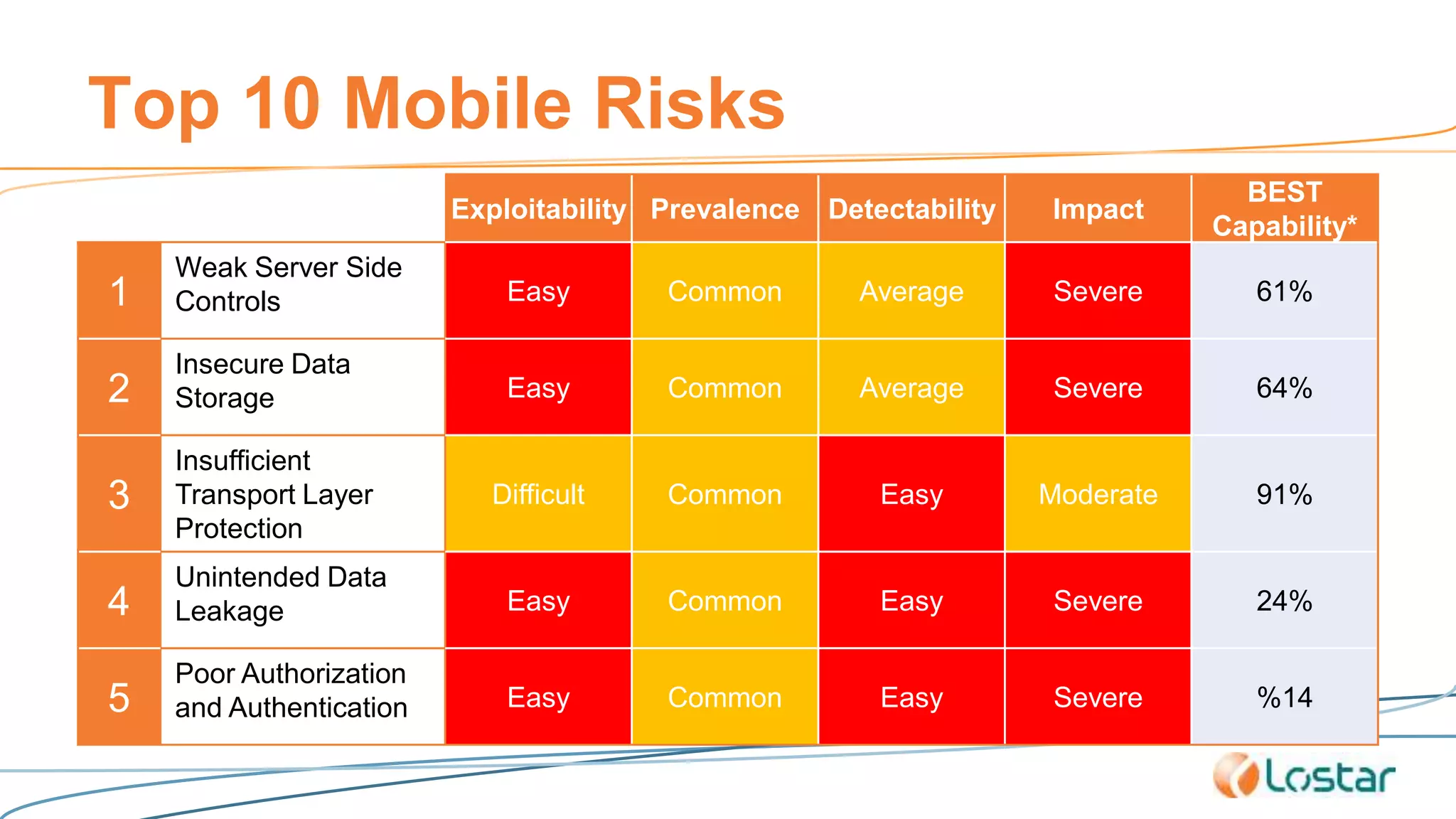

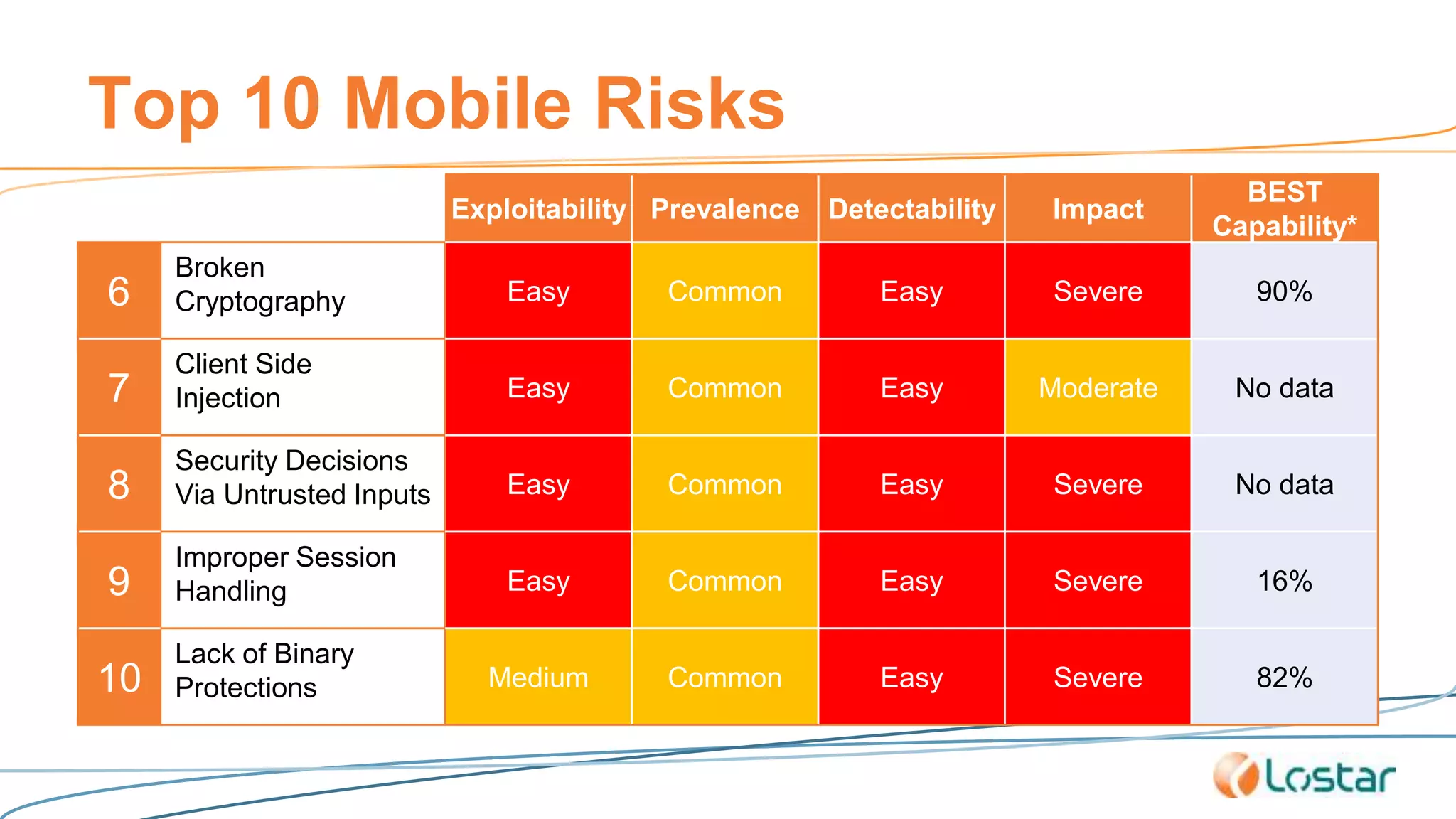

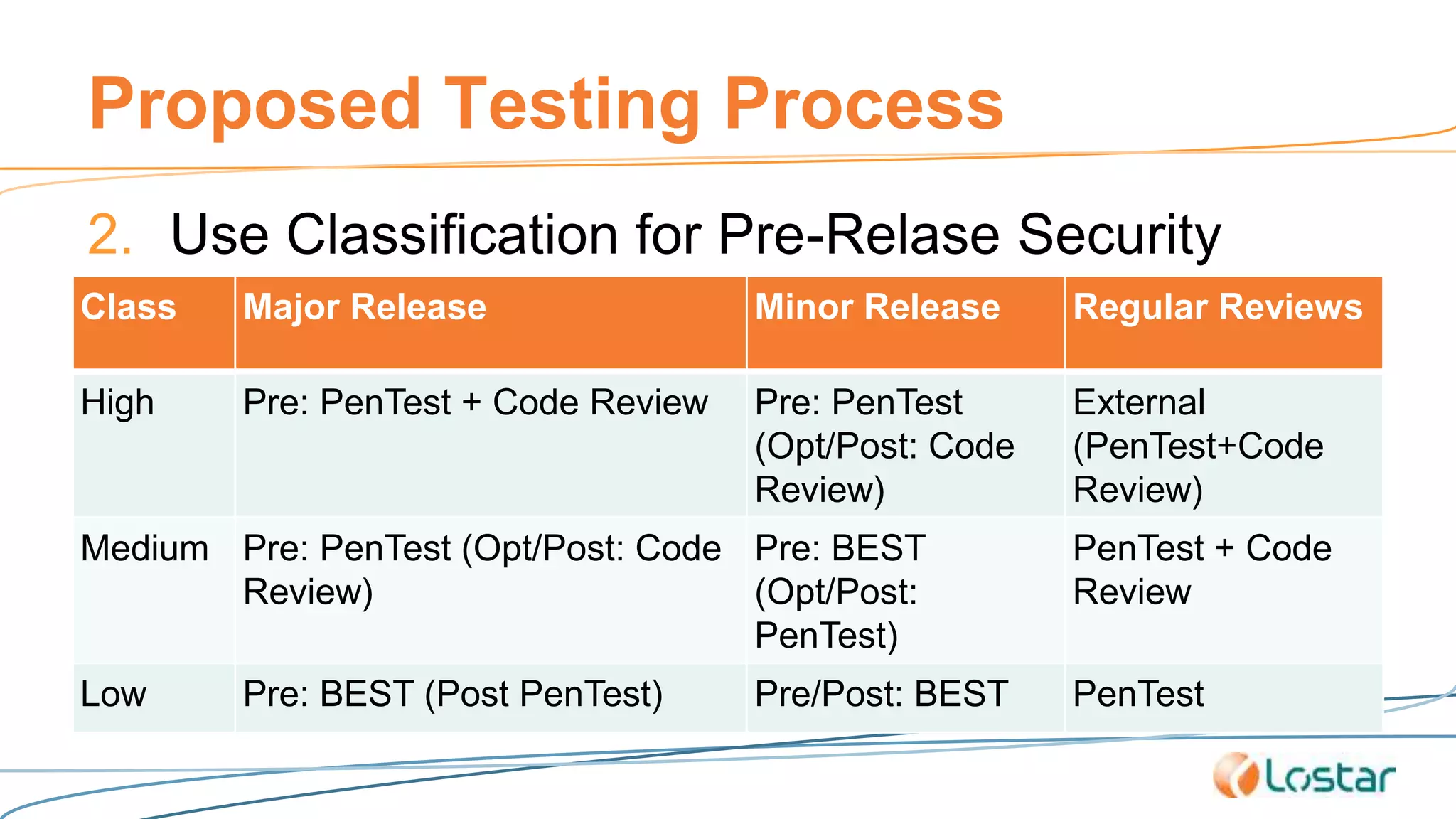

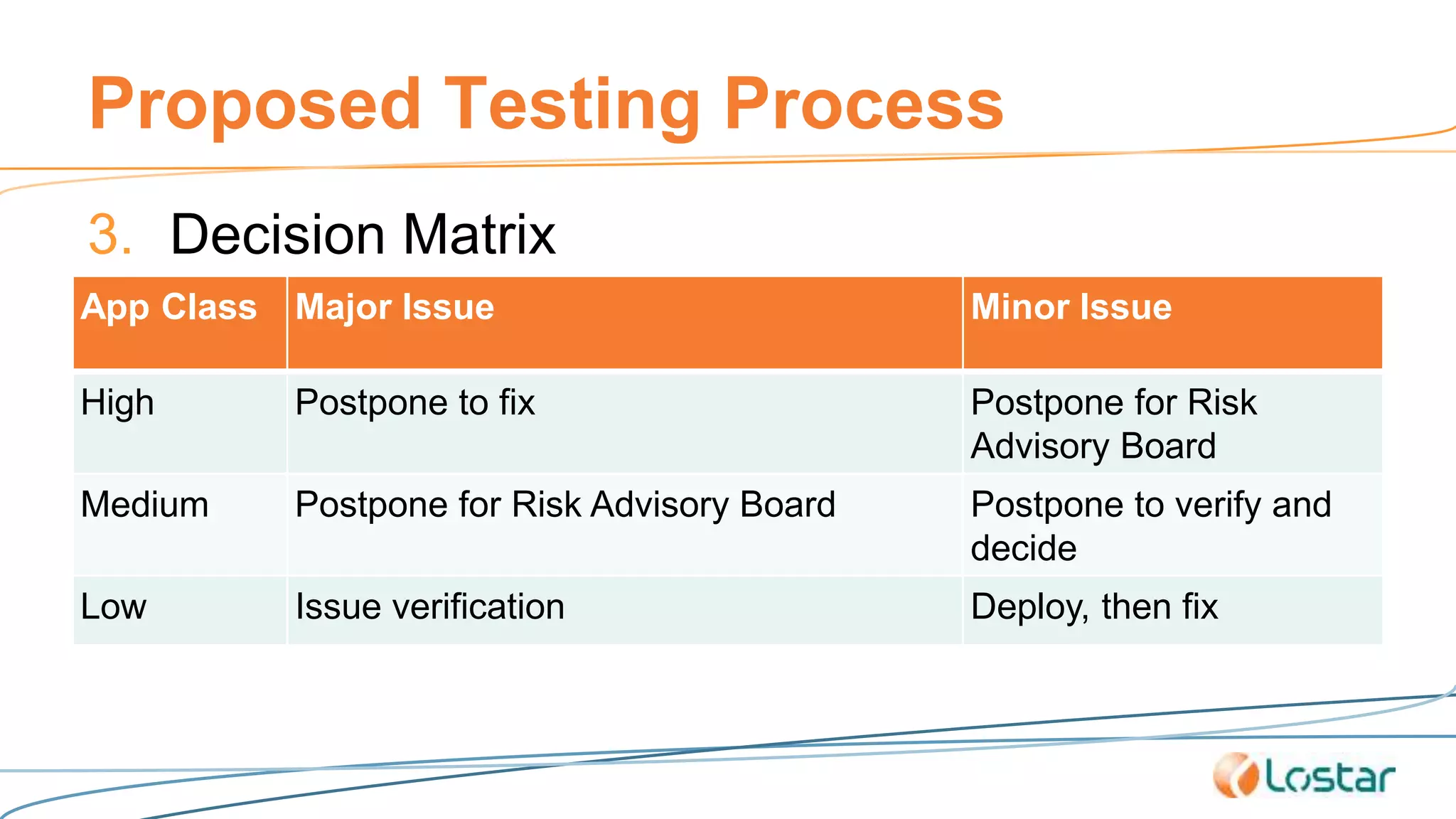

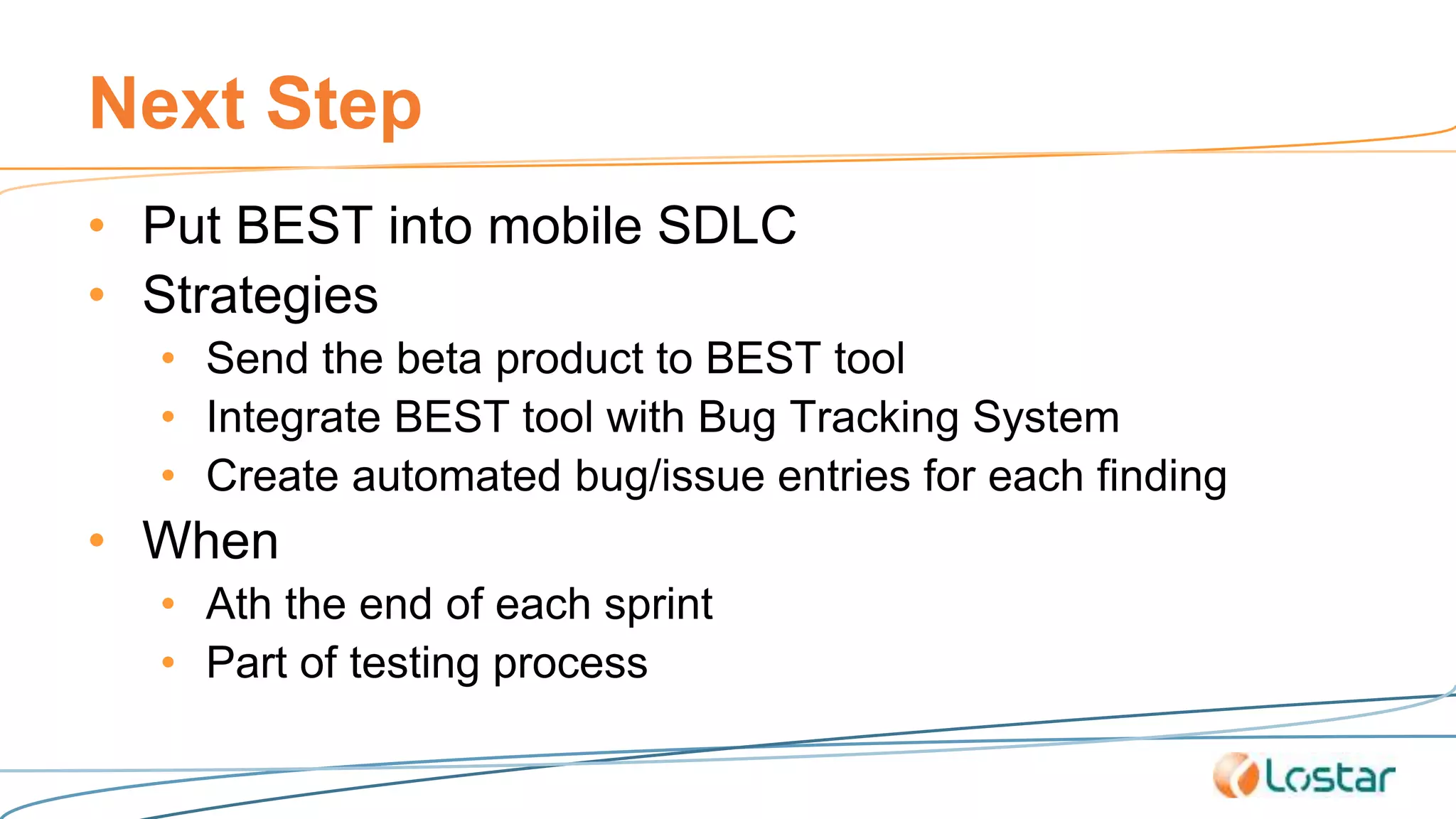

This document discusses using automated security testing tools to test mobile app updates on a "best effort" basis, because release cycles are short. It finds that automated tools detect around 54% of issues but may miss high-risk ones. The proposed process classifies each app as high, medium, or low risk and applies security testing techniques such as penetration testing and code review according to the risk level and the type of release. For low-risk app updates, it suggests automated testing to catch basic issues, following the 80/20 rule.
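
The risk-based selection described above can be pictured as a small lookup. The following is a minimal sketch, not the document's actual process: the only rule taken from the summary is that low-risk app updates receive automated testing. The release types, the technique names, and every other branch are hypothetical choices made for illustration.

```python
from enum import Enum
from typing import List


class Risk(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


class Release(Enum):
    # Hypothetical release types; the summary only says "type of release".
    NEW_APP = "new_app"
    UPDATE = "update"


def select_techniques(risk: Risk, release: Release) -> List[str]:
    """Return the security testing techniques for an app release."""
    # From the summary: low-risk app updates get automated testing only.
    if risk is Risk.LOW and release is Release.UPDATE:
        return ["automated scan"]
    # Everything below is an assumption made for illustration.
    if risk is Risk.HIGH:
        return ["penetration test", "code review", "automated scan"]
    if risk is Risk.MEDIUM and release is Release.NEW_APP:
        return ["penetration test", "automated scan"]
    return ["code review", "automated scan"]
```

For example, `select_techniques(Risk.LOW, Release.UPDATE)` yields only the automated scan, while a high-risk app gets the full set of techniques whatever the release type. Keeping the rules in one function makes the trade-off explicit: manual effort goes where a missed issue would cost the most.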