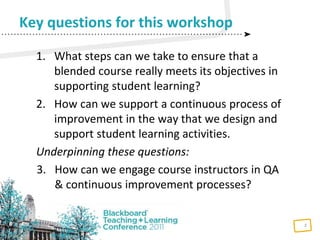

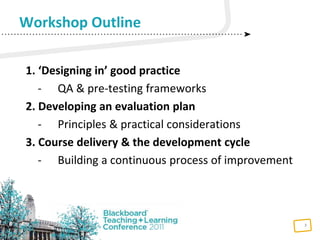

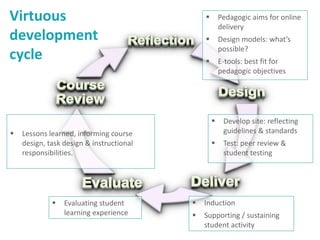

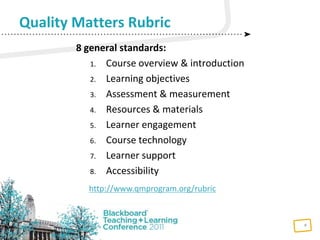

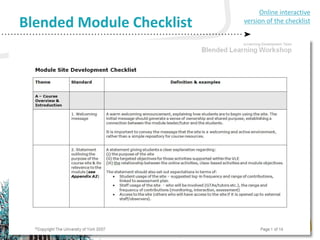

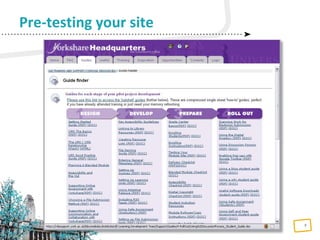

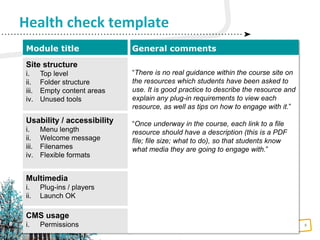

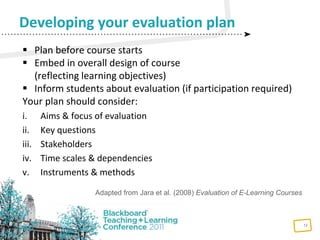

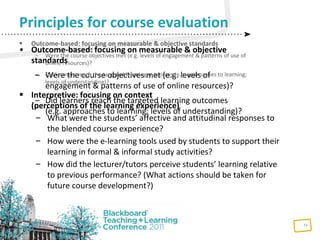

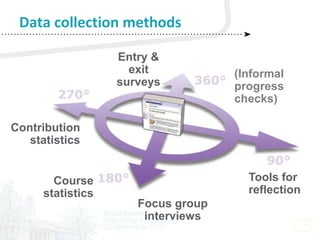

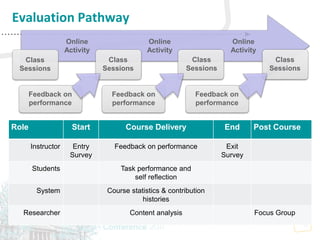

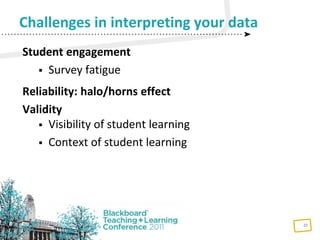

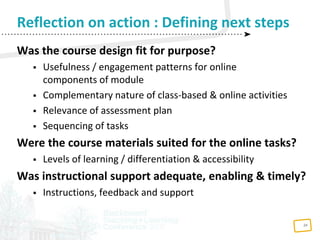

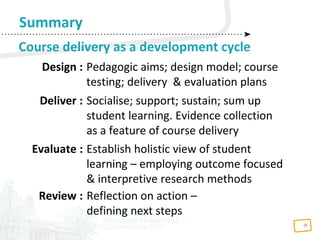

The document provides guidance on assuring the quality of blended courses through continuous improvement. It discusses designing courses with clear learning objectives and a quality assurance framework in mind. An evaluation plan should be developed to collect both outcome data and interpretive data on student learning and engagement. The document recommends a virtuous development cycle in which courses are delivered, evaluated, and reviewed, with the results informing future design. Continuous feedback from instructors and students, together with course statistics and research findings, helps improve the overall blended learning experience.