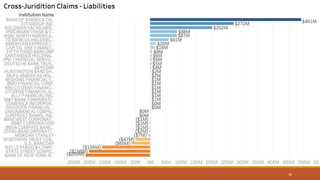

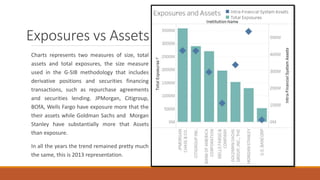

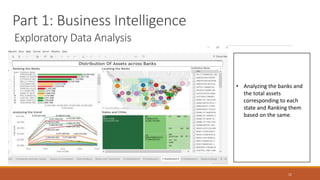

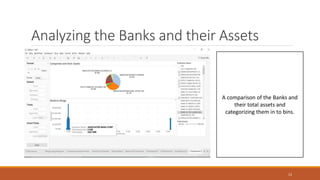

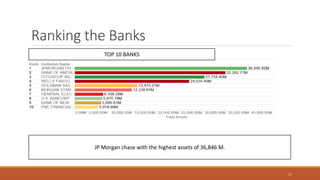

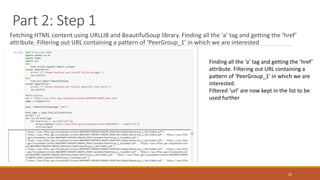

This document summarizes the goals and steps of a project to scrape data from PDF files published on the Banking Organization Systemic Risk Report website. The work involved scraping the site's HTML, cleaning and formatting the raw data from the PDFs, and performing exploratory data analysis in Tableau. Key steps included fetching the HTML with Python libraries, extracting tables and text from the PDFs with regular expressions, and building Tableau visualizations of indicators such as exposures, assets, liabilities, and scores to compare systemic risk across banks.
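As a rough illustration of the link-gathering step, the sketch below pulls PDF links out of an already-fetched HTML string with a regular expression. The function name `find_pdf_links`, the sample markup, and the `example.com` URLs are all hypothetical, since the summary does not say which libraries or patterns the project used.

```python
import re
from urllib.parse import urljoin


def find_pdf_links(html, base_url):
    """Return absolute URLs for every href in `html` that ends in .pdf."""
    # Capture the href value when it ends in ".pdf" (case-insensitive).
    hrefs = re.findall(r'href=["\']([^"\']+\.pdf)["\']', html, flags=re.IGNORECASE)
    # Resolve relative links against the page URL; dict.fromkeys drops
    # duplicates while preserving the order in which links appeared.
    return list(dict.fromkeys(urljoin(base_url, h) for h in hrefs))


# Hypothetical page fragment standing in for the fetched HTML.
sample_html = """
<a href="/reports/bank_a_2016.pdf">Bank A</a>
<a href="https://example.com/reports/bank_b_2016.PDF">Bank B</a>
<a href="/about.html">About</a>
"""

links = find_pdf_links(sample_html, "https://example.com/index.html")
for link in links:
    print(link)
```

In a real run the HTML string would come from whichever HTTP library the project used. A regular expression is enough for simple, regular markup like this, but an HTML parser is usually more robust on messier pages.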

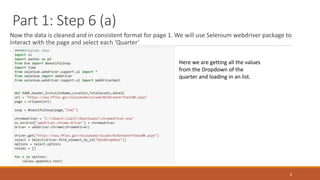

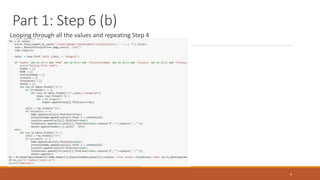

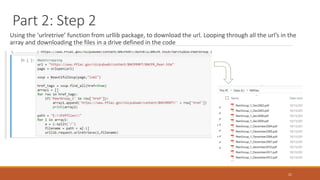

Part 2: Step 6

Loop through the lines in the `flines` array and append the values to the lists that will form the dataframes. Two loops handle the two tables: the first ranges over `flines[1:is_id]` and the second over `flines[is_id:]`. The lists are then converted into a dataframe and written to CSV, as shown in the slide below.

![Part 2: Step 6](https://image.slidesharecdn.com/bankingorganizationsystemicriskreport-170723160724/85/Banking-organization-systemic-risk-report-25-320.jpg)
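A minimal sketch of this step might look like the following, assuming pandas is the dataframe library. Only `flines` and `is_id` come from the slide. The sample lines, the `label,value` layout, the column names, and the value chosen for `is_id` are made up for illustration, because the slide text does not show the real data format.

```python
import pandas as pd

# Hypothetical stand-in for the lines extracted from one PDF.
flines = [
    "Systemic Risk Report",        # header line, skipped by starting at index 1
    "Total exposures,1000",
    "Intra-financial assets,250",
    "Payments activity,5000",
    "Assets under custody,750",
]
is_id = 3  # assumed index at which the second table starts

# First loop: rows of the first table.
table1 = []
for line in flines[1:is_id]:
    label, value = line.rsplit(",", 1)
    table1.append((label.strip(), int(value)))

# Second loop: rows of the second table.
table2 = []
for line in flines[is_id:]:
    label, value = line.rsplit(",", 1)
    table2.append((label.strip(), int(value)))

# Convert each list of rows into a dataframe.
df1 = pd.DataFrame(table1, columns=["indicator", "value"])
df2 = pd.DataFrame(table2, columns=["indicator", "value"])

# With no path argument, to_csv returns the CSV text instead of writing a file.
csv_text = df1.to_csv(index=False)
print(csv_text)
```

Passing a file name such as `df1.to_csv("table1.csv", index=False)` would write the file to disk instead of returning a string.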