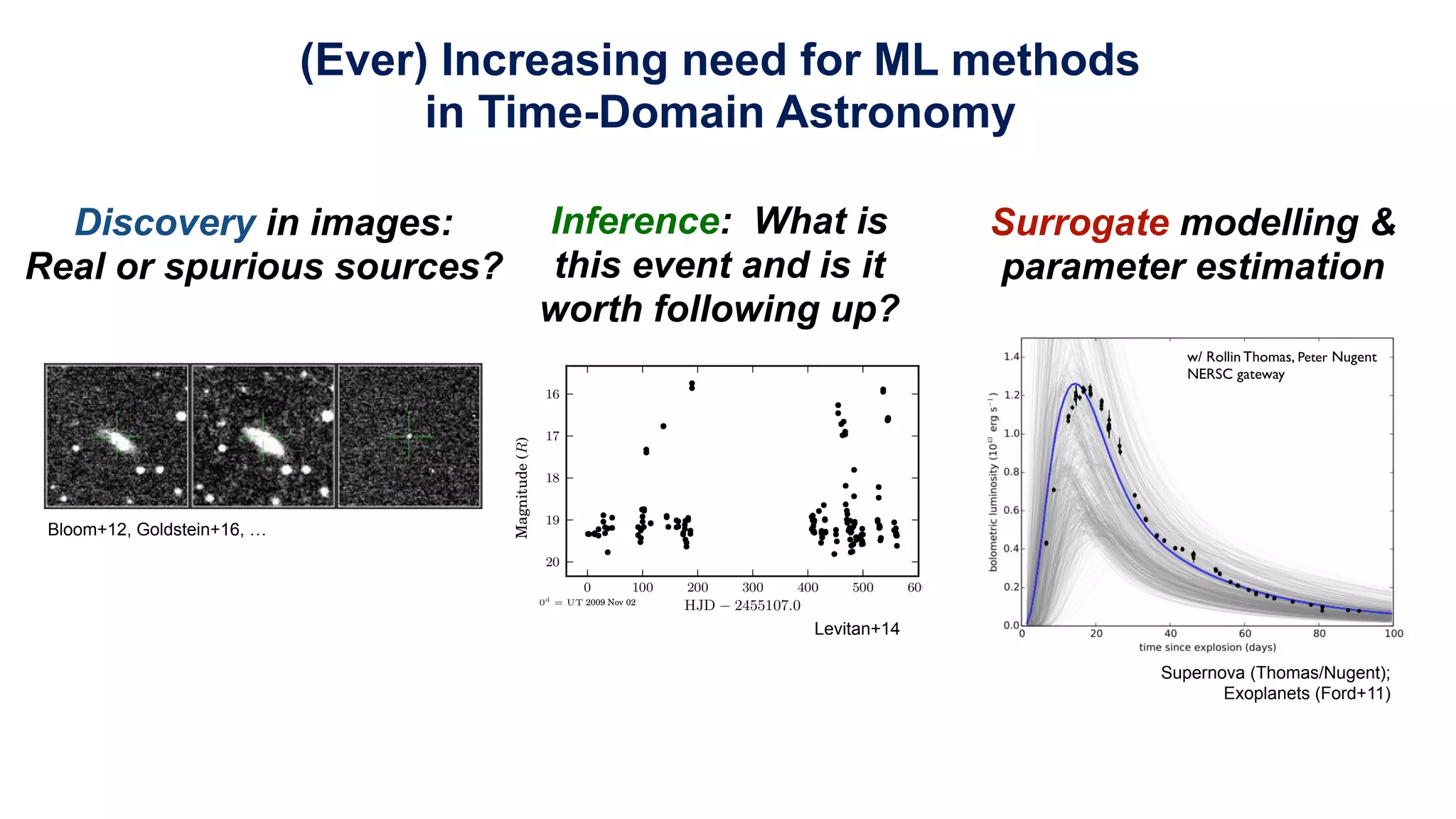

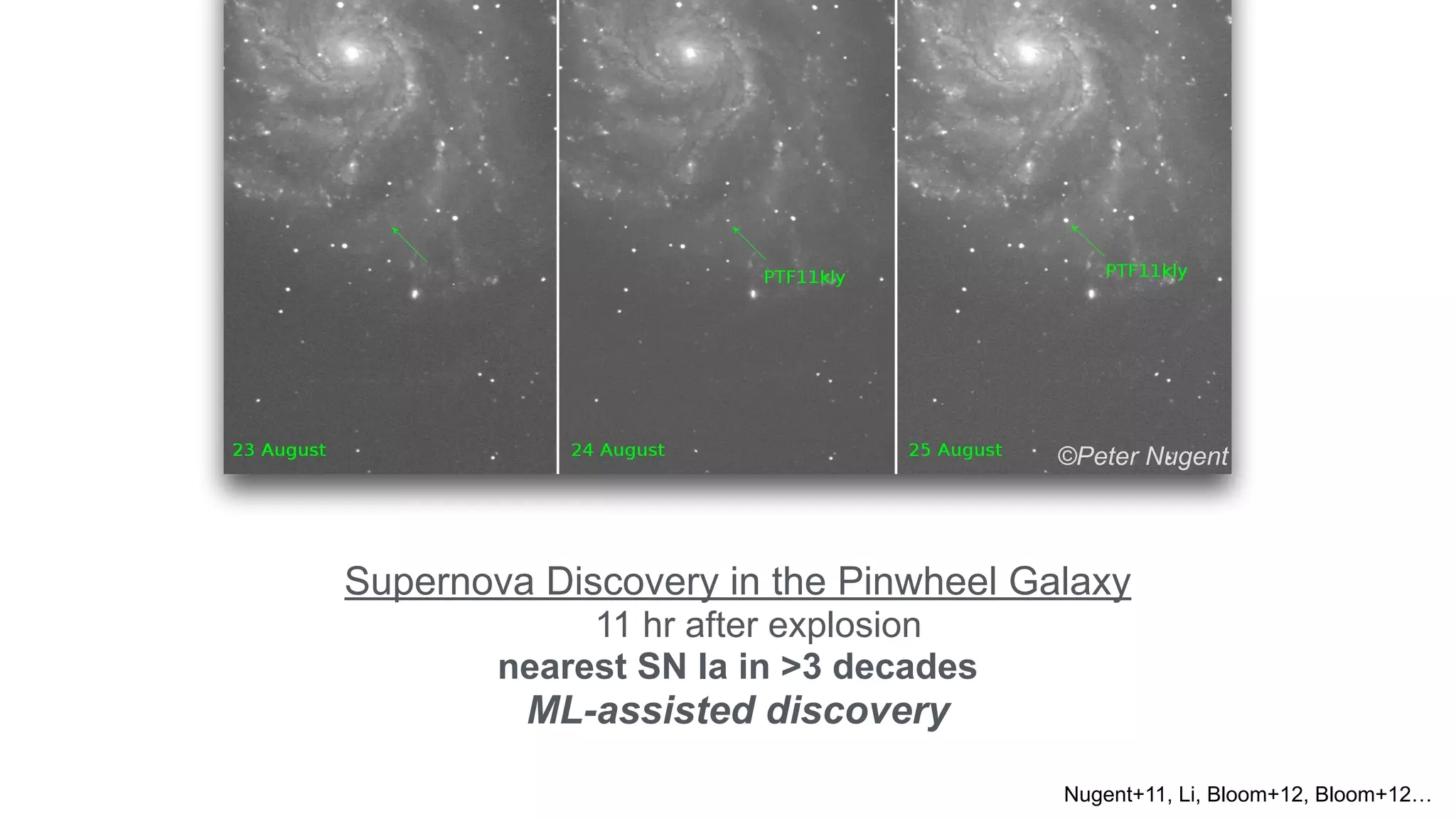

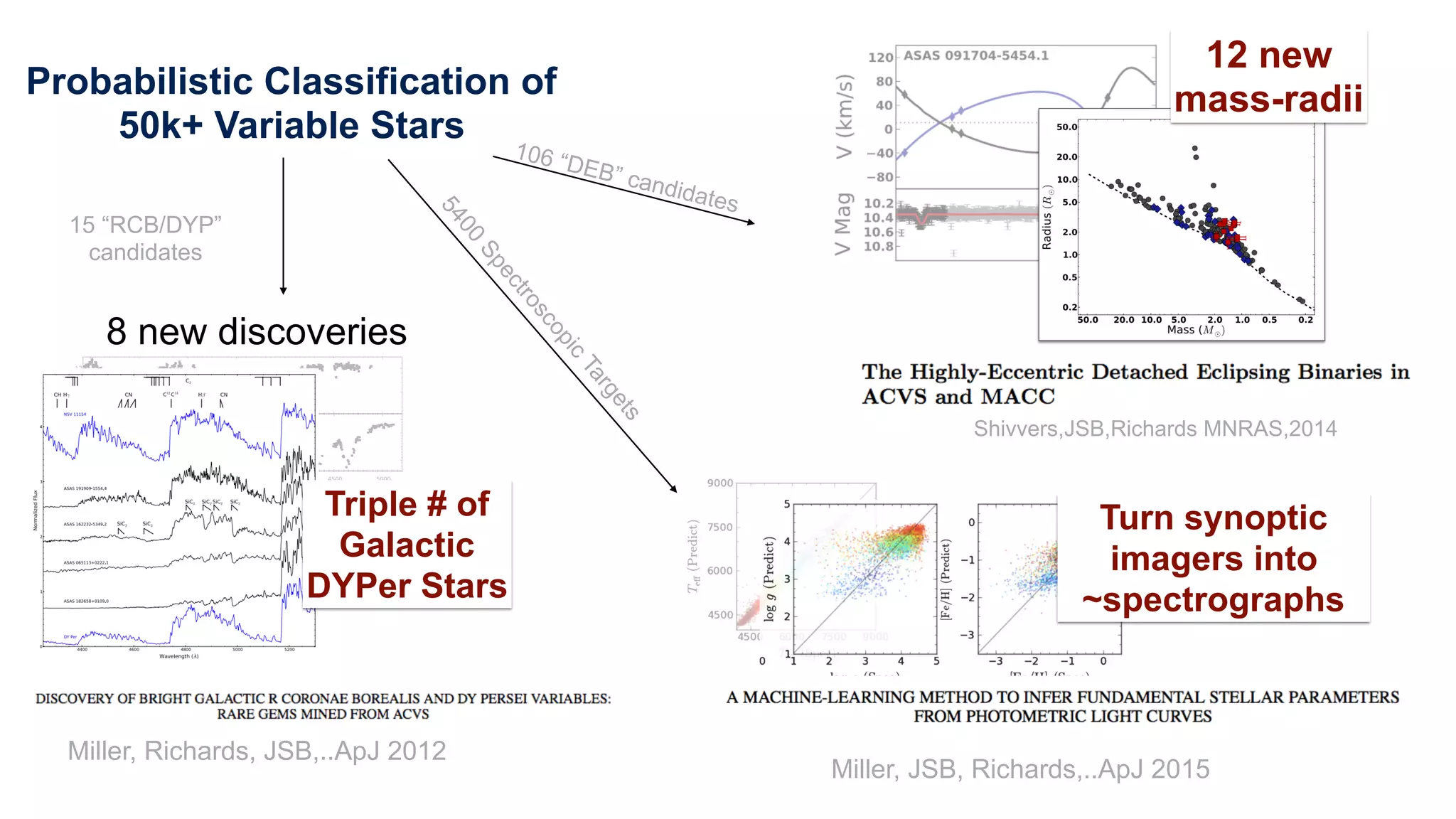

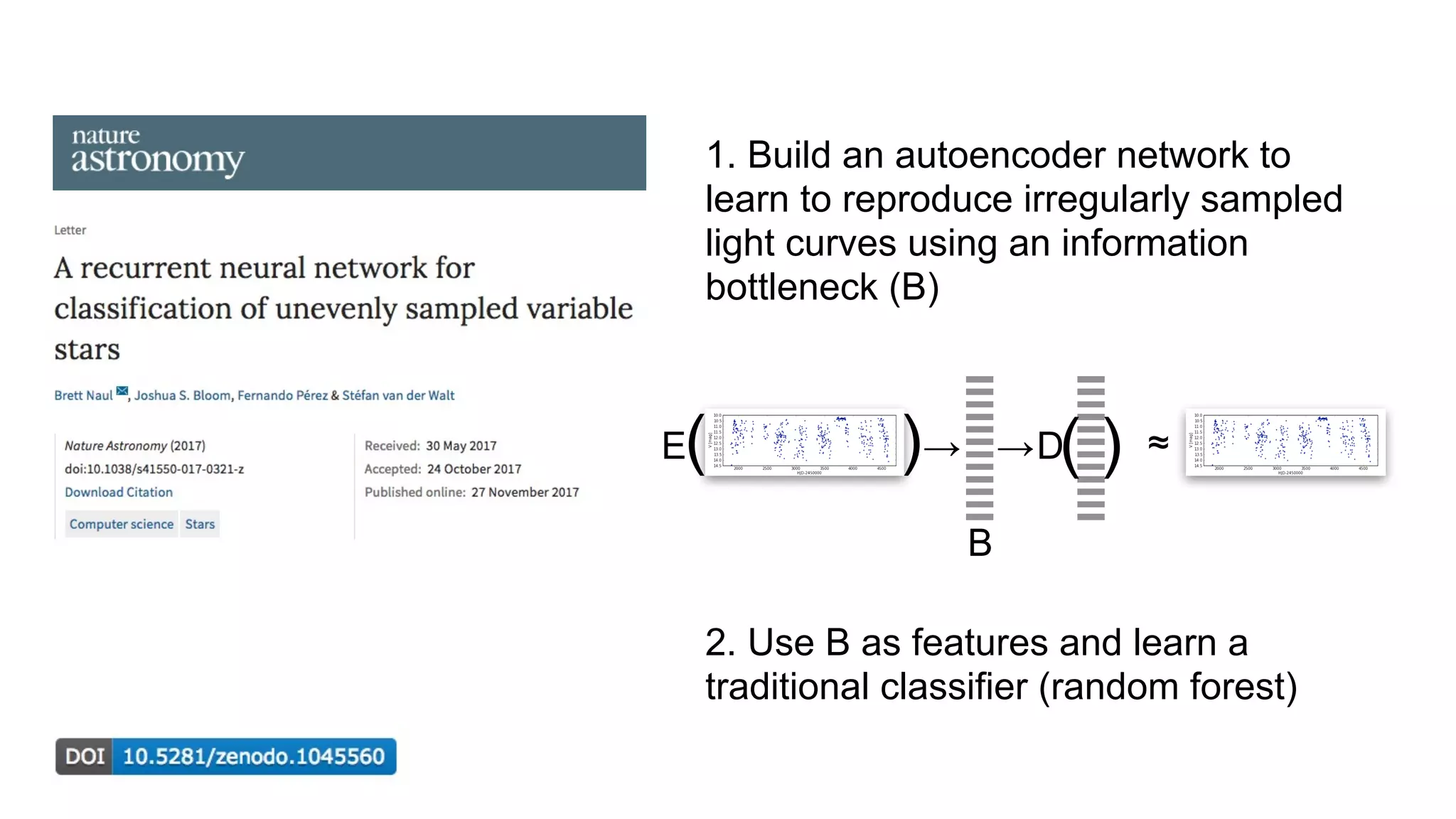

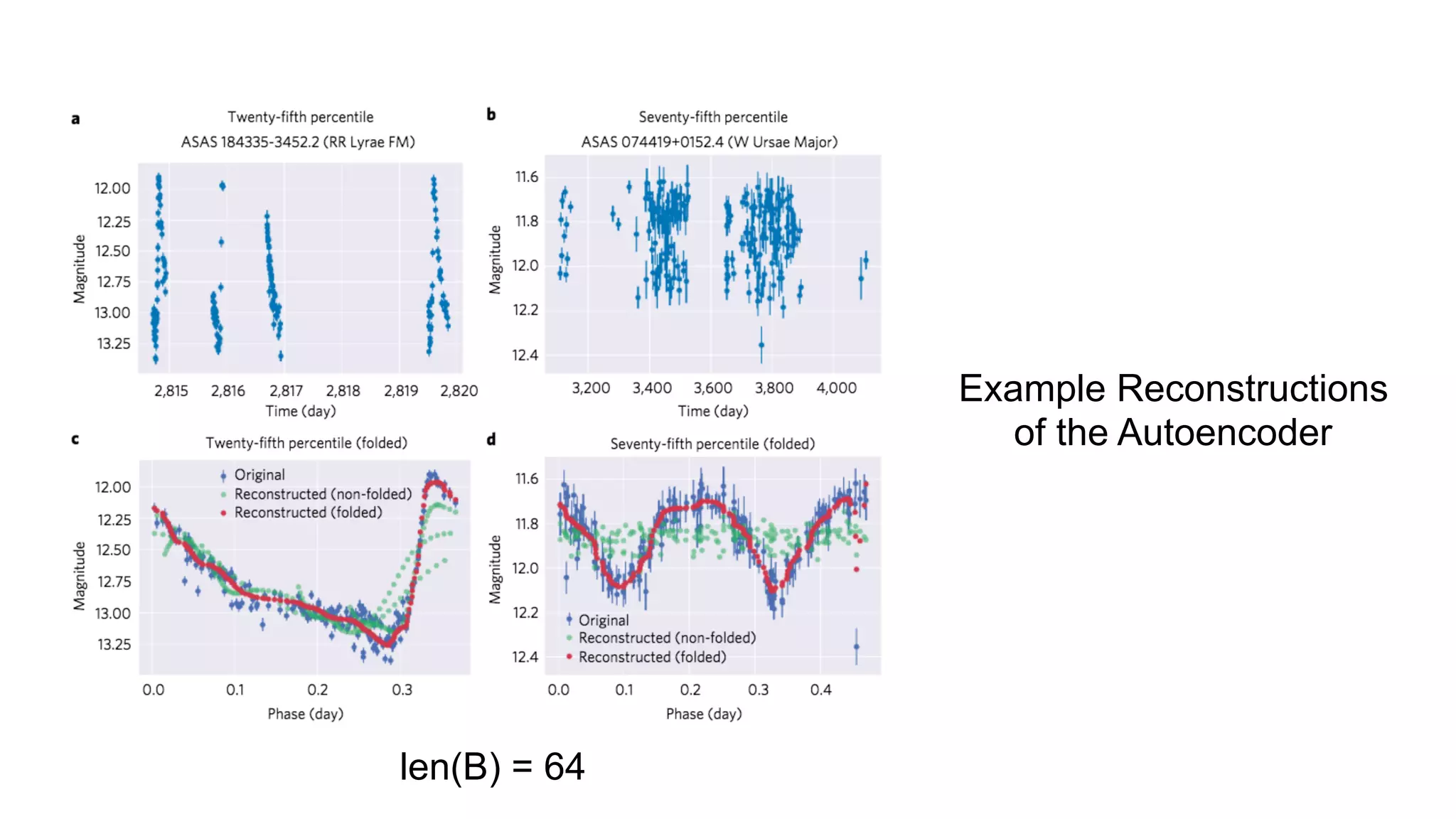

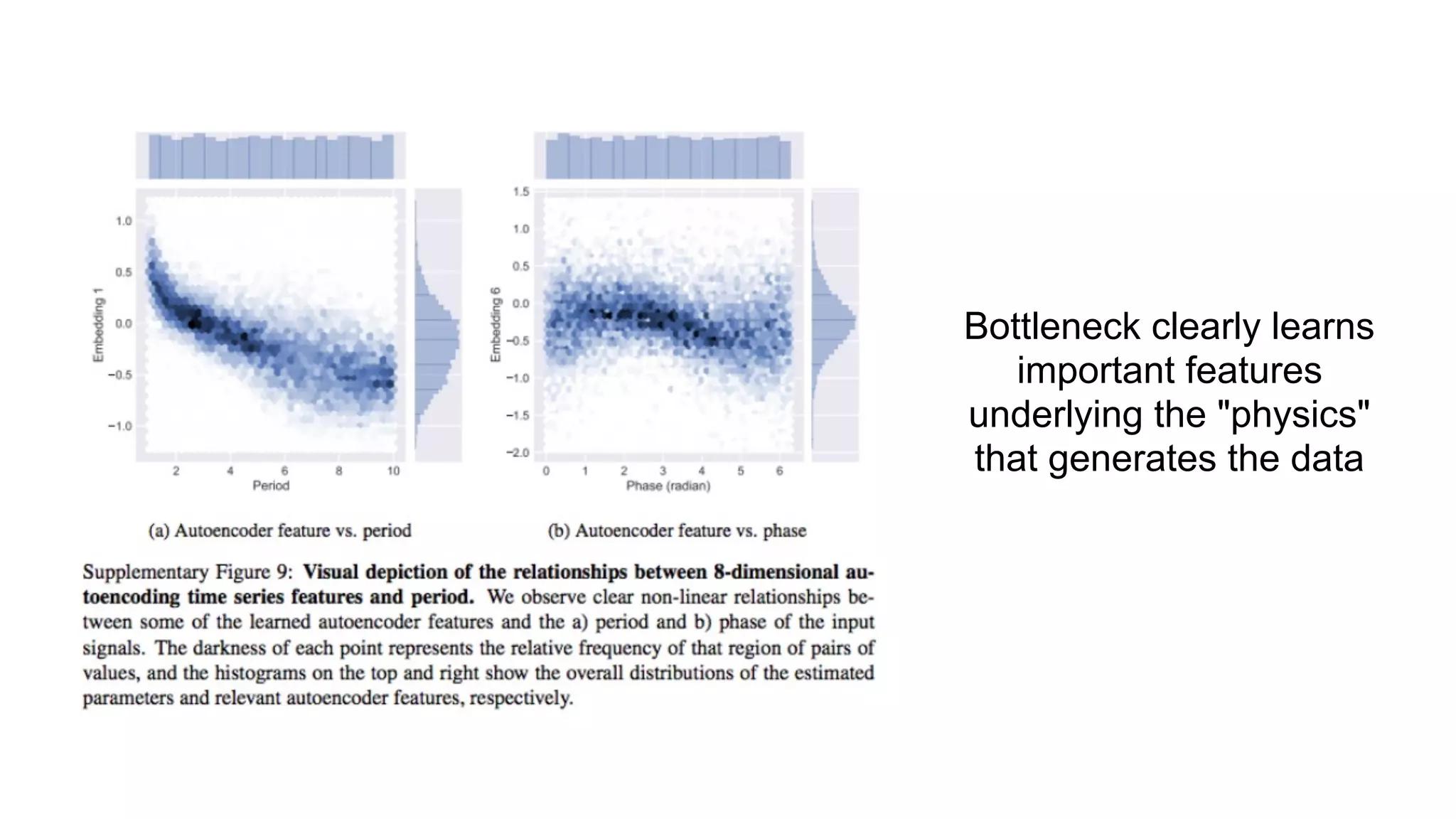

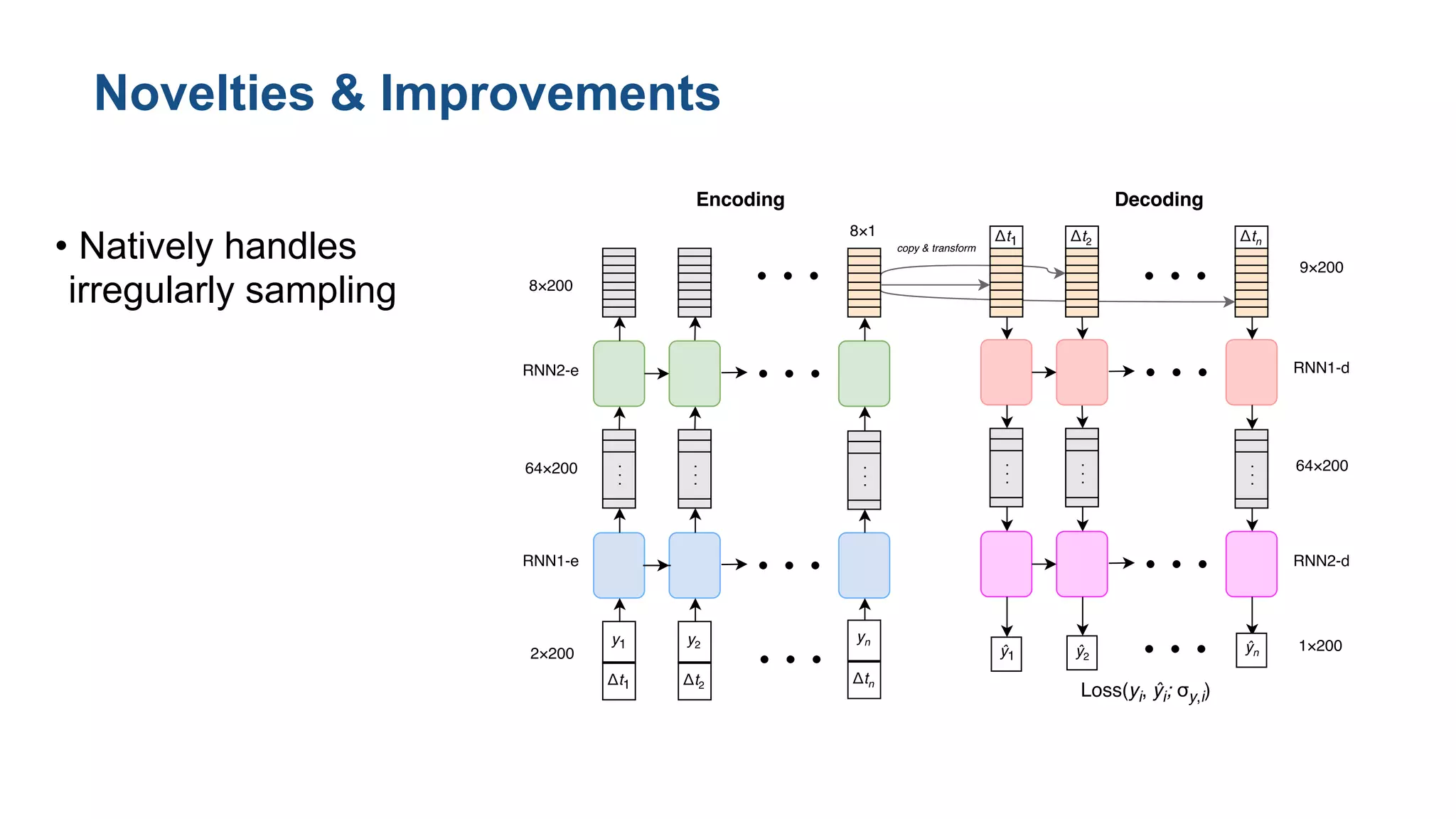

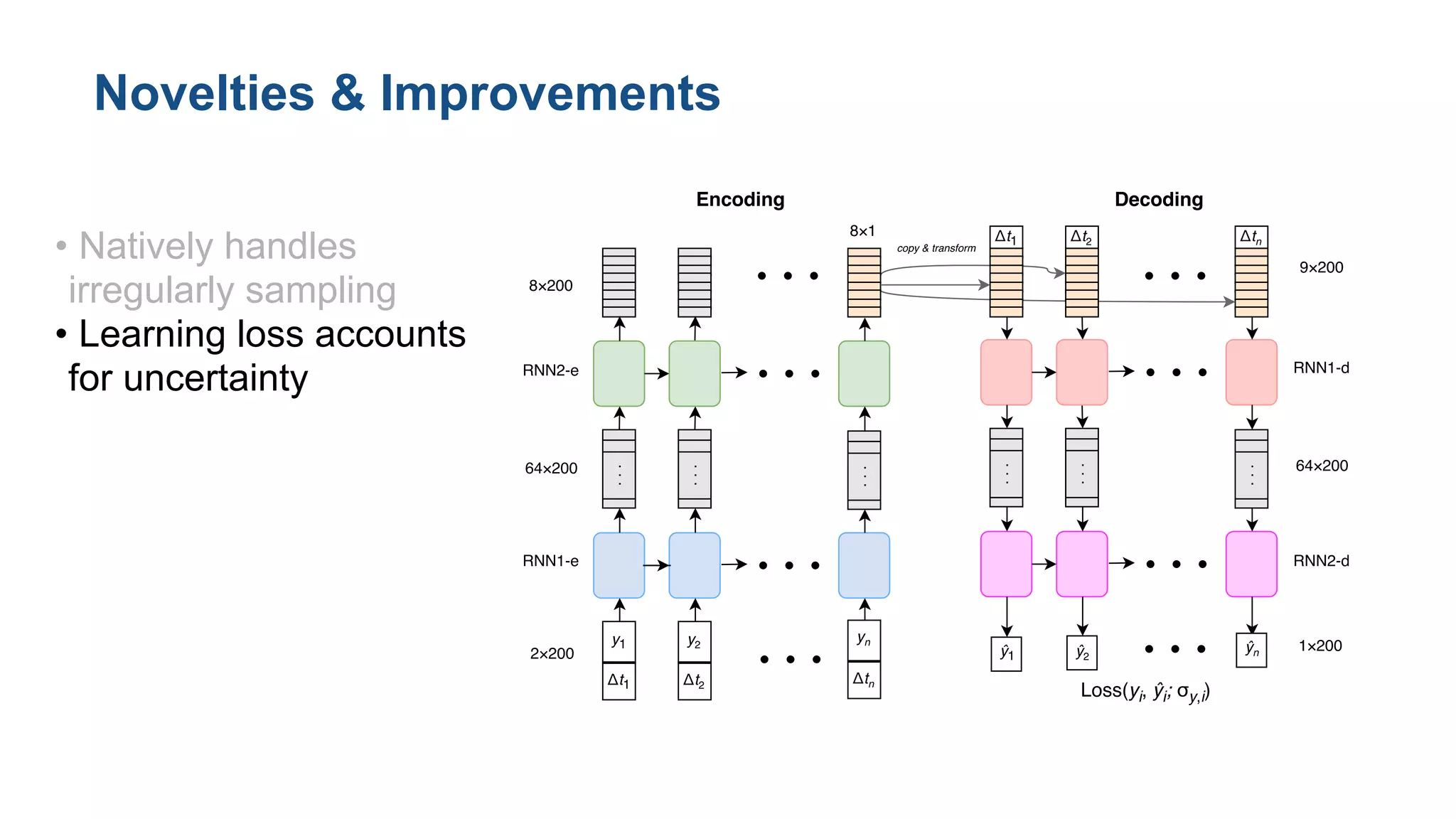

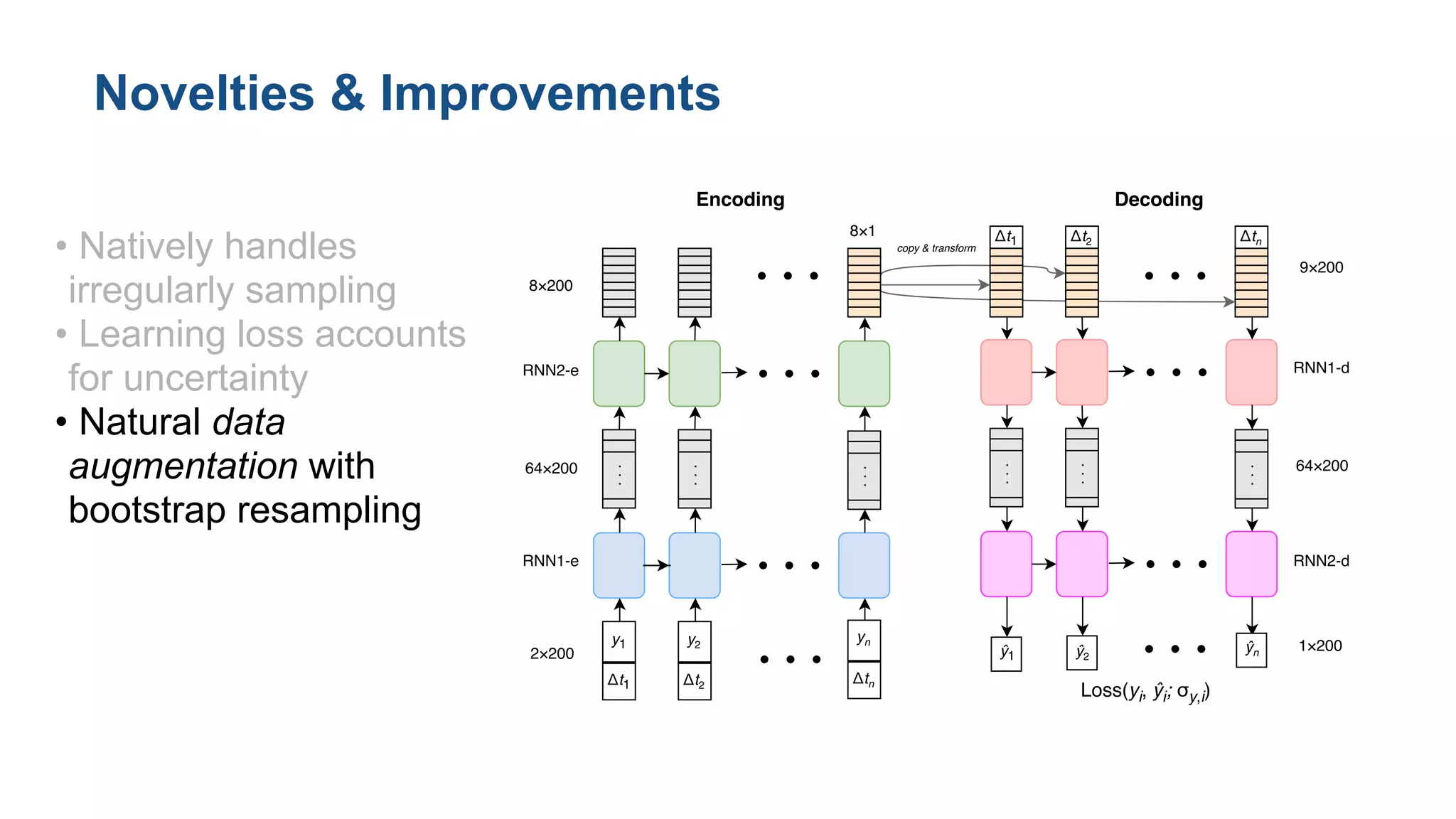

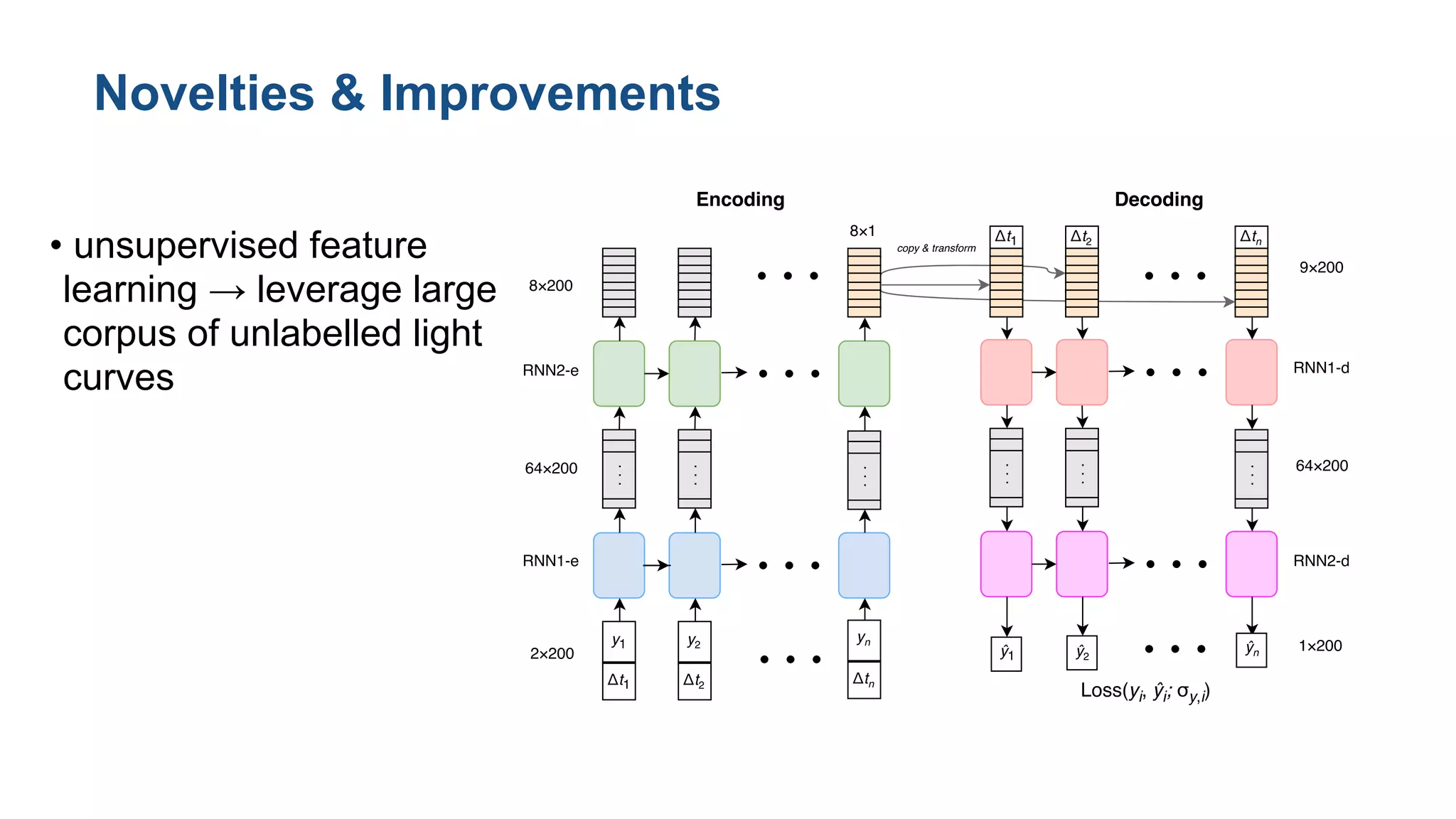

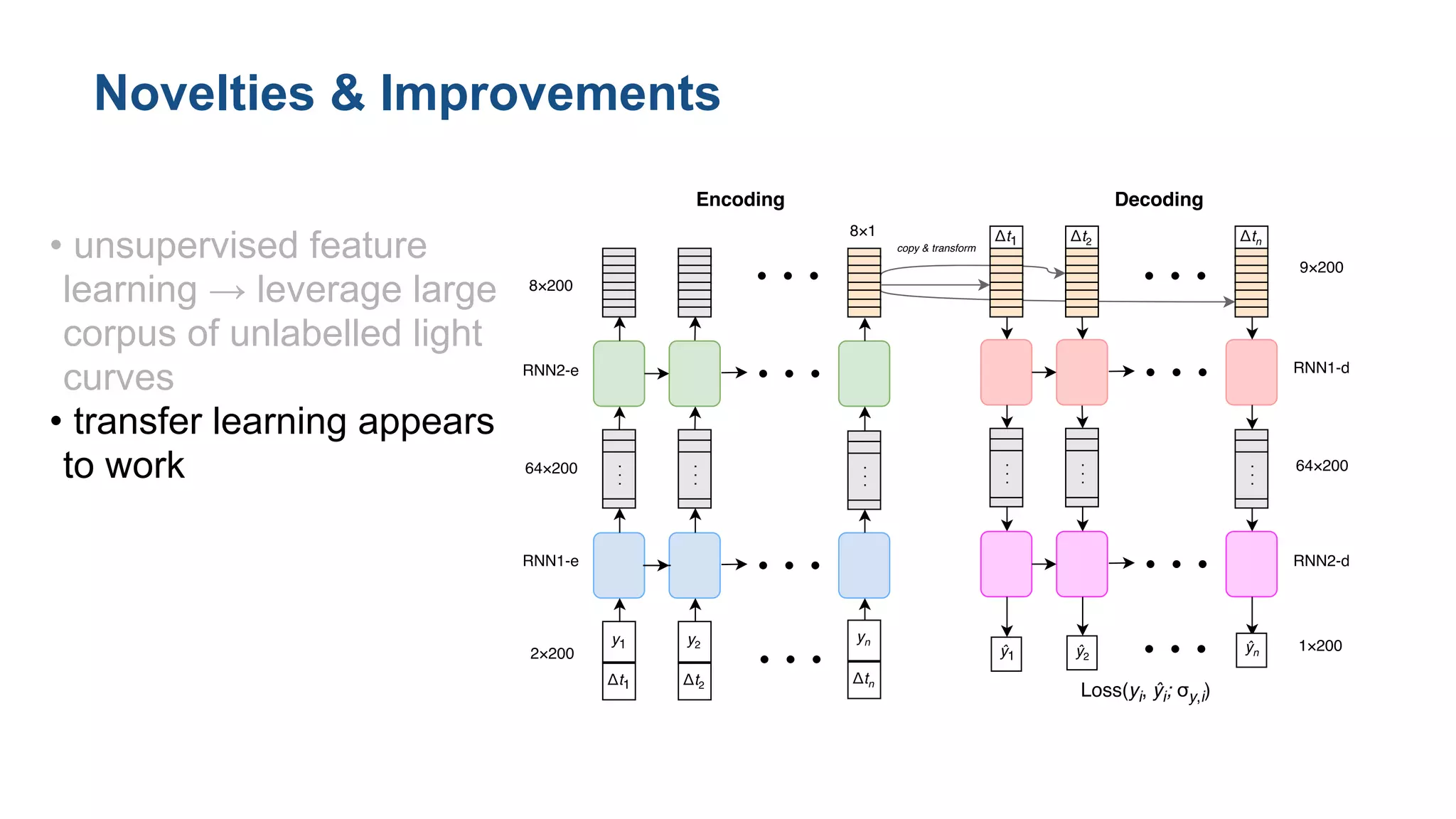

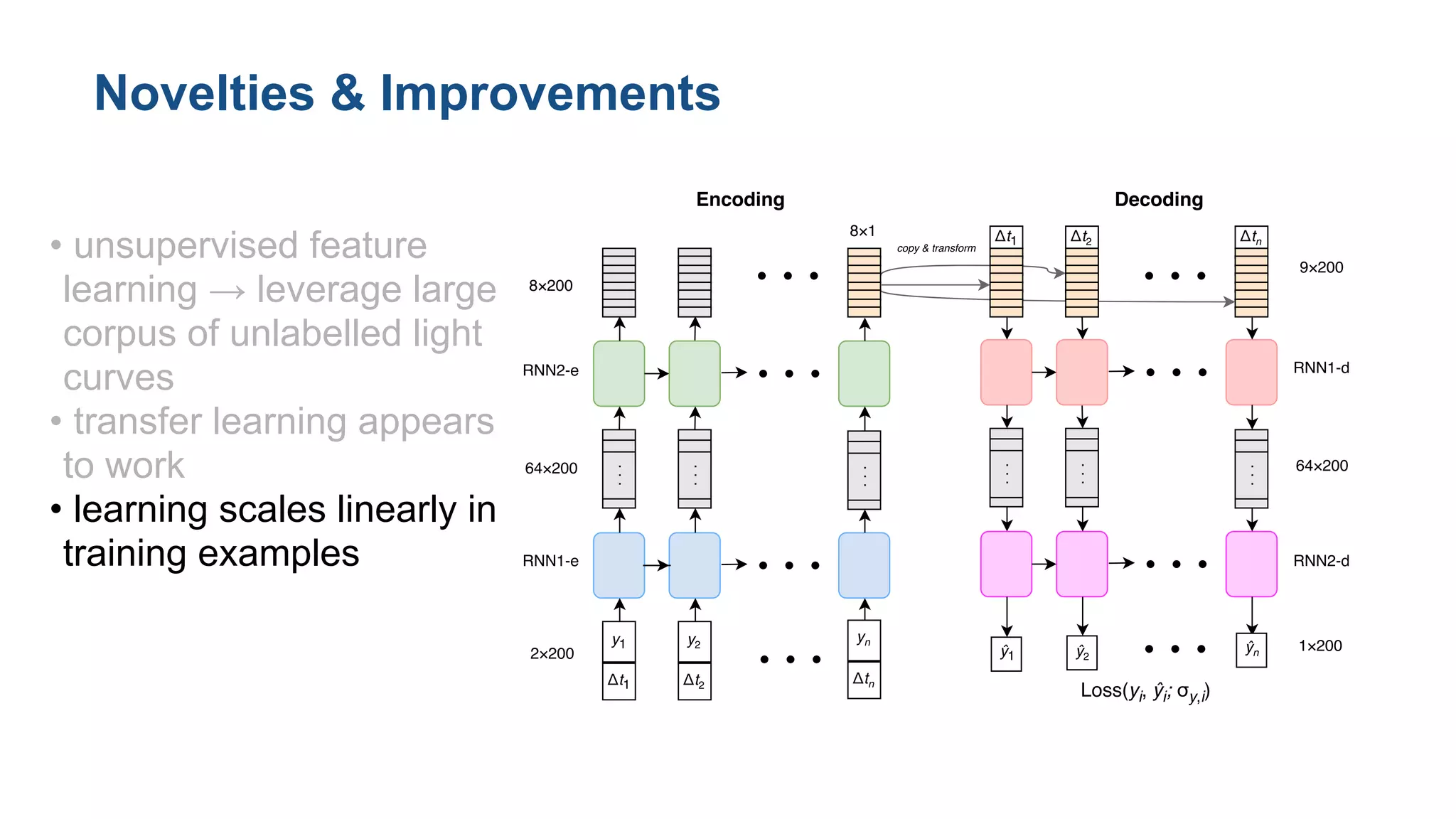

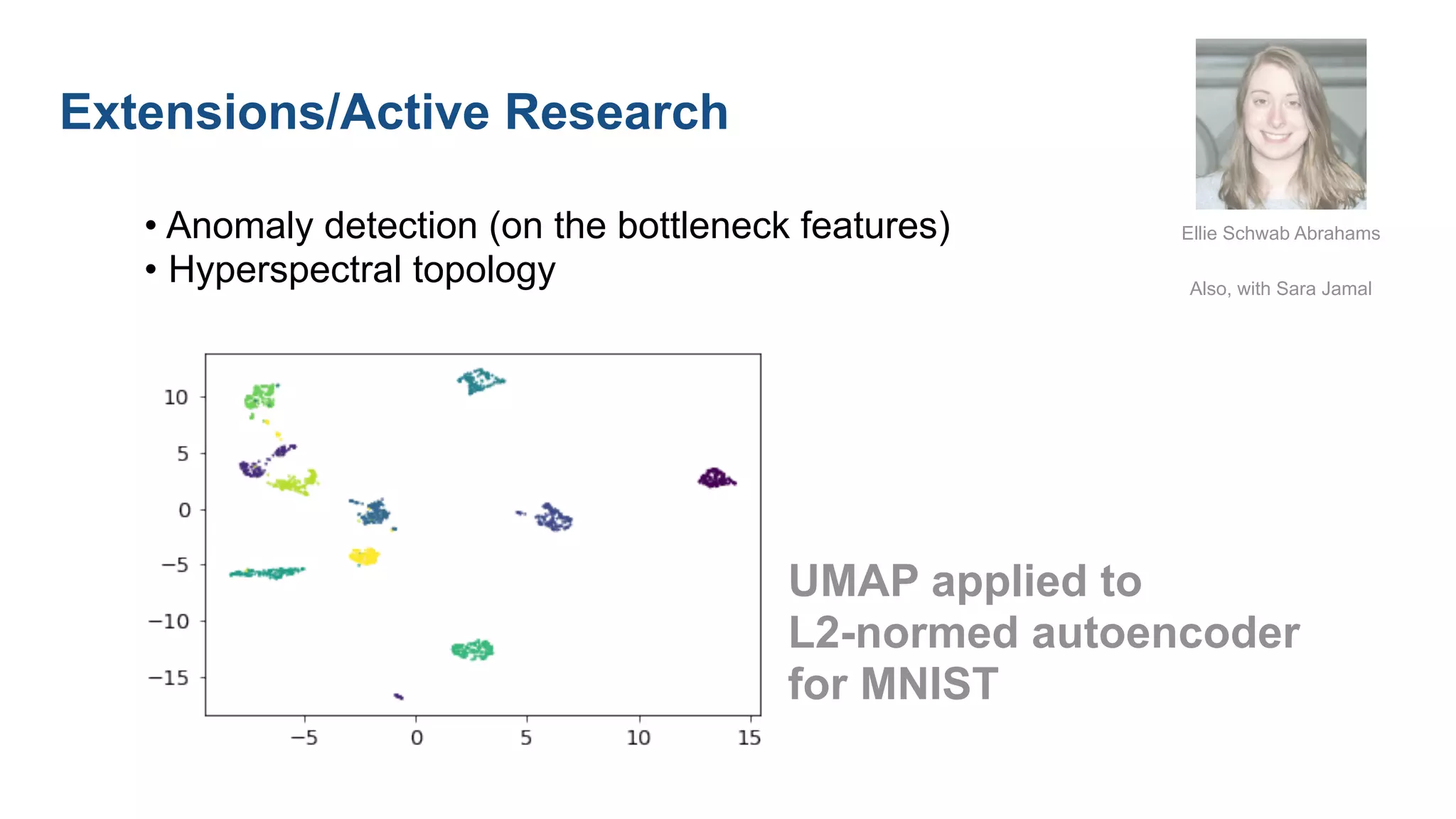

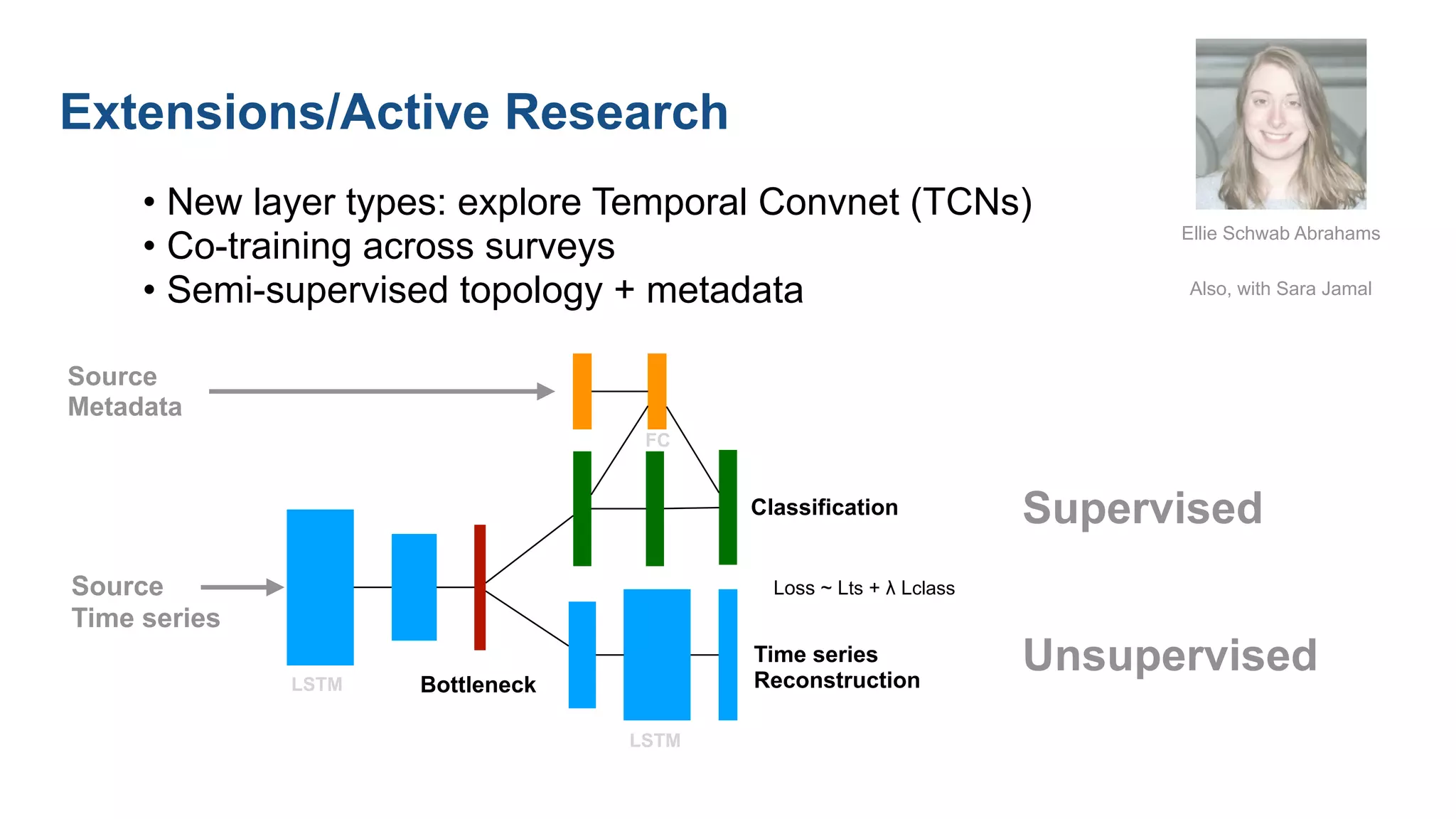

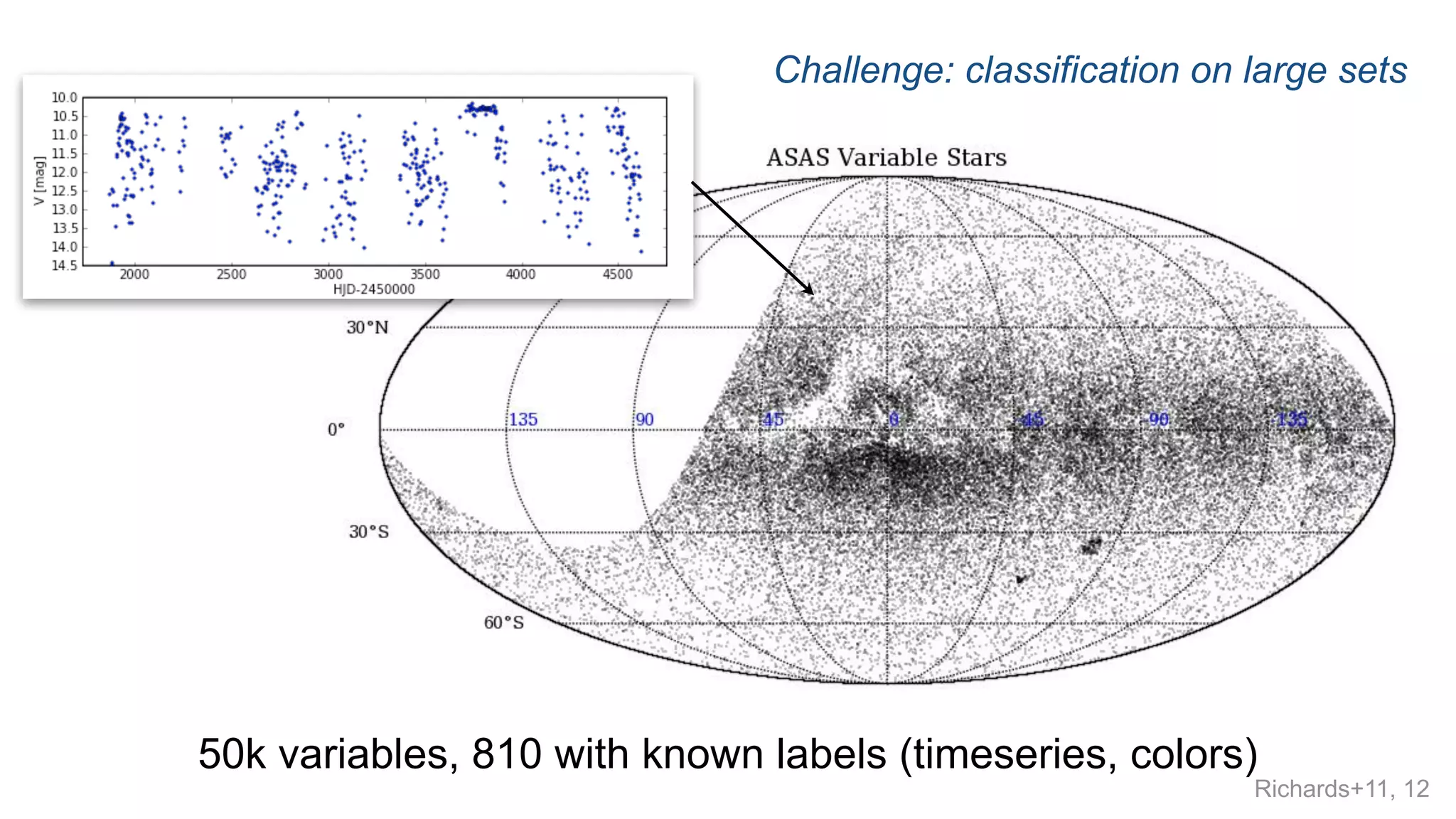

The document discusses the use of autoencoding recurrent neural networks (RNNs) to analyze unevenly sampled time-series data in astronomy. It highlights the difficulty traditional machine learning methods have in handling feature uncertainty, and the benefits of an RNN approach, such as unsupervised feature learning and transfer learning. It also covers improvements in classification tasks on a large dataset of variable stars, which it presents as evidence that the proposed methodology is effective.
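
To make "unevenly sampled" concrete, the sketch below shows one common way such a series can be prepared for a recurrent network: each observation is paired with the time gap since the previous one, and sequences of different lengths are padded to a common length with a mask. This is an illustrative assumption, not necessarily the preprocessing used in the work summarized above; the function names `to_delta_sequence` and `pad_batch` are hypothetical.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]


def to_delta_sequence(times: Sequence[float], values: Sequence[float]) -> List[Pair]:
    """Turn an unevenly sampled series into (time gap, value) pairs.

    Hypothetical helper: the first gap is set to 0.0 by convention.
    """
    if len(times) != len(values):
        raise ValueError("times and values must have the same length")
    # Sort observations by time so that every gap is non-negative.
    order = sorted(range(len(times)), key=lambda i: times[i])
    pairs: List[Pair] = []
    prev_t = None
    for i in order:
        dt = 0.0 if prev_t is None else times[i] - prev_t
        pairs.append((dt, values[i]))
        prev_t = times[i]
    return pairs


def pad_batch(seqs: Sequence[Sequence[Pair]], pad: Pair = (0.0, 0.0)):
    """Pad sequences to equal length; the mask marks real observations with 1."""
    max_len = max((len(s) for s in seqs), default=0)
    padded = [list(s) + [pad] * (max_len - len(s)) for s in seqs]
    mask = [[1] * len(s) + [0] * (max_len - len(s)) for s in seqs]
    return padded, mask


# Two toy series with different lengths and irregular spacing.
a = to_delta_sequence([0.0, 1.5, 4.0], [10.0, 10.5, 9.5])
b = to_delta_sequence([2.0, 0.0], [7.0, 8.0])
batch, mask = pad_batch([a, b])
```

An autoencoder would then be trained to reconstruct the values from a fixed-length encoding of these pairs, with the mask keeping padded steps out of the loss; that training step is not shown here.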