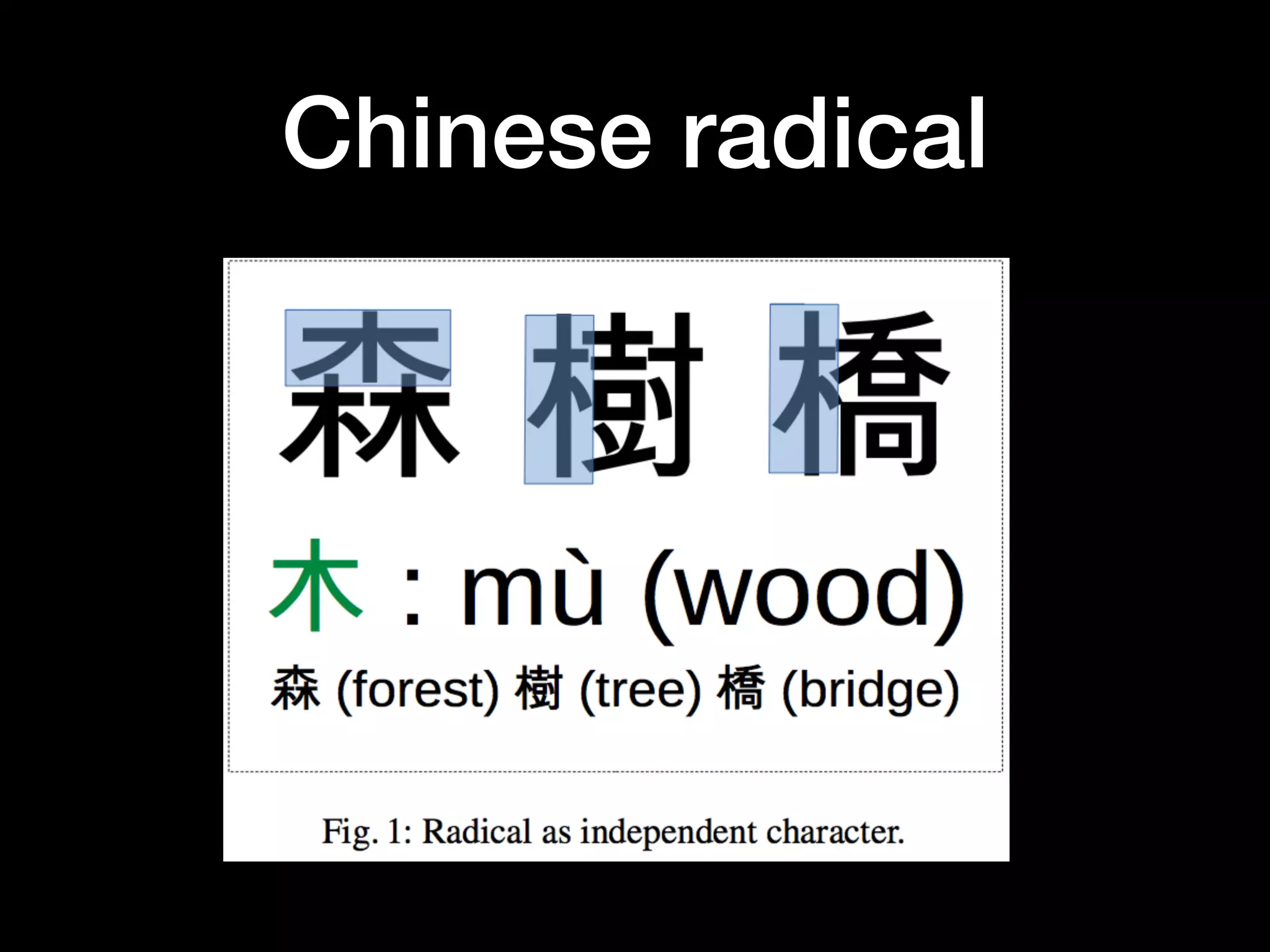

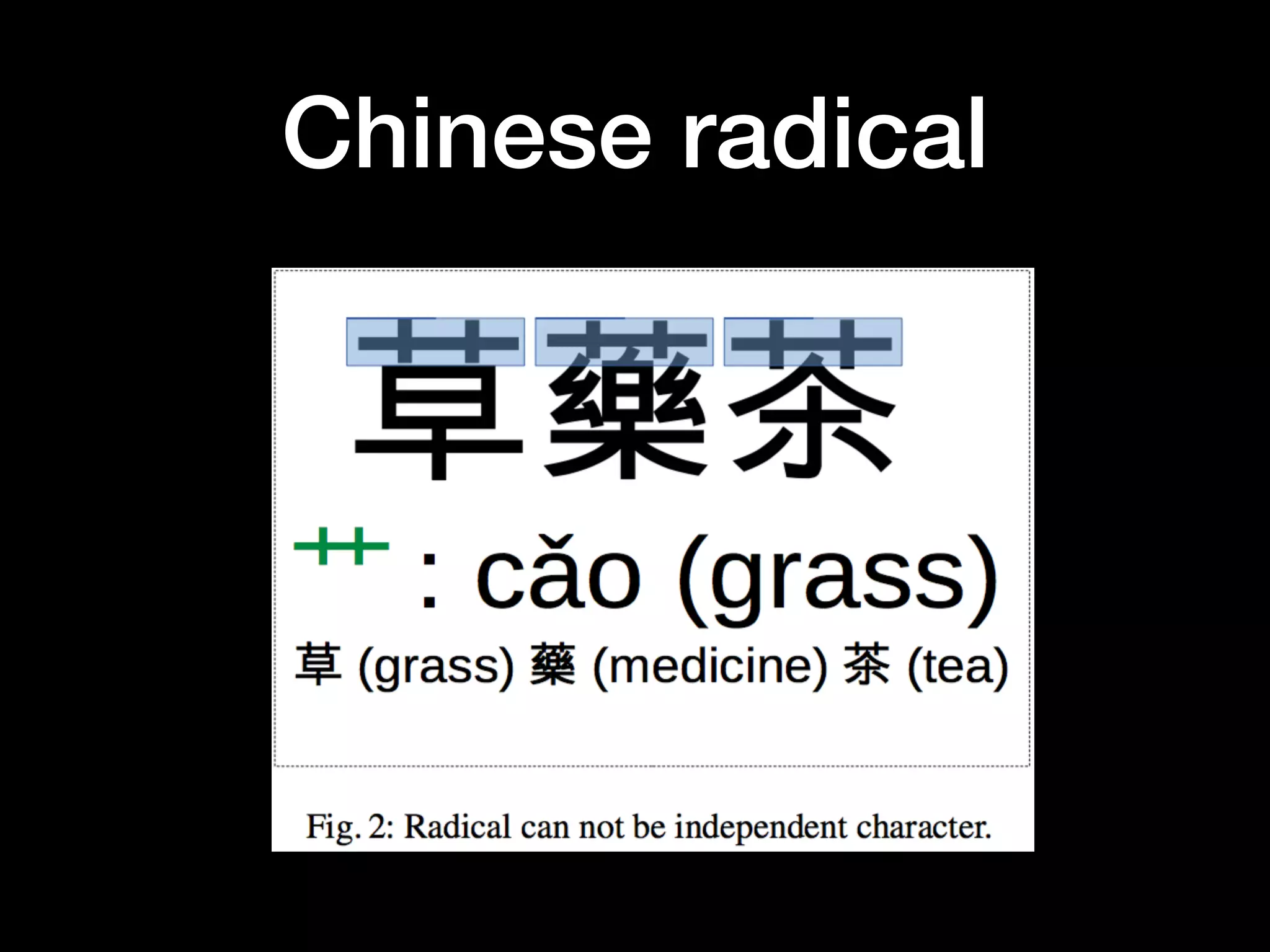

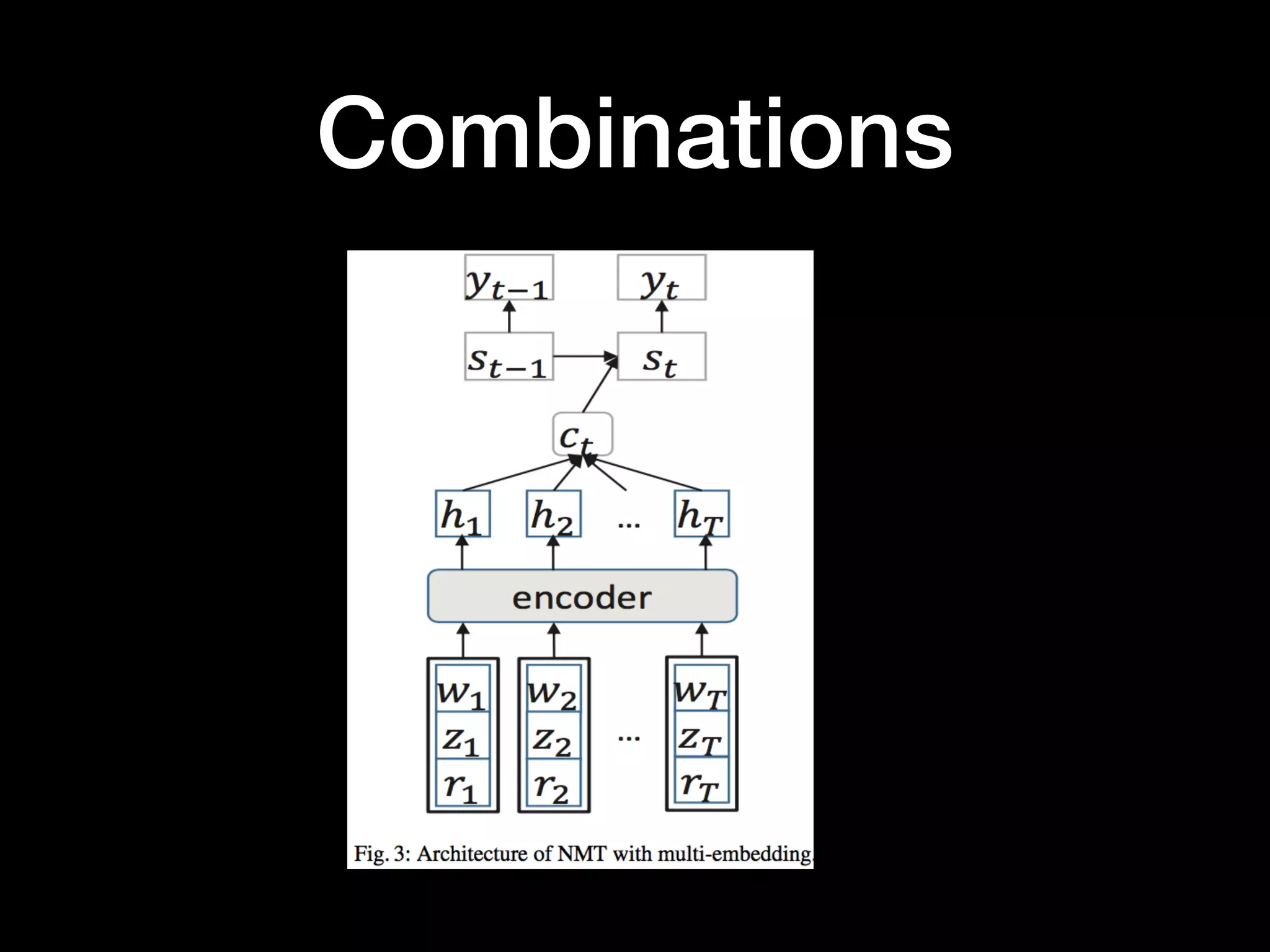

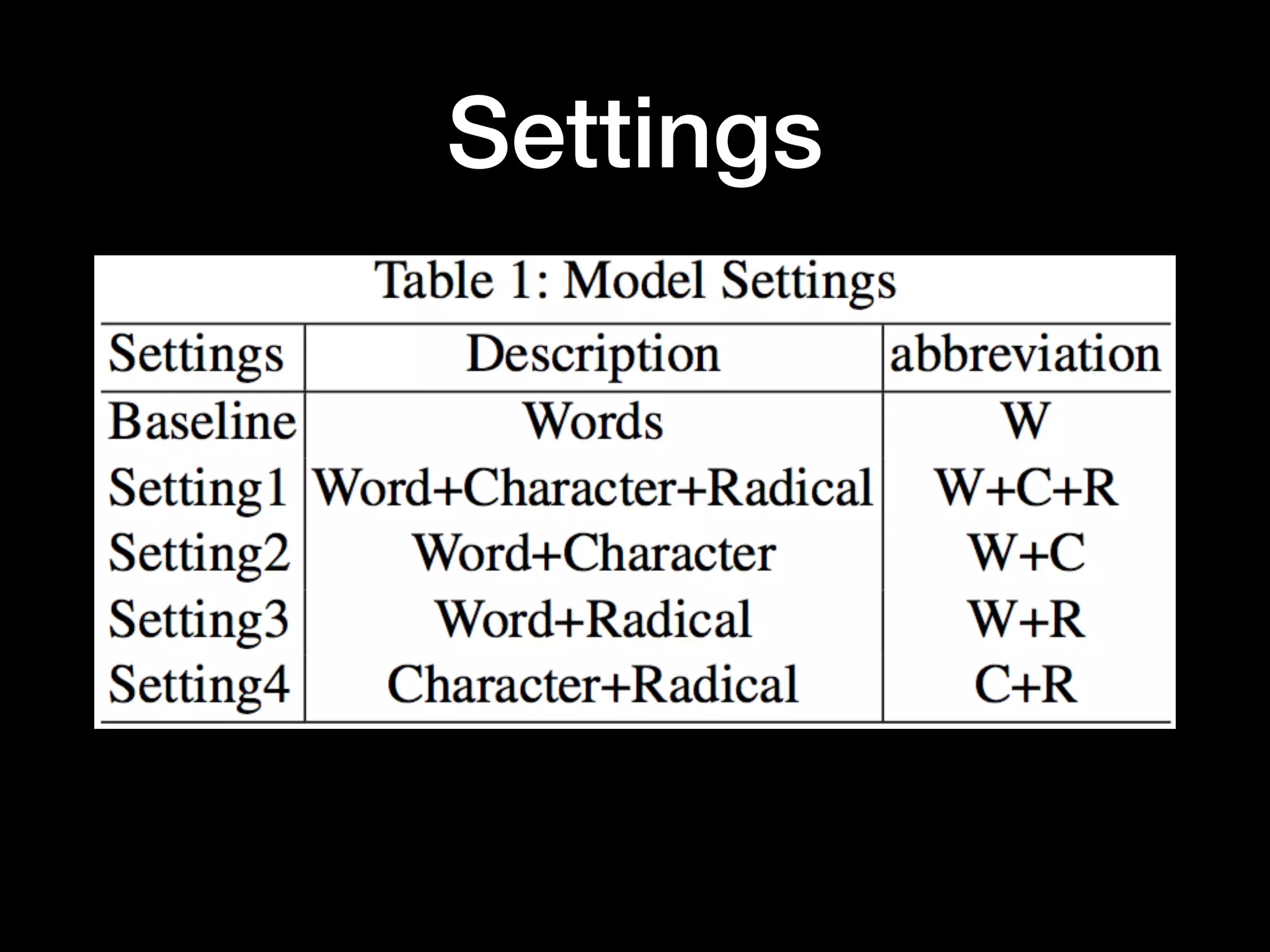

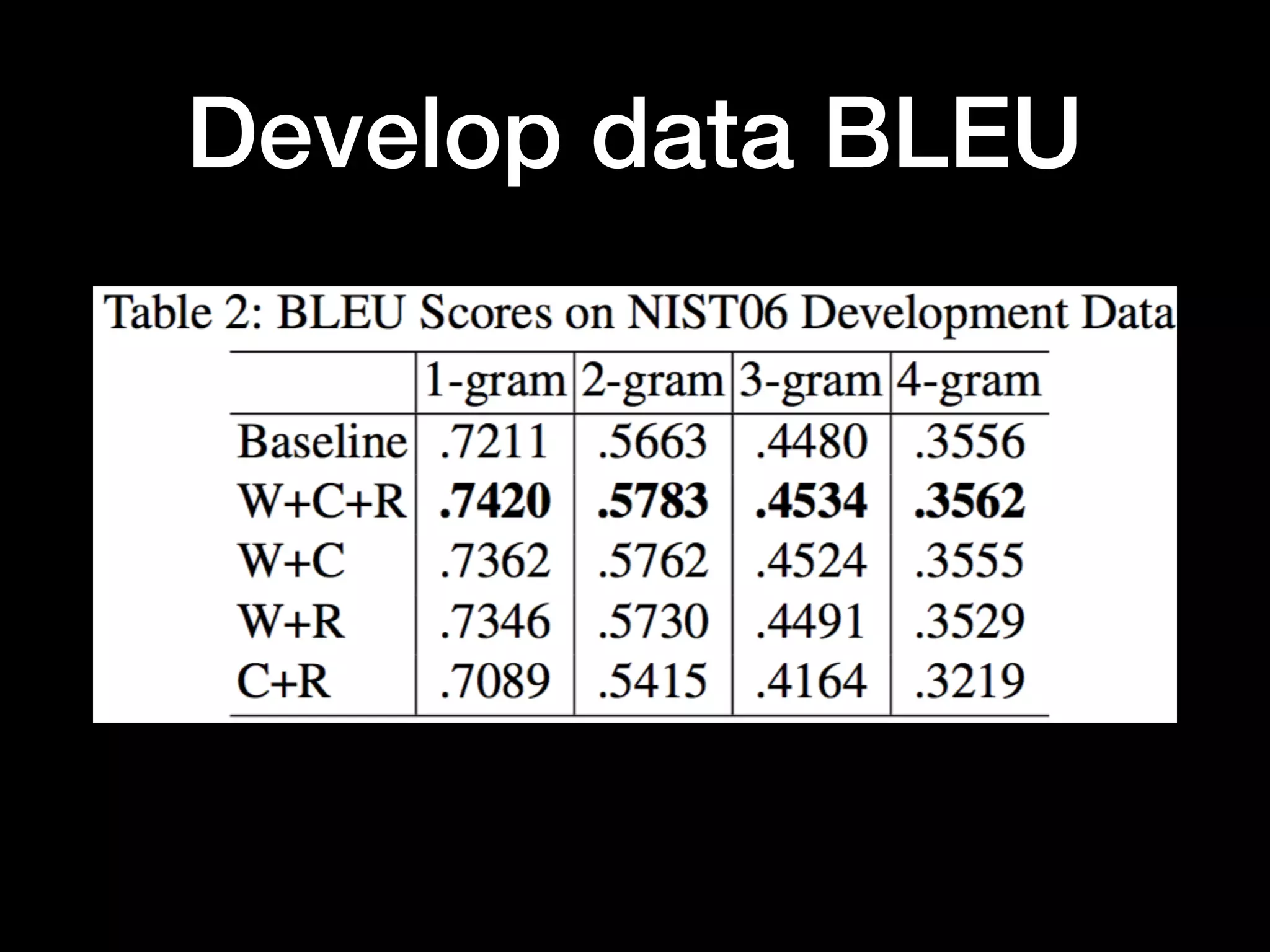

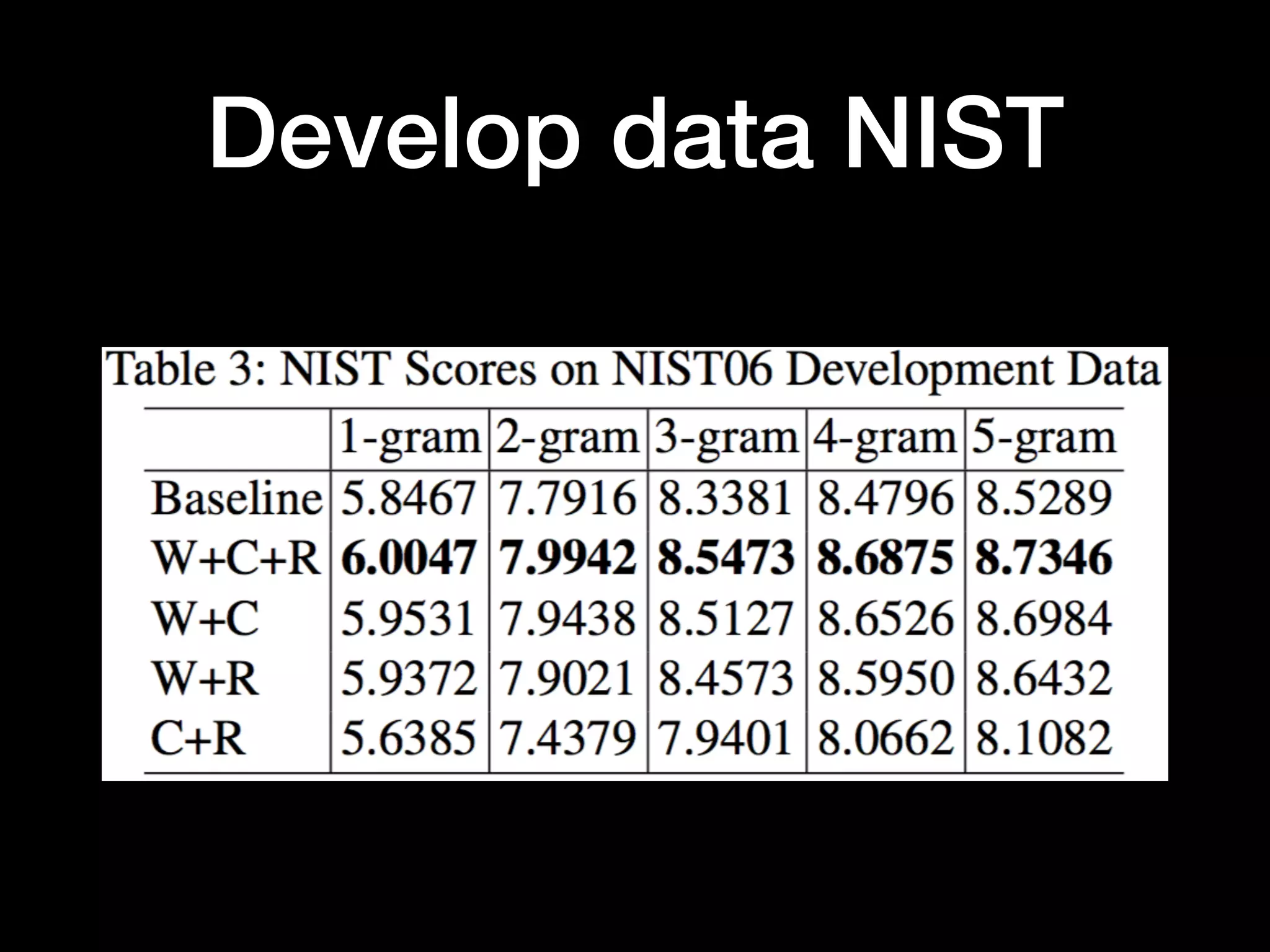

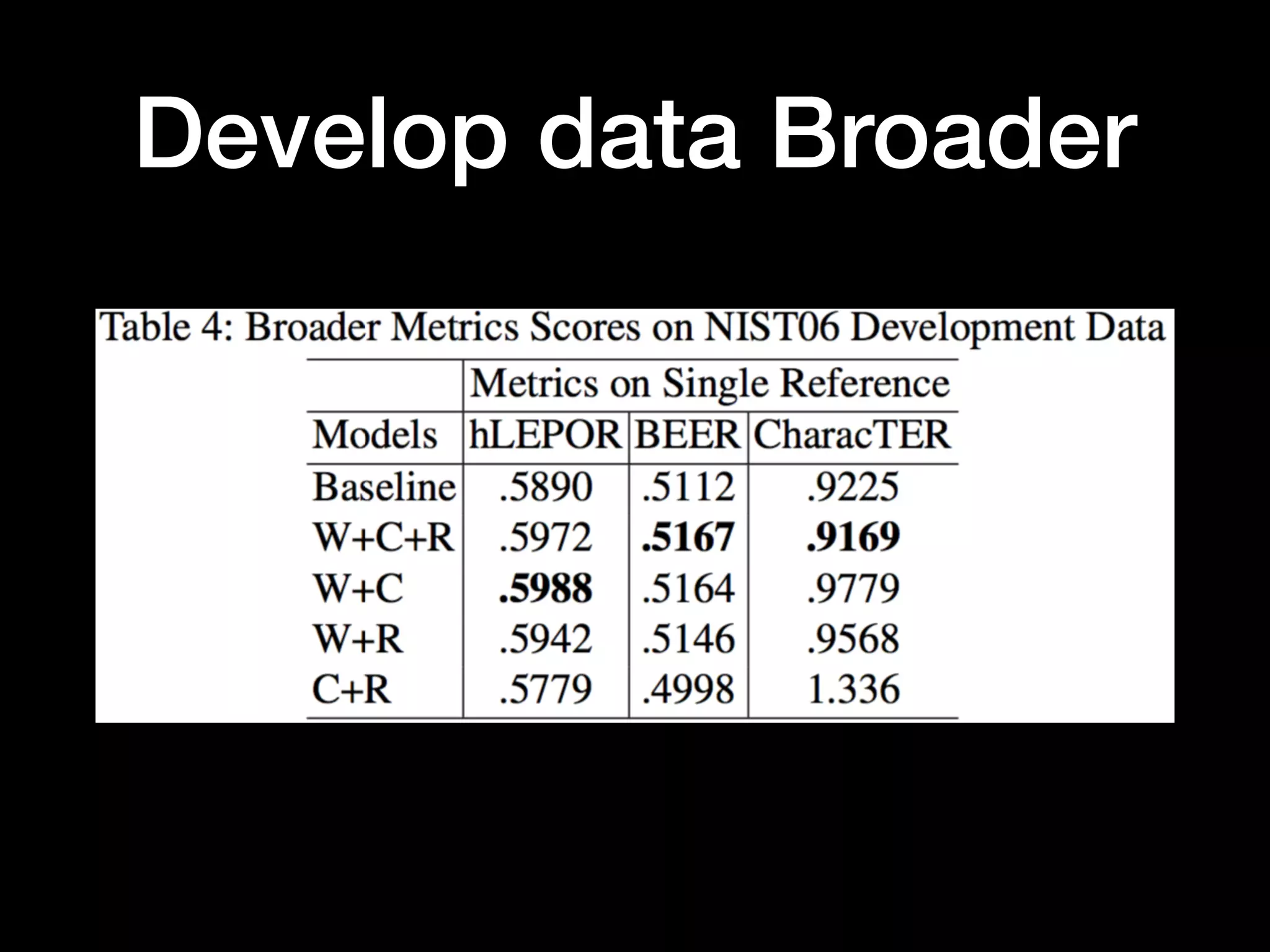

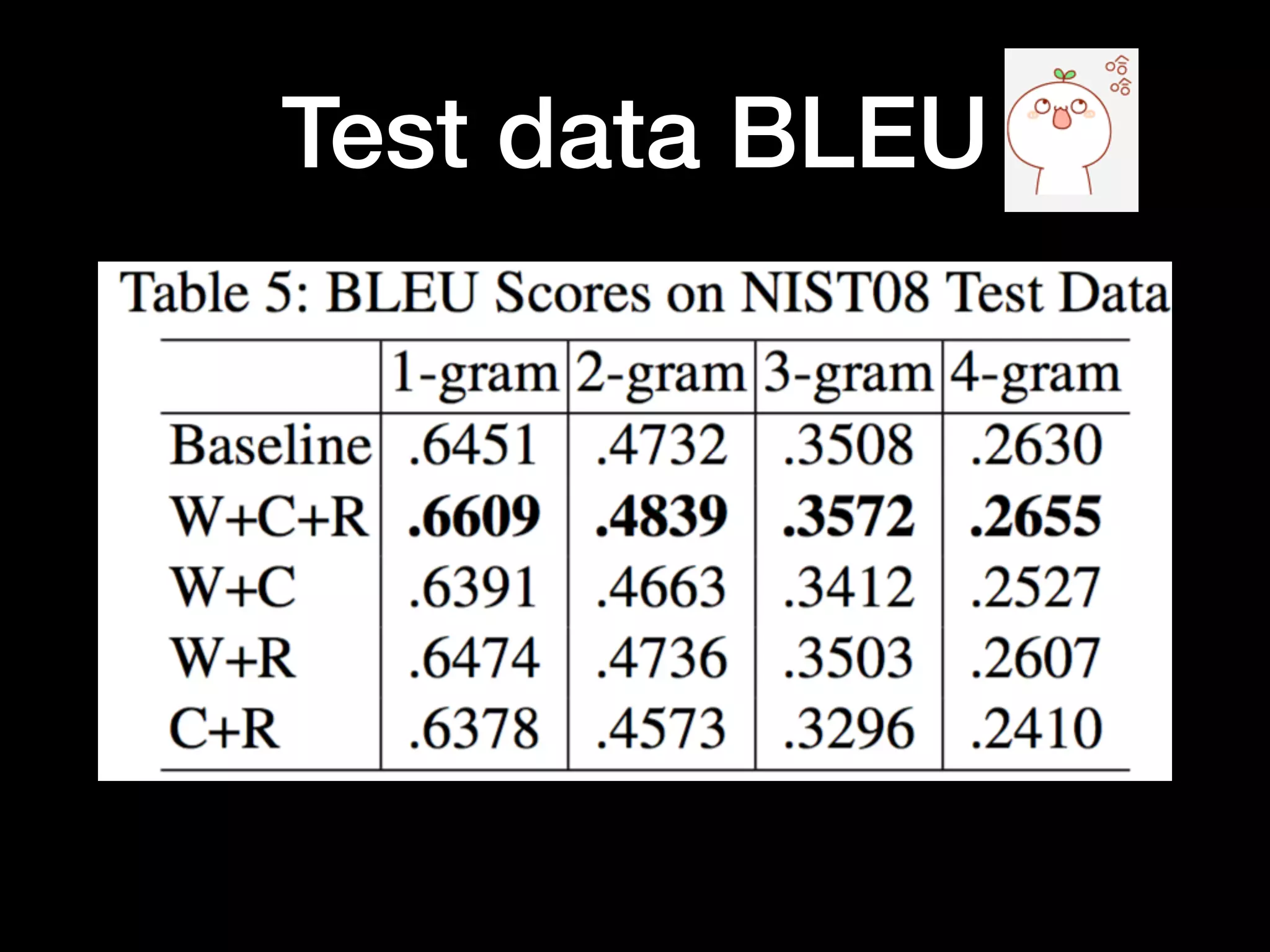

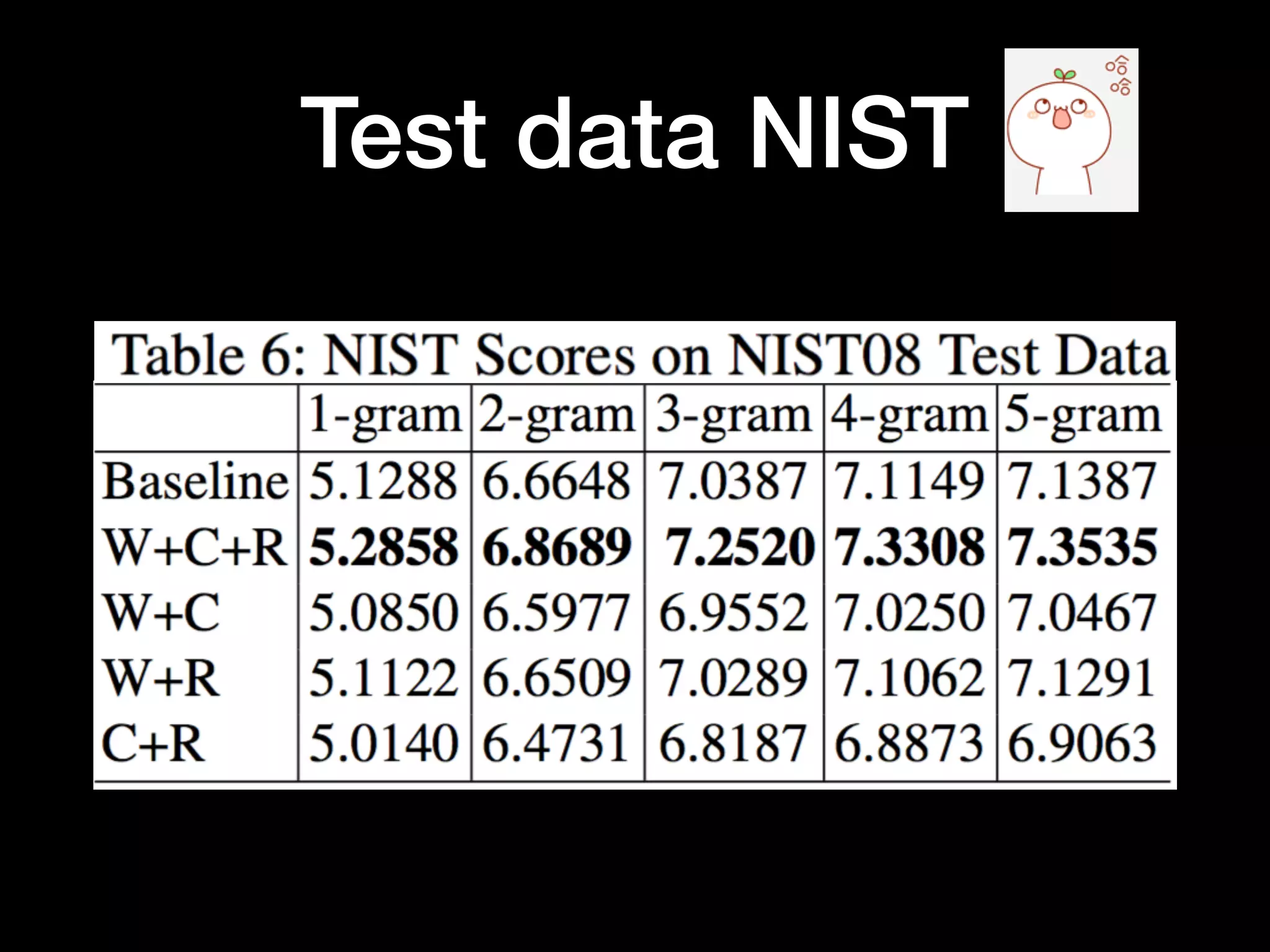

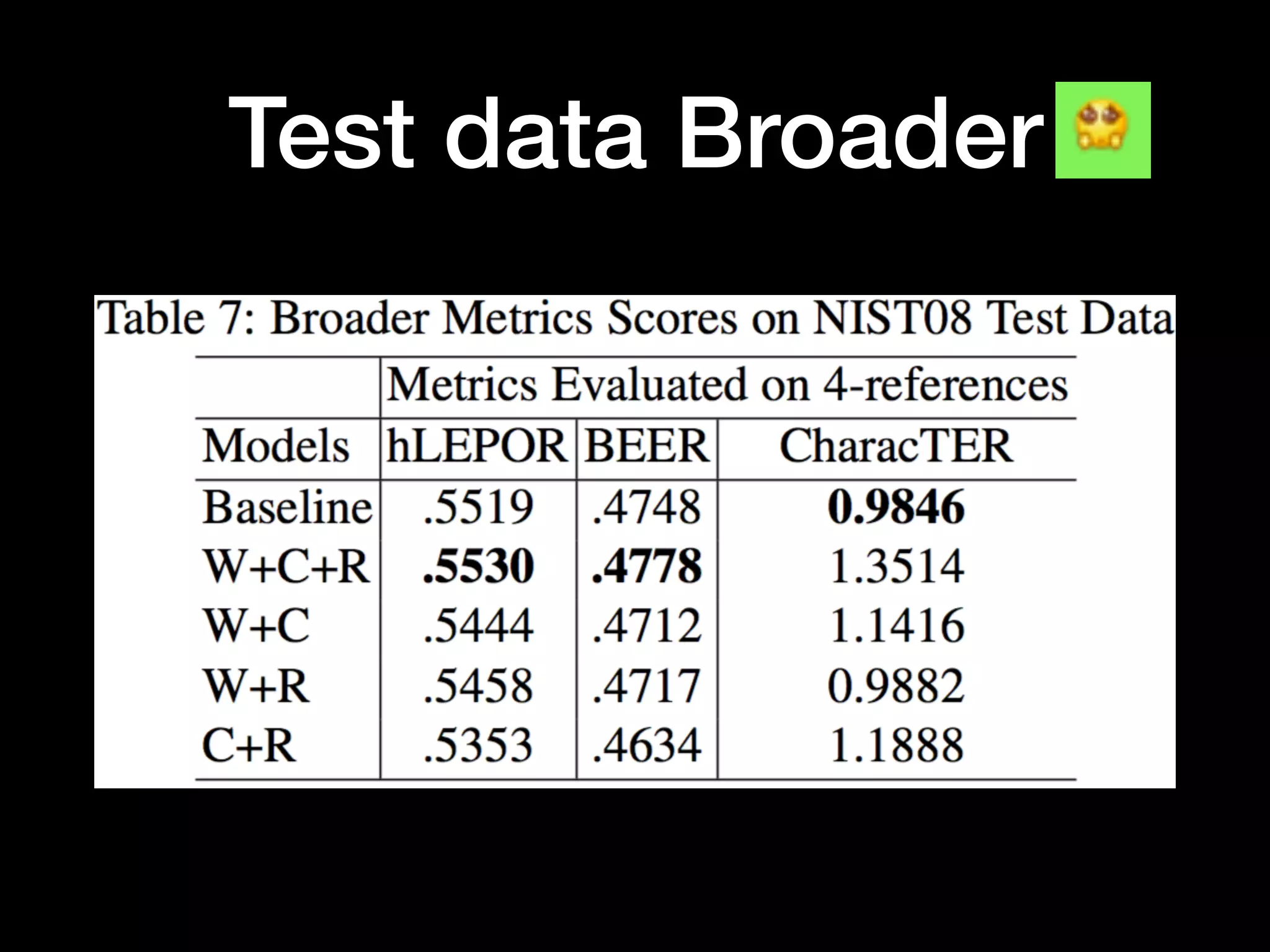

The document proposes a model that incorporates Chinese radicals into neural machine translation. It first reviews related work on machine translation and neural networks. The proposed model would combine radical-level machine translation with an attention-based neural model by adding radicals to the input data. Experiments would evaluate the model across several data settings and metrics, comparing performance with and without radicals. Future work could improve parameter tuning and draw on more diverse data.
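
As a rough illustration of what adding radicals to the input data could look like, the sketch below follows each Chinese character with a tag for its radical before the sequence is passed to a translation model. The summary does not say how the radicals are actually encoded, so the interleaving scheme, the `rad:` prefix, the `<norad>` placeholder, the function name `add_radicals`, and the four-entry lookup table are all assumptions made for this example.

```python
# Hypothetical, hand-written lookup for illustration only.
# A real system would need a full character-to-radical dictionary.
RADICALS = {
    "河": "氵",  # river  -> water radical
    "湖": "氵",  # lake   -> water radical
    "林": "木",  # forest -> tree radical
    "妈": "女",  # mother -> woman radical
}


def add_radicals(sentence, table=RADICALS, unknown="<norad>"):
    """Return a token list in which each character is followed by a radical tag.

    Characters missing from the table get the `unknown` placeholder so the
    output keeps a regular character/radical alternation.
    """
    tokens = []
    for ch in sentence:
        if ch.isspace():
            continue  # whitespace carries no character-level information here
        tokens.append(ch)
        tokens.append("rad:" + table.get(ch, unknown))
    return tokens


print(add_radicals("河林"))
```

Interleaving is only one possible design. An alternative would be to keep characters and radicals in two parallel sequences and combine their embeddings inside the encoder; which of these the proposed model uses is not stated in the summary.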