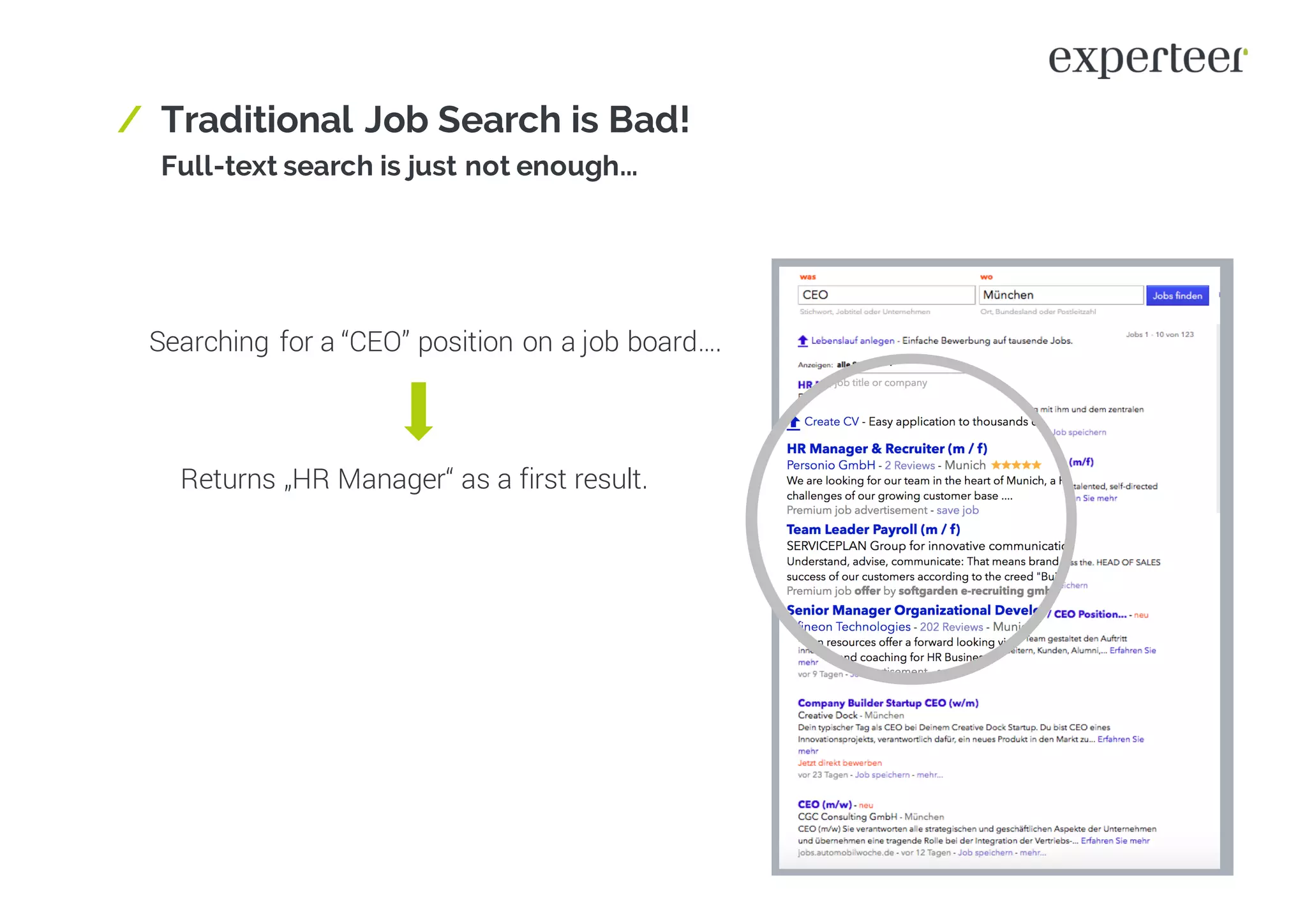

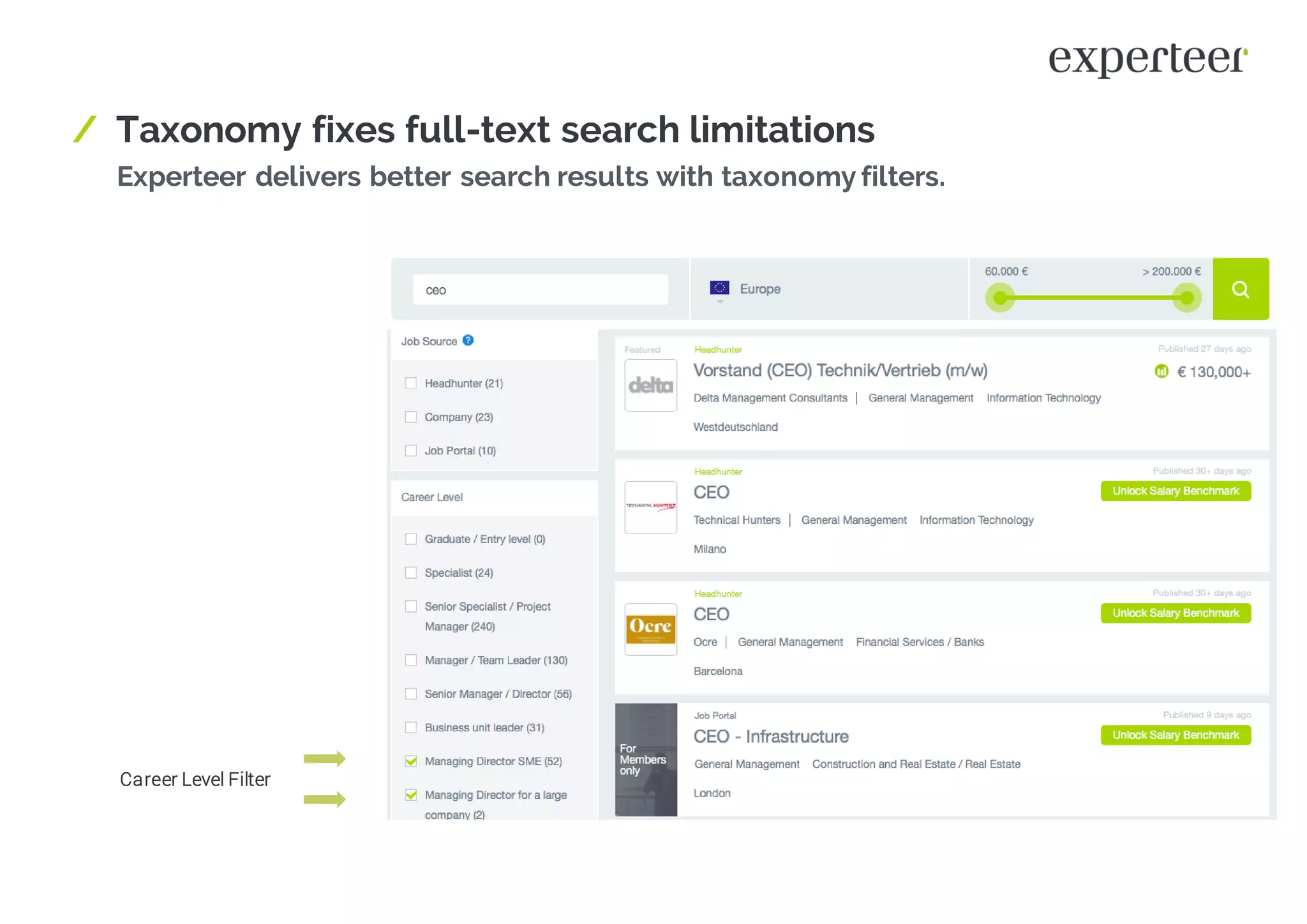

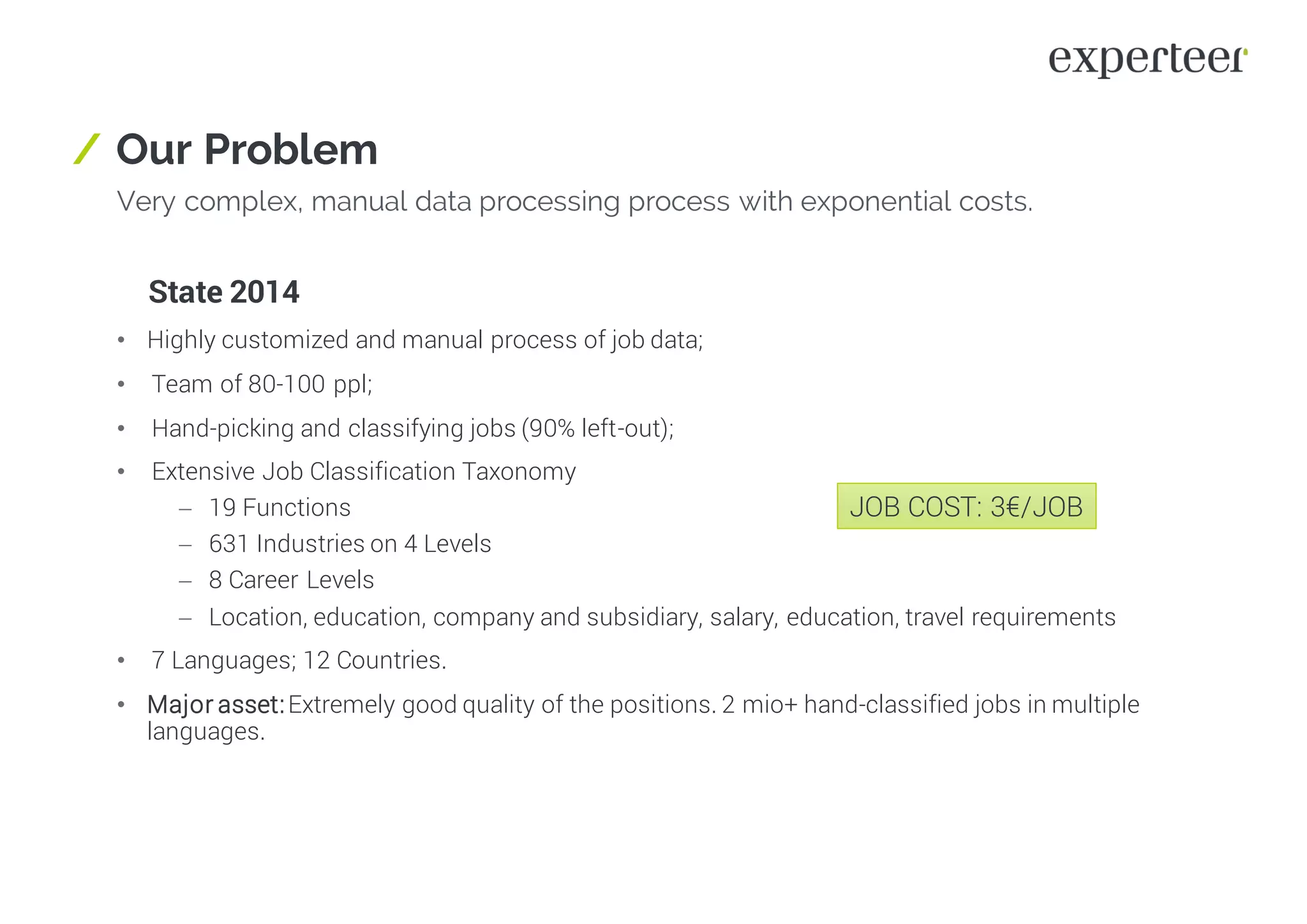

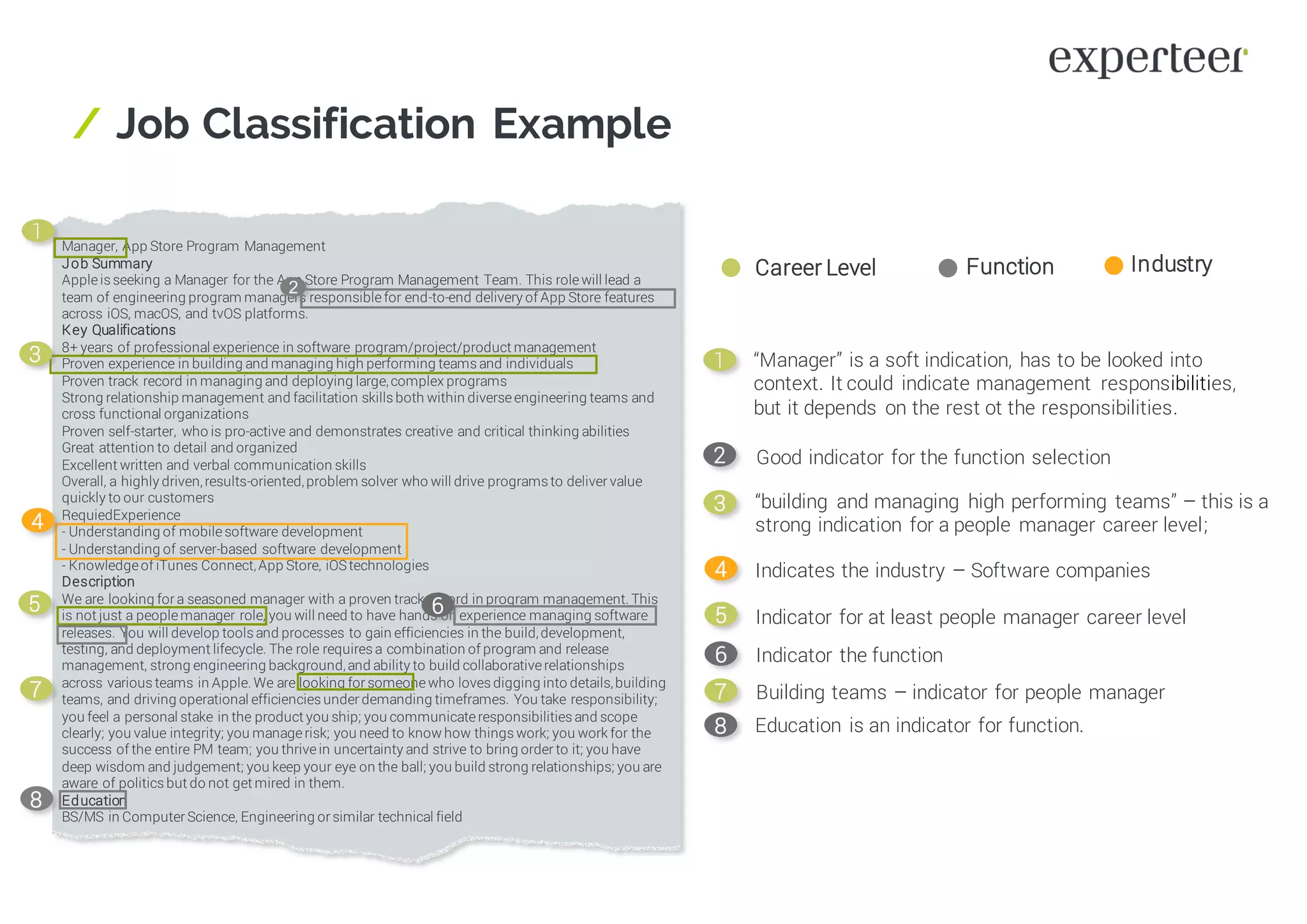

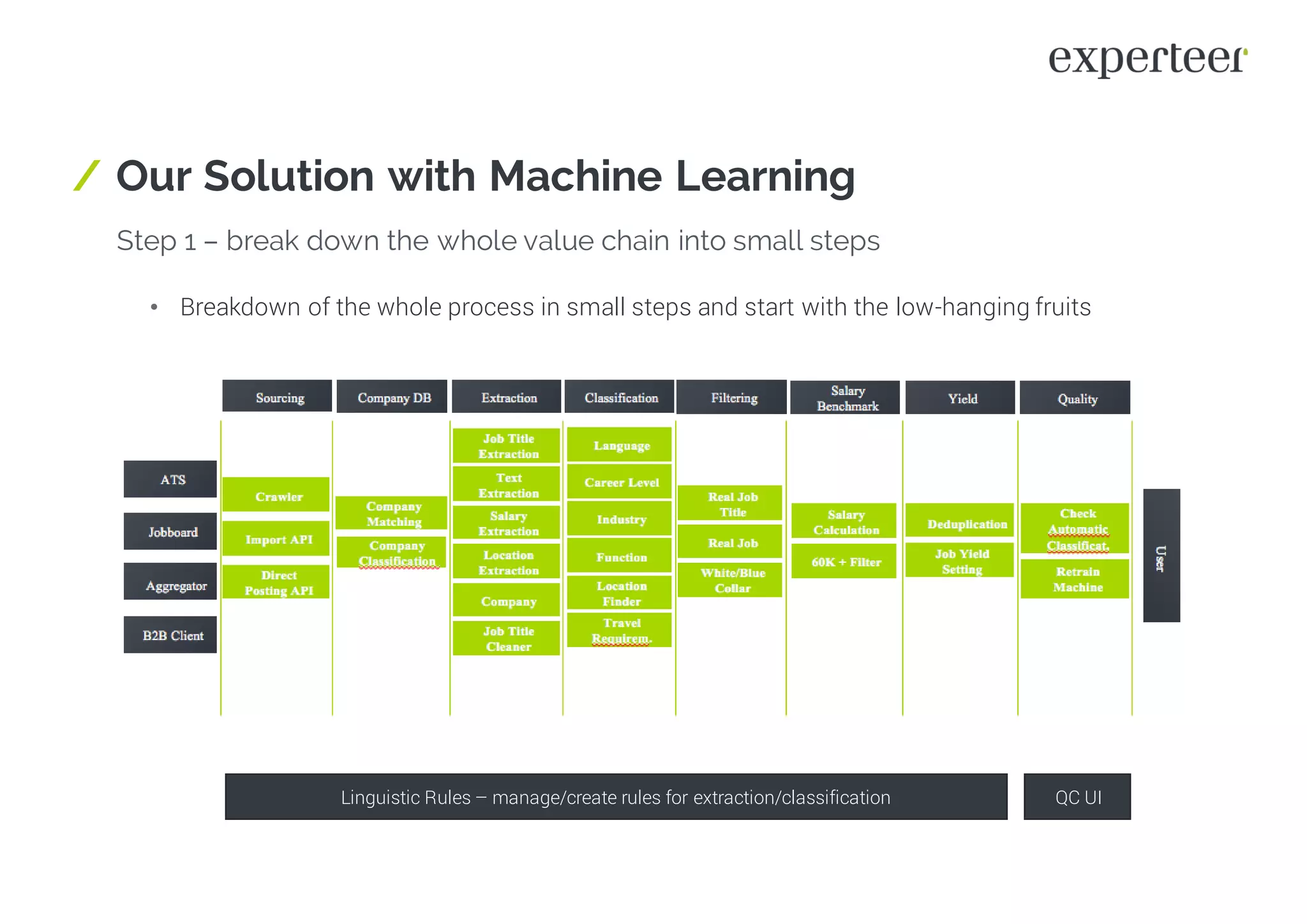

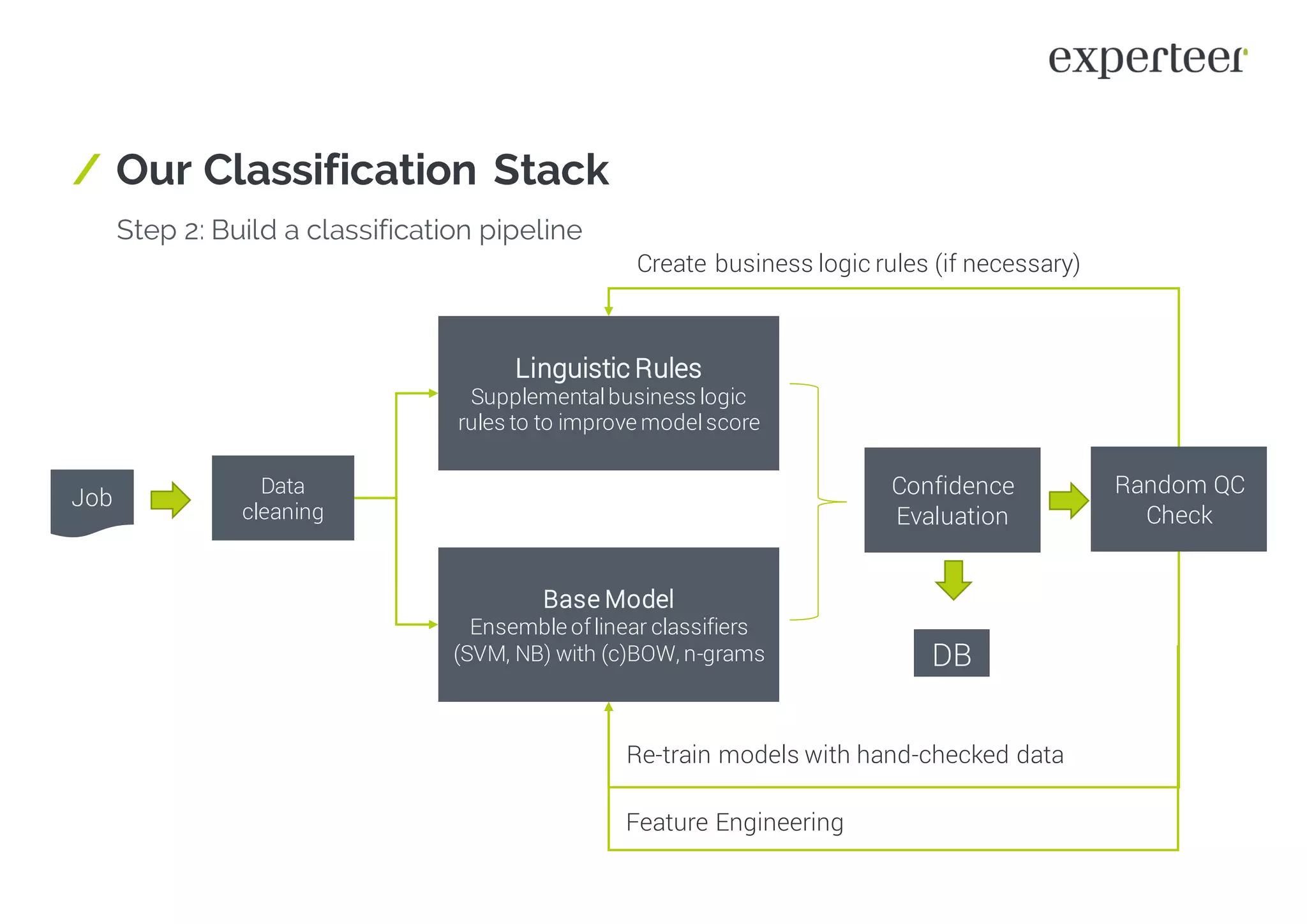

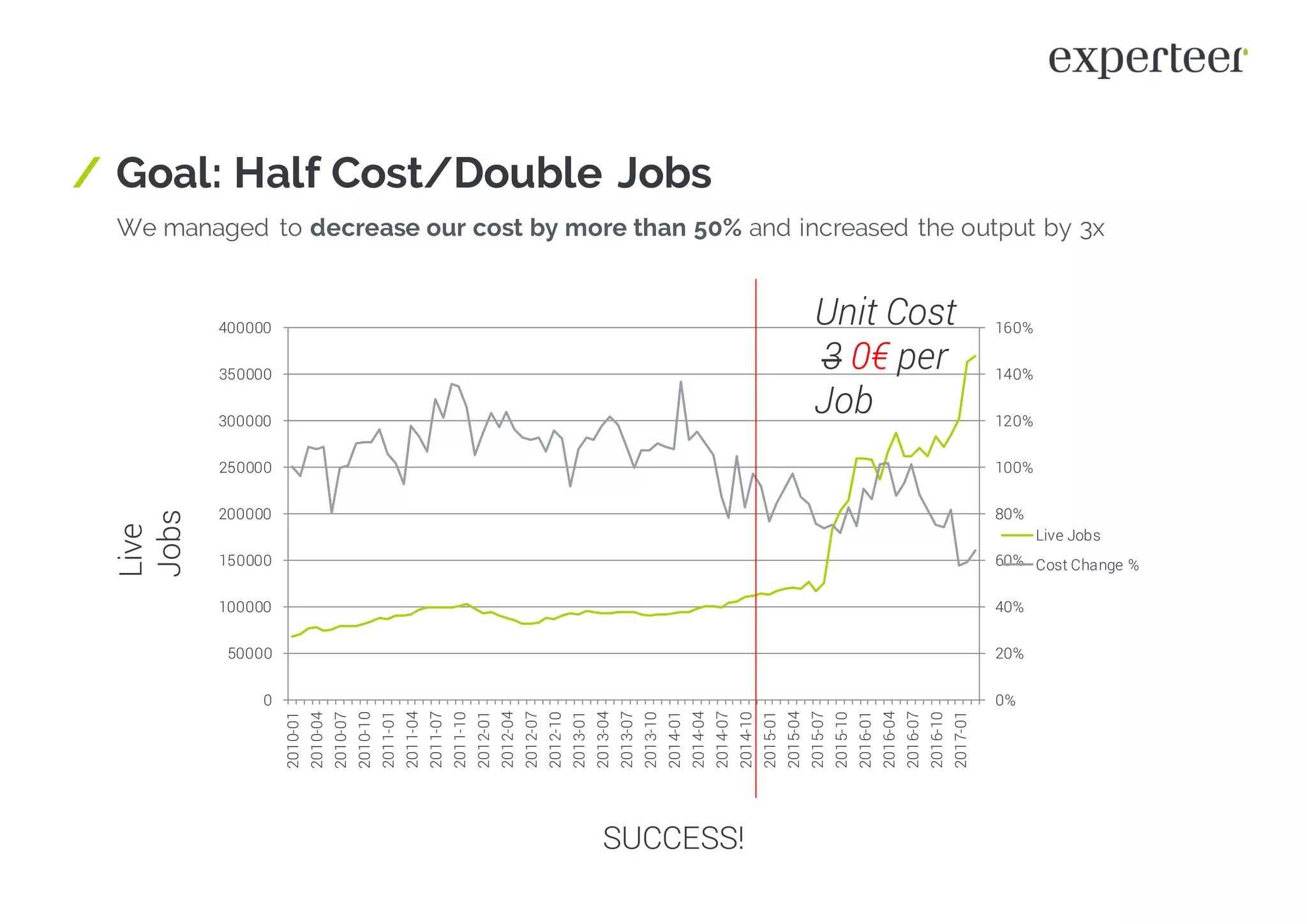

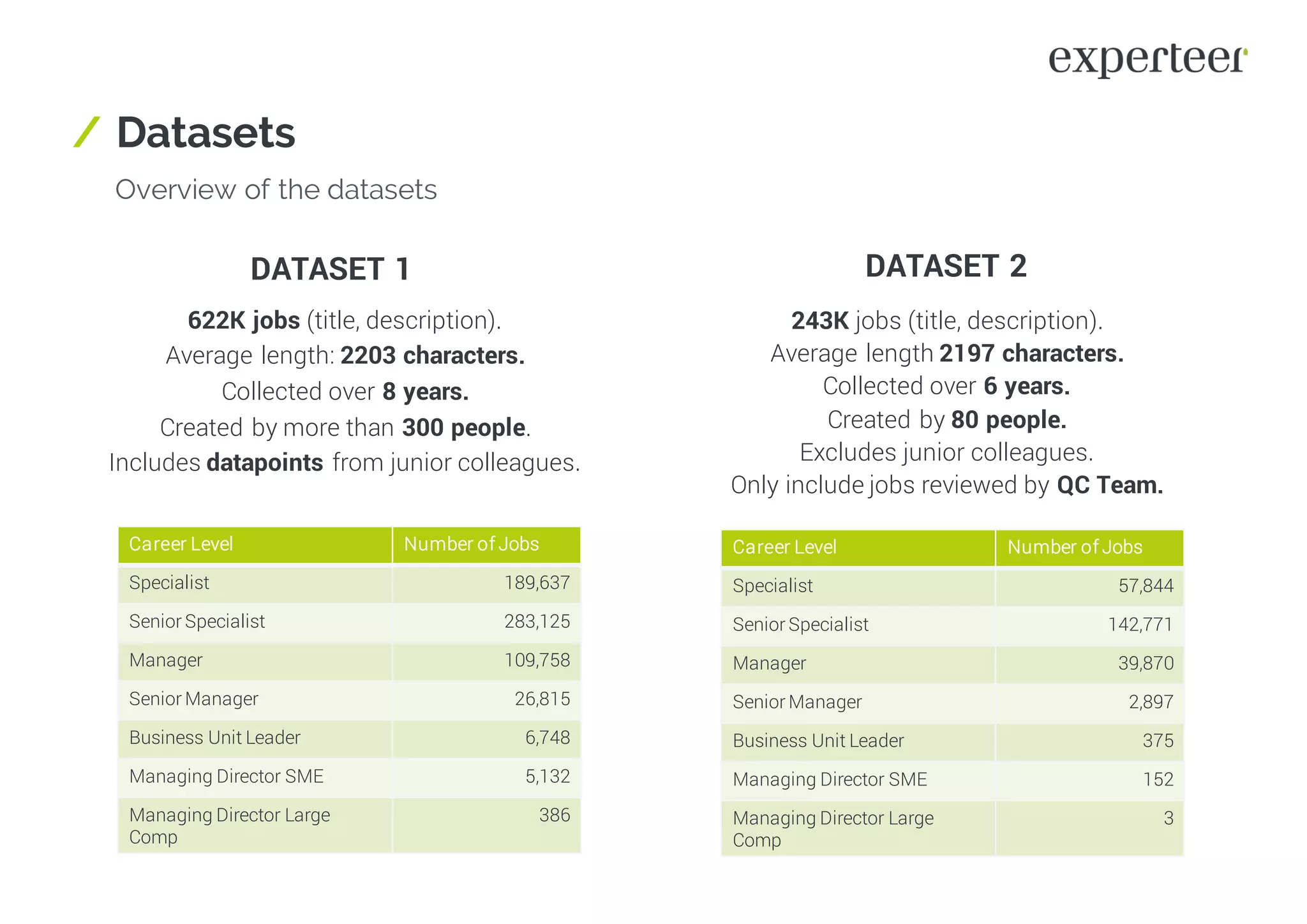

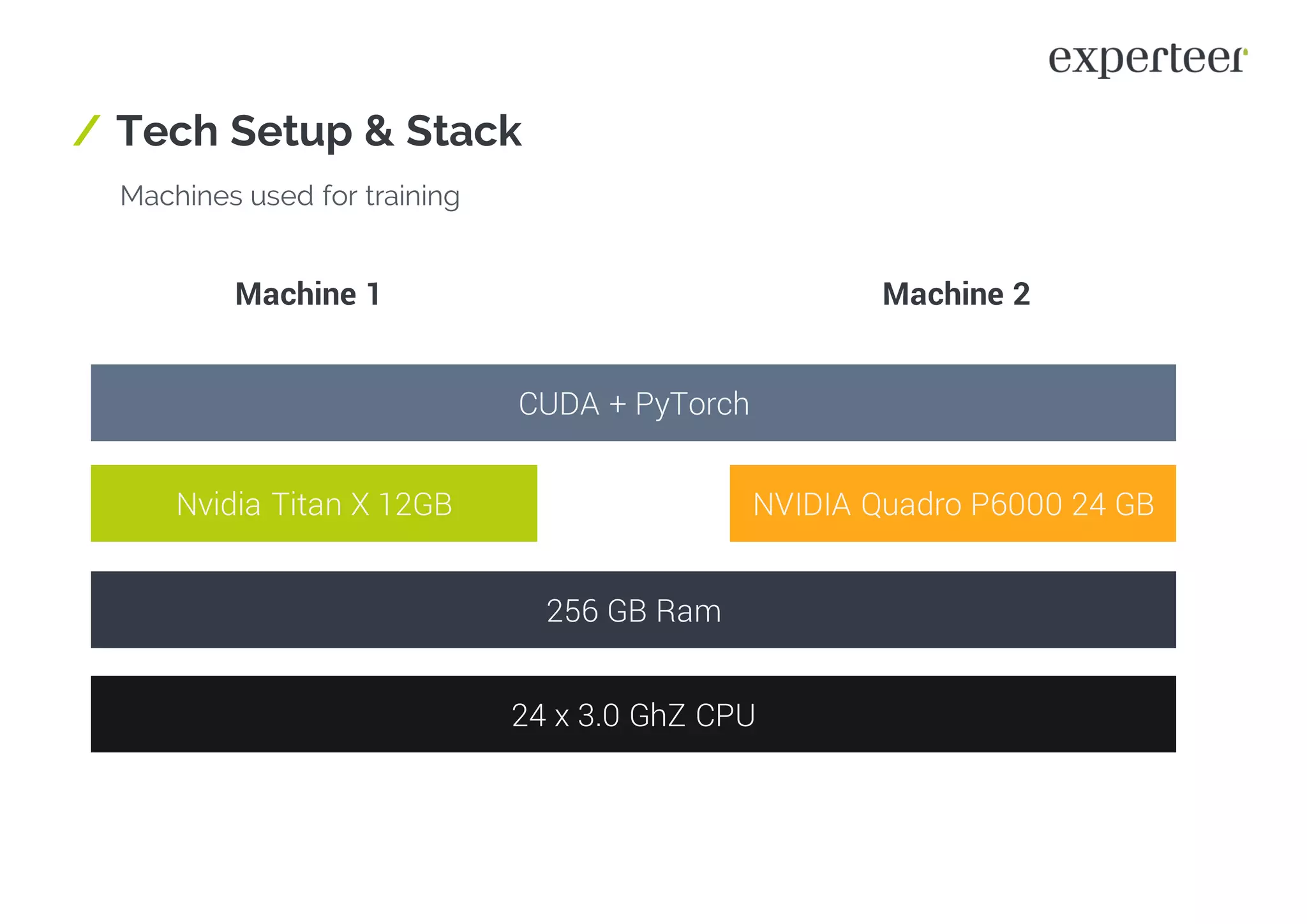

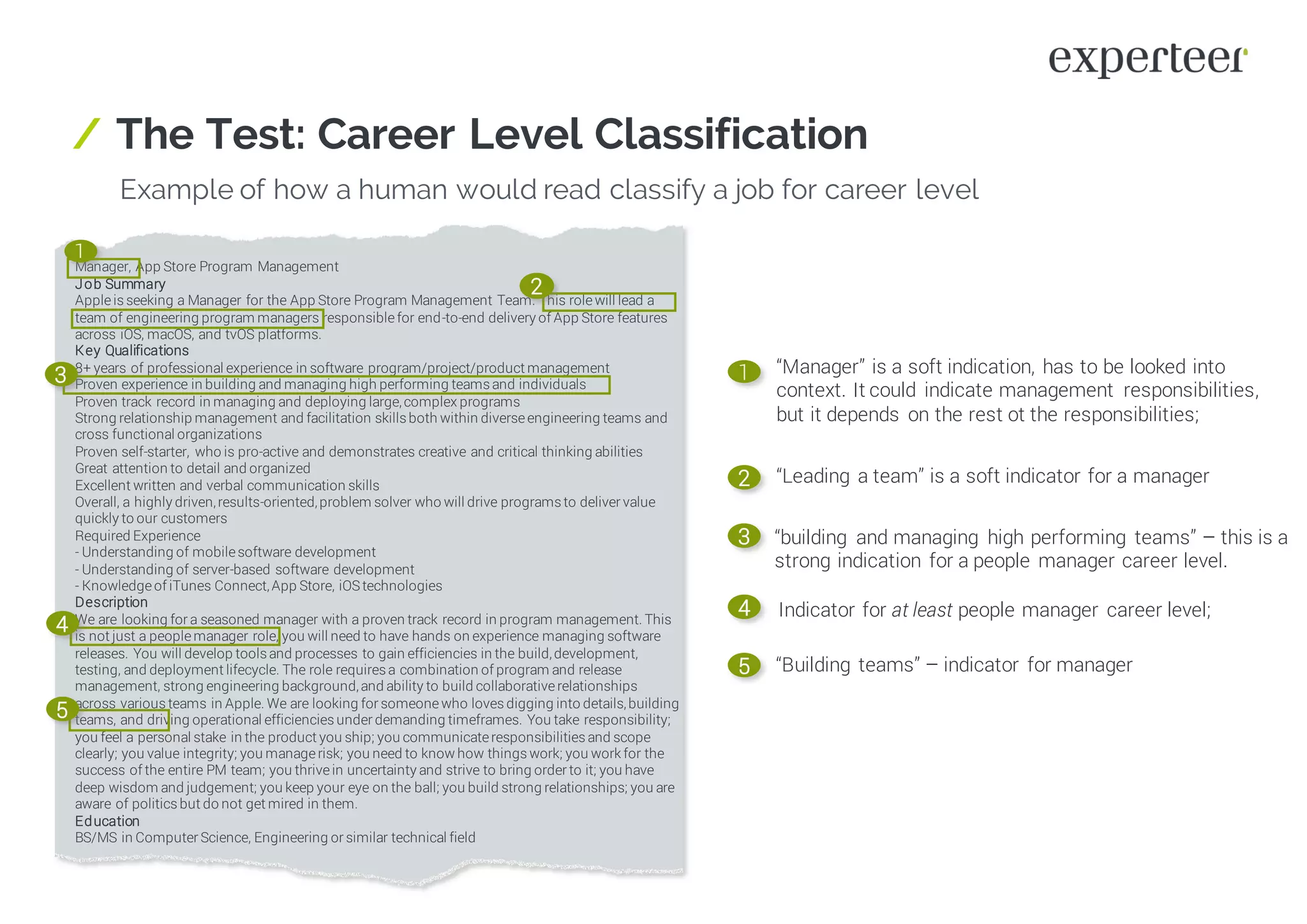

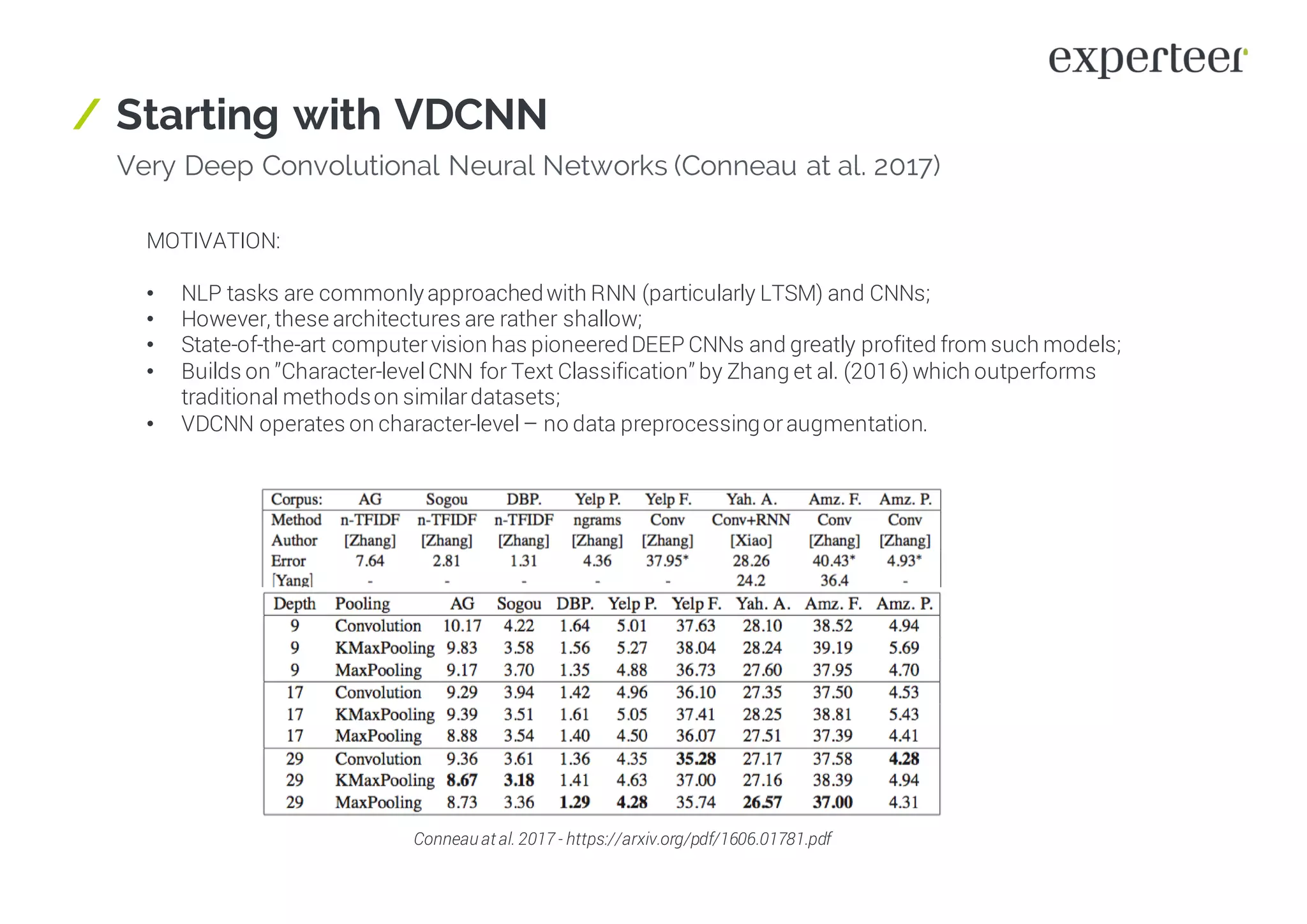

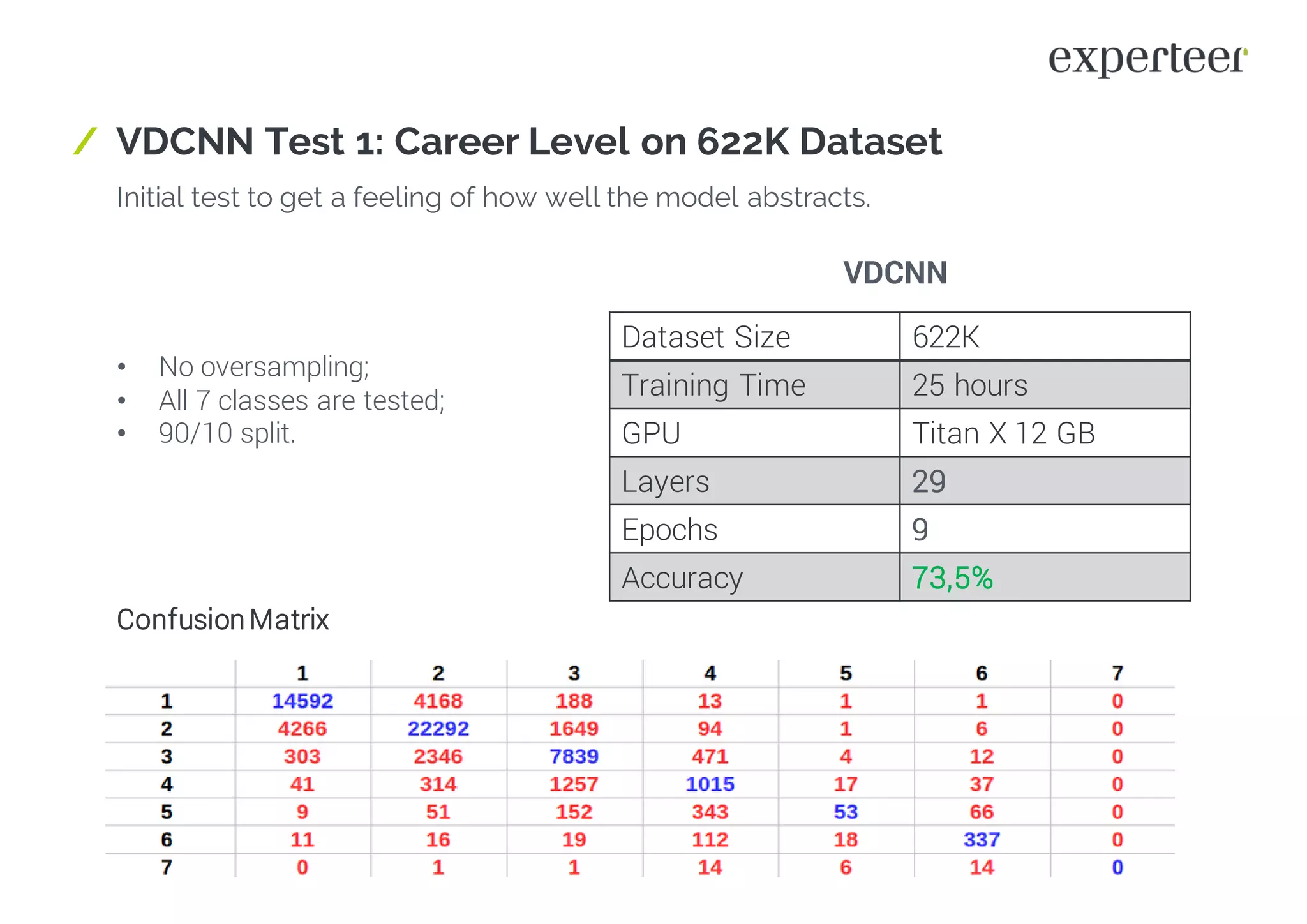

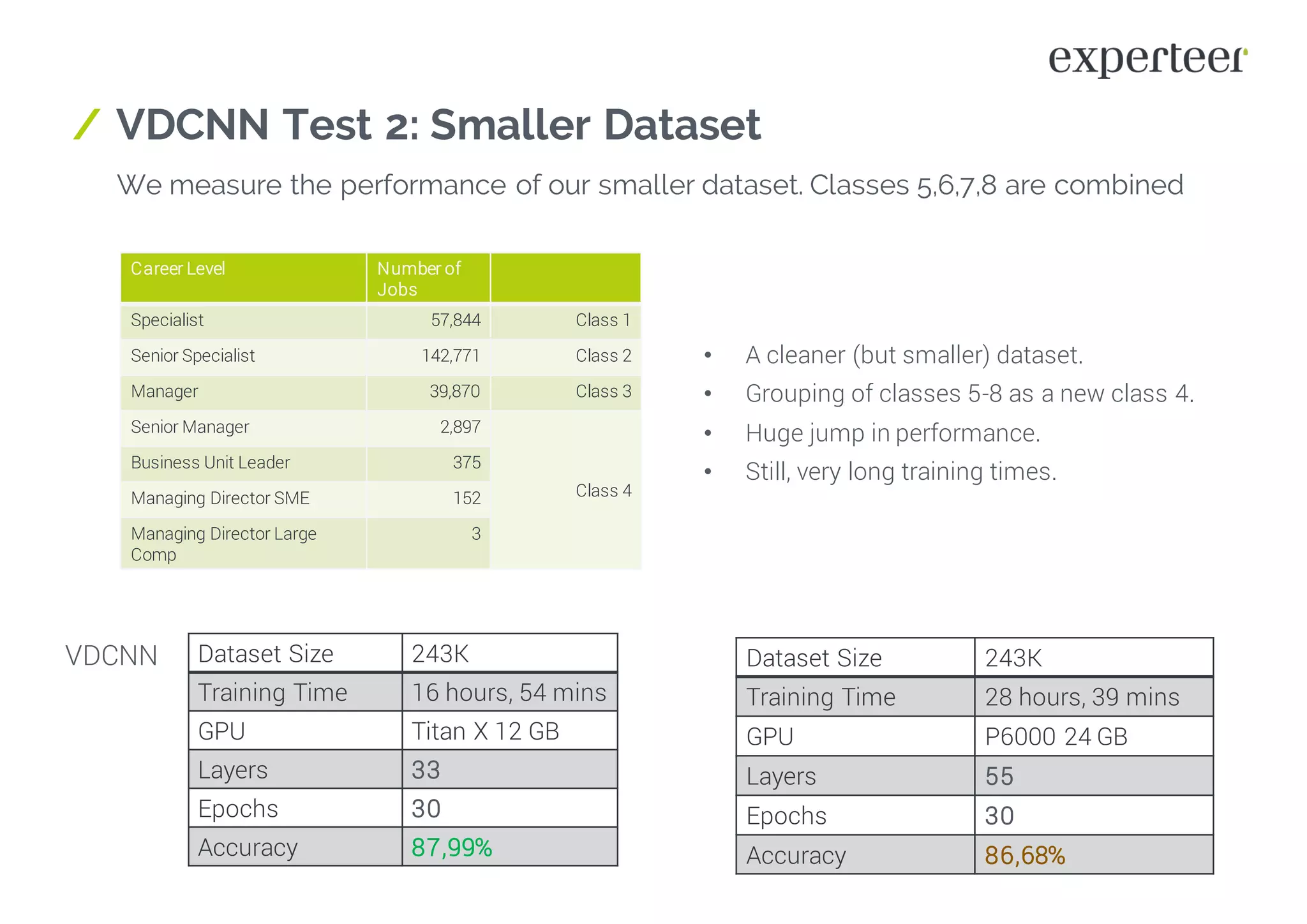

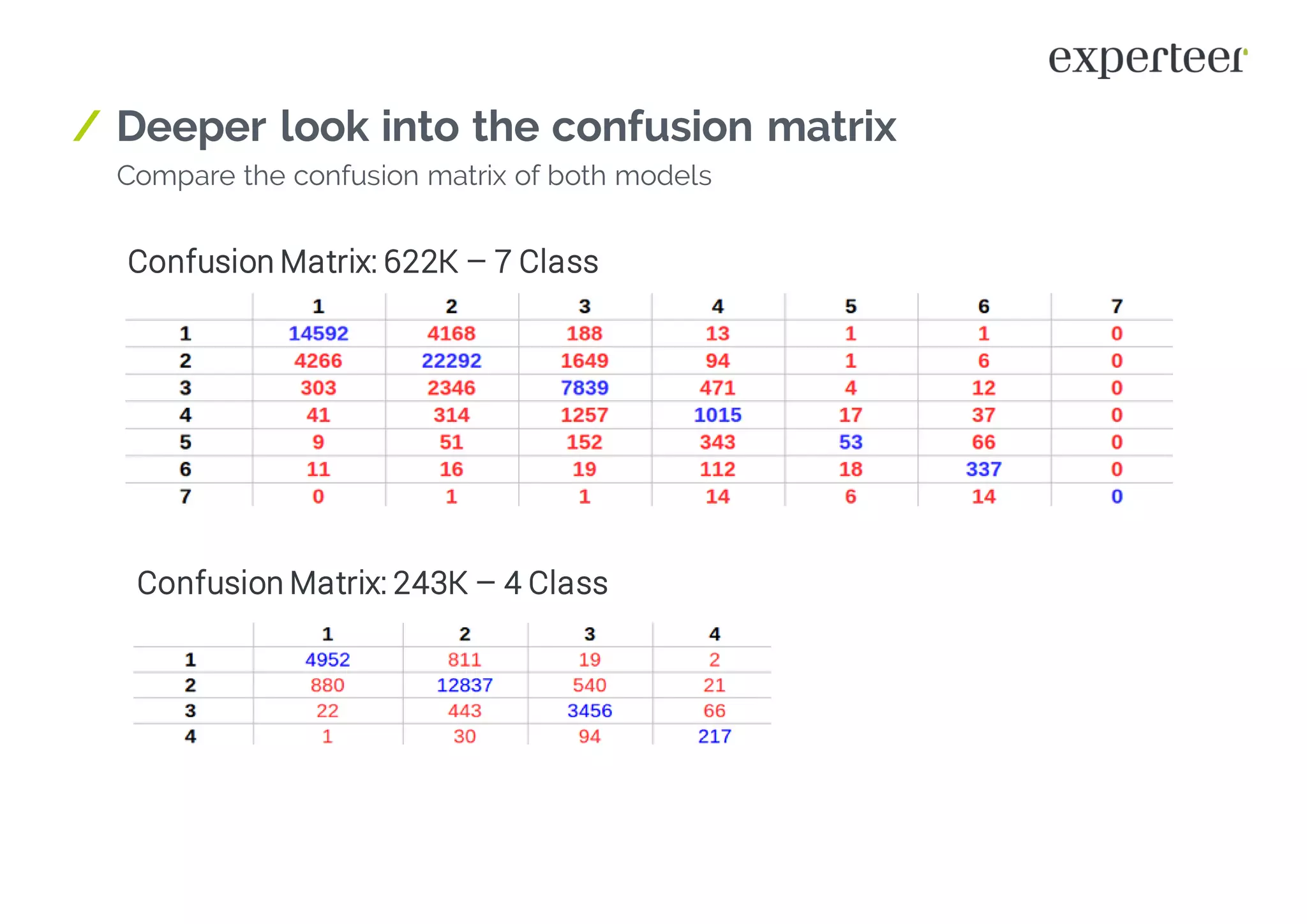

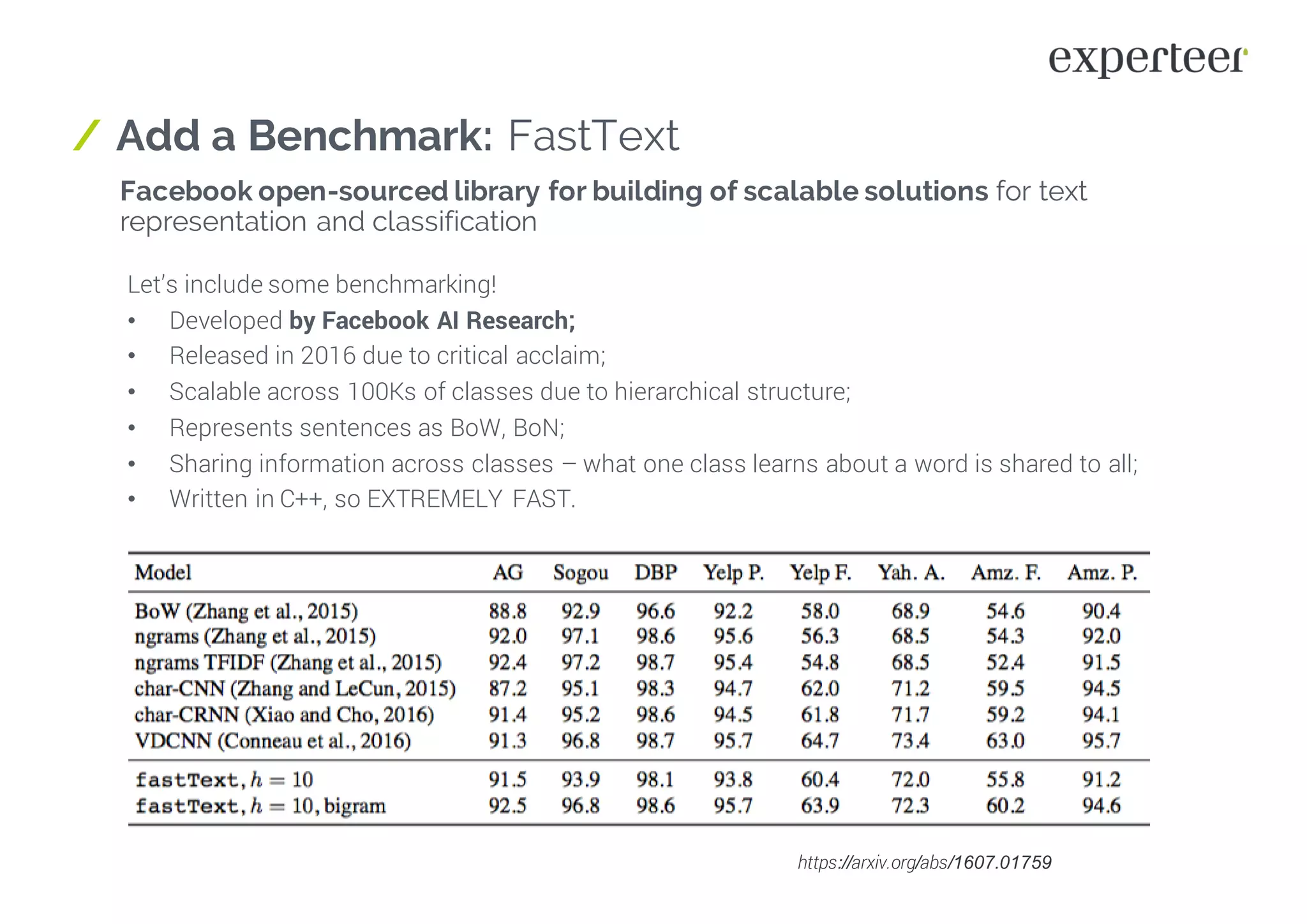

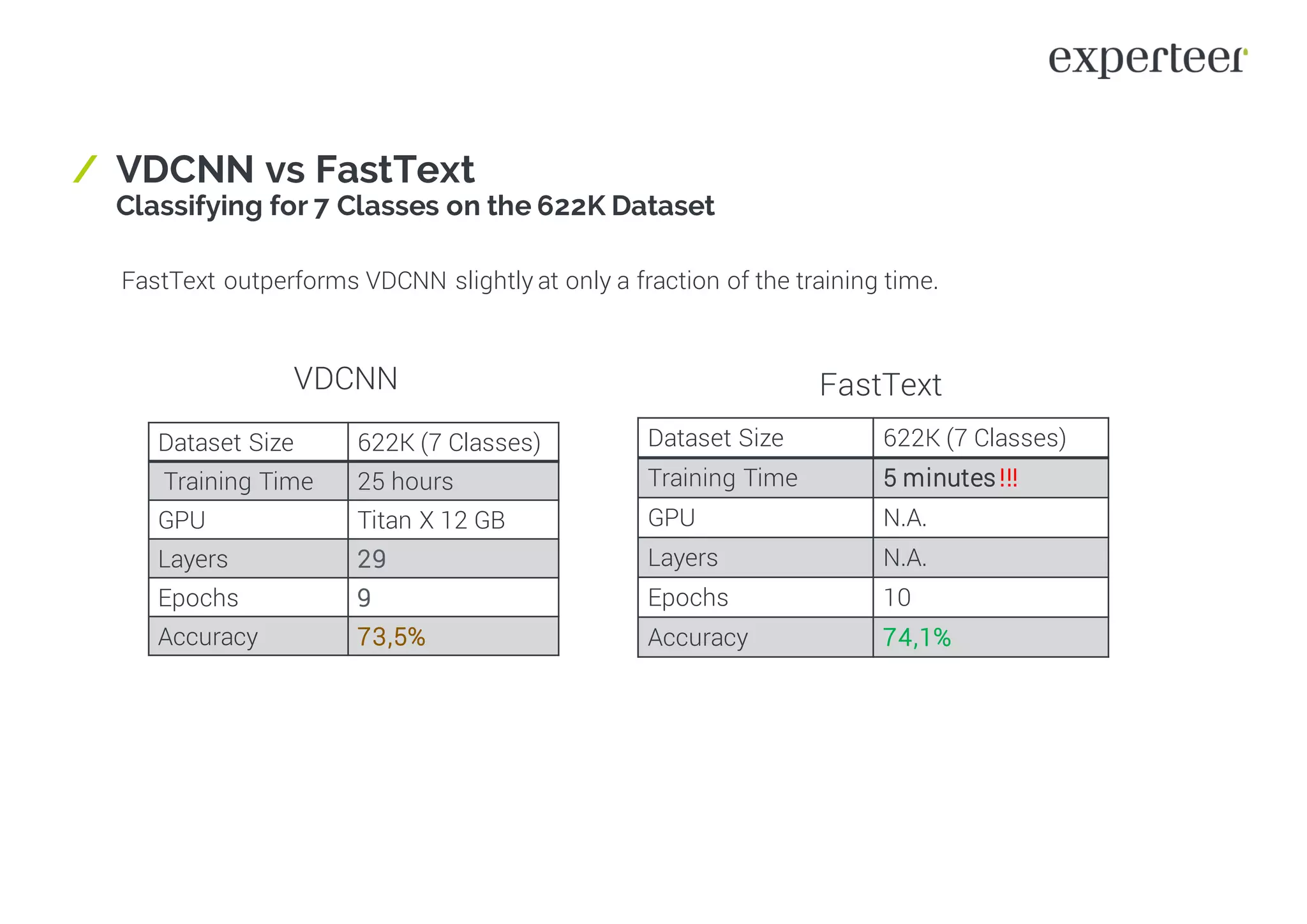

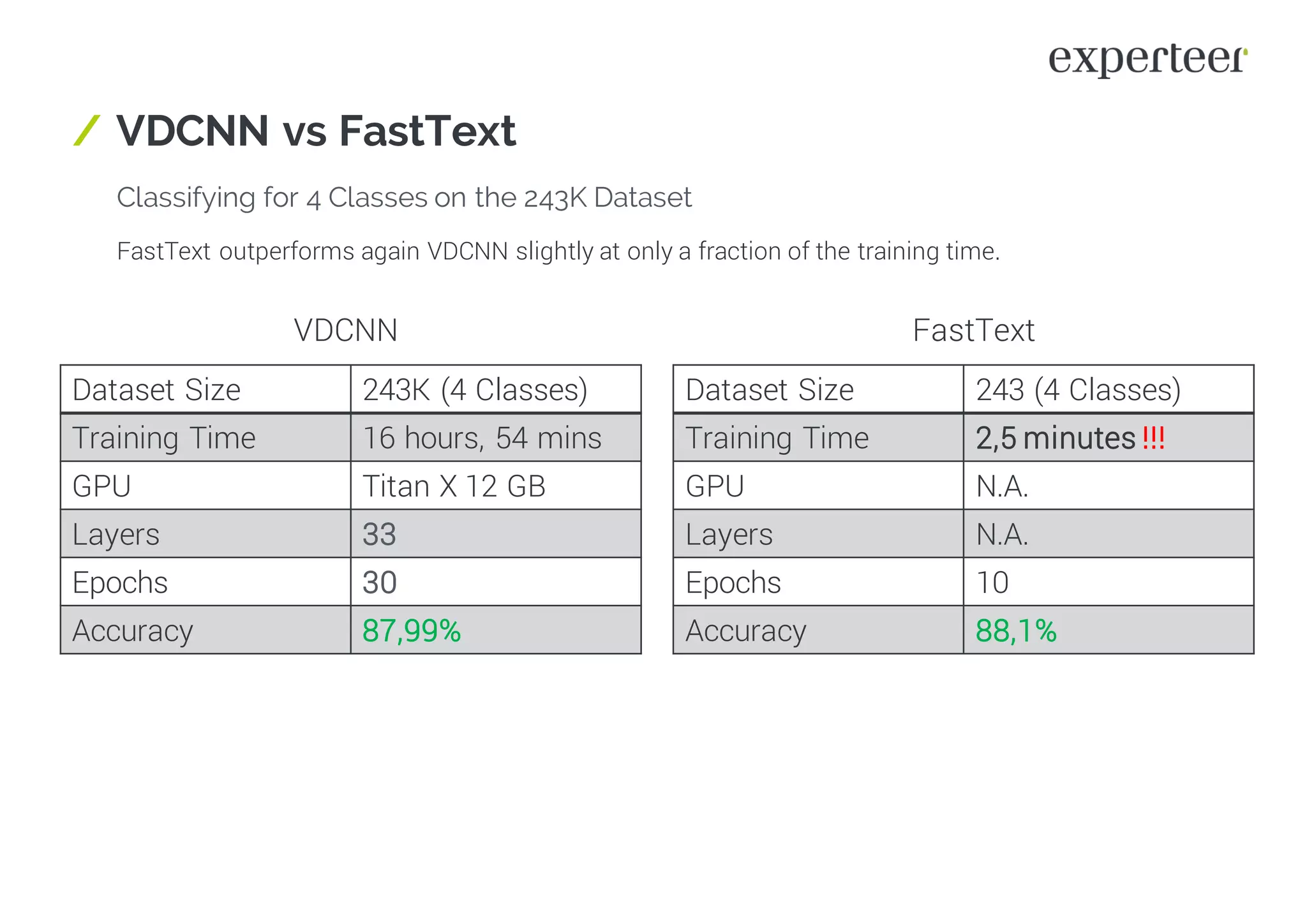

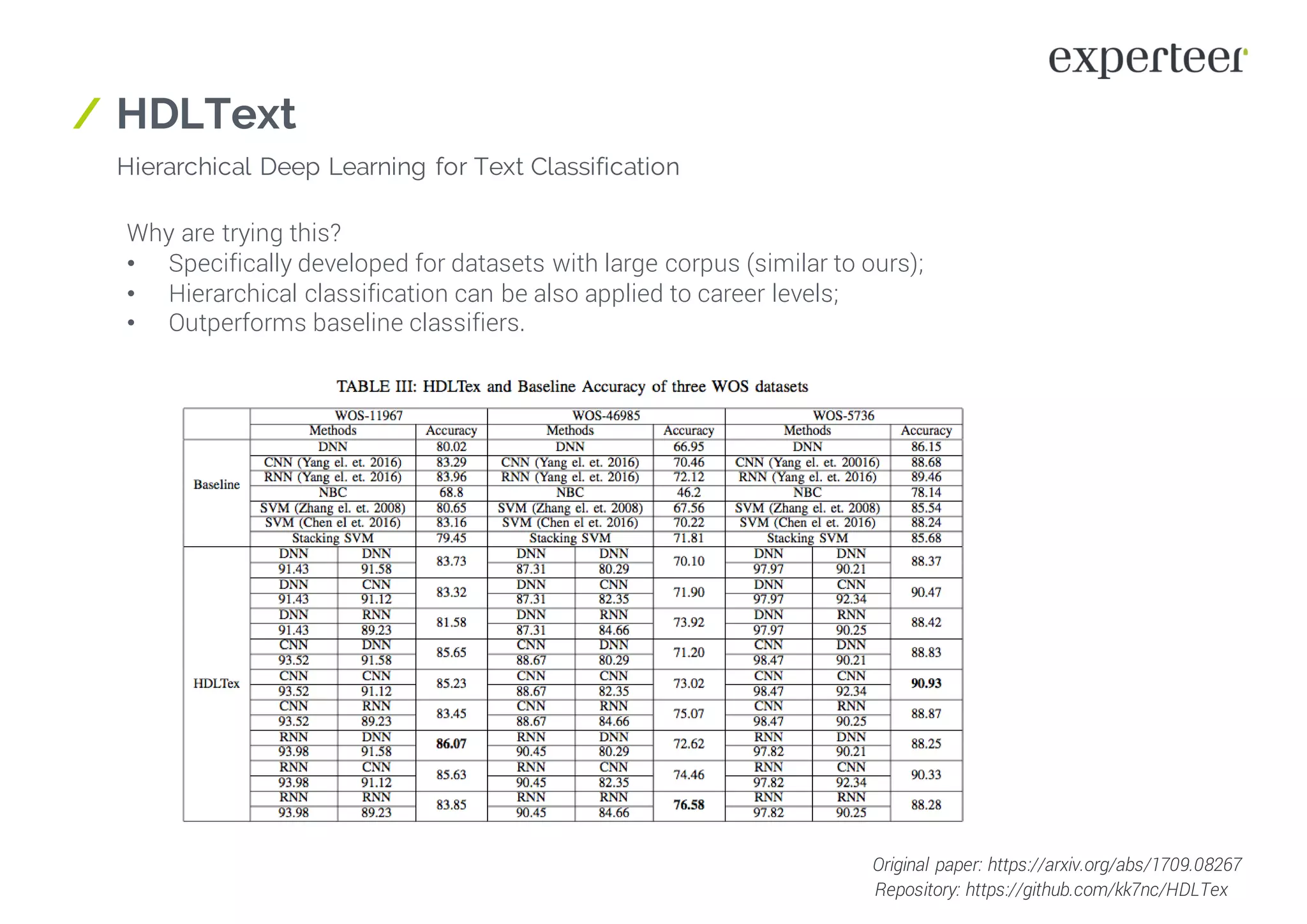

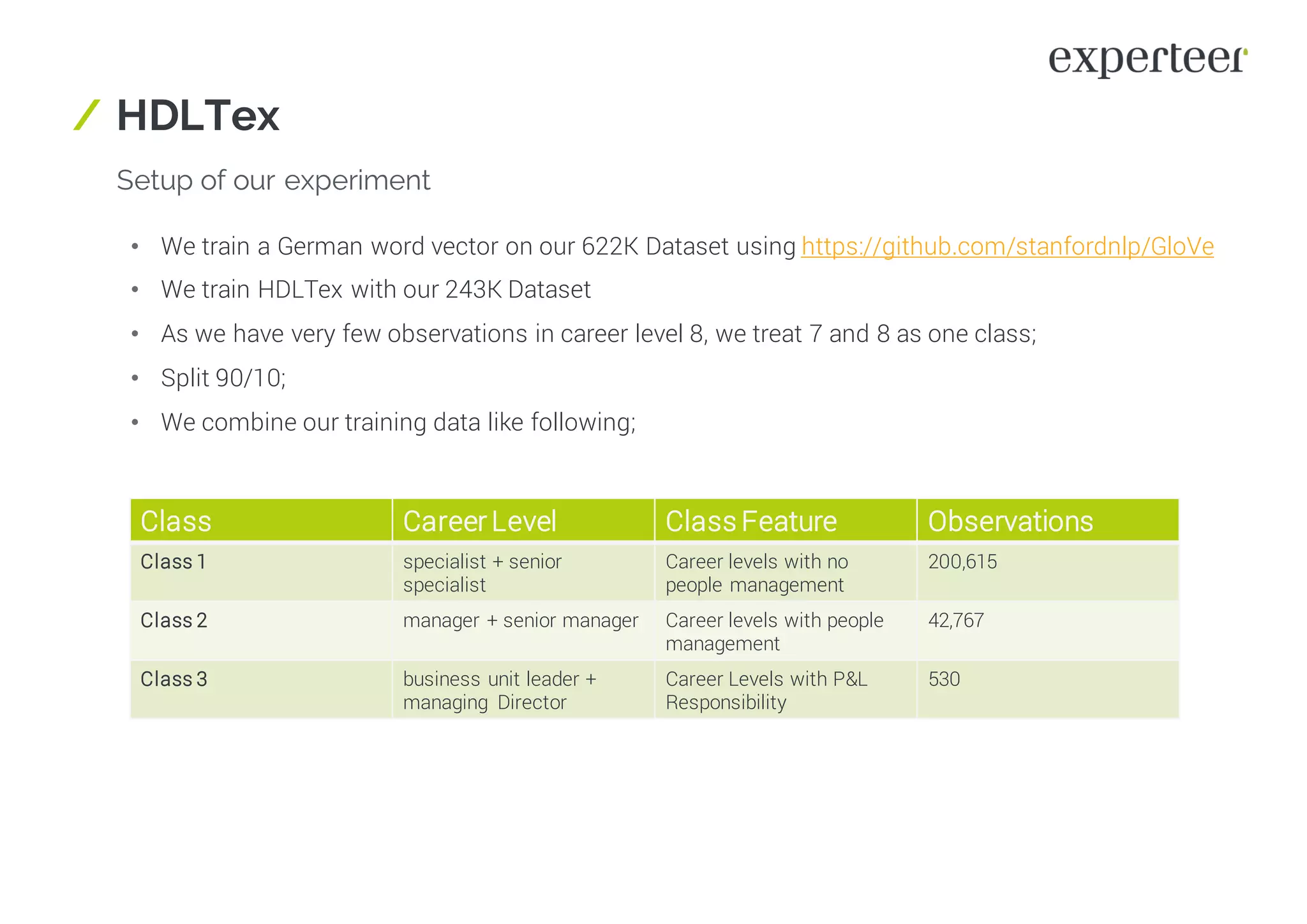

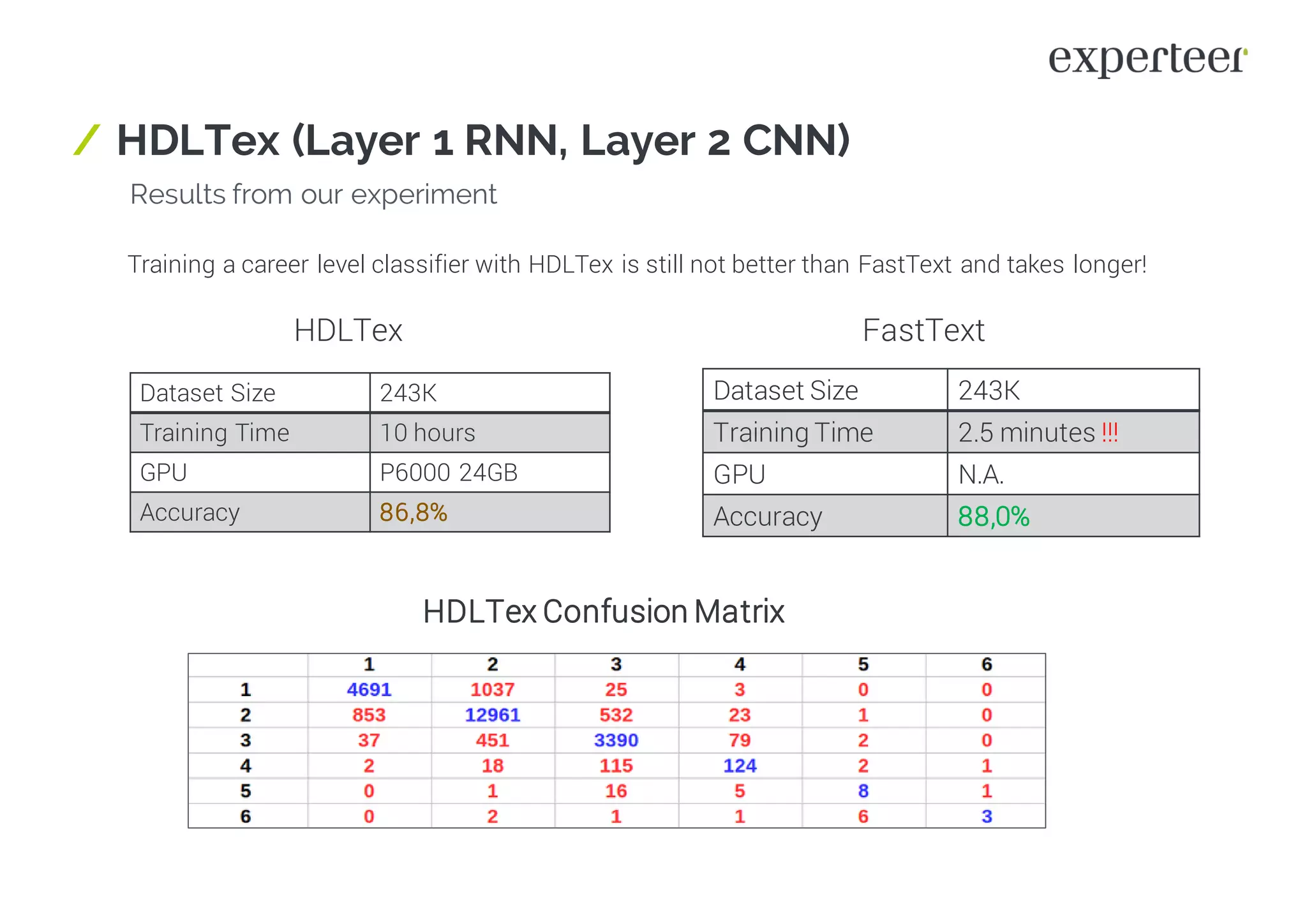

The document discusses the application of deep learning to text classification in the HR industry, focusing on how Experteer's machine learning services optimize job search processes. It outlines the challenges of traditional job search and presents machine learning solutions that improved cost efficiency and job classification accuracy. It also compares several deep learning models and testing methods, and describes the advantages of fastText over deeper neural networks in training speed and performance.
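fastText's speed advantage over deeper networks comes largely from its shallow architecture. It hashes word n-gram features into a fixed number of buckets and averages their embeddings into a single hidden vector. A linear softmax layer then maps that vector to label scores. The pure-Python code below is a minimal sketch of that idea under stated assumptions. It uses unigram and bigram features, a plain (not hierarchical) softmax, and SGD on both layers. It is neither Experteer's implementation nor the fastText library API. The class name `FastTextStyleClassifier`, its parameters (`dim`, `buckets`, `lr`, `seed`), and the job titles and labels are hypothetical, chosen for illustration only.

```python
import math
import random
import zlib


class FastTextStyleClassifier:
    """Hypothetical fastText-style classifier: hashed n-gram embeddings are
    averaged into one hidden vector, then a linear softmax layer scores labels."""

    def __init__(self, labels, dim=16, buckets=2**20, lr=0.5, seed=0):
        self.labels = list(labels)
        self.dim, self.buckets, self.lr, self.seed = dim, buckets, lr, seed
        self.emb = {}  # bucket id -> embedding row, created on first use
        self.out = [[0.0] * dim for _ in self.labels]  # one weight row per label

    def _row(self, bucket):
        # Deterministic small uniform init per bucket, so runs are reproducible.
        if bucket not in self.emb:
            rng = random.Random(self.seed * self.buckets + bucket)
            self.emb[bucket] = [rng.uniform(-0.1, 0.1) for _ in range(self.dim)]
        return self.emb[bucket]

    def _features(self, text):
        # Unigrams plus word bigrams, hashed into a fixed number of buckets.
        words = text.lower().split()
        grams = words + [a + " " + b for a, b in zip(words, words[1:])]
        return [zlib.crc32(g.encode("utf-8")) % self.buckets for g in grams]

    def _forward(self, ids):
        # Hidden vector = mean of the bucket embeddings (no nonlinearity).
        h = [0.0] * self.dim
        for i in ids:
            for k, v in enumerate(self._row(i)):
                h[k] += v / len(ids)
        scores = [sum(w * x for w, x in zip(row, h)) for row in self.out]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(exps)
        return h, [e / total for e in exps]

    def train(self, data, epochs=50):
        for _ in range(epochs):
            for text, label in data:
                ids = self._features(text)
                if not ids:
                    continue
                h, probs = self._forward(ids)
                y = self.labels.index(label)
                grad_h = [0.0] * self.dim
                for j, p in enumerate(probs):
                    g = p - (1.0 if j == y else 0.0)  # d(cross-entropy)/d(score_j)
                    row = self.out[j]
                    for k in range(self.dim):
                        grad_h[k] += g * row[k]  # read the weight before updating it
                        row[k] -= self.lr * g * h[k]
                for i in ids:  # each averaged embedding receives grad_h / len(ids)
                    row = self._row(i)
                    for k in range(self.dim):
                        row[k] -= self.lr * grad_h[k] / len(ids)

    def predict(self, text):
        _, probs = self._forward(self._features(text))
        return self.labels[max(range(len(probs)), key=probs.__getitem__)]

    def mean_loss(self, data):
        # Average cross-entropy over (text, label) pairs.
        losses = []
        for text, label in data:
            _, probs = self._forward(self._features(text))
            losses.append(-math.log(max(probs[self.labels.index(label)], 1e-12)))
        return sum(losses) / len(losses)


if __name__ == "__main__":
    # Invented toy job titles; not data from the document.
    train = [
        ("senior python developer backend", "engineering"),
        ("java software engineer", "engineering"),
        ("machine learning engineer python", "engineering"),
        ("head of sales emea", "sales"),
        ("key account manager sales", "sales"),
        ("regional sales director", "sales"),
    ]
    model = FastTextStyleClassifier(["engineering", "sales"])
    model.train(train)
    print(model.predict("python engineer"), model.predict("sales manager"))
```

Each training step updates only the embedding rows of the n-grams in one example, plus a small output matrix. That is one plausible intuition for why shallow models of this kind train much faster than deep networks. The real fastText library adds refinements that this sketch omits, such as hierarchical softmax.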