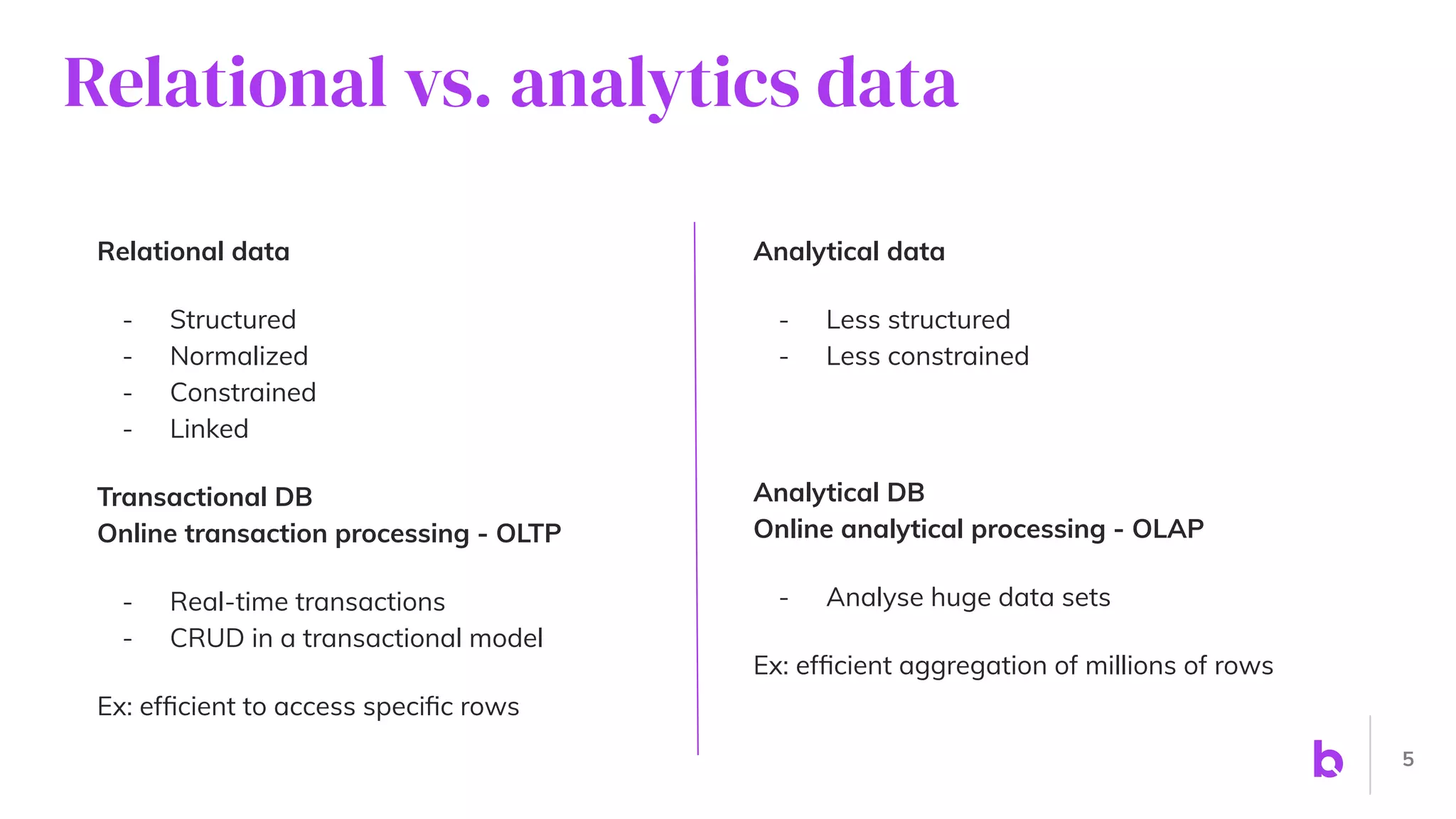

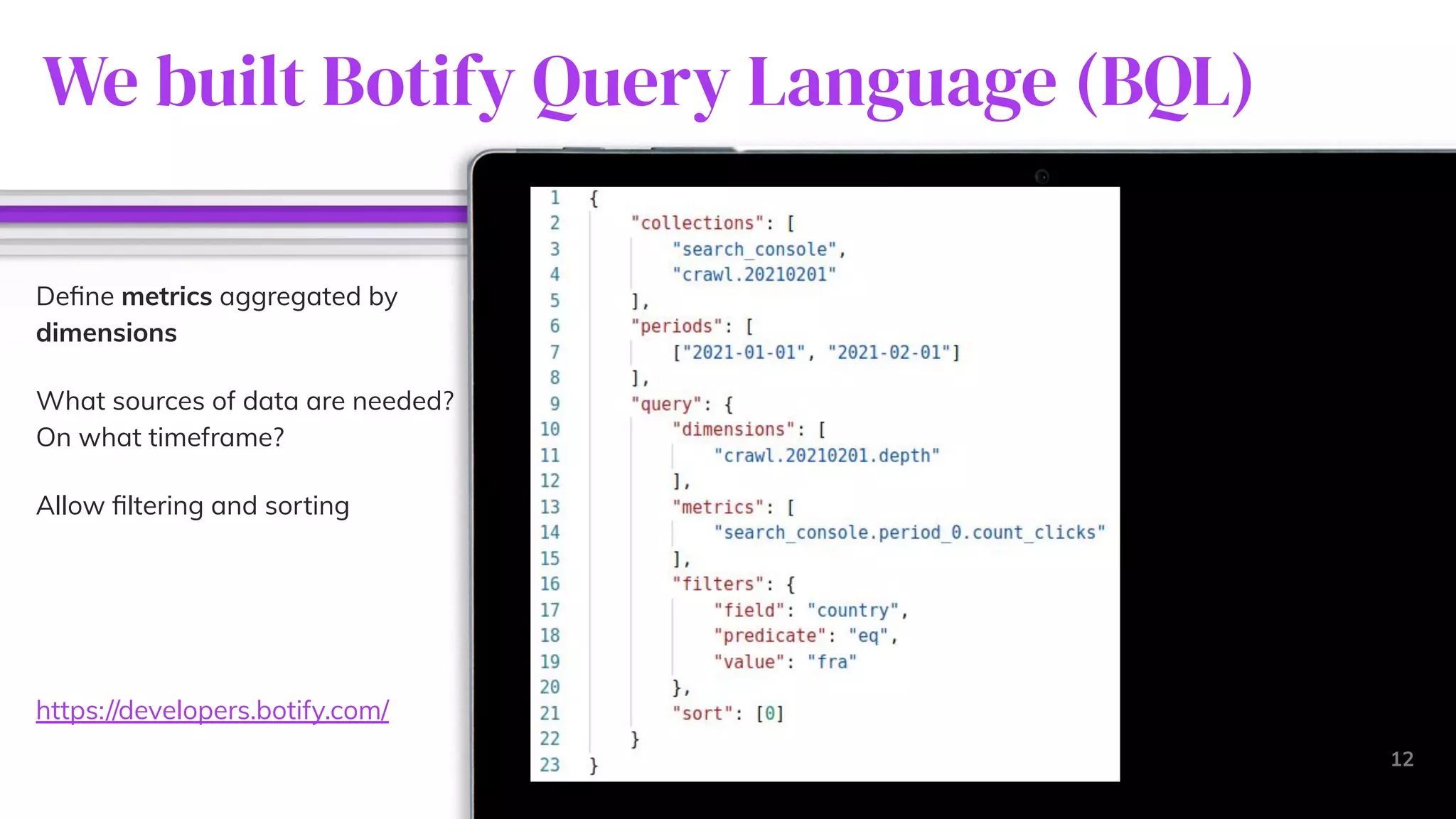

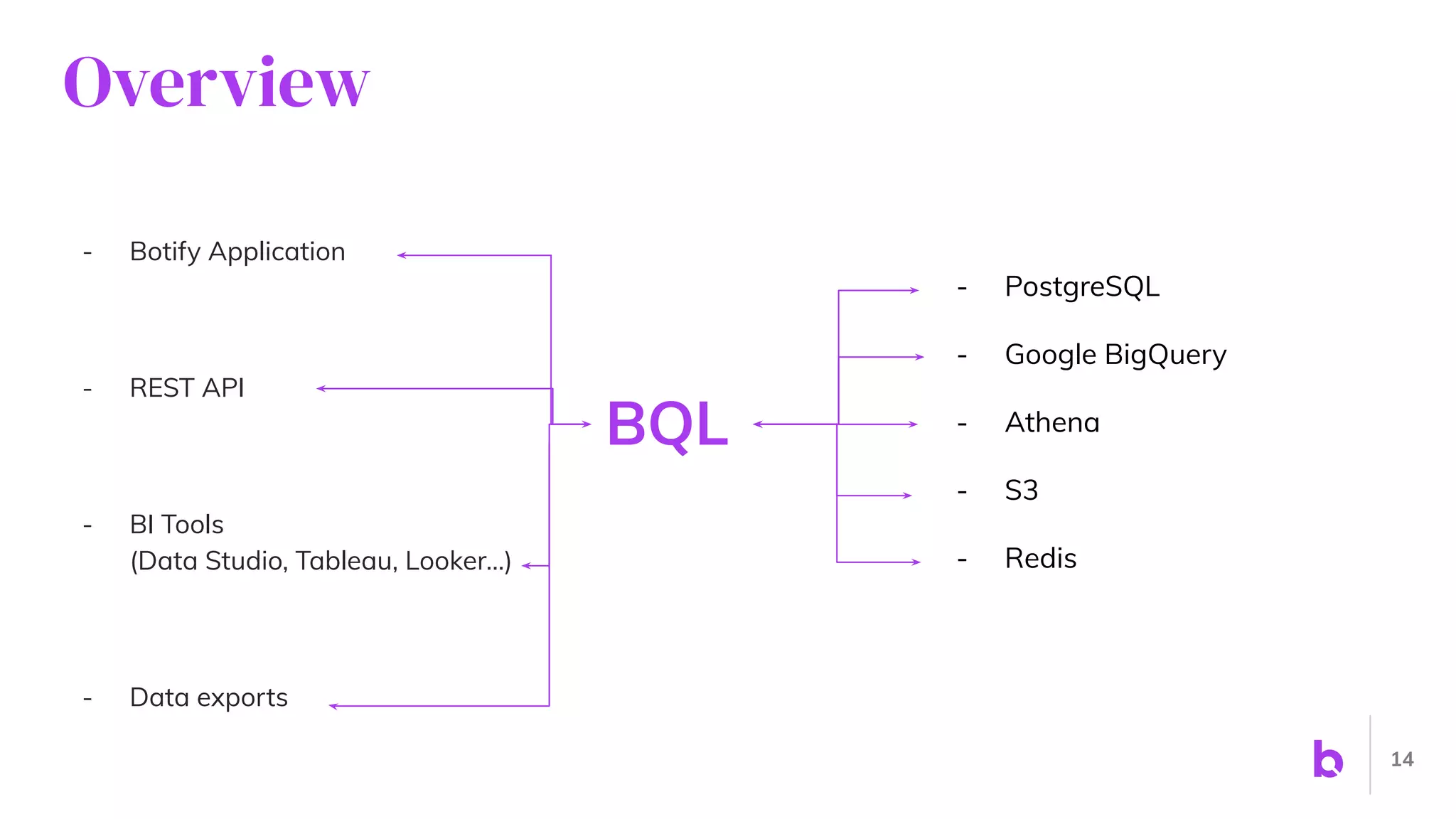

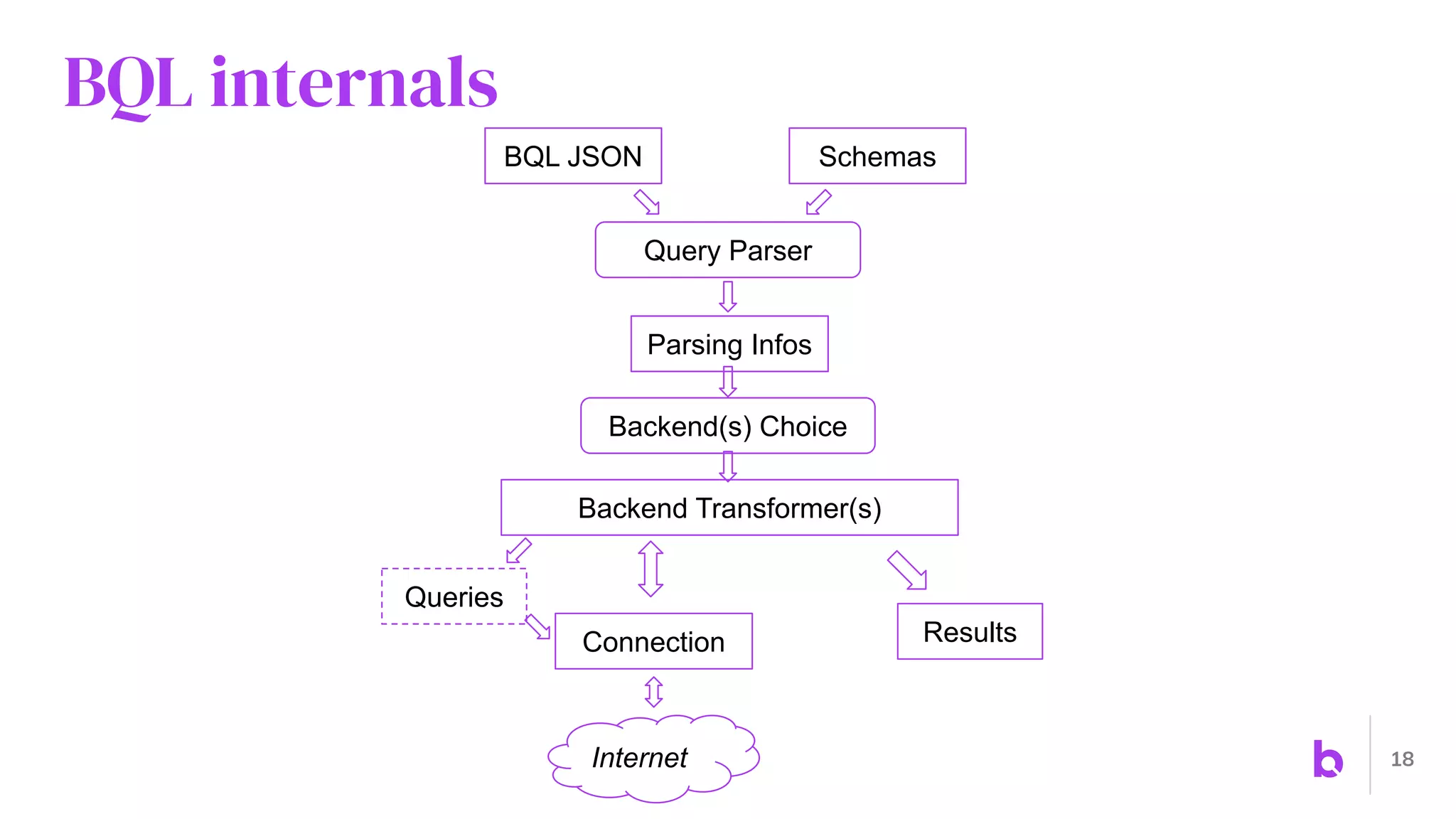

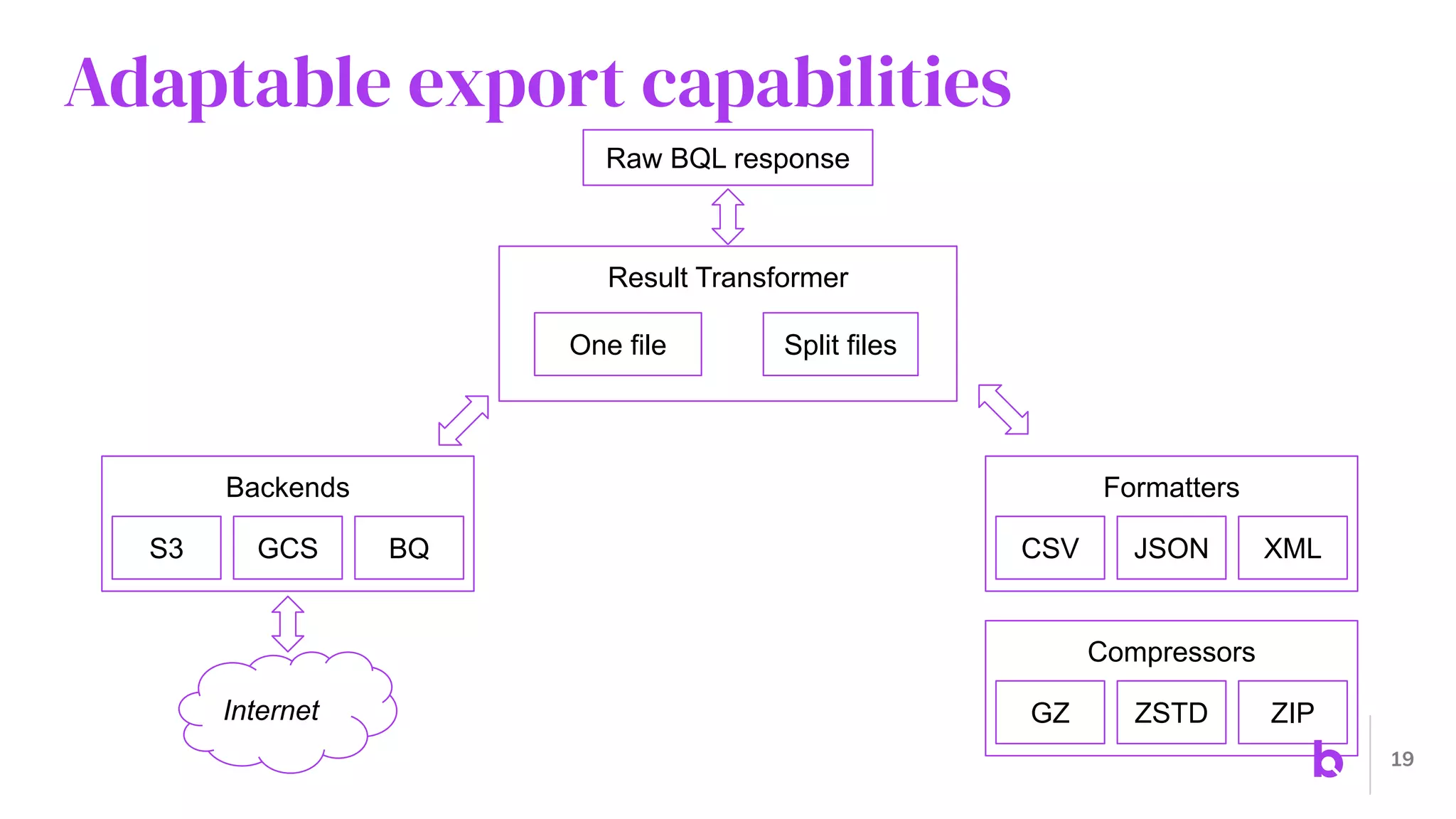

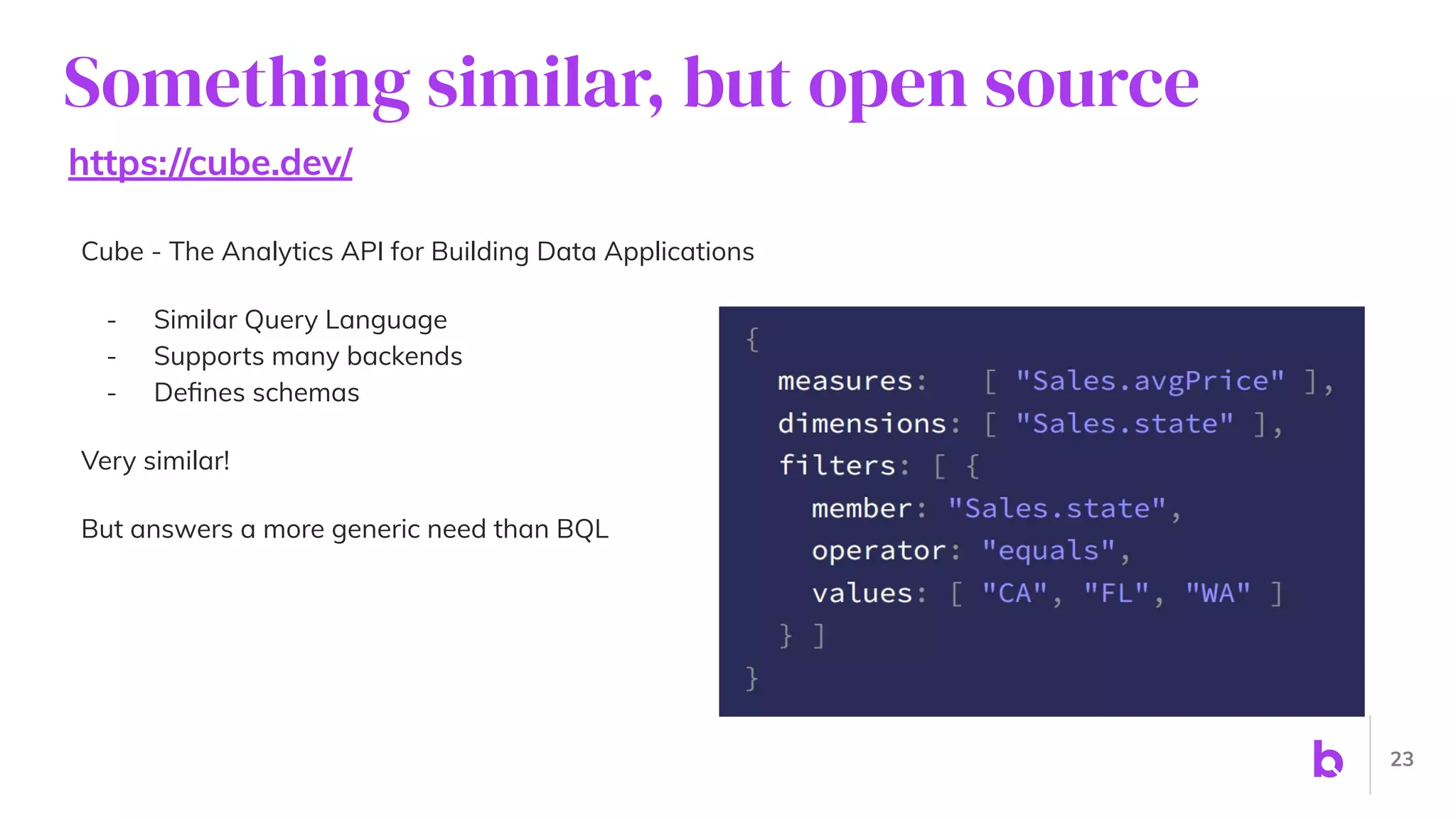

The presentation discusses the creation of an analytics API by David Wobrock, detailing the differences between relational and analytical data as well as the API's use cases within the Botify SEO platform. It outlines the architecture of the API, including the Botify Query Language (BQL) and various data aggregation methods. It emphasizes the importance of understanding the use case, optimizing performance, and providing flexible ways to consume the data.