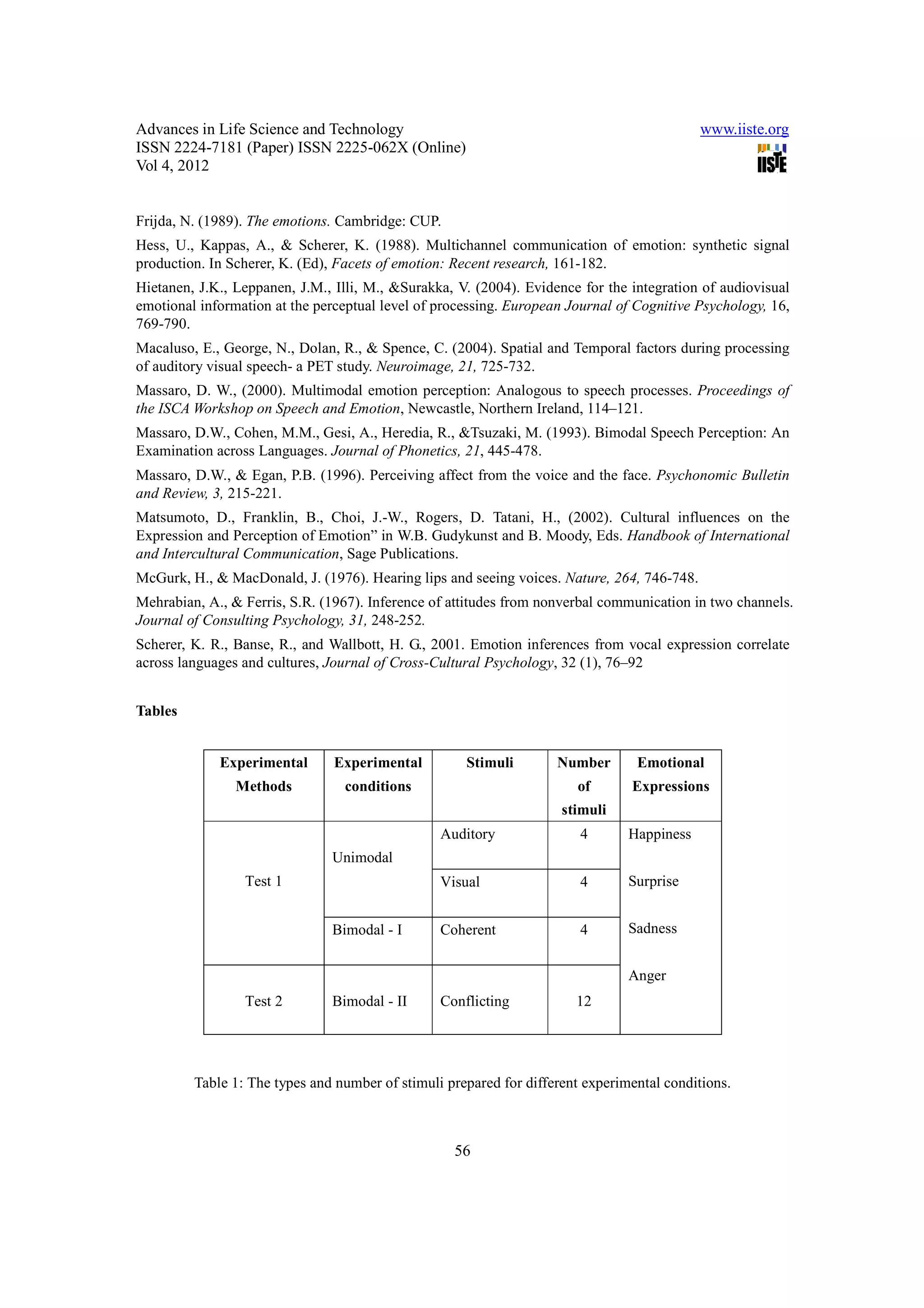

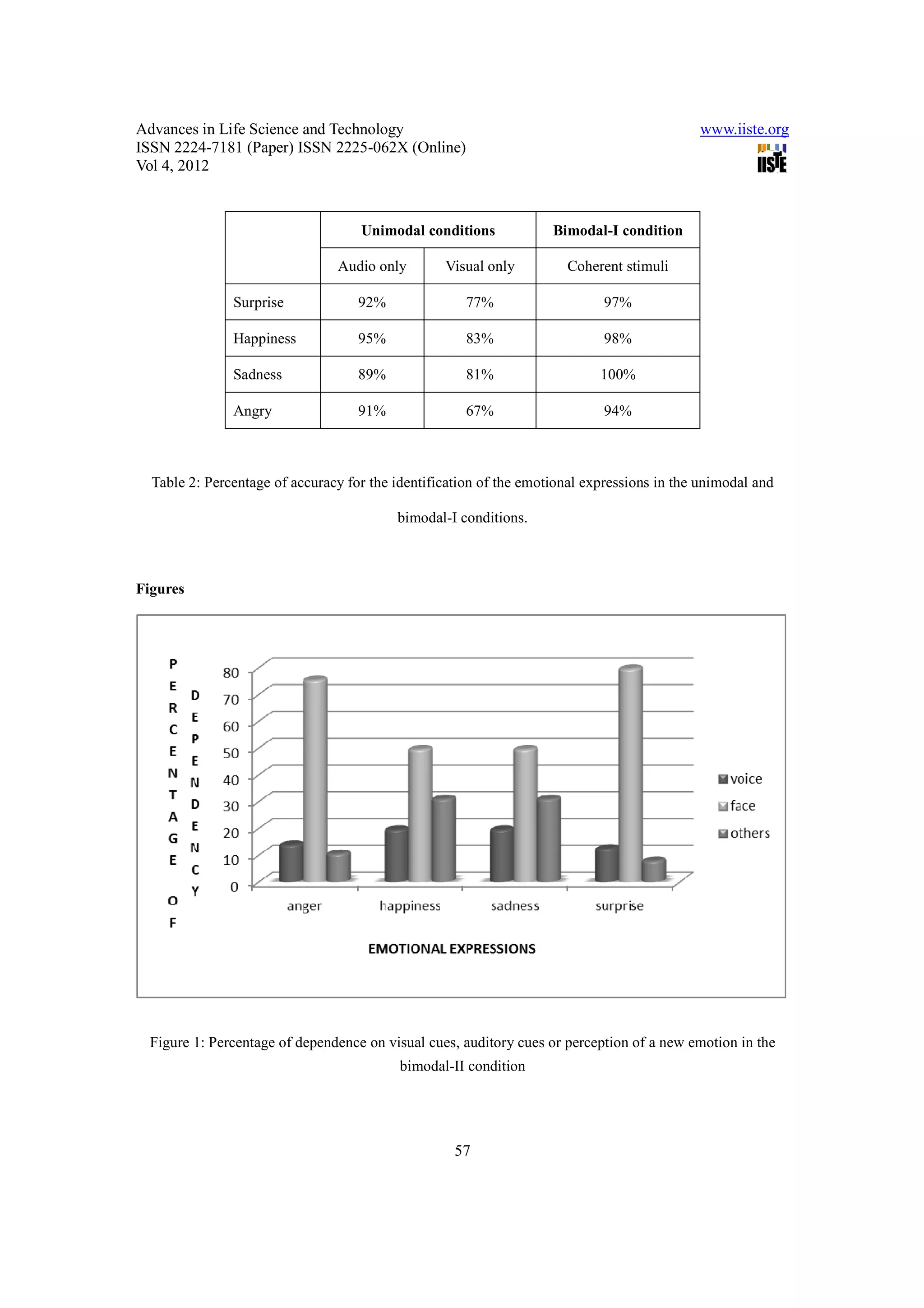

This document summarizes a study of the McGurk effect for emotions in the Kannada language. The researchers made audio and video recordings of a speaker producing the word "namaskara" in four emotions (happiness, surprise, sadness, and anger). Subjects were presented with these recordings in isolated and combined formats and asked to identify the intended emotion. When the audio and video emotions conflicted, subjects relied more on the visual information and sometimes perceived a new emotion. The study aimed to understand auditory-visual processing of emotions in an Indian language and to provide evidence that the McGurk effect applies to emotional perception as it does to speech sounds.