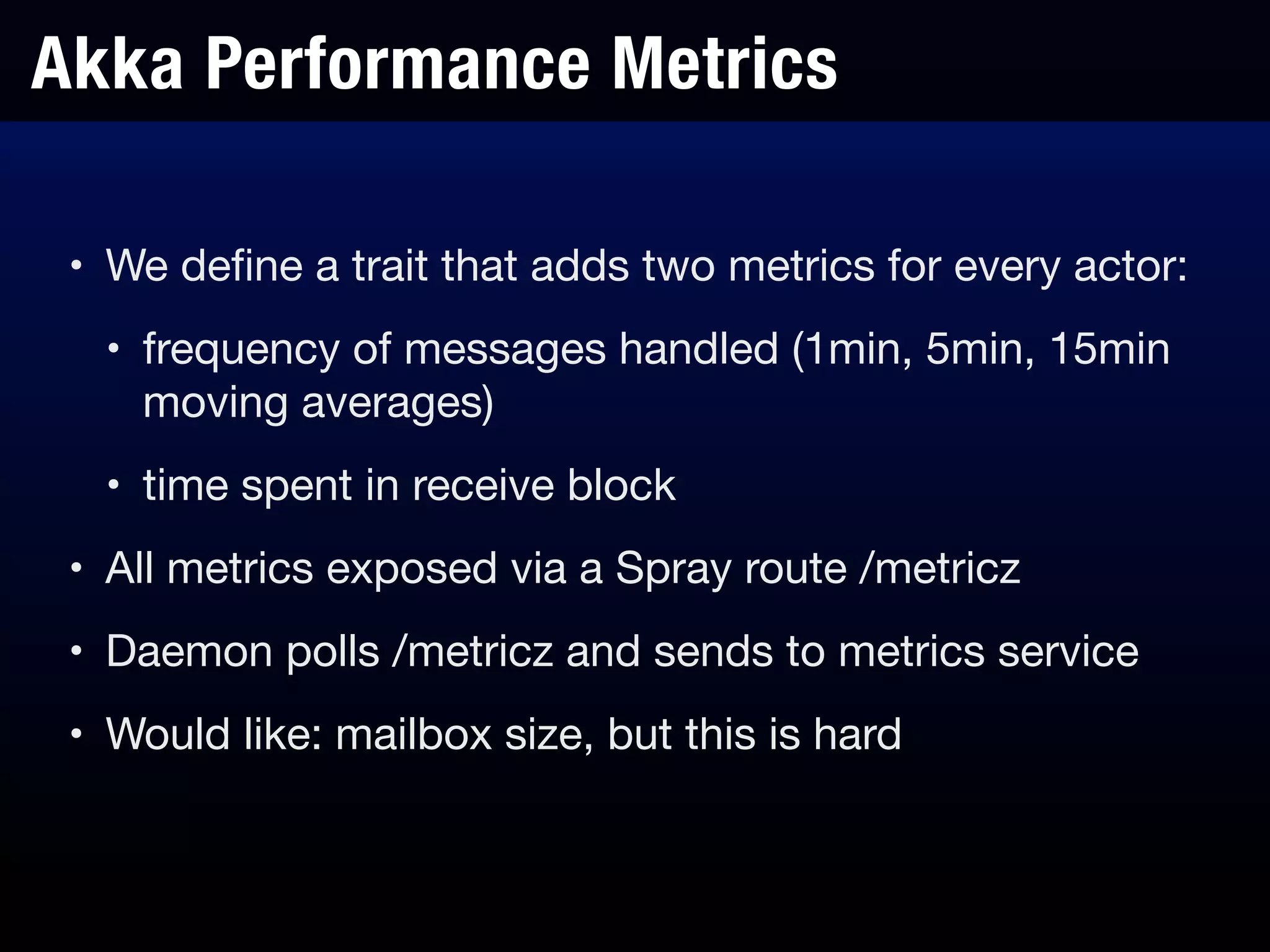

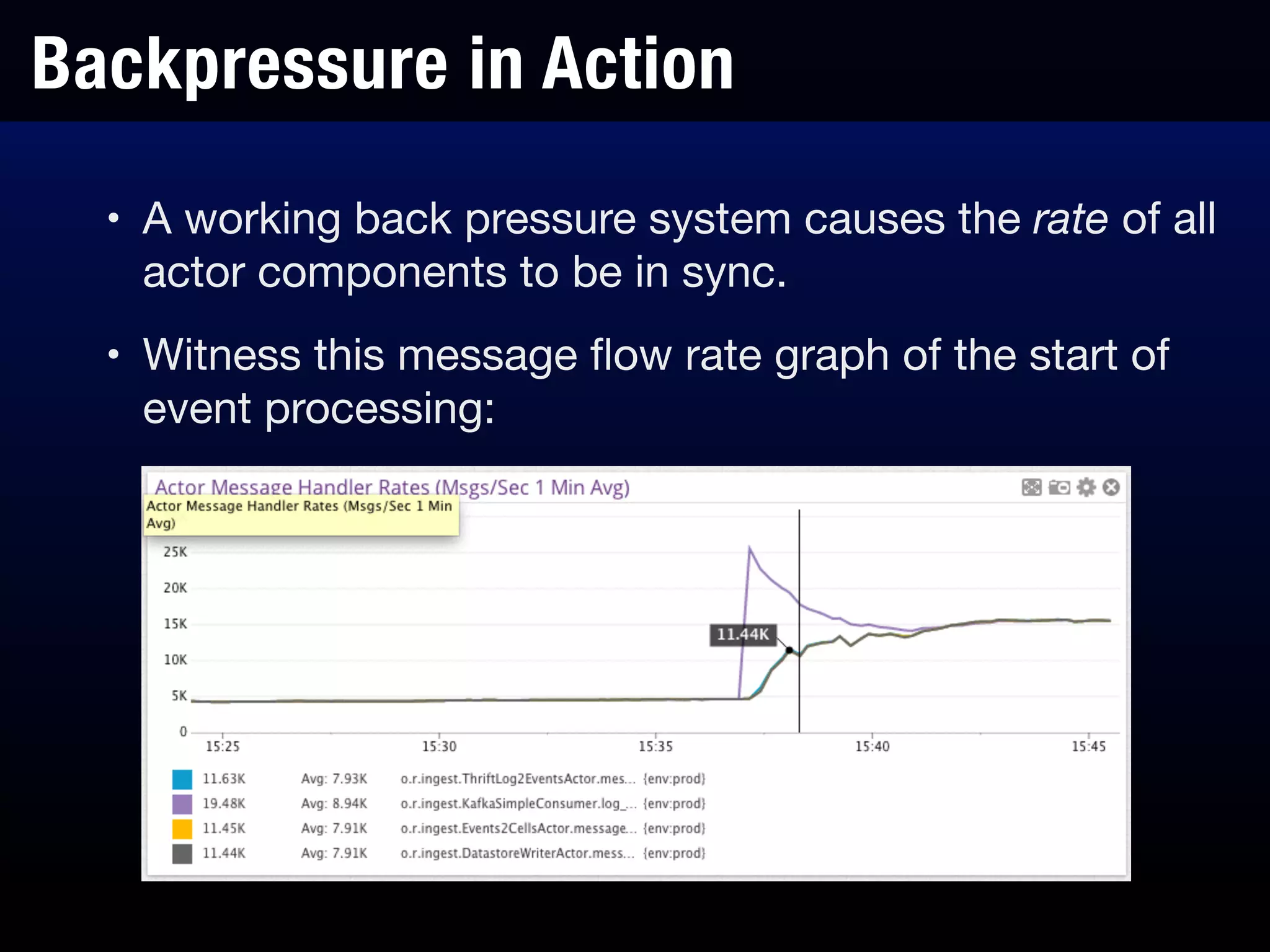

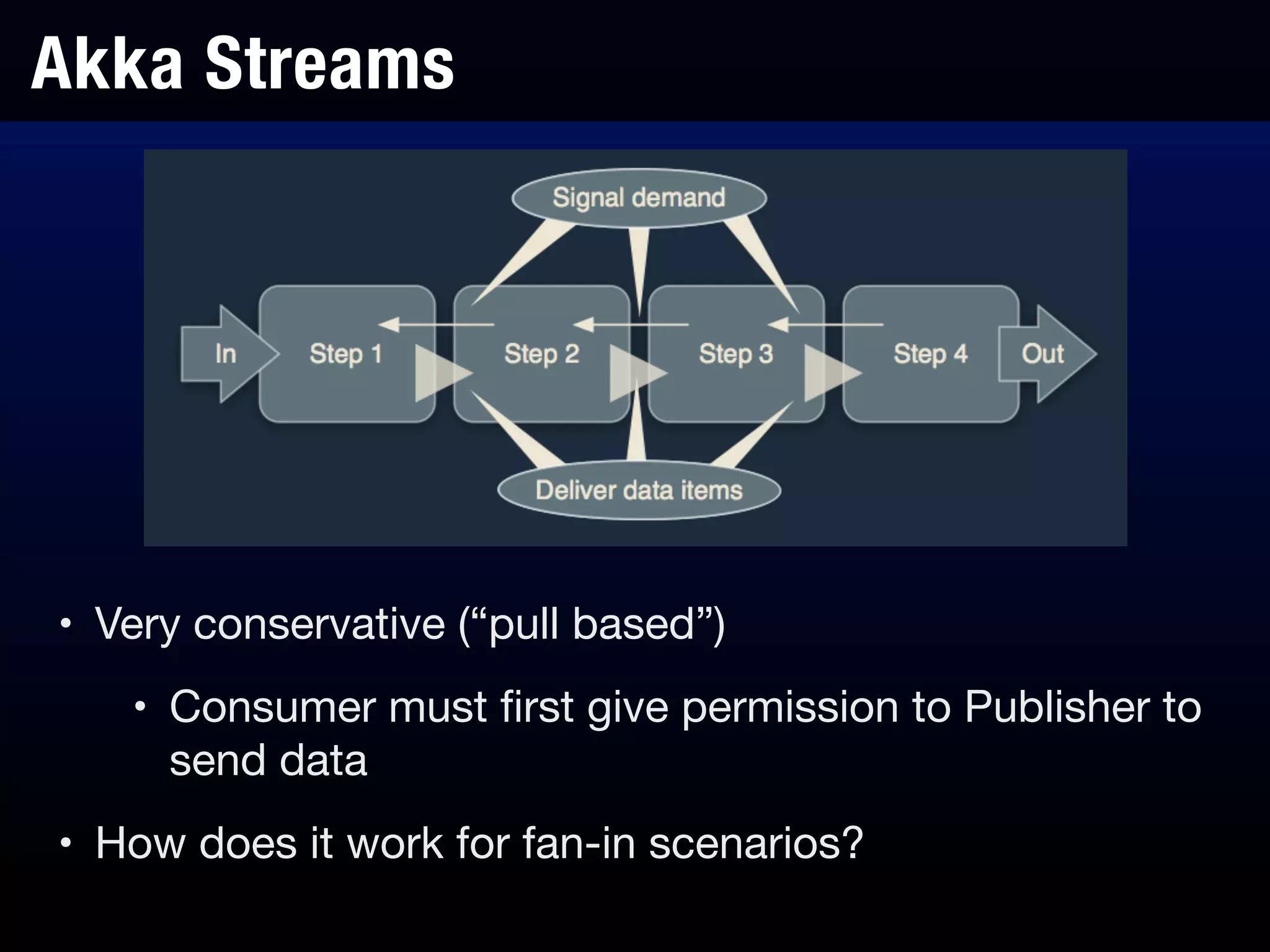

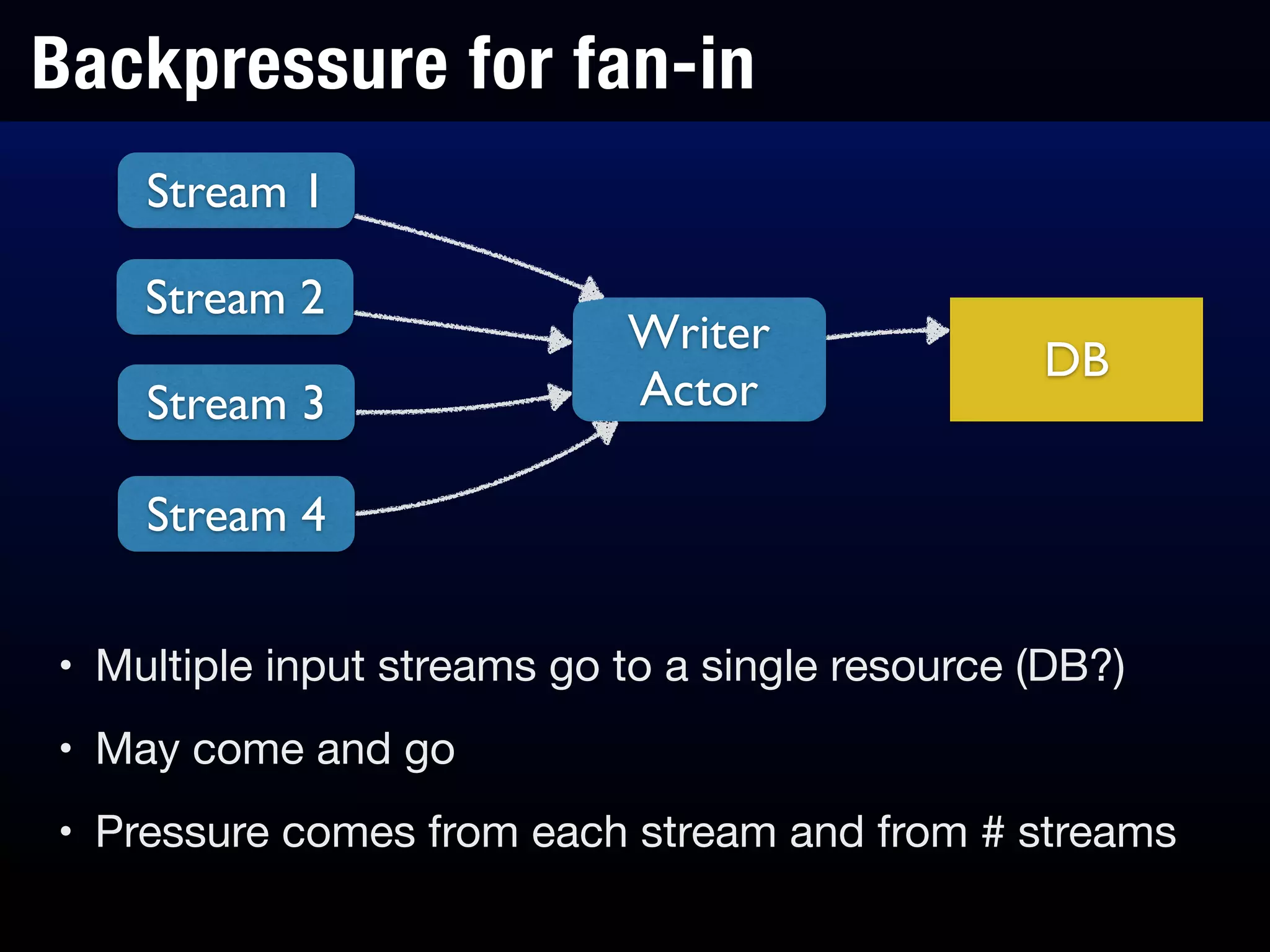

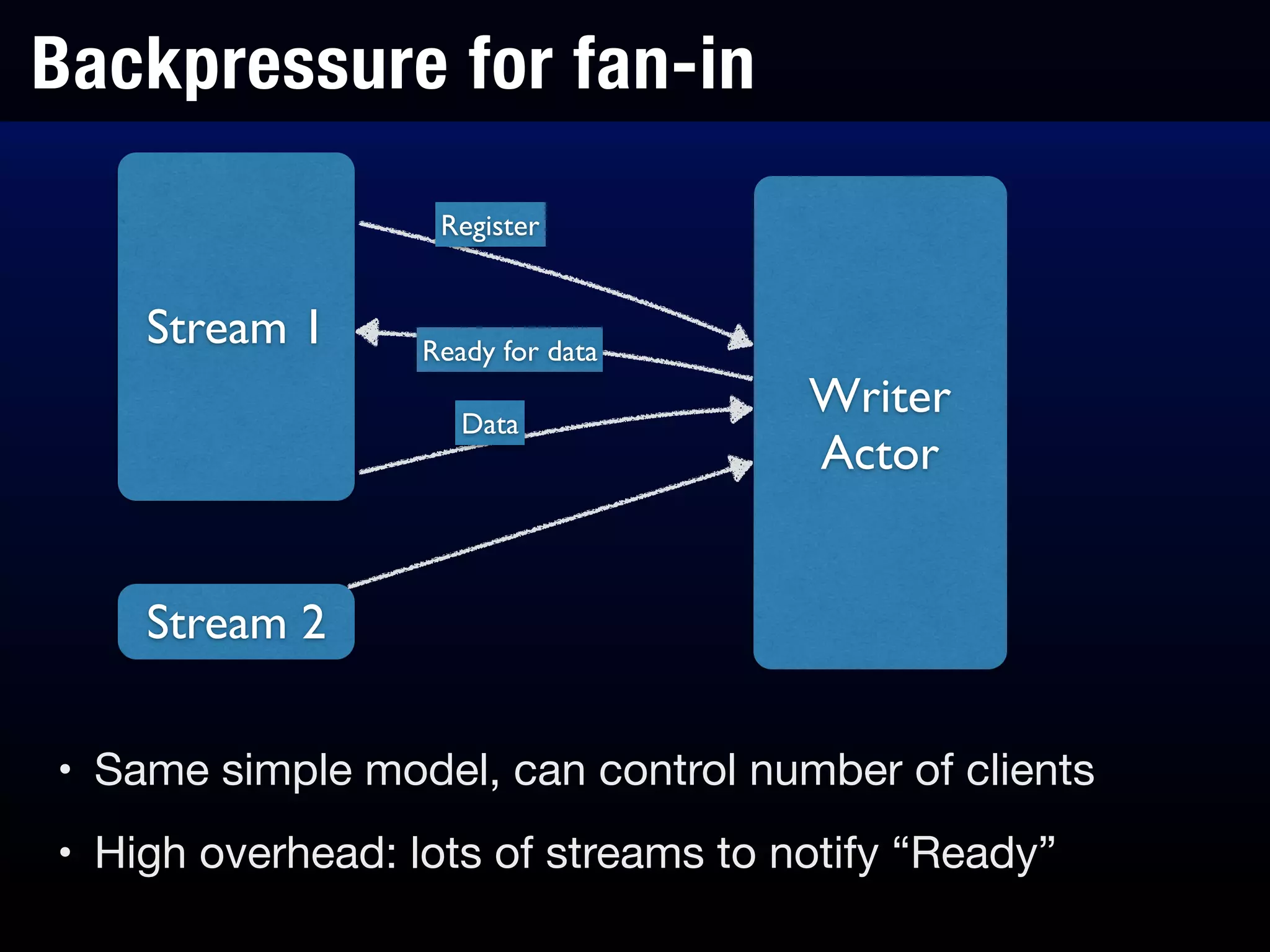

The presentation covers running Akka in production at Socrata, including the company's move from a Java-based architecture to a fully Scala-based, microservice-oriented backend. Topics include event-driven architectures, backpressure management, performance metrics, and libraries for instrumentation and monitoring. It also shares lessons learned from production deployments and best practices for developing with Akka.

![Tracing Akka Message Flows

• A stack trace is very useful for traditional apps, but for Akka apps, you get this:

at akka.dispatch.Future$$anon$3.liftedTree1$1(Future.scala:195) ~[akka-actor-2.0.5.jar:2.0.5]

at akka.dispatch.Future$$anon$3.run(Future.scala:194) ~[akka-actor-2.0.5.jar:2.0.5]

at akka.dispatch.TaskInvocation.run(AbstractDispatcher.scala:94) [akka-actor-2.0.5.jar:2.0.5]

at akka.jsr166y.ForkJoinTask$AdaptedRunnableAction.exec(ForkJoinTask.java:1381) [akka-actor-2.0.5.jar:2.0.5]

at akka.jsr166y.ForkJoinTask.doExec(ForkJoinTask.java:259) [akka-actor-2.0.5.jar:2.0.5]

at akka.jsr166y.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:975) [akka-actor-2.0.5.jar:2.0.5]

at akka.jsr166y.ForkJoinPool.runWorker(ForkJoinPool.java:1479) [akka-actor-2.0.5.jar:2.0.5]

at akka.jsr166y.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:104) [akka-actor-2.0.5.jar:2.0.5]

--> trAKKAr message trace <--

akka://Ingest/user/Super --> akka://Ingest/user/K1: Initialize

akka://Ingest/user/K1 --> akka://Ingest/user/Converter: Data

• What if you could get an Akka message trace?](https://image.slidesharecdn.com/akka-in-production-scaladays2015-150318112025-conversion-gate01/75/Akka-in-Production-ScalaDays-2015-31-2048.jpg)
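The following minimal, library-free Scala sketch illustrates what such a trace contains. It assumes each hop is recorded as a (sender path, receiver path, message) triple, for example from inside an actor's `receive` using the sender's path and `self.path`. `TraceEntry` and `MessageTrace` are hypothetical names, and this is not how trAKKAr itself is implemented.

```scala
import scala.collection.mutable

// Hypothetical record of one hop: who sent what to whom
final case class TraceEntry(from: String, to: String, message: Any) {
  // Strip constructor arguments so a case class like Data(42) prints as "Data"
  override def toString: String =
    s"$from --> $to: ${message.toString.takeWhile(_ != '(')}"
}

// Hypothetical bounded trace: keeps only the most recent maxEntries hops
final class MessageTrace(maxEntries: Int) {
  require(maxEntries > 0, "maxEntries must be positive")
  private val hops = mutable.Queue.empty[TraceEntry]

  // Synchronized because actors on different dispatcher threads may record concurrently
  def record(from: String, to: String, message: Any): Unit = synchronized {
    hops.enqueue(TraceEntry(from, to, message))
    if (hops.size > maxEntries) hops.dequeue() // drop the oldest hop
  }

  // Render the trace in the "sender --> receiver: Message" style shown on the slide
  def dump(): String = synchronized {
    ("--> message trace <--" :: hops.toList.map(_.toString)).mkString("\n")
  }
}
```

Keeping only the most recent hops in a bounded buffer caps memory use, at the cost of losing older history when a failure surfaces long after its cause.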

![Using Logback with Akka

• Pretty easy setup

• Include the akka-slf4j and Logback jars

• In your application.conf:

akka.event-handlers = ["akka.event.slf4j.Slf4jEventHandler"]

• Use a custom logging trait, not ActorLogging

• ActorLogging does not allow adjustable logging levels

• Want the Actor path in your messages?

• org.slf4j.MDC.put("actorPath", self.path.toString)](https://image.slidesharecdn.com/akka-in-production-scaladays2015-150318112025-conversion-gate01/75/Akka-in-Production-ScalaDays-2015-55-2048.jpg)
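The MDC tip relies on one detail. SLF4J's MDC holds its values per thread, and an actor's messages may be processed on different dispatcher threads. The value therefore has to be set each time a message is handled, not once. The sketch below models this with a plain `ThreadLocal`. `ToyMdc` and `MdcDemo` are hypothetical stand-ins, not the SLF4J API.

```scala
// Hypothetical stand-in for org.slf4j.MDC: a per-thread map of context values
object ToyMdc {
  private val context = new ThreadLocal[Map[String, String]] {
    override protected def initialValue(): Map[String, String] = Map.empty
  }

  def put(key: String, value: String): Unit = context.set(context.get + (key -> value))
  def get(key: String): Option[String] = context.get.get(key)
  def clear(): Unit = context.remove()
}

object MdcDemo {
  // Set the value as each message is handled, on whatever thread runs it
  def handleMessage(actorPath: String): Option[String] = {
    ToyMdc.put("actorPath", actorPath)
    ToyMdc.get("actorPath") // the value a log call on this thread would now see
  }

  // A value put on one thread is invisible on another, so putting it once
  // (say, in the actor's constructor) is not enough
  def seenOnAnotherThread(): Option[String] = {
    var seen: Option[String] = Some("not run")
    val t = new Thread(() => { seen = ToyMdc.get("actorPath") })
    t.start()
    t.join() // join() makes the other thread's write visible here
    seen
  }
}
```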

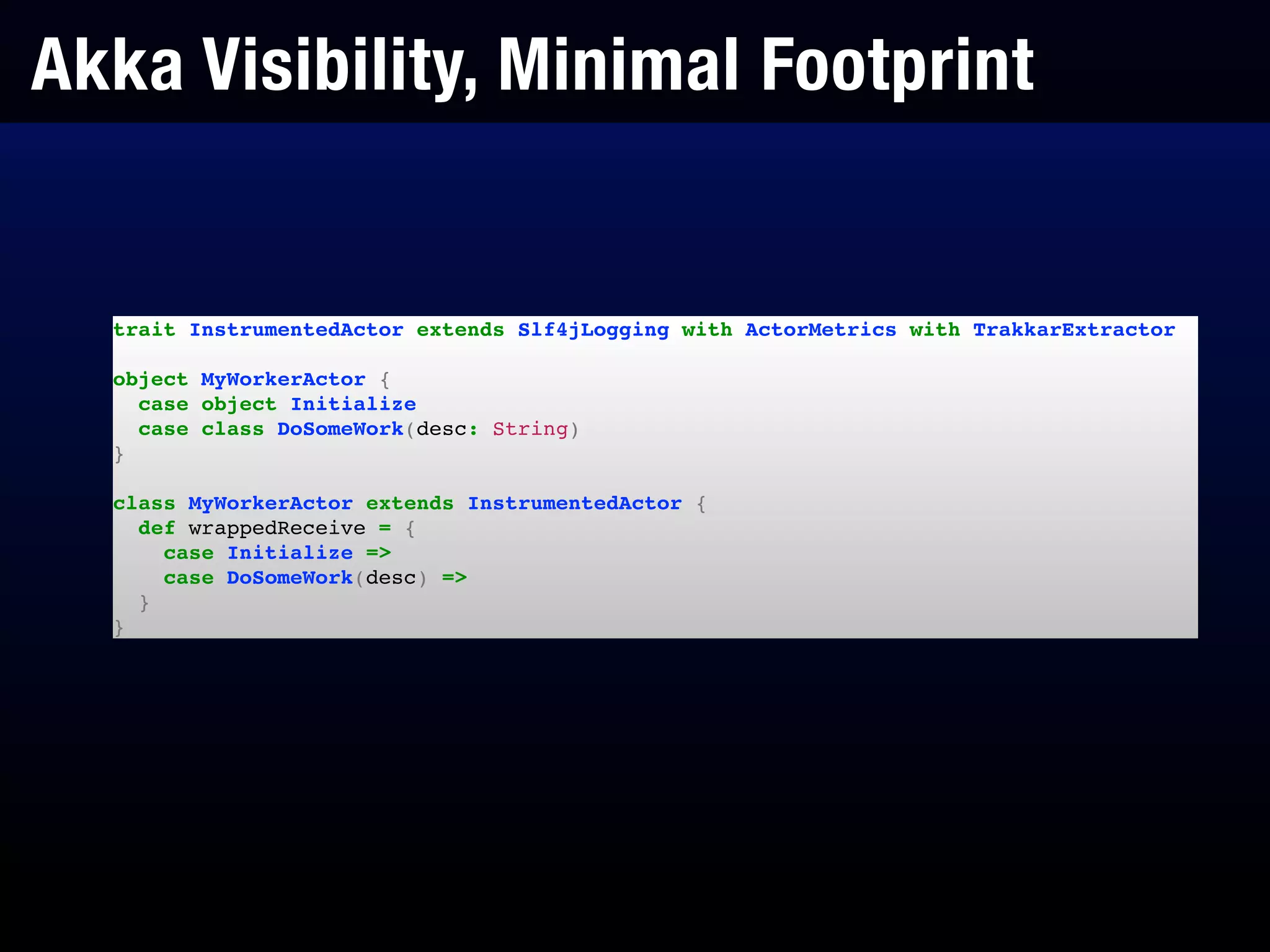

![Using Logback with Akka

trait Slf4jLogging extends Actor with ActorStack {

val logger = LoggerFactory.getLogger(getClass)

private[this] val myPath = self.path.toString

logger.info("Starting actor " + getClass.getName)

override def receive: Receive = {

case x =>

org.slf4j.MDC.put("akkaSource", myPath)

super.receive(x)

}

}](https://image.slidesharecdn.com/akka-in-production-scaladays2015-150318112025-conversion-gate01/75/Akka-in-Production-ScalaDays-2015-56-2048.jpg)
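The trait above uses the stackable-trait pattern. `Slf4jLogging` overrides `receive`, does its work, and then delegates with `super.receive(x)`. `ActorStack` is not defined on the slide, so the sketch below assumes a hypothetical version whose `receive` dispatches to a `wrappedReceive` handler supplied by the concrete actor. It is modeled without Akka so the call order can be checked directly: a plain `PartialFunction[Any, Unit]` stands in for `Receive`, and a log buffer stands in for `unhandled` and `MDC.put`.

```scala
import scala.collection.mutable.ListBuffer

object StackDemo {
  type Receive = PartialFunction[Any, Unit]

  // Hypothetical stand-in for the slide's ActorStack (not an Akka API)
  trait ActorStack {
    val log: ListBuffer[String] = ListBuffer.empty
    // Concrete actors put their real message handling here
    def wrappedReceive: Receive
    // Bottom of the stack: delegate to wrappedReceive, or treat the message as unhandled
    def receive: Receive = {
      case x =>
        if (wrappedReceive.isDefinedAt(x)) wrappedReceive(x)
        else log += s"unhandled: $x"
    }
  }

  // Plays the role of Slf4jLogging: do something before every message, then pass it on
  trait ContextLogging extends ActorStack {
    override def receive: Receive = {
      case x =>
        log += s"context set for: $x" // where the slide calls MDC.put(...)
        super.receive(x)
    }
  }

  // A concrete "actor" that only understands Ints
  class Worker extends ActorStack with ContextLogging {
    def wrappedReceive: Receive = { case n: Int => log += s"handled: $n" }
  }
}
```

Scala linearizes `Worker extends ActorStack with ContextLogging` as `Worker`, `ContextLogging`, `ActorStack`. The context step therefore always runs before the actor's own handler, even for messages the handler does not understand.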