This document summarizes a study that examined the strengths and weaknesses of the United States Air Force Officer Evaluation Report (OER) system and recommended alternative designs. A key finding was that performance appraisal methods drawn from the literature and from other organizations were not fully applicable to Air Force needs. Interviews with Air Force officers revealed problems such as a perception that all officers are above average and a reluctance to differentiate performance. Three alternative conceptual OER designs were developed, each focused on job performance, providing differentiation, and minimizing administrative burden. The preferred alternative limited top performance ratings to 10% of officers at each level to promote differentiation while avoiding stigma. The report recommends implementing this alternative along with changes to the information provided to promotion boards. It concludes that a

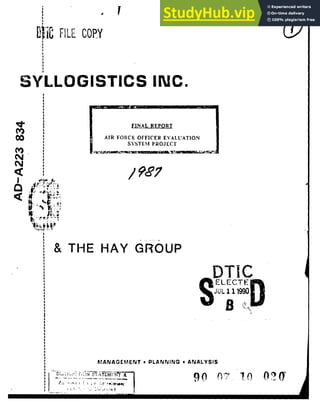

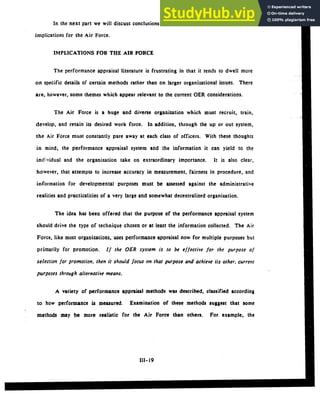

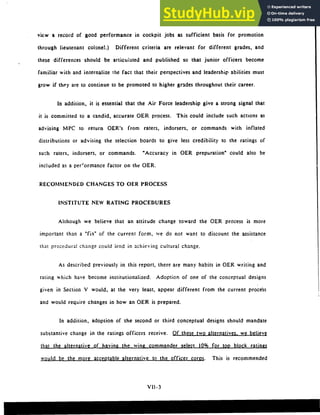

![FIGURE V-

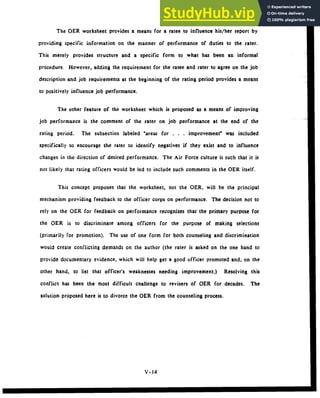

CONCEPTUAL DESIGN 1

OFFICER IDENTIFICATION DATA

1. NAME 2. SSAN 3. GRADE 4. DAFSC

5. DUTY TITLE

6. PAS CODE

7. ORGANIZATION, COMMAND, LOCATION

8. PERIOD OF REPORT 9. DAYS OF SUPERVISION 10. REASON FOR REPORT

FROM THRU BY RATING OFFICIAL

II. JOB DESCRIPTION

OTHER ASSIGNED DUTIES

ASSESSMENT OF PERFORMANCE BY RATING OFFICIAL

JOB PERFORMANCE FACTORS

APPLICATION OF TECHNICAL KNOWLEDGE AND SKILLS

PLANNING AND ORGANIZATION OF WORK

THE EXERCISE OF LEADERSHIP

MANAGEMENT OF RESOURCES

IDENTIFICATION AND RESOLUTION OF PROBLEMS

COMMUNICATIONS

COMMENTS ON PERFORMANCE (Define accomplishments for rating period)

NAME, GRADE, BR OF SVC, COMD, LOCATION DUTY TITLE DATE

SSAN SIGNATURE OF RATING OFFICIAL

V- 19](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-137-320.jpg)

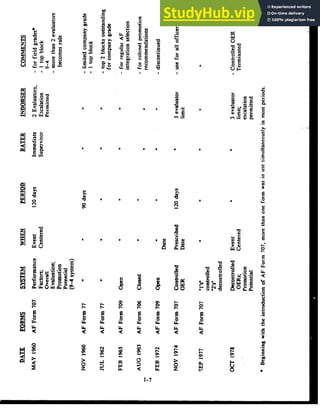

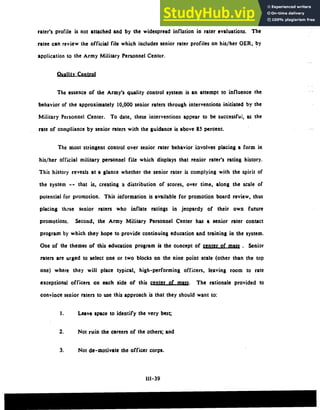

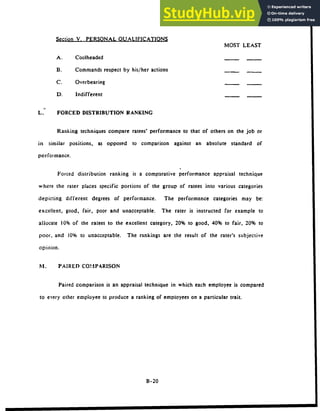

![FIGURE V-5

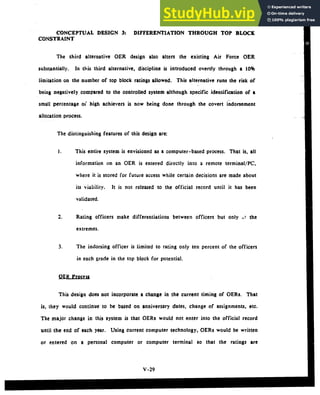

CONCEPTUAL DESIGN 3

RATEE IDENTIFICATION DATA

1. NAME 3. GRADE 4. DAFSC

6. PAS CODE

7. ORGANIZATION, COMMAND, LOCATION

8. PERIOD OF REPORT 9. DAYS OF SUPERVISION 10. REASON FOR REPORT

FROM THRU BY RATING OFFICIAL

II. JOB DESCRIPTION

OTHER ASSIGNED DUTIES

JOB PERFORMANCE FACTORS

RATING: Not Observed

DNM MSE CE

APPLICATION OF TECHNICAL KNOWLEDGE AND SKILLS

PLANNING AND ORGANIZATION OF WORK DNM MSE CE

THE EXERCISE OF LEADERSHIP DNM MSE CE

MANAGEMENT OF RESOURCES DNM MSE CE

IDENTIFICATION AND RESOLUTION OF PROBLEMS DNM MSE CE

COMMUNICATIONS DNM MSE CE

SPACE RESERVED FOR MPC USE (%) (%) (%)

[The raters' ratings for all (grade) in (year)]

DNM - DOES NOT Consistently MEET the performance standards MSE - MEETS and SOMETIMES EXCEEDS the performance standards

CE - CONSISTENTLY EXCEEDS the performance standards

COMMENTS ON PERFORMANCE (Define accomplishments for the rating period)

NAME, GRADE, BR SVC, ORGN, COMD, LOCATION DATE

SSAN SIGNATURE OF CERTIFYING OFFICIAL

V-33](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-151-320.jpg)

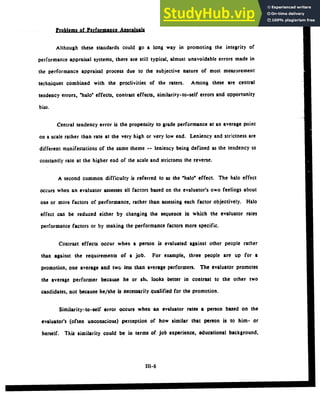

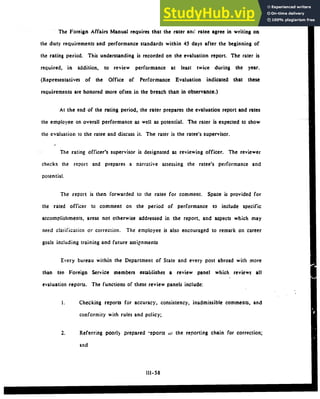

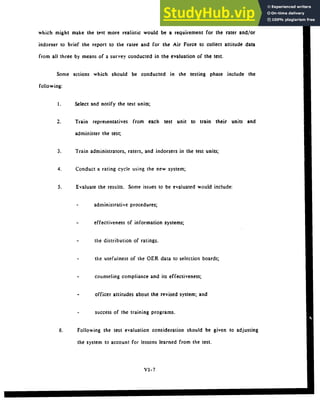

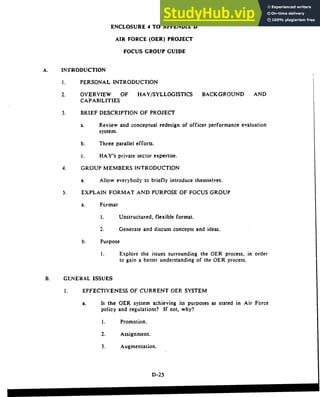

![AFR 36-10 Attachment 1. 26 October 1982 Effective 1 November 1982

SAMPLE

I. RATEE IDENTIFICATION DATA (Read AFR 36-10 carefully before filling in any item)

1. NAME (Last, First, Middle Initial) 2. SSAN (Include Suffix) 3. GRADE 4. DAFSC

SMITH, Jack II 1231-34-5432 Captain

5. ORGANIZATION, COMMAND, LOCATION 6. PAS CODE

345 Tac Ftr Wg (TAC), Mt Home AFB, ID MTOTDKLS

7. PERIOD OF REPORT 8. NO. DAYS OF SUPERVISION 9. REASON FOR REPORT

FROM: 13 Jul 81 THRU: 31 Oct 82 1201 Annual

II. JOB DESCRIPTION 1. DUTY TITLE: Enter [illegible] and approved duty title as of the closeout date of the report (paragraph 2a this attachment).

2. KEY DUTIES, TASKS, AND RESPONSIBILITIES:

Item 2: Describe the type and level of responsibility, the impact, the number of people supervised, the dollar value of projects managed, and any other facts which describe the job of this particular ratee.

III. PERFORMANCE FACTORS

Specific example of performance required. Rating columns: NOT OBSERVED, BELOW STANDARD, MEETS STANDARD, ABOVE STANDARD [remaining column headings illegible]

1. JOB KNOWLEDGE (Depth, currency, breadth)

What has the ratee done to actually demonstrate depth, currency or breadth of job knowledge? Consider both quality and quantity of work.

2. JUDGMENT AND DECISIONS (Consistent, accurate, effective)

Does the ratee think clearly and develop correct and logical conclusions? Does the ratee grasp, analyze, and present workable solutions to problems?

3. PLAN AND ORGANIZE WORK (Timely, [illegible])

Does the ratee look beyond immediate job requirements? How has the ratee anticipated critical events?

4. MANAGEMENT OF RESOURCES (Manpower and materiel)

Does the ratee get maximum return for personnel, material and energy expended? Consider the balance between minimizing cost and mission accomplishment.

5. LEADERSHIP (Initiative, accept responsibility)

How has the ratee demonstrated initiative, acceptance of responsibility, and ability to direct and motivate group effort towards a goal?

6. ADAPTABILITY TO STRESS (Stable, flexible, dependable)

How has the ratee handled pressure? Does quality of work drop off? Improve?

7. ORAL COMMUNICATION (Clear, concise, confident)

How has the ratee demonstrated the ability to present ideas orally?

8. WRITTEN COMMUNICATION (Clear, concise, organized)

How has the ratee demonstrated the ability to present ideas in writing?

9. PROFESSIONAL QUALITIES (Attitude, dress, cooperation, bearing)

How well does the officer meet and enforce Air Force standards of bearing, dress, grooming and courtesy? Is the image projected by the ratee an asset to the Air Force?

10. HUMAN RELATIONS (Equal opportunity participation, sensitivity)

How has the ratee demonstrated support for the AF Equal Opportunity Program, and sensitivity for the human needs of others? Evaluation of this factor is MANDATORY.

AF FORM 707 PREVIOUS EDITION WILL BE USED. OFFICER EFFECTIVENESS REPORT](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-250-320.jpg)

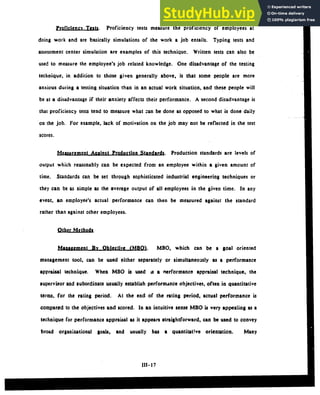

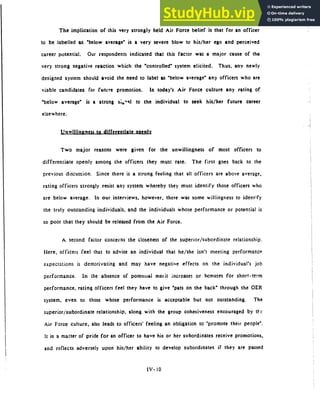

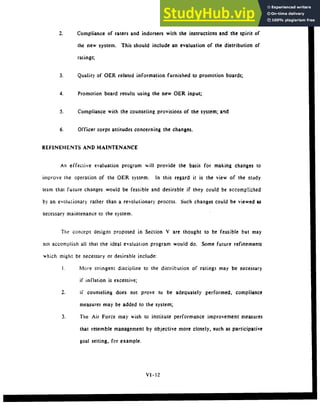

![LIST YOUR SIGNIFICANT CONTRIBUTIONS

SIGNATURE AND DATE

PART V - RATER AND/OR INTERMEDIATE RATER (Review and comment on Part IV above. Ensure remarks are consistent with your performance and potential evaluation on DA Form 67-8.)

a. RATER COMMENTS (Optional)

SIGNATURE AND DATE (Mandatory)

SIGNATURE AND DATE (Mandatory)

DATA REQUIRED BY THE PRIVACY ACT OF 1974 (5 U.S.C. 552a)

1. AUTHORITY: Sec 301 Title 5 USC; Sec 3012 Title 10 USC.

2. PURPOSE: DA Form 67-8, Officer Evaluation Report, serves as the primary source of information for officer personnel management decisions. DA Form 67-8-1, Officer Evaluation Support Form, serves as a guide for the rated officer's performance, development of the rated officer, enhances the accomplishment of the organization mission, and provides additional performance information to the rating chain.

3. ROUTINE USE: DA Form 67-8 will be maintained in the rated officer's official military Personnel File (OMPF) and Career Management Individual File (CMIF). A copy will be provided to the rated officer either directly or sent to the forwarding address shown in Part I, DA Form 67-8. DA Form 67-8-1 is for organizational use only and will be returned to the rated officer after review by the rating chain.

4. DISCLOSURE: Disclosure of the rated officer's SSAN (Part I, DA Form 67-8) is voluntary. However, failure to verify the SSAN may result in a delayed or erroneous processing of the officer's OER. Disclosure of the information in Part IV, DA Form 67-8-1 is voluntary. However, failure to provide the information requested will result in an evaluation of the rated officer without the benefits of that officer's comments. Should the rated officer use the Privacy Act as a basis not to provide the information requested in Part IV, the Support Form will contain the rated officer's statement to that effect and be forwarded through the rating chain in accordance with AR 623-105.

F-.5](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-253-320.jpg)

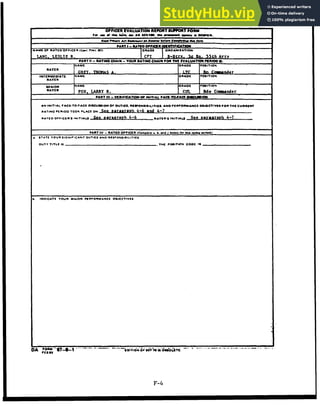

![Pt .100 CO V11111

PART V - PERFORMANCE AND POTENTIAL EVALUATION (Rater)

a. RATED OFFICER'S NAME

RATED OFFICER IS ASSIGNED IN ONE OF HIS/HER DESIGNATED SPECIALTIES/MOS

b. PERFORMANCE DURING THIS RATING PERIOD. REFER TO PART III, DA FORM 67-8 AND PART III a, b, AND c, DA FORM 67-8-1

c. COMMENT ON SPECIFIC ASPECTS OF THE PERFORMANCE. REFER TO PART III, DA FORM 67-8 AND PART III a, b, AND c, DA FORM 67-8-1. DO NOT USE FOR COMMENTS ON POTENTIAL

d. THIS OFFICER'S POTENTIAL FOR PROMOTION TO THE NEXT HIGHER GRADE IS

PROMOTE AHEAD OF [illegible]

e. COMMENT ON POTENTIAL

PART VI - INTERMEDIATE RATER

a. COMMENTS

PART VII - SENIOR RATER

a. POTENTIAL EVALUATION

DA USE ONLY

A COMPLETED DA FORM [illegible] REPORT AND CONSIDERED [illegible]

F- 7](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-255-320.jpg)

![SUPEnS USE ONLY suptils USE ONLY-

I IoPI16

, 11-_ ,, 03

REPORT ON THE FITNESS OF OFFICERS

NAME (LAST, FIRST, MIDDLE); GRADE; SSN; UIC

OCCASION FOR REPORT; PERIOD OF REPORT (FROM, TO)

EMPLOYMENT OF COMMAND (CONTINUED ON REVERSE SIDE OF RECORD COPY)

REPORTING SENIOR (LAST NAME, FI, MI); GRADE

DUTIES ASSIGNED (CONTINUE ON REVERSE SIDE OF RECORD COPY)

SPECIFIC ASPECTS OF PERFORMANCE: GOAL SETTING; WORKING RELATIONS [remaining items illegible]

EVALUATION SUMMARY

SIGNATURE OF OFFICER; SIGNATURE OF REPORTING SENIOR

S IE](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-256-320.jpg)

![PROGRAM i. OaImNLZATIOcl

DF is st£ . Iruc 1 9 DESCRt~nol TITLE(Alshc eal" es q--

2. MARINE REPORTED ON

a. LAST NAME b. FIRST NAME c. MI d. GRADE e. IDENTIFICATION NO. f. PMOS g. STATUS

3. OCCASION AND PERIOD COVERED

a. OCC b. FROM, TO c. TYPE d. PERIODS OF NONAVAILABILITY

4. DUTY ASSIGNMENT 5. SPECIAL INFORMATION

6. RESERVED FOR FUTURE USE 7. RESERVED FOR FUTURE USE 8. ORGANIZED RESERVE DRILLS

9. DEPENDENTS REQUIRING TRANSPORTATION

a. NO. b. LOCATION c. ADDRESS

10a. DUTY PREFERENCE (Code) 10b. DUTY PREFERENCE (Descriptive Title)

11. REPORTING SENIOR

12. SPECIAL CASE (Mark if applicable) 14d. ATTENTION TO DUTY 15a. YOUR ESTIMATE OF THIS MARINE'S "GENERAL VALUE TO THE SERVICE"

13. PERFORMANCE 14e. COOPERATION 15b. DISTRIBUTION OF MARKS FOR ALL MARINES OF THIS GRADE

13b. ADDITIONAL DUTIES 14f. INITIATIVE 15. FILL BOXES SO THAT THE SUM OF EACH COLUMN CORRESPONDS TO ITEM 15b

13c. ADMINISTRATIVE DUTIES 14g. JUDGMENT

13d. HANDLING OFFICERS [illegible] 14h. PRESENCE OF MIND

13e. HANDLING ENLISTED PERSONNEL 14i. FORCE

13f. TRAINING PERSONNEL 14j. LEADERSHIP 16. CONSIDERING THE REQUIREMENTS OF SERVICE IN WAR, INDICATE YOUR ATTITUDE TOWARD HAVING THIS MARINE UNDER YOUR COMMAND

NOT OBSERVED; PREFER NOT; BE WILLING; BE GLAD; PARTICULARLY DESIRE

13g. TACTICAL HANDLING OF TROOPS 14k. LOYALTY

17. HAS THIS MARINE BEEN THE SUBJECT OF ANY OF THE FOLLOWING REPORTS? IF YES, REFERENCE IN SECTION C.

a. COMMENDATORY b. ADVERSE c. DISCIPLINARY ACTION

14. QUALITIES 14l. PERSONAL RELATIONS

14a. ENDURANCE

14b. PERSONAL APPEARANCE 14m. ECONOMY OF MANAGEMENT 18. REPORT BASED ON OBSERVATION 19. QUALIFIED FOR PROMOTION

DAILY; FREQUENT; INFREQUENT

14c. MILITARY PRESENCE 14n. GROWTH POTENTIAL 20. RECOMMENDATION FOR NEXT DUTY 21. RESERVED FOR FUTURE USE

RECORD A CONCISE APPRAISAL OF THE PROFESSIONAL CHARACTER OF MARINE REPORTED ON. THIS SPACE MUST NOT BE LEFT BLANK.

22. I CERTIFY the information in section A is correct to the best of my knowledge. 23. I CERTIFY that to the best of my knowledge and belief all entries made hereon are true and without prejudice or partiality.

(Signature of Marine reported on) (Date) (Signature of Reporting Senior) (Date)

24. (Check one when required) I HAVE SEEN THIS COMPLETED REPORT AND: I HAVE NO STATEMENT TO MAKE / I HAVE ATTACHED A STATEMENT. 25. REVIEWING OFFICER (Name, Grade, Service, Duty Assignment) 25a. INITIALS

25b. DATE

(Signature of Marine reported on) (Date)

-- STAPLE ADDITIONAL PAGES HERE F-IO](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-258-320.jpg)

![f. COMMENTS (Performance of Duties continued):

4. INTERPERSONAL RELATIONS: Measures how an officer affects or is affected by others.

a. WORKING WITH OTHERS: Sometimes

dirards the ides andfestimp ,acaunagam, Ip of idea; Ule. Stimulatwe ape". ueP m ow

des•amsw"

d others, or mueee bohult hacui d Reopecot the view., ideas of others; t

ee tommun Wi

•pw. 4Ow em

Dammossalnal &ilhy to prim ,a tween . faflure to inform or consult-L b. a w-mp-tivs,lsters a m of eamwerlL. arwtkble g with *han of ael

fesS. ISimoeraits. Sodto week With oter Ueobimpaltne; Salk too muchb~sS. t. Keeps Other iftds moed w oithersl Car. rianketPstl s GeO"difermeut

People sa"

people o W N

tonS

Si ahie Momnsoon

coal& tus. May be inflaudble, os tam pe or

w mba rim shamo of lead. 'Irea. pow"l in ow wrgasnhetanm. to work lost.erh withetmatn

nxt

SWlow So resolve clcte. Not a Lamina adeat., omurte mam. Helpm datastin m them toac geBas

which H/0

player. reed-wve

conflicte and may Wuem on mmu would not eUatnwium haewbmu obtaine.

b.HtUMAN RELATIONS: Eakbite discriminatory sadmincise toward Trieset otbare falirly and with diplt Through leadership end demontiarated

o__

_ _ _ thers due to their retigton, aes. mc. ream. rarlegus of rligion,see. am, ram or athoic sum pereteonsaimamitmat insile fiair

The degree to which the ~wr.~luble the or aethntic bcgon.Allowbse his Is- hsckground. Cearries

out work,,=-nzg ~ an qa rsmeto te,.S l te

leta nad epardO

t ar the Cmm mndant's fluem ar the

o ramotmaatf lhpe. apesl responsibiltlie without btim.cregardlesn of eligico• t, Mae. . rem

Heman Rel•• a Policy and show o

ree mae tey

m piontimi to barem alwmu le I subaai dieastm acon mthls

(r b-Ting Imp or ehni b6dwakmo Dow not.WSoltem pie

-ad weham te in dealing with cams, diseectful; may makm"

aslurng remarki, i

to•etepirlt of the Commandant's Human actions or bohsvwr by anyoe.

Maker dnd Itn. f. Dcwot hold subordinate@ accountable for Relations Policy. ]= lemu•lrlyboterwo.ty catributimos, 1o

their human relations responsiblities. thi Si

___(D_ 0i) 0 of)

0 _ _ _ _0

c. COMMENTS (Interpersonal Relations):

5. LEADERSHIP SKILLS: Measures an officer's ability to guide, direct, develop, influence, and support others in their performance of work.

. LOOKING O1f FOR OCTiEIIS. 1 shrvi lithle concr-i- for th, Saaety. problems. Ceam shout peop•le Ko, es and rempond. Createes n attitude of cnresand a Sense (o

nied., goals of other* May overlook or t. .,h, need. Concermod for Lheir gaae- Wmflucilty w.n

others Peracnally ensurses

The ofrlot' Sensitivity an4 responsiveneee tolerate unfair. insensitive. or hbunve trUat- itylwell-being

l ami ble •lsneJsnd helpe r-eouwre ar available to mo t people*$

to the nede, problems, goals. end ac)hieve. eent eopeople. May b leaebk

to other, wSith personal on,ob r"11td pembleod.l = needs and thaet hmit. of ondurtorae are NWS

ment O

(there. but non.vsp"ruive to their personal needs. end goale When unable to sm.st. eSugge•ts exceeded. Alians

Ilemihk to people and

Seldom ocknowledgeo or recognaia• rubor- or proiede• other remur "Goe to het" for their problem. b)oa no tolerate unfair, in

dealtee' achievements people Rtewards daervlg subordinates ino eenutve, or abusive Lreatmnent of or by

t t'ineey falUion. others itztremely oanat enticuea in ensuring

dmereng uubcrdnat-- get appo.prate tme-

ly •ecognition.

(D Q 0 ® @ D

@®

L. I•AY•bWPU• UIOtflINAMh: Shown little interest in treining or develop. Provides opportuntiee whith enurag, Creasto.challen Situaions wh•ch prompt

-ent of eabLordin•.Le. May unnesaaar,

iy eubordinates to eapend their roles. banJo. sr 'ly high lreldevel ntof people

"Theextent towhich an ffi uses coechjng, withbold authority or over-aupervise. Imporutat •asks. and liars by doing1. L ork group run le'clo-kwork

oaeu eetangad

tag and prvidea oppoa Deesn't challeri their bilitihe. May Deetateas end hold. subordinate. accoun F .lwSyA kn,,w what' on end

guru-th

fo growth to increasm

the ktilla. w.ulersto, margis

• . lrfmaic. or eltlcues table. litecogiue goodperformanca.caract. ro .. :y handle the = e Ho!de

kLaneled, . Lad proficiency of subordinates. ezceeively. Dmoen

I beep etbordanete, in- Shortcomings. Proide opportunties for autnraiainate acsounteble. prondee tamely

formed. provides littia monaet

tctive feedback, 0ianang which support profmoal growth pr'•uir •ndconstructive ir•irm Pruovid.

artit- And maueve tsrsinng opportunities-

I •"JThUt..•.c•'r 00"r Il ;JF.s i

Pmn

officer who haa diffii•uly controlling and A loader who Saru. the &

ppor mmrad

€oou. A Setrong leader who commnande respect end

Inrlueunin og

thers .ctively. Miy not insill ent of others Set.s hih work standards iap"r. othie to ech-eve result. rnot normal,

The

.Lfr's

re •fea

ctivao In

in Influrn- •rdi'dence or enhar.,a ,opertUone amrong end epectatlions which are clearly ]y etuancable. People want to srvie under

dirst".lo others in the accomphlaun of rubordunalee and others bet& work statn. understood. iA-quiree IS to meet Uti h1erleadership Commun•catetshighwork

Leeks or masaon i darda that may be vogue or mtusonderetooi. standarda Eneohaiode Keep. people .sndaPrdend enpaataoew .hacrely

"Tolerate lAtLeor marginal performance motivated end on tiack even when "the go urdervltood_ Get es

unor reeslt. even in

Falters in difficult situationns ing #et. toagh tare- O

cical nd ddrat aituationa. Wine

over rather than nmpo*e nell

Q G0

1 0

d. KVALUA TIN(J hl'l'lt)If)vlATE. - Preri ovaluatiuo that are late,inownie Prtpae s e-valustuor which are timely. lar, Pi-epares cVsluastune which aee always on

tent w•th actusl parformnsc, ornotwtLhin soirie, anrdaoilurnt w-tih eystem stan, taie.fair. accurate amin.clearly measre per,

Te aOunt to which so ficer eceoducte.

or systse udelinos Second gineeesethe darde. ftiquired narrative are concumt, formance against the standattrs Nev"r gets

roqulro• ~ ~ o car L' 'odut

aur , /t,.ertofte need to be improved J to-zrpum,rend conU-ltirbtrto udnr.ndr reports returned for Corut-orvaiiuastrnt.

utlnflatmd arid timely eveluoaornn lor or redon. = 't hold iuber•drien ecoue Subordnates' performance and qualiies Use perforimsanc evelustbao e a tool to

onluiteod, civildan, andoffiorr personnel table for their retlogo Proides littlo no Seldom gets reports returned for co•e•s. develop subordinart. •nd

schieves notable

euvnelini g f -ranatee. tionedjittmeot Provides constructive perforomance Improvement. Sets en example

ceu-mbh4 whe., needed. Doa not eeh

1 .

in in supporting eape.lished guidelines

ecueeifatdf 'yprprdLwst

101 IG) (~~romz

others 100 8

a. COMMENTS (Leadership Skills):

F-,13](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-261-320.jpg)

![CG-312 (Page 2) (Rev. 6-64)

6. COMMUNICATION SKILLS: Measures an officer's ability to communicate in a positive, clear, and convincing manner.

a MPEAJfNG AND LUTMNMG: Countoutas as ht •is

kspb ed by fpes ck

l

dearly mnd-o1moUy. Gt- the i

,-,s alan-m aleicdad um-.

in 411 pointaamesar.

Spemaks~

1 ~o gffecivly end With new =

Alwayslislafdt and credible in both

No,.,well. un

ofcr spak AM•

btall i- in- a~~.u

milsssieuses als. rhsc inm-

both•

priate., -- A pbic-• artualas. gilelujmalinfedice

andim oo to, am,

divsduej. group, or public atsaatioaL Di o.

0 prst issibsitt confidence -hen Uses appropriate cramar-~

WW plots r

wpealkurin may be unprepsred. Latem pOW, ts% baa•o dtistraung manneriamr . GiCAs phass•es and pe•suade. L•ncuragee osthsm to

ly; doeasn't give Wenhr a chancie to speak. other a chance to speak. listens well. respond. is an sUsintowe

liesstner.

b. WRrIU•-G Writes material wh)ich ay be bard to Writs., clearly and simply. Mittelial ad. Celauueetly wnites material which isan 1ax.

undersetandi or damnet support conciu•si=n diems, subject. lows wel.,achieves intend atmple in blrevty, clarity. logical flow, and

How well an elar, conmmunicates through raldied. May use jargon or uViephr. ad purpose. Uses short aente4nc•spara- persuason.Tailmr wiucitt to audhietun..

Imroni material. rambling seltence•p•lracrupiN or Imart graphs, pereonal prionouns, eand the active tg approprte rooeerstaooal syle Wit.

gromm. asru••ire, format May oversae rm-. Av-ids buresuatoc. jarlgon Senwork neve _edok I.o

the poenve oice. Ownwot or

•Uhat d - or big words when little ones will do Own uborduisate mmw.s =m high sAtdard

dinata often needs corredjron or rewritef week or that of subordinates rarely needs

coriection or rewrite.

Of___ 0 a) a) 0 0 (D _

i. AR11CULAT•NG IDEAS May hiave valid We but lacks orIJIManiati Expresss idea nd oncepta in sioorgonit. Peadily iebshsim ecdsbility. C•ncm. Par.

or a confident delivery. MAY argu rather ad.undervtAndsibla manoter. Pointsout pro's suasive. and st=ng. Delivers Ides" with

Ability to contribute idess, to diatiseelsusss then diecuss; or may intaryset inelevant and con's. Urns soud resnig cinvinong[ogit anbl comments err"

an expem thoughts clarly. ooherit:ly. camment•. Contributes litle tha. isgs- off subjsect-L

Ieeptiv• toides ooters. C T hins c

iung, thovugh, Clearly states key

and eo1emporniscualy. is -ll or large or use••.il Unrectptive to ideas ao ethm's speak well "-Ofthe cuflf." iue-. ad quenin. BuAids on thtei

up brleeirrr m-ebtmin. O- t "ink's ' •eilon feet" in alI

d. COMMENTS (Communication Skills):

7. SUPERVISOR AUTHENTICATION

a. SIGNATURE c. SSN d. TITLE OF POSITION e. DATE

-7

IP

1 6,1 1iz

THE REPORTING OFFICER WILL COMPLETE SECTIONS 8-13. In Section 8, comment on the Supervisor's evaluation of this officer (Optional). Then, for each of the rating scales in Sections 9 and 10, compare this officer against the standards shown and assign a mark by filling in the appropriate circle. In the area following each section, describe the basis for the marks given, citing specifics where possible. Use only allotted space. Complete Sections 11, 12 and 13.

8. REPORTING OFFICER COMMENTS

9. PERSONAL QUALITIES: Measures selected qualities which illustrate the character of the individual.

£thr'l.AfIl'E Tend., to poe'.pone needed iction Ira- ! Get thinor, done Alosyt etrn~rs to do the OrrDns~t-a, nlurtures. promotes. or brngs

,I

plementa charie only &then confronted by

v ob better Makes improvenrents. "works shout new ideas, Lethod.,

or priactices, which

iemonstrated iblity to me rofr'ward. make necessity or directed to do eo.Often over smnrter. not harder - Sellstarterr, not afraid result in significa tiprovement to unt

changes. and to week responsibility iiLhout eastn

by eveniss May supprem inuatite of or mokint meitakeh Suppots ner andior Coast Guard Doe ot promote N.O

gtidanicw and supervision subor-dinatei May he non.supportive of ideaa.newL.od.opractice* and efforts of other, change for take of change Malkes woe

chatters directed by higher authonriy. to bnnC tibout constructive chanre Takes thwhile idraiipractires work w.hen osthers

ticely corrective sction toa rvoid.reiolve may have given up Always takes poisiUve

problerru acion well in advrncr:

q G) 0

0 (D

0

b JJDGMENT: May "nt show sound Iogic or common sense Dirmonsurvat. analytical thought and cemn Combintes keen analytical thought aid to.

in maJung diffdcult decisions. Somstimes ats •on sen.sei•i making proper deci-1ioi. Uses eight to make urtilo end omionul dae.

Demonstrhted abilityrto a at 1undde'm. too quickly or tou late. gets hung up in i cisand experience snd oinido, the inn. s

Isioe. lc-seeson th

e ms lnemd st

stuon

anm mkis sound recommedti onsLaO

by dataije. orboy

69WS ker elements

kl toaltis pact o( aluternatives

t

oghs nlib, cost. and relevant iliforUntaion. own in o•oplex ItU"-

using expenasce. common sen0e. end aise wironglI h tilseconaidor-atiogn Mekes sound decisions lon. Always dos the "right" thing at t

anaiy1ca though in the decia-on proce s a Utmely fashion with the olst informs --right tme.

tion evailable.

c-REPONSWCLIT.: Umiallyceaintisdpended upon to dot•s right Posmess high standard of honor and i. Uniesnmemang in midase otbf-- and ka-

thing Normally ocountable for own work tegrity Holds iself nd subordinates tun. tegrity. Placme goals

Oa Coas Guard above

flmoicnitmted mn"'

mitent tatgettingtkejob May awrvt lees thant sittlactlory, work or tabl Kep ommritsinentasteve when un. peierada

aabibtics sadi gels.. "Goss ths as.

os ad to bold oee's self ac"ountable for tolerate ndtftorsom. Teod ai to get involv. cmifortable or ddiffcult to doIn Speaks up Lre mile. and mare.' Always holds esVand

mw

anldsubordinsates' actions. ccrtneeg of ed or speak up Provides miniml support for when necessary. even if postion is un sibordesiatse accontable fopruidm and

umvri.nloL ability to ompt decasons coc- ded&isonsi

men to own kIldas. popular. L•yal to Coast Guard. Suppots tiouniea the eciragei In h Willl

tuary to own vnies andsin

mks thsem worit. ergianhethosial polcsaridedsdocep which may stand up aned

ho ---inAd fi-med -nL.

bs siontor its,ewv Ideas la eenu ps~la -olmeafdedsweerk.

__ __ 01 G

00 0 _D _

d. STAhMIA. Pill'umn

(a csI marginal leader strrni PesfaMA" ifIs

eusafred sundier straes or Pesfarmanos"

seethes an ima

iially high

or during pseudo a

of e Wendd

tr. May dsh'arigis ofextenided work with no lees lee hna4ie rdsa perds of

1'lle

orb'sia's ability to think and ac rt

fs make pooir

decilsosem

irevaook key faIos of prductleuity or msafty. Works amtr houra extsnded work. Can wor haLew" sea

lively sander waodi"iac that are witmlul ficu so0wreng priorities. or wase= ngh bat

when mO to get thne

job dones.

Stays several days and stUl remain very, proedw.

aedtesr enontally or physically fatiguiiing esafety

constidera~tions. Belka at putldung

in mel when the peesmiure

Is see ties and Marc. IThrivess =ar st1"Nsull

neci' r

av .

ti comes rattled In time situstiose

eswsitive stressul situstleews

___ __ _ 0 0 0 G0 0 00

a SOBRIrI'. Use

of lcoholc

sets powasinaplo, or semIte Usesalcmo I dum-uinua~isly andin hiemr"

Meessandeein

tosalows fear. is aedruei.

Is reduced job pseforuaine. May bring Unt. or ac at all. Job performance estivaeraf. holdsumpsevimas a assloaifor dwwwmag

The extent to which an affloweercial

name.~ didt o seret

w keissigh shohol odiaserc helnd by useof alocilol;nan diwedisct brought lag Intaemerate am and teog aey se"

madeostatbes tn theuse ofalcohol assd indres.] ad incidnsle ijall

wh~ f dulty. Dose sest

seek So

mvlmio. DoWeas

t tlsroate losisoamseatless Uspeecint ascaekl realala ladeta A

suhore to 40 wsse.I help for people with aimba related pro. by etherst Surptorts alcohol educatiocpn' le catirr

ia

mvla alanhwed

oussnta pie

IF~

h I s

a to Iinks tums adbeaisWpreveat pram a.d see. help for t1ese wiitalcols pmCreatess h sm ltars

sitrtvee Its

-- 1 -l1ois re

latedind

usdded &. re

latedpr obl rn

ia a "oh

l e

II

1 0,'. 0. _G)_ D 0](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-262-320.jpg)

![f. COMMENTS (Personal Qualities):

10. REPRESENTING THE COAST GUARD: Measures an officer's ability to bring credit to the Coast Guard through looks and actions.

as.APPECARANCE: May sat a~lwaysa" rstlav or~1= Apos t. -et and well genoamsd

in .. Always Wumaraa

an linatenomh appearance

standard. Civilia

n sts my amand civilian iatte. Presents Clearly mesets Creaming standarda.

Me awat

t a an,~

afs, r it prto~ at Urnso,May "s presnt a lityeally Wi- eapain. Rsquirsaeuaahr- Deviewatrte Vast, can in wouwing andl

"-a,,and w"ell-md. norm or pmally trie appearanc. Diownot boM diatna Its form to raomnngAnuiform munt-an undifo and dwilaat ut.s.

dyihaesn ame prescibed -at&gi inh nto. to sesm standards. standards a&d maintain a pliytmlly trimo Ham

a maert phyicmallyw~in ssilltary ap

Maaida. and anomly iu su pewn Fom exartimnm

In g•ue:an. NfO

dimiats to do lbs

o drew and physical appearance m~

dusinas

soad otherso.

_ _ _ _ _ 0 0 _ _ _ _ _ _ 0 _

b. CUSTOMS AND COURTESIES: The degree to which an officer conforms to military traditions, customs and courtesies and actively requires subordinates to do the same.

Scale descriptors, lowest to highest:

Occasionally lax in observing basic military customs, courtesies, and traditions. May not show respect when dealing with others. Tolerates lax behavior on part of subordinates.

Correct in conforming to military traditions, customs, and courtesies. Conveys their importance to others and requires subordinates to conform. Treats people with courtesy and consideration; ensures subordinates do the same.

Always precise in rendering military customs and courtesies. Inspires subordinates to do the same. Exemplifies the finest traditions of military customs, etiquette, and protocol. Goes out of way to ensure polite, considerate, and genuine treatment is extended to everyone. Insists subordinates do likewise.

c. PROFESSIONALISM: How an officer applies knowledge and skills in providing service to the public. The manner in which an officer represents the Coast Guard.

Scale descriptors, lowest to highest:

May be misinformed/unaware of Coast Guard policies and objectives. May bluff rather than admit ignorance. Does little to enhance self-image or image of Coast Guard. May be ineffective when working with others. May lead personal life which infringes on Coast Guard responsibilities or image.

Well versed in how Coast Guard objectives, policies, and procedures serve the public; communicates these effectively. Straightforward, cooperative, and evenhanded in dealing with the public and government. Aware of impact/impression actions may cause on others. Supports CG ideals. Leads personal life which reinforces CG image.

Recognized as an expert in Coast Guard affairs. Works creatively and confidently with representatives of public and government. Inspires confidence and trust, and clearly conveys dedication to Coast Guard ideals in public and private life. Leaves everyone with positive image of self and Coast Guard.

d. DEALING WITH THE PUBLIC: How an individual acts when dealing with other services, agencies, businesses, the media, or the public.

Scale descriptors, lowest to highest:

Appears ill at ease with the public or media. Inconsistent in applying Coast Guard programs to public sector. Falters under pressure. May take antagonistic or condescending approach. Makes inappropriate statements. May embarrass Coast Guard in some social situations.

Deals fairly and honestly with the public, media and others at all levels. Responds promptly. Shows no favoritism. Doesn't falter when faced with difficult situations. Comfortable in social situations. Is sensitive to concerns expressed by public.

Always self-assured and in control when dealing with public, media and others at all levels. Straightforward, impartial, and diplomatic. Applies Coast Guard rules/programs fairly and uniformly. Has unusual social grace. Responds with great poise to provocative actions of others.

e. COMMENTS (Representing the Coast Guard):

11. LEADERSHIP AND POTENTIAL. (Describe this officer's demonstrated leadership ability and overall potential for greater responsibility, promotion, special assignment and command. Comments should be related to those areas for which the Reporting Officer has the appropriate background.)

12. COMPARISON SCALE AND DISTRIBUTION. (Considering your comments above, in line a. compare this lieutenant commander with others of the same grade whom you have known in your career).

UNSATISFACTORY

A QUALIFIED OFFICER

ONE OF THE MANY COMPETENT PROFESSIONALS WHO FORM THE MAJORITY OF THIS GRADE

AN EXCEPTIONAL OFFICER

A DISTINGUISHED OFFICER

FOR HEADQUARTERS USE ONLY

13. REPORTING OFFICER AUTHENTICATION

a. SIGNATURE b. GRADE c. SSN d. TITLE OF POSITION e. DATE

14. REVIEWER AUTHENTICATION [] COMMENTS ATTACHED

a. SIGNATURE b. GRADE c. SSN d. TITLE OF POSITION

F-15](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-263-320.jpg)

![FORM DS-1829 Page 3

III. EVALUATION OF POTENTIAL (Completed by Rater)

A. General Appraisal: (Check block that best describes overall potential)

1. For Career Candidates only: Assessment of career potential as a Foreign Service Officer or Foreign Service Specialist:

[] Unable to assess potential from observations to date

[] Candidate is unlikely to serve effectively even with additional experience

[] Candidate is likely to serve effectively but judgment is contingent on additional evaluated experience

[] Candidate is recommended for tenure and can be expected to serve successfully across a normal career span

2. For other Foreign Service employees:

[] Shows minimal potential to assume greater responsibilities

[] Has performed strongly at current level but is not ready for positions of significantly greater responsibility at this time

[] Has demonstrated the potential to perform effectively at next higher level

[] Has demonstrated potential to perform effectively at higher levels

[] Has demonstrated exceptional potential for much greater responsibilities now

B. Discussion

1. Potential is evaluated in terms of the competency groups listed in Section II. Cite examples illustrating strengths and weaknesses in competencies most important to your judgment.

2. For career candidates, discuss potential for successful service across a normal career span; for Senior Foreign Service, discuss potential for highest and broadest responsibilities; for all others, discuss potential for advancement.

C. Areas for Improvement: The following must be completed for all employees. Employees should be made aware of areas where they should concentrate their efforts to improve. Based on your observation of the employee in his/her present position, specify at least one area in which he/she might best direct such efforts. Justify your choice. (The response is not to be directed to need for formal training.)

F-18](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-266-320.jpg)

![a. DESCRIPTIVE TITLE OF PRIMARY JOB

b. SECONDARY DUTIES (by descriptive title only)

c. RANK FOR POSITION (bubbles; legible ranks: LT, CAPT, MAJ, LCOL, COL)

d. RATED OFFICER'S RANK (bubbles; legible ranks: CAPT, LCOL)

e. TIME IN JOB (months; ten bubbles, legible values run from 4 to 48)

f. PERIOD OBSERVED (months; ten bubbles)

SECTION 7 - COMPARATIVE ASSESSMENT

7-1 Reporting Officer

7-2 Reviewing Officer

PERFORMANCE FACTORS (each rated on a bubble scale marked "Normal")

1. Accepted responsibilities and duties

2. Applied job knowledge and skills

3. Analysed problems or situations

4. Made decisions and took action

5. Made plans and preparations

(Remaining performance factors are illegible in the scan.)

(A second group of rated items follows; its heading is illegible. Legible items: Conduct, Intellect, Integrity, Loyalty, Dedication, Courage.)

SECTION 8 - POTENTIAL

8-1 Reporting Officer

8-2 Reviewing Officer

(Bubble scale marked "Normal" for each.)

SECTION 9 - PROMOTION RECOMMENDATION

9-1 Reporting Officer: NO / NOT YET / YES

9-2 Reviewing Officer: NO / NOT YET / YES

FOR NDHQ USE](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-272-320.jpg)

![CONFIDENTIAL

(when any part completed)

SECTION 12 - RECOMMENDATIONS FOR TRAINING AND EMPLOYMENT

a. Training b. Employment

Rank, name and appointment Date

SECTION 13 - COMMENTS BY REVIEWING OFFICER

I do not know this officer [] I know this officer slightly [] I know this officer well []

Rank, name and appointment Signature Date

SECTION 14 - COMMENTS BY NEXT SENIOR OFFICER

I do not know this officer [] I know this officer slightly [] I know this officer well []

Rank, name, appointment and unit Signature Date

SECTION 15 - ADDITIONAL REVIEW

F-26](https://image.slidesharecdn.com/airforceofficerevaluationsystemproject-230806152107-fc5a01ec/85/Air-Force-Officer-Evaluation-System-Project-274-320.jpg)