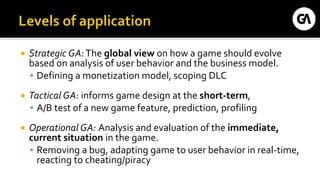

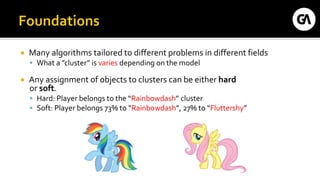

The document examines game analytics: discovering and communicating patterns in data to support business decisions and improve the user experience. It distinguishes strategic, tactical, and operational analytics and stresses the role of user metrics and effective clustering methods in understanding player behavior. It also surveys several clustering techniques and explains how to validate and interpret their results when analyzing game data.