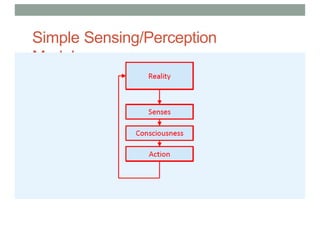

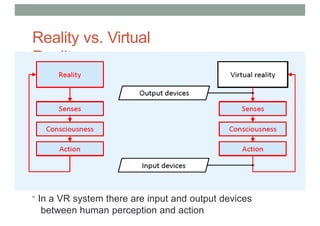

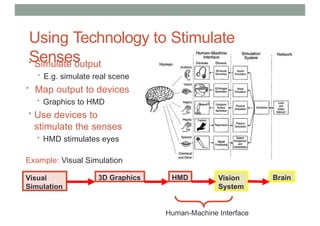

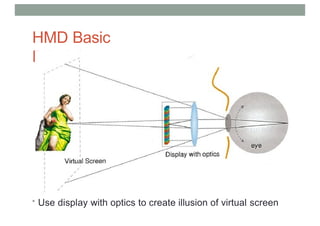

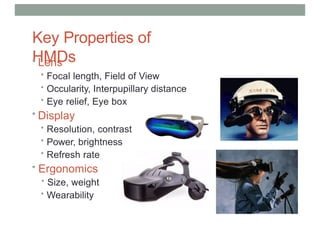

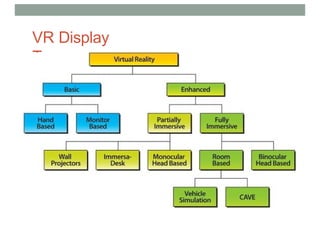

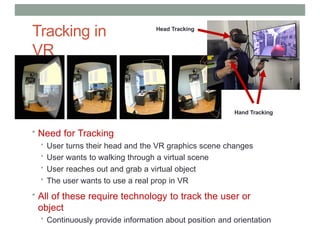

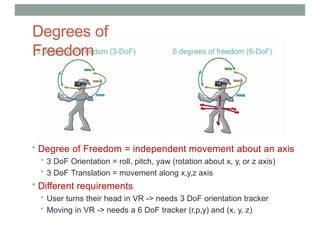

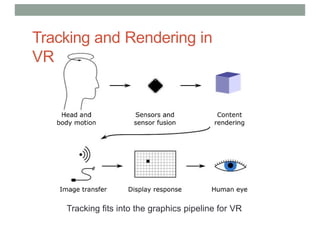

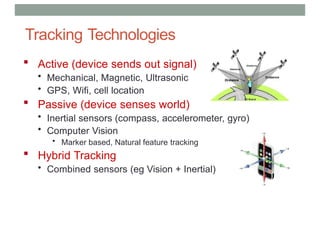

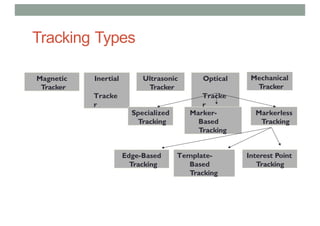

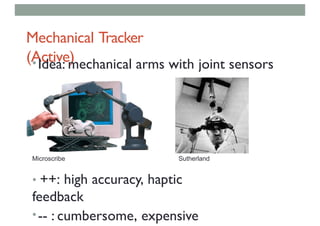

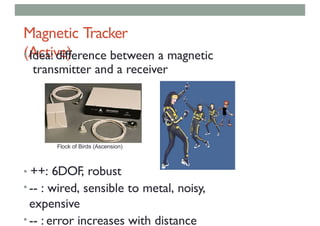

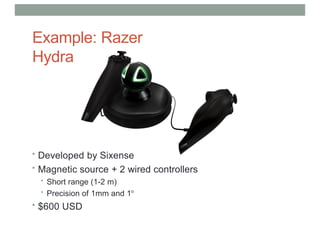

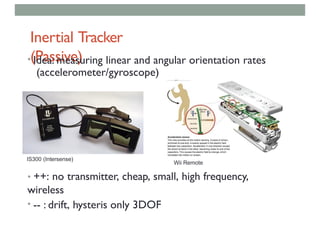

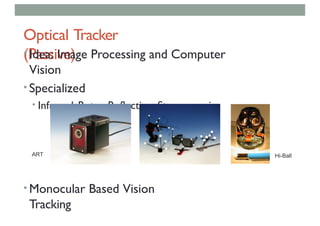

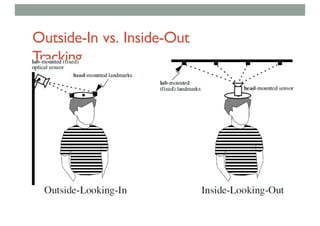

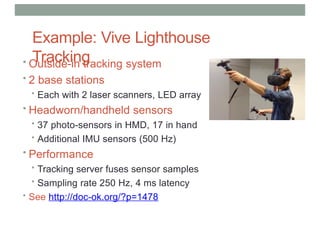

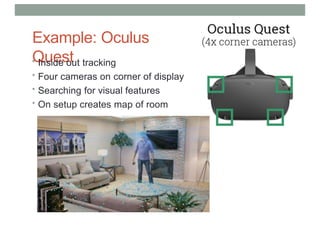

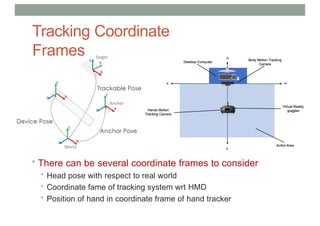

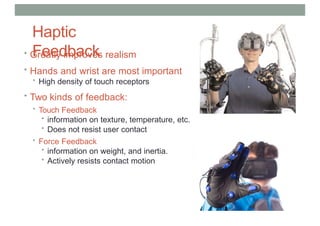

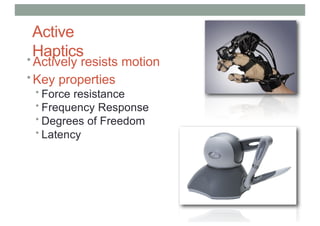

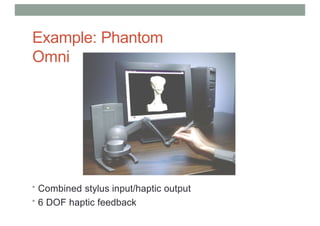

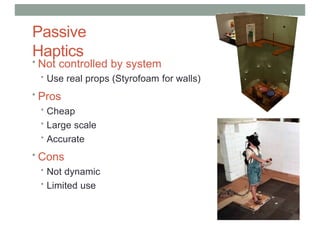

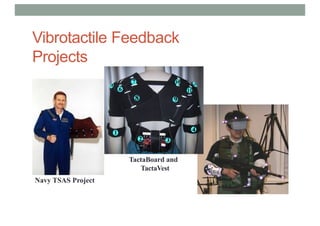

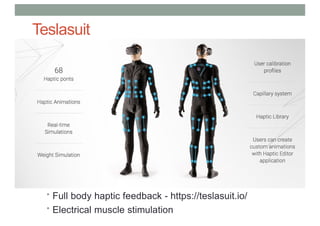

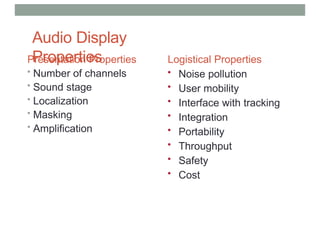

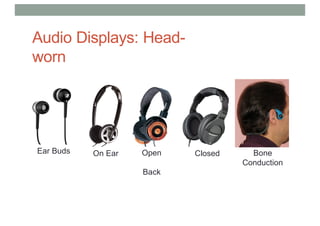

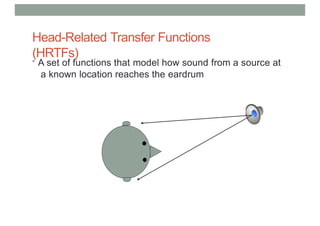

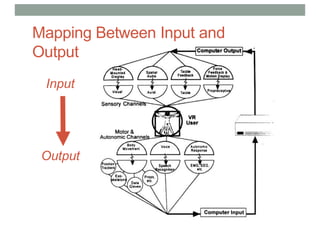

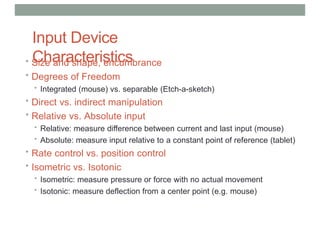

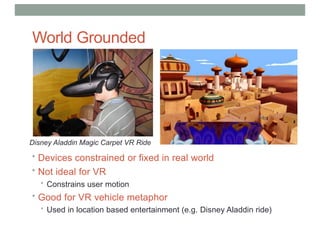

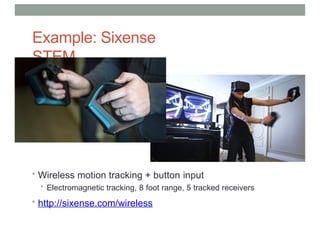

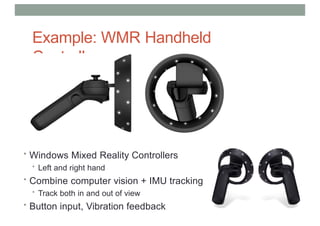

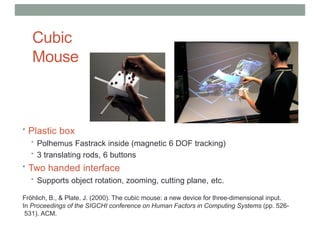

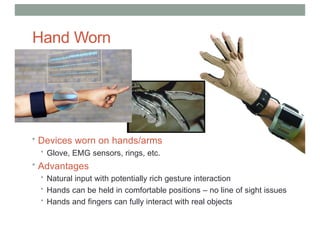

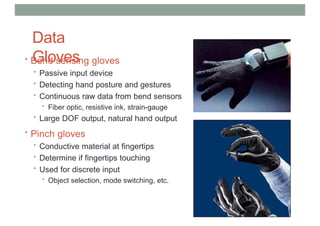

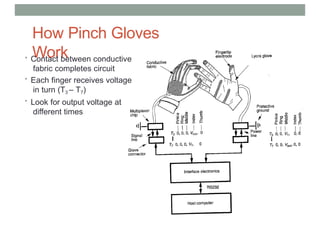

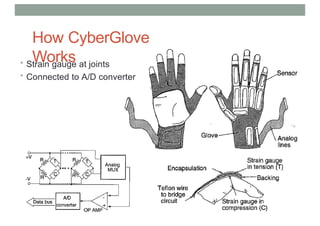

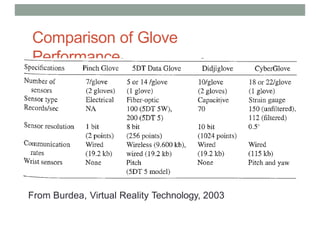

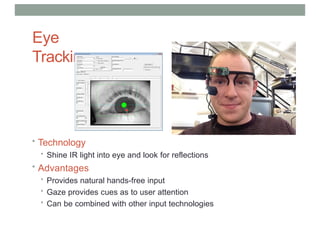

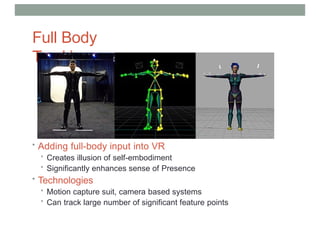

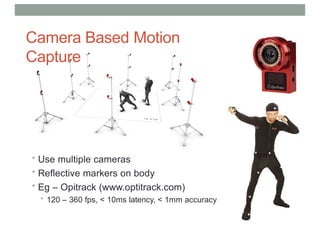

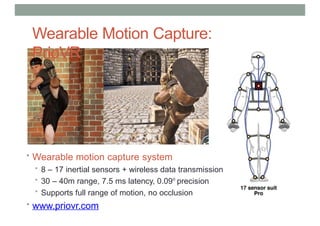

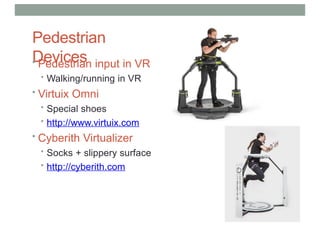

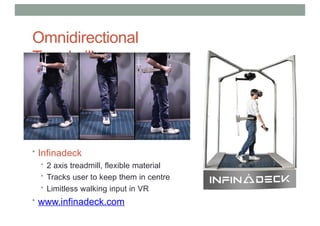

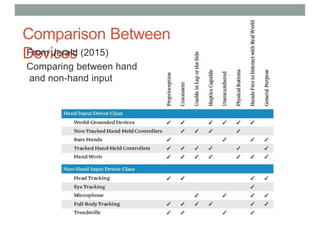

This lecture covers virtual reality (VR) technology, focusing on human perception, input and output devices, and tracking methods. It explains how VR systems simulate reality through sensory stimulation and surveys developments that make the experience immersive, such as head-mounted displays, hand tracking, audio displays, and haptic feedback. It also emphasizes the role of tracking techniques and motion capture in improving user interaction in VR environments.