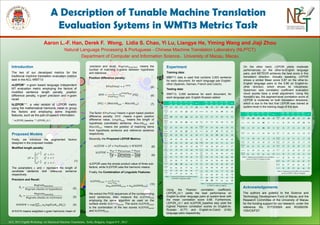

ACL-WMT Poster: A Description of Tunable Machine Translation Evaluation Systems in WMT13 Metrics Task

中文, English, Deutsch, Français, Italiano, 日本語, Русский, Español, Português, Dansk, ελληνικά, 한국어, ..., Magyar nyelv

Aaron L.-F. Han, Derek F. Wong, Lidia S. Chao, Yi Lu, Liangye He, Yiming Wang and Jiaji Zhou

Natural Language Processing & Portuguese-Chinese Machine Translation Laboratory (NLP2CT), Department of Computer and Information Science, University of Macau, Macau

ACL 2013 Eighth Workshop on Statistical Machine Translation, Sofia, Bulgaria, August 8-9, 2013.

Acknowledgements

The authors are grateful to the Science and Technology Development Fund of Macau and the Research Committee of the University of Macau for funding support for our research, under reference Nos. 017/2009/A and RG060/09-10S/CS/FST.

Introduction

We developed two metrics for the traditional machine translation (MT) evaluation metrics task of ACL-WMT13:

- nLEPOR*: an n-gram based, language-independent MT evaluation metric that employs a modified sentence length penalty, a position difference penalty, n-gram precision and n-gram recall.
- hLEPOR**: a new version of the LEPOR metric that groups the factors with a weighted harmonic mean and employs linguistic features such as part-of-speech (POS) information.

Proposed Models

First, we introduce the augmented factors used in the proposed models.

Modified length penalty, Eq. (1): $c$ and $r$ are the lengths of the candidate sentence and the reference sentence, respectively.

Precision and recall, Eqs. (2) to (4): $\#\text{ngram matched}$ is the number of matching n-grams between the hypothesis and the reference, and $\mathrm{WNHPR}$ is the weighted n-gram harmonic mean of precision and recall.

Position difference penalty, Eqs. (5) to (7): $\mathrm{NPosPenal}$ is the n-gram based position difference penalty, $\mathrm{NPD}$ is the n-gram position difference value, $\mathrm{Length}_{hyp}$ is the length of the hypothesis (candidate) sentence, and $\mathrm{MatchN}_{hyp}$ and $\mathrm{MatchN}_{ref}$ are the positions of the matched items in the hypothesis sentence and the reference sentence, respectively.

Second, the proposed LEPOR metrics, Eqs. (8) and (9): nLEPOR takes the simple product of the three sub-factors, while hLEPOR combines them with a weighted harmonic mean.

Finally, the combination of linguistic features, Eq. (10): we extract the POS sequences of the corresponding word sentences, then compute $\mathrm{hLEPOR}_{POS}$ with the same algorithm that is applied to the surface words, $\mathrm{hLEPOR}_{word}$. The final score $\mathrm{hLEPOR}_{final}$ is a weighted combination of $\mathrm{hLEPOR}_{word}$ and $\mathrm{hLEPOR}_{POS}$.

Experiment

- Training data: the WMT11 data, which contains 3,003 sentences per document for each language pair between English and Spanish, German, French or Czech.
- Testing data: the WMT13 data, which contains 3,000 sentences per document for each language pair, with English-Russian added.

Using the Pearson correlation coefficient, LEPOR_v3.1 yields the best system-level performance on the English-to-other language pairs among the metrics evaluated on all five pairs, with a mean correlation of 0.86. LEPOR_v3.1 and nLEPOR_baseline also yield the highest Pearson correlations on English-to-Russian (0.77) and English-to-Czech (0.82, tied with SIMPBLEU_RECALL, SIMPBLEU_PREC and Meteor), respectively. On the other-to-English language pairs, our LEPOR metrics perform moderately, and Meteor achieves the best score in this translation direction. Even so, LEPOR_v3.1 reaches a mean of 0.87 on the other-to-English pairs, close to its 0.86 on the English-to-other direction, which indicates its robustness across translation directions. Under Spearman's rank correlation coefficient, the scores change only slightly. Under Kendall's tau, the segment-level correlation of LEPOR is moderate in both translation directions, because LEPOR was tuned at the system level in the training stage of this task.
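The comparison above relies on three correlation measures between metric scores and human judgements. The following is a minimal pure-Python sketch of them. The function names are hypothetical. Tied values in Spearman's ranks receive their average rank. The Kendall's tau shown is the simple (concordant - discordant) / (concordant + discordant) form over score pairs, with tied pairs skipped. The official WMT13 scripts may compute these measures differently, in particular at the segment level, where the task works from human relative rankings.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values receive the average of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values equal to values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman's rank correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


def kendall_tau(xs, ys):
    """(concordant - discordant) / (concordant + discordant); tied pairs are skipped."""
    conc = disc = 0
    for i in range(len(xs)):
        for j in range(i + 1, len(xs)):
            s = (xs[i] - xs[j]) * (ys[i] - ys[j])
            if s > 0:
                conc += 1
            elif s < 0:
                disc += 1
    return (conc - disc) / (conc + disc)
```

For instance, `pearson(metric_scores, human_scores)` over one system-level score per MT system would give a single cell of a system-level table. The sketch does not guard against constant inputs, which make the Pearson denominator zero, or against inputs in which every pair is tied, which make the Kendall denominator zero.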
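$$
\mathrm{LP} = \begin{cases} e^{1-\frac{r}{c}} & \text{if } c < r \\ 1 & \text{if } c = r \\ e^{1-\frac{c}{r}} & \text{if } c > r \end{cases} \tag{1}
$$

$$
P_n = \frac{\#\text{ngram matched}}{\#\text{ngram chunks in hypothesis}} \tag{2}
$$

$$
R_n = \frac{\#\text{ngram matched}}{\#\text{ngram chunks in reference}} \tag{3}
$$

$$
\mathrm{WNHPR} = \exp\left(\sum_{n=1}^{N} w_n \log H(\alpha R_n, \beta P_n)\right) \tag{4}
$$

$$
\mathrm{NPosPenal} = e^{-\mathrm{NPD}} \tag{5}
$$

$$
\mathrm{NPD} = \frac{1}{\mathrm{Length}_{hyp}} \sum_{i=1}^{\mathrm{Length}_{hyp}} |\mathrm{PD}_i| \tag{6}
$$

$$
\mathrm{PD}_i = |\mathrm{MatchN}_{hyp} - \mathrm{MatchN}_{ref}| \tag{7}
$$

$$
\mathrm{nLEPOR} = \mathrm{LP} \times \mathrm{NPosPenal} \times \mathrm{WNHPR} \tag{8}
$$

$$
\mathrm{hLEPOR} = \frac{w_{LP} + w_{NPosPenal} + w_{HPR}}{\frac{w_{LP}}{\mathrm{LP}} + \frac{w_{NPosPenal}}{\mathrm{NPosPenal}} + \frac{w_{HPR}}{\mathrm{HPR}}} \tag{9}
$$

$$
\mathrm{hLEPOR}_{final} = \frac{1}{w_{hw} + w_{hp}} \left( w_{hw}\, \mathrm{hLEPOR}_{word} + w_{hp}\, \mathrm{hLEPOR}_{POS} \right) \tag{10}
$$

\* nLEPOR_baseline; \*\* LEPOR_v3.1 (the names used in Tables 1 to 4).

| Metric | EN-FR | EN-DE | EN-ES | EN-CS | EN-RU | Avg |
|---|---|---|---|---|---|---|
| LEPOR_v3.1 | .91 | .94 | .91 | .76 | .77 | .86 |
| nLEPOR_baseline | .92 | .92 | .90 | .82 | .68 | .85 |
| SIMPBLEU_RECALL | .95 | .93 | .90 | .82 | .63 | .84 |
| SIMPBLEU_PREC | .94 | .90 | .89 | .82 | .65 | .84 |
| NIST-mteval-inter | .91 | .83 | .84 | .79 | .68 | .81 |
| Meteor | .91 | .88 | .88 | .82 | .55 | .81 |
| BLEU-mteval-inter | .89 | .84 | .88 | .81 | .61 | .80 |
| BLEU-moses | .90 | .82 | .88 | .80 | .62 | .80 |
| BLEU-mteval | .90 | .82 | .87 | .80 | .62 | .80 |
| CDER-moses | .91 | .82 | .88 | .74 | .63 | .80 |
| NIST-mteval | .91 | .79 | .83 | .78 | .68 | .79 |
| PER-moses | .88 | .65 | .88 | .76 | .62 | .76 |
| TER-moses | .91 | .73 | .78 | .70 | .61 | .75 |
| WER-moses | .92 | .69 | .77 | .70 | .61 | .74 |
| TerrorCat | .94 | .96 | .95 | na | na | .95 |
| SEMPOS | na | na | na | .72 | na | .72 |
| ACTa | .81 | -.47 | na | na | na | .17 |
| ACTa5+6 | .81 | -.47 | na | na | na | .17 |

Table 1. System-level Pearson correlation scores on WMT13 English-to-other language pairs.

| Metric | FR-EN | DE-EN | ES-EN | CS-EN | RU-EN | Avg |
|---|---|---|---|---|---|---|
| Meteor | .98 | .96 | .97 | .99 | .84 | .95 |
| SEMPOS | .95 | .95 | .96 | .99 | .82 | .93 |
| Depref-align | .97 | .97 | .97 | .98 | .74 | .93 |
| Depref-exact | .97 | .97 | .96 | .98 | .73 | .92 |
| SIMPBLEU_RECALL | .97 | .97 | .96 | .94 | .78 | .92 |
| UMEANT | .96 | .97 | .99 | .97 | .66 | .91 |
| MEANT | .96 | .96 | .99 | .96 | .63 | .90 |
| CDER-moses | .96 | .91 | .95 | .90 | .66 | .88 |
| SIMPBLEU_PREC | .95 | .92 | .95 | .91 | .61 | .87 |
| LEPOR_v3.1 | .96 | .96 | .90 | .81 | .71 | .87 |
| nLEPOR_baseline | .96 | .94 | .94 | .80 | .69 | .87 |
| BLEU-mteval-inter | .95 | .92 | .94 | .90 | .61 | .86 |
| NIST-mteval-inter | .94 | .91 | .93 | .84 | .66 | .86 |
| BLEU-moses | .94 | .91 | .94 | .89 | .60 | .86 |
| BLEU-mteval | .95 | .90 | .94 | .88 | .60 | .85 |
| NIST-mteval | .94 | .90 | .93 | .84 | .65 | .85 |
| TER-moses | .93 | .87 | .91 | .77 | .52 | .80 |
| WER-moses | .93 | .84 | .89 | .76 | .50 | .78 |
| PER-moses | .84 | .88 | .87 | .74 | .45 | .76 |
| TerrorCat | .98 | .98 | .97 | na | na | .98 |

Table 2. System-level Pearson correlation scores on WMT13 other-to-English language pairs.

| Metric | EN-FR | EN-DE | EN-ES | EN-CS | EN-RU | Avg |
|---|---|---|---|---|---|---|
| SIMPBLEU_RECALL | .16 | .09 | .23 | .06 | .12 | .13 |
| Meteor | .15 | .05 | .18 | .06 | .11 | .11 |
| SIMPBLEU_PREC | .14 | .07 | .19 | .06 | .09 | .11 |
| sentBLEU-moses | .13 | .05 | .17 | .05 | .09 | .10 |
| LEPOR_v3.1 | .13 | .06 | .18 | .02 | .11 | .10 |
| nLEPOR_baseline | .12 | .05 | .16 | .05 | .10 | .10 |
| dfki_logregNorm-411 | na | na | .14 | na | na | .14 |
| TerrorCat | .12 | .07 | .19 | na | na | .13 |
| dfki_logregNormSoft-431 | na | na | .03 | na | na | .03 |

Table 3. Segment-level Kendall's tau correlation scores on WMT13 English-to-other language pairs.

| Metric | FR-EN | DE-EN | ES-EN | CS-EN | RU-EN | Avg |
|---|---|---|---|---|---|---|
| SIMPBLEU_RECALL | .19 | .32 | .28 | .26 | .23 | .26 |
| Meteor | .18 | .29 | .24 | .27 | .24 | .24 |
| Depref-align | .16 | .27 | .23 | .23 | .20 | .22 |
| Depref-exact | .17 | .26 | .23 | .23 | .19 | .22 |
| SIMPBLEU_PREC | .15 | .24 | .21 | .21 | .17 | .20 |
| nLEPOR_baseline | .15 | .24 | .20 | .18 | .17 | .19 |
| sentBLEU-moses | .15 | .22 | .20 | .20 | .17 | .19 |
| LEPOR_v3.1 | .15 | .22 | .16 | .19 | .18 | .18 |
| UMEANT | .10 | .17 | .14 | .16 | .11 | .14 |
| MEANT | .10 | .16 | .14 | .16 | .11 | .14 |
| dfki_logregFSS-33 | na | .27 | na | na | na | .27 |
| dfki_logregFSS-24 | na | .27 | na | na | na | .27 |
| TerrorCat | .16 | .30 | .23 | na | na | .23 |

Table 4. Segment-level Kendall's tau correlation scores on WMT13 other-to-English language pairs.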
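The factors in Eqs. (1) to (10) can be combined in a short pure-Python sketch. It is not the authors' released implementation, and several choices are assumptions made for illustration:

- clipped n-gram match counts;
- reading $H(\alpha R_n, \beta P_n)$ as the weighted harmonic mean $(\alpha+\beta)/(\alpha/R_n+\beta/P_n)$;
- a nearest-position word alignment for $\mathrm{PD}_i$, with positions normalised by sentence length;
- taking $\mathrm{HPR}$ in Eq. (9) to be the unigram harmonic mean;
- all default weights.

All function names are hypothetical.

```python
import math
from collections import Counter


def length_penalty(c, r):
    """Modified length penalty LP, Eq. (1); c = candidate length, r = reference length."""
    if c == 0 or r == 0:
        return 0.0  # guard for empty sentences, not covered by Eq. (1) (assumption)
    if c < r:
        return math.exp(1 - r / c)
    if c > r:
        return math.exp(1 - c / r)
    return 1.0


def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def precision_recall(hyp, ref, n):
    """P_n and R_n, Eqs. (2)-(3); matches are clipped n-gram counts (assumption)."""
    h, r = ngram_counts(hyp, n), ngram_counts(ref, n)
    matched = sum((h & r).values())
    return matched / max(sum(h.values()), 1), matched / max(sum(r.values()), 1)


def harmonic(alpha, recall, beta, precision):
    """H(alpha*R, beta*P), read as (alpha + beta) / (alpha/R + beta/P) (assumption)."""
    if recall == 0 or precision == 0:
        return 0.0
    return (alpha + beta) / (alpha / recall + beta / precision)


def wnhpr(hyp, ref, weights, alpha=1.0, beta=1.0):
    """WNHPR, Eq. (4): exp(sum_n w_n * log H(alpha*R_n, beta*P_n)), n = 1..len(weights)."""
    total = 0.0
    for n, w in enumerate(weights, start=1):
        p, r = precision_recall(hyp, ref, n)
        h = harmonic(alpha, r, beta, p)
        if h == 0.0:
            return 0.0  # log(0) is undefined; score the sentence as 0 (assumption)
        total += w * math.log(h)
    return math.exp(total)


def npos_penal(hyp, ref):
    """NPosPenal = exp(-NPD), Eqs. (5)-(7), with a simplified alignment (assumption):
    each hypothesis word is aligned to the closest unused identical reference word,
    and positions are normalised by sentence length (assumption)."""
    if not hyp or not ref:
        return 0.0  # empty input scored as 0 (assumption)
    used, total = set(), 0.0
    for i, word in enumerate(hyp):
        pos_hyp = (i + 1) / len(hyp)
        cands = [j for j, w in enumerate(ref) if w == word and j not in used]
        if not cands:
            continue  # unmatched words add nothing to NPD (assumption)
        j = min(cands, key=lambda k: abs((k + 1) / len(ref) - pos_hyp))
        used.add(j)
        total += abs(pos_hyp - (j + 1) / len(ref))  # |PD_i|, Eq. (7)
    return math.exp(-total / len(hyp))  # NPD averages |PD_i| over Length_hyp, Eq. (6)


def nlepor(hyp, ref, weights=(0.5, 0.5)):
    """nLEPOR, Eq. (8): LP x NPosPenal x WNHPR (illustrative n-gram weights)."""
    return length_penalty(len(hyp), len(ref)) * npos_penal(hyp, ref) * wnhpr(hyp, ref, weights)


def hlepor(hyp, ref, w_lp=1.0, w_npos=1.0, w_hpr=1.0, alpha=1.0, beta=1.0):
    """hLEPOR, Eq. (9): weighted harmonic mean of LP, NPosPenal and HPR.
    HPR is taken as the unigram H(alpha*R_1, beta*P_1) here (assumption)."""
    p, r = precision_recall(hyp, ref, 1)
    factors = [(w_lp, length_penalty(len(hyp), len(ref))),
               (w_npos, npos_penal(hyp, ref)),
               (w_hpr, harmonic(alpha, r, beta, p))]
    if any(value == 0.0 for _, value in factors):
        return 0.0
    return sum(w for w, _ in factors) / sum(w / value for w, value in factors)


def hlepor_final(word_score, pos_score, w_hw=1.0, w_hp=1.0):
    """hLEPOR_final, Eq. (10): weighted combination of word-level and POS-level scores."""
    return (w_hw * word_score + w_hp * pos_score) / (w_hw + w_hp)
```

A possible usage is `hlepor_final(hlepor(hyp_words, ref_words), hlepor(hyp_tags, ref_tags))`. Here the POS tag sequences are assumed to come from an external tagger, which is not shown. Because each factor lies in [0, 1] and a zero factor zeroes the whole score, the harmonic form of hLEPOR penalises a single weak factor more strongly than an arithmetic average would.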