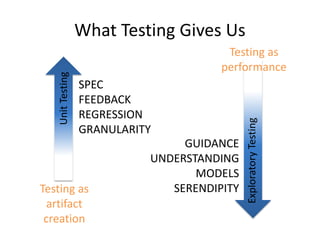

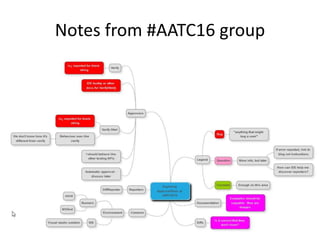

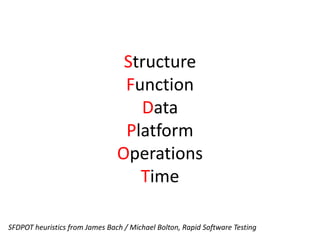

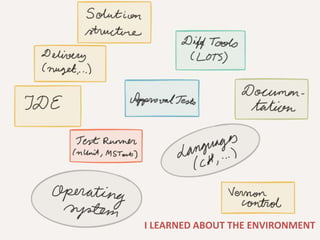

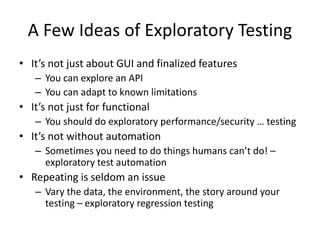

The document discusses exploratory testing of APIs and emphasizes the distinction between testing as a performance and testing as the creation of artifacts. It outlines key concepts, techniques, and insights gained from a conference. These include the importance of adapting testing methods to the situation, incorporating automation, and communicating effectively within testing teams. It also highlights the role of learning and reflection throughout the exploratory testing process.