A credit risk prediction pipeline that uses deep learning models for tabular data can outperform state-of-the-art tree-based models such as XGBoost, Random Forest, and CatBoost. The pipeline includes:

• Tabular data imputation

• Tabular data generation

• Tabular data classification for credit risk prediction

1. Credit Risk Modelling

Credit risk modelling is a statistical process used by financial institutions to assess the likelihood that a borrower will default on a loan. These models analyse historical and real-time data to quantify risk, which informs critical business decisions. Without them, a financial institution would be at a significant disadvantage, leading to poor lending decisions and potential insolvency.

a) It helps lenders estimate the Probability of Default (PD), the Loss Given Default (LGD), and the Exposure at Default (EAD). By quantifying these risks, institutions can set appropriate interest rates and lending terms, or deny a loan application to avoid losses.

b) It enables financial institutions to apply risk-based pricing, where borrowers with lower risk are offered more favourable rates. This not only attracts high-quality customers but also ensures that higher-risk loans are priced to compensate for the greater potential for loss, which protects the lender's profitability.

c) It supports regulatory compliance: regulators require banks to use robust internal models to calculate and hold sufficient capital reserves against potential losses, ensuring the stability of the financial system.
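The three quantities in a) are commonly combined into the expected loss, EL = PD × LGD × EAD, and the expected-loss rate PD × LGD is one input to the risk-based pricing in b). The sketch below shows that arithmetic; the loan figures, the `funding_rate` and `margin` parameters, and the additive pricing rule are hypothetical simplifications, not a production pricing model.

```python
from dataclasses import dataclass


@dataclass
class Loan:
    pd: float   # Probability of Default over the horizon (0..1)
    lgd: float  # Loss Given Default as a fraction of the exposure (0..1)
    ead: float  # Exposure at Default in currency units


def expected_loss(loan: Loan) -> float:
    """Expected loss = PD x LGD x EAD."""
    return loan.pd * loan.lgd * loan.ead


def risk_based_rate(loan: Loan, funding_rate: float = 0.04, margin: float = 0.02) -> float:
    """Hypothetical pricing rule: funding cost + expected-loss rate + profit margin."""
    expected_loss_rate = loan.pd * loan.lgd  # EL / EAD
    return funding_rate + expected_loss_rate + margin


low_risk = Loan(pd=0.01, lgd=0.45, ead=10_000)
high_risk = Loan(pd=0.08, lgd=0.45, ead=10_000)

for name, loan in [("low risk", low_risk), ("high risk", high_risk)]:
    print(f"{name}: EL = {expected_loss(loan):.2f}, rate = {risk_based_rate(loan):.2%}")
```

With these illustrative inputs, the higher-PD borrower carries eight times the expected loss of the lower-PD borrower and is quoted a correspondingly higher rate.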

2. Challenges in Credit Risk Modelling

The following are some challenges in using machine learning (ML) for credit risk prediction, as highlighted in Ref [1]:

a) Low Explainability: Many ML models, such as neural networks and boosted algorithms, operate as black boxes, which makes it difficult to interpret their predictions. This lack of interpretability hinders their adoption in financial institutions, where transparency is crucial for regulatory compliance and decision-making.

b) Data Imbalance and Overfitting: Credit datasets are often imbalanced (e.g., far more good payers than defaulters), which leads to models that are biased towards the majority class and that overfit the few minority examples. This reduces performance, especially on real-world data.

c) Data Inconsistency: Datasets may not accurately reflect real-world conditions due to biases, errors in recording, or missing values.

d) Uncertainty in Dynamic Environments: External factors (e.g., economic shocks such as the 2008 financial crisis or COVID-19) increase uncertainty and make models less robust. This calls for adaptable models that incorporate macroeconomic variables and can handle non-linear relationships.

e) Unstructured Data: While unstructured data (e.g., social media posts, news articles) can provide valuable insights, it is challenging to process and integrate into traditional models. Ref [2] addresses this by using users' online behavioural data (e.g., registration, login, click, and authentication behaviours).

f) Non-Stationarity: Economic conditions and borrower behaviour change over time. Models must be continuously monitored and re-calibrated to remain relevant and accurate, which is a major ongoing challenge.
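One common way to monitor the drift described in f) is the Population Stability Index (PSI), which compares the binned distribution of a score or feature at development time with its distribution on recent data: PSI = Σ (actual% − expected%) × ln(actual% / expected%). The source does not prescribe a monitoring metric; PSI, the decile binning, the function name, and the synthetic scores below are assumptions drawn from common industry practice.

```python
import numpy as np


def population_stability_index(expected: np.ndarray, actual: np.ndarray, n_bins: int = 10) -> float:
    """PSI between a reference sample (expected) and a recent sample (actual)."""
    # Bin edges from quantiles of the reference sample (deciles by default).
    edges = np.quantile(expected, np.linspace(0.0, 1.0, n_bins + 1))
    # Clip recent values into the reference range so out-of-range values land in the end bins.
    actual = np.clip(actual, edges[0], edges[-1])
    e_counts, _ = np.histogram(expected, bins=edges)
    a_counts, _ = np.histogram(actual, bins=edges)
    # Convert counts to proportions; a small floor avoids log(0) for empty bins.
    eps = 1e-6
    e_pct = np.clip(e_counts / e_counts.sum(), eps, None)
    a_pct = np.clip(a_counts / a_counts.sum(), eps, None)
    return float(np.sum((a_pct - e_pct) * np.log(a_pct / e_pct)))


rng = np.random.default_rng(0)
reference_scores = rng.normal(0.0, 1.0, 10_000)  # scores at model development
stable_scores = rng.normal(0.0, 1.0, 10_000)     # same population later on
shifted_scores = rng.normal(0.5, 1.2, 10_000)    # population after an economic shock

print(f"PSI (stable):  {population_stability_index(reference_scores, stable_scores):.3f}")
print(f"PSI (shifted): {population_stability_index(reference_scores, shifted_scores):.3f}")
```

A frequently cited rule of thumb (an industry convention, not a regulatory threshold) reads a PSI below 0.1 as stable and above 0.25 as a significant shift that warrants investigation or re-calibration.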

3. Widely Adopted Credit Risk Models in Current Practice

While traditional statistical methods remain a cornerstone, the industry is increasingly adopting more sophisticated machine learning techniques. Some widely used models are listed below; the list is not exhaustive:

a) Logistic Regression (LR):

• LR Pros:

➢ This is a classic, highly interpretable model that remains the most widely adopted approach for retail credit scoring. Its simplicity, and its ability to provide a clear explanation of each variable's impact, make it a favourite of most analysts.

• LR Cons:

➢ Despite these strengths, an LR model often struggles to capture non-linear relationships and complex interactions between features. This limitation can negatively impact its predictive power, especially when the underlying data is not linearly separable. It can also perform poorly with high-dimensional data, requiring significant manual feature engineering to be effective.

b) Ensemble (Ens) Methods - Random Forest & XGBoost:

• Ens Pros:

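I. Random Forest (RF) and XGBoost models are generally more accurate than single models such as Logistic Regression (LR) and Decision Trees (DT) because they combine many decision trees to boost performance: RF averages independently grown trees (bagging), while XGBoost adds relatively shallow "weak learner" trees sequentially (boosting). This ensemble approach makes them more robust and, with appropriate tuning, less prone to overfitting than a single tree.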

II. These models excel at capturing non-linear relationships and complex feature interactions, making them well-suited for high-dimensional and complex datasets, such as user behavioural data. Additionally, they can handle missing values and outliers to a certain extent. Both models provide feature importance rankings, which help identify the most influential variables. XGBoost also has built-in mechanisms for handling imbalanced datasets (e.g., its `scale_pos_weight` parameter).

• Ens Cons:

I. Reduced interpretability: While ensemble methods are powerful, they are "black boxes", unlike simpler models such as LR. Their complex nature, which involves combining numerous trees, makes it difficult to understand how they arrive at a prediction. This lack of transparency can be a major challenge in regulated industries.

II. They are computationally intensive. They require more time and resources to train and tune, especially with large datasets. Achieving optimal performance with these models requires careful hyperparameter tuning, a process that can be both time-consuming and complex.

c) Deep Learning (DL) Methods - RNN, LSTM, CNN, Gen-AI:

• DL Pros:

I. Handling unstructured and sequential data is a major area where deep learning shines. Traditional models such as LR and ensemble methods (Random Forest, XGBoost) are built for structured, tabular data. However, modern credit risk analysis often involves unstructured data, such as text from loan application notes, news articles, or customer communication, as highlighted in Ref [4]. Additionally, sequential data, such as a borrower's transaction history or payment patterns over time, is well captured by RNNs and LSTMs, which can learn temporal dependencies.

II. Deep learning models, particularly CNNs, can automatically extract and learn meaningful features from raw data, reducing the need for extensive manual feature engineering. This capability is crucial when dealing with high-dimensional or alternative data sources that might not have a clear, pre-defined structure.

III. By modelling highly non-linear relationships and complex interactions that are often too intricate for other models, deep learning can achieve higher predictive accuracy, especially on large and complex datasets. This can lead to more precise risk assessments.

• DL Cons:

I. Deep learning models are often considered "black boxes". Their complex, multi-layered structure makes it difficult to understand exactly how they arrive at a decision. This lack of transparency is a significant drawback in the highly regulated financial industry, where lenders must be able to explain the reasons for a loan denial to meet regulatory requirements (e.g., the Fair Credit Reporting Act in the US).

II. Training deep learning models requires a large amount of data to perform well. They are also computationally intensive, demanding powerful hardware (like GPUs) and more time for training and tuning compared to simpler models.

III. While robust, deep learning models with a high number of parameters are susceptible to overfitting, where they learn the noise in the training data rather than the underlying pattern. This can lead to poor performance on new, unseen data if not managed with proper regularization techniques.
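The contrast drawn above between LR (a) and the ensemble methods (b) on non-linear interactions can be seen on a toy problem in which the default label depends only on the interaction of two features (an XOR-like pattern). The sketch uses scikit-learn's `LogisticRegression` and `RandomForestClassifier` on synthetic data; the data-generating rule is hypothetical and chosen only to make the interaction obvious.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
n = 4_000
X = rng.uniform(-1.0, 1.0, size=(n, 2))
# Hypothetical rule: "default" when both features share the same sign, so the
# label depends only on their interaction, not on either feature alone.
y = (X[:, 0] * X[:, 1] > 0).astype(int)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

lr = LogisticRegression().fit(X_train, y_train)
rf = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

lr_auc = roc_auc_score(y_test, lr.predict_proba(X_test)[:, 1])
rf_auc = roc_auc_score(y_test, rf.predict_proba(X_test)[:, 1])
print(f"Logistic regression AUC: {lr_auc:.3f}")  # expected to be near 0.5 (chance level)
print(f"Random forest AUC:       {rf_auc:.3f}")  # expected to be near 1.0
```

Adding the product of the two features as an explicit input would let LR solve this problem too, which is the kind of manual feature engineering mentioned under the LR cons; the tree ensemble learns the equivalent splits on its own.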

4. Missing Data

Missing data is a common issue that can cause problems for banks, especially in credit risk portfolios with a low number of defaults. The problem is made worse when the missing data belongs to non-creditworthy applicants who have defaulted. Research, such as that in Ref [6], has shown that the elimination strategy (i.e., removing records with missing data) consistently performed the worst across different measures of credit risk prediction accuracy.

A better way to address the missing data problem is to impute the missing values. The authors of Ref [6] found that simple mean (for numerical features) and mode (for categorical features) imputation can work well.
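The mean/mode strategy takes only a few lines with pandas. The sketch below compares it with the elimination strategy on a small, hypothetical applicant table (column names and values are illustrative). In practice, the means and modes should be computed on the training split only and reused for new applicants.

```python
import numpy as np
import pandas as pd

# Hypothetical applicant data with missing entries flagged as NaN.
df = pd.DataFrame({
    "income": [52_000, np.nan, 38_000, 61_000, np.nan, 45_000],
    "loan_amount": [10_000, 15_000, np.nan, 20_000, 8_000, 12_000],
    "home_ownership": ["RENT", "OWN", np.nan, "RENT", "MORTGAGE", "RENT"],
    "defaulted": [0, 1, 0, 0, 1, 0],
})
numeric_cols = ["income", "loan_amount"]
categorical_cols = ["home_ownership"]

# Elimination strategy: drop every record that has a missing value.
dropped = df.dropna()

# Imputation strategy: column mean for numerical features, column mode for categorical ones.
imputed = df.copy()
imputed[numeric_cols] = imputed[numeric_cols].fillna(imputed[numeric_cols].mean())
for col in categorical_cols:
    imputed[col] = imputed[col].fillna(imputed[col].mode().iloc[0])

print(f"Rows kept after deletion:     {len(dropped)} of {len(df)}")
print(f"Rows kept after imputation:   {len(imputed)} of {len(df)}")
print(f"Defaults kept after deletion: {dropped['defaulted'].sum()} of {df['defaulted'].sum()}")
```

On this toy table, deletion discards both defaulters, which illustrates why elimination hurts most in low-default portfolios where the missing records belong to defaulted applicants.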

The popular MICE (Multivariate Imputation by Chained Equations) method, which uses a chain of linear regression models to estimate missing values, has two major limitations, as highlighted in Ref [7]. First, its regression models can be ineffective if a feature with missing values is largely independent of the other features, since there is then little signal to regress on. Second, it struggles to capture the non-linear relationships that are common in real-world data.
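The second limitation is easy to reproduce with scikit-learn's `IterativeImputer`, which its documentation describes as inspired by MICE (it returns a single imputation rather than multiple ones). In the hypothetical example below, one feature depends quadratically on another; the default linear estimator (`BayesianRidge`) cannot recover that relationship, whereas swapping in a tree-based regressor can. The tree-based variant is only an illustration of a non-linear alternative, not a method from Ref [7].

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (enables IterativeImputer)
from sklearn.ensemble import ExtraTreesRegressor
from sklearn.impute import IterativeImputer

rng = np.random.default_rng(0)
n = 1_000
x = rng.uniform(-3.0, 3.0, n)
y = x**2 + rng.normal(0.0, 0.1, n)  # hypothetical non-linear relationship
X_full = np.column_stack([x, y])

# Hide 30% of the second feature at random.
mask = rng.random(n) < 0.3
X_missing = X_full.copy()
X_missing[mask, 1] = np.nan


def rmse_on_masked(imputer) -> float:
    """RMSE of the imputed values against the hidden true values."""
    X_imputed = imputer.fit_transform(X_missing)
    return float(np.sqrt(np.mean((X_imputed[mask, 1] - X_full[mask, 1]) ** 2)))


linear_rmse = rmse_on_masked(IterativeImputer(random_state=0))  # default estimator: BayesianRidge
tree_rmse = rmse_on_masked(
    IterativeImputer(estimator=ExtraTreesRegressor(n_estimators=50, random_state=0), random_state=0)
)
print(f"Linear chained-equation RMSE:     {linear_rmse:.3f}")
print(f"Tree-based chained-equation RMSE: {tree_rmse:.3f}")
```

Because x is symmetric around zero, the best linear fit of y on x is nearly flat, so the linear imputer falls back to roughly the mean of y; the tree-based regressor follows the curve. The tree variant may emit a convergence warning, which does not affect the comparison.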

Unfortunately, there is no single best imputation method for all datasets or missing-value types; the right choice depends heavily on the situation. For high-dimensional, large-scale datasets, deep learning imputation models are often more appropriate, as they can address the non-linearity and scalability challenges of feature interactions.

Some deep learning methods can learn from data with missing values by jointly imputing and classifying, but this process adds computational cost and can bias the results. An alternative is a deep learning framework that learns directly from the observed values, avoiding the need for synthetic (imputed) data. Similarly, decision tree-based models such as Random Forest and XGBoost can handle missing values directly, although they may overfit in high-dimensional feature spaces without incremental learning.

5. Imputation with Diffusion Models

The most significant recent development in deep learning-based tabular data imputation is the use of Diffusion Models. These models, which have been highly successful in image and audio generation, have been adapted to handle the unique challenges of tabular data, including mixed data types and out-of-sample imputation.

Some recently published diffusion models are listed below; the list is not exhaustive. They use embeddings or a unified encoding scheme to represent mixed-type data (i.e., both numerical and categorical features) and can handle out-of-sample imputation (i.e., samples not seen in the training set):

• DiffPuter (2024) – Ref [8]: This model is specifically designed for missing data imputation in tabular data. It combines a tailored diffusion model with the Expectation-Maximization (EM) algorithm. It handles both continuous and categorical variables and has been shown to outperform many existing imputation methods. DiffPuter is a strong candidate for out-of-sample imputation, as it iteratively fills in missing values by learning the joint distribution of observed and unobserved data.

• MTabGen / TabGenDDPM (2024) – Ref [9]: This paper proposes a diffusion model that introduces an encoder-decoder transformer and a dynamic masking mechanism to handle both imputation and synthetic data generation within a single framework. This unified approach makes it highly effective for out-of-sample imputation because the model is trained to condition on existing data while generating new values, including those for new, completely unobserved samples. It can handle various feature types and has demonstrated superior performance on datasets with a large number of features.

• MissDDIM (2025) – Ref [10]: The MissDDIM model addresses the limitations of stochastic diffusion models, which can suffer from high inference latency and variable outputs. It offers faster and more stable imputation results, making it more practical for real-world applications where consistent outputs for new data are crucial.
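The models above differ in architecture and training, but they build on the standard denoising-diffusion machinery. As a generic sketch (not the exact formulation of any one paper), the forward process adds Gaussian noise to an encoded data row x_0 with a cumulative schedule ᾱ_t; imputation runs the learned reverse process while re-injecting the observed entries (mask m, with m = 1 where a feature is observed). The last two lines show the deterministic DDIM update with η = 0; MissDDIM's name suggests DDIM-style sampling, and this update illustrates why such samplers return the same output for the same starting noise and can skip timesteps to reduce latency.

```latex
% Forward (noising) process, with \bar{\alpha}_t = \prod_{s=1}^{t} (1 - \beta_s)
q(x_t \mid x_0) = \mathcal{N}\!\left(x_t;\ \sqrt{\bar{\alpha}_t}\, x_0,\ (1 - \bar{\alpha}_t) I\right)

% Conditioning on observed entries: observed features are re-noised from the data,
% missing features come from the learned reverse step
x_{t-1} = m \odot \left(\sqrt{\bar{\alpha}_{t-1}}\, x_0^{\mathrm{obs}} + \sqrt{1 - \bar{\alpha}_{t-1}}\, \epsilon\right)
        + (1 - m) \odot x_{t-1}^{\mathrm{gen}}, \qquad \epsilon \sim \mathcal{N}(0, I)

% Deterministic DDIM reverse step (\eta = 0) with noise predictor \epsilon_\theta
\hat{x}_0 = \frac{x_t - \sqrt{1 - \bar{\alpha}_t}\, \epsilon_\theta(x_t, t)}{\sqrt{\bar{\alpha}_t}}, \qquad
x_{t-1}^{\mathrm{gen}} = \sqrt{\bar{\alpha}_{t-1}}\, \hat{x}_0 + \sqrt{1 - \bar{\alpha}_{t-1}}\, \epsilon_\theta(x_t, t)
```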

6. Limitations of Current Methods to Address Imbalanced Data

a) Data Class Imbalance

While deep learning-based models often require more computational resources and can be more complex to train, many models in published studies have demonstrated superior performance in generating high-quality synthetic data for imbalanced tabular classification tasks.

More information on tabular data generation to address class and group imbalances can be found in Ref [11] and Ref [12].
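For context, a classic non-deep baseline for oversampling an imbalanced tabular dataset is SMOTE, which creates synthetic minority-class rows by interpolating between a minority sample and one of its nearest minority-class neighbours. The numpy sketch below is a simplified, numerical-features-only illustration; the function name, parameters, and data are hypothetical, and the `imbalanced-learn` package provides a maintained implementation. Deep generative models aim to improve on this by learning the joint data distribution, including categorical features, rather than interpolating linearly.

```python
import numpy as np


def smote_like_oversample(X_minority: np.ndarray, n_synthetic: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Generate synthetic minority rows by SMOTE-style linear interpolation."""
    rng = np.random.default_rng(seed)
    n = len(X_minority)
    # Pairwise Euclidean distances between minority samples.
    dists = np.linalg.norm(X_minority[:, None, :] - X_minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]  # k nearest minority neighbours per row

    base_idx = rng.integers(0, n, size=n_synthetic)  # random minority "anchor" rows
    nn_idx = neighbours[base_idx, rng.integers(0, k, size=n_synthetic)]  # one neighbour each
    gap = rng.random((n_synthetic, 1))  # interpolation factor in [0, 1)
    return X_minority[base_idx] + gap * (X_minority[nn_idx] - X_minority[base_idx])


rng = np.random.default_rng(1)
X_major = rng.normal(0.0, 1.0, size=(950, 2))  # e.g. good payers
X_minor = rng.normal(2.0, 0.5, size=(50, 2))   # e.g. defaulters (5% of rows)

X_synth = smote_like_oversample(X_minor, n_synthetic=len(X_major) - len(X_minor))
X_minor_balanced = np.vstack([X_minor, X_synth])
print(f"Majority rows: {len(X_major)}, minority rows before: {len(X_minor)}, after: {len(X_minor_balanced)}")
```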

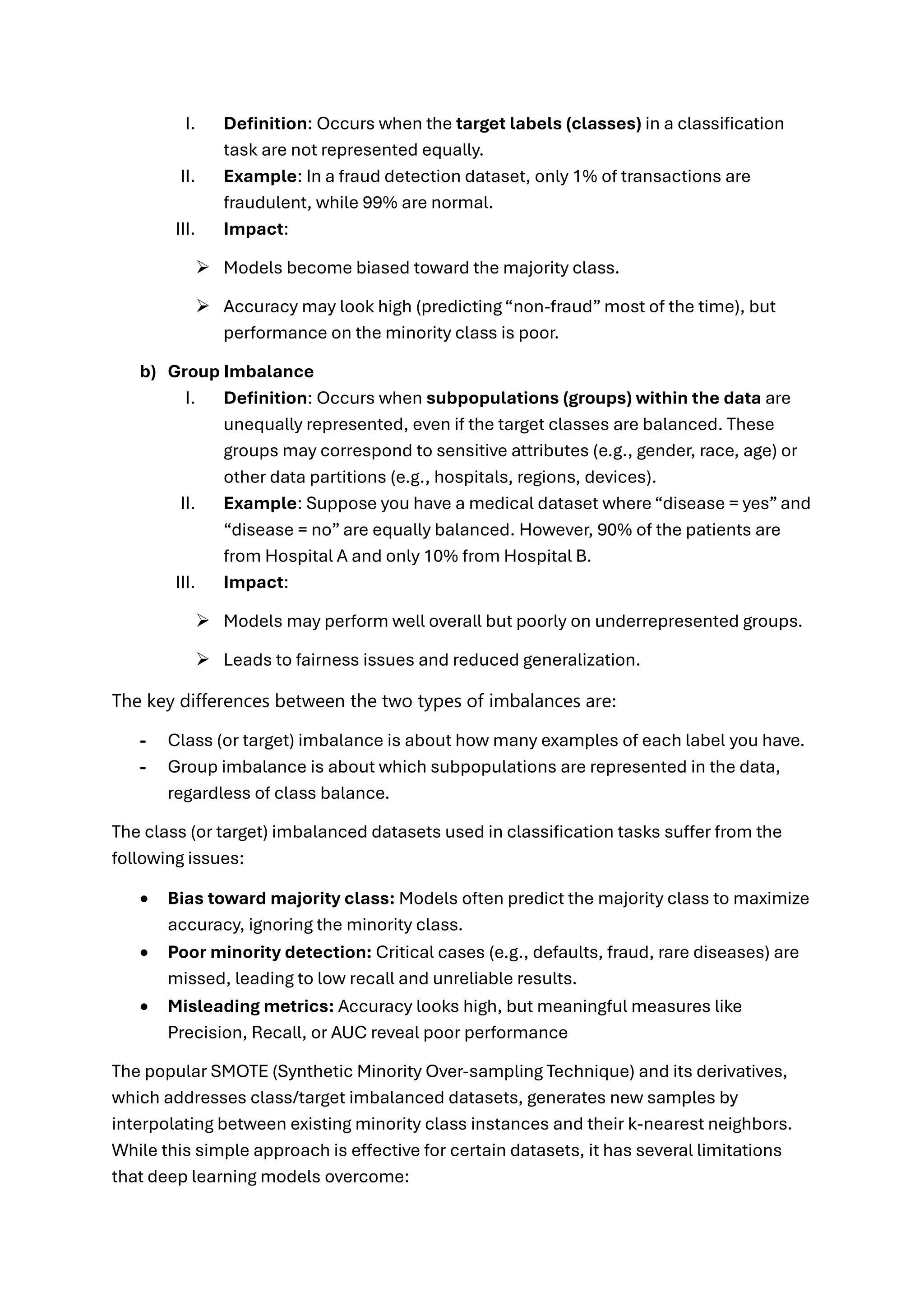

8. A New State-of-the-Art Credit Risk Modelling Pipeline

The workflow shown below depicts a new state-of-the-art credit risk modelling approach using deep learning-based tabular models. An overview of the workflow follows:

➢ The data is first pre-processed to correct malformed entries (e.g., string entries in numerical fields or values that appear wrongly scaled) and to flag missing entries with NaN, preparing them for encoding in the imputation step below.
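The pre-processing step can be sketched with pandas under illustrative assumptions: the column names, the placeholder strings, and the rule that an `interest_rate` above 1 was entered as a percentage rather than a fraction are all hypothetical and would need to come from the actual data dictionary.

```python
import numpy as np
import pandas as pd

raw = pd.DataFrame({
    "annual_income": ["52000", "n/a", "38000", "61,000", ""],
    "interest_rate": [0.12, 15.0, 0.09, np.nan, 0.11],  # 15.0 looks like a percentage
    "employment_length": ["5", "10", "unknown", "2", "7"],
})


def preprocess(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    for col in ["annual_income", "employment_length"]:
        # Remove thousands separators, then coerce anything non-numeric to NaN.
        cleaned = out[col].astype(str).str.replace(",", "", regex=False)
        out[col] = pd.to_numeric(cleaned, errors="coerce")
    # Hypothetical rescaling rule: rates above 1 were entered in percent.
    rate = out["interest_rate"]
    out["interest_rate"] = rate.where(rate <= 1, rate / 100)
    return out


clean = preprocess(raw)
print(clean)
print(clean.isna().sum())  # NaN flags per column, ready for the imputation step
```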

The deep learning-based tabular data classification models listed below have been shown to be more accurate in most tests (or on par in some cases) than popular tree-based models such as XGBoost, Random Forest, and CatBoost.

▪ As shown in the study in [13], TabICL surpasses tree-based models like XGBoost and CatBoost on classification tasks in terms of accuracy and AUC.

▪ TabR outperformed XGBoost on the benchmark test described in [14].

▪ AMFormer demonstrated superior overall performance compared to XGBoost, scoring the highest in key metrics such as AUC, MCC, and F1 in the study described in [15].

▪ The research in [16] indicates that while XGBoost is a strong contender in the benchmark classification test, the TabM model achieved better or competitive performance, particularly when properly tuned.

▪ The Mambular model, described in [17], was benchmarked on classification tasks against state-of-the-art models, including other neural networks and tree-based methods such as XGBoost and CatBoost, and its performance was found to be on par with or, on some datasets, better than theirs.

▪ The Beta model described in [18], which is an enhancement of the TabPFN model, either outperforms or matches state-of-the-art methods like CatBoost and XGBoost on over 200 benchmark classification datasets.

▪ The TabNet model, described in the study in [19], significantly outperformed XGBoost in both binary and multi-class classification tasks, but only after extensive hyperparameter tuning.

10. Recap and Final Thoughts

While tree-based models such as XGBoost and Random Forest have long been the industry standard, deep learning models are emerging as a more favourable solution for modern credit risk prediction. They offer a powerful and flexible approach that can overcome the limitations of traditional methods, particularly when dealing with today's complex, high-dimensional, and often messy data. Their main advantages are summarized below:

• Holistic Data Handling: Deep learning pipelines are uniquely equipped to handle the entire credit risk data ecosystem, from dealing with missing values using advanced diffusion models to generating high-quality synthetic data to overcome class and group imbalances. This integrated approach allows for more robust and reliable modelling than traditional methods.

• Superior Performance on Complex Data: Deep learning models can capture intricate, non-linear relationships and interactions within the data that tree-based models often miss. As the preceding sections have shown, models like TabICL, AMFormer, and TabR have demonstrated superior or competitive