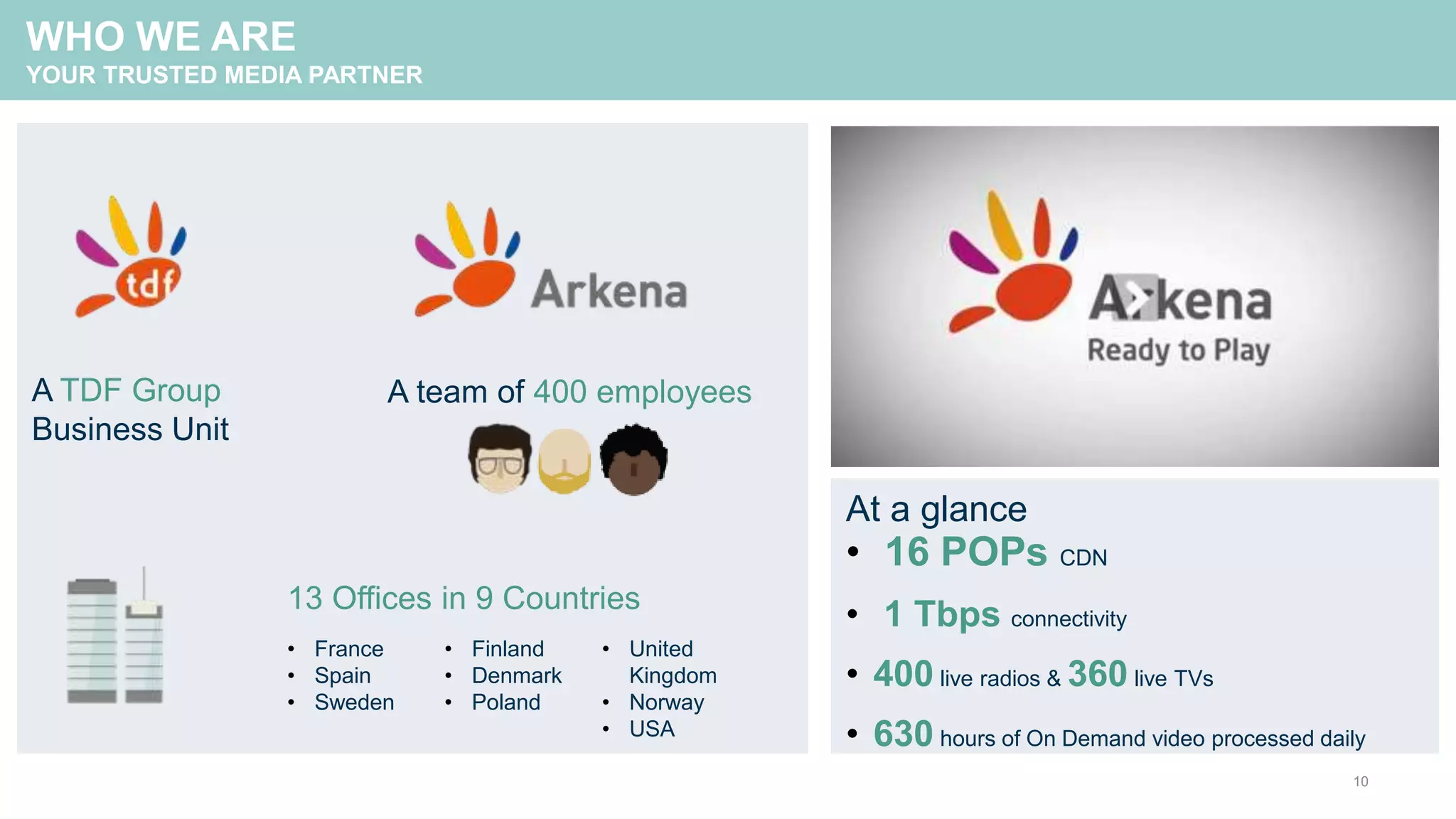

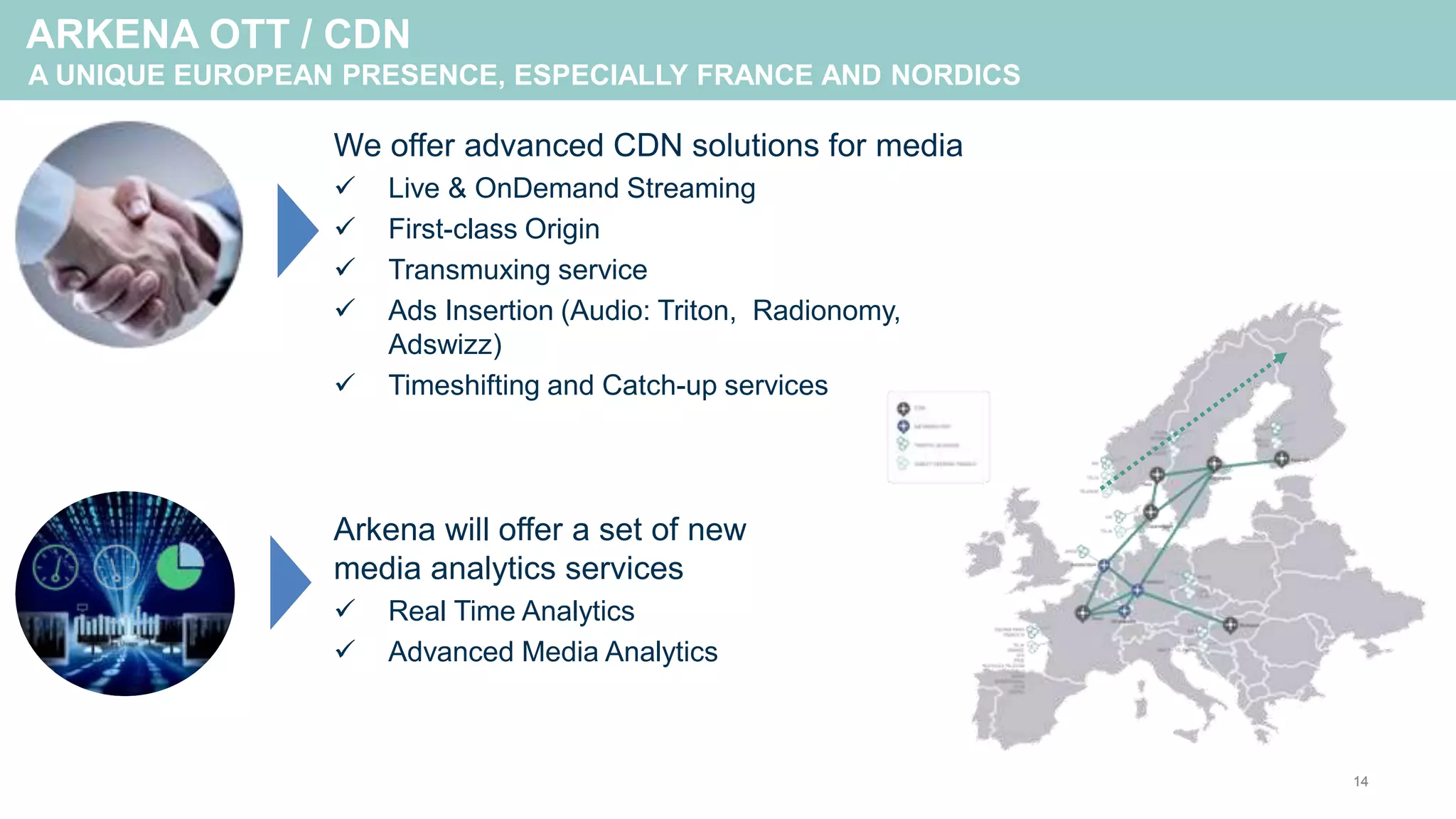

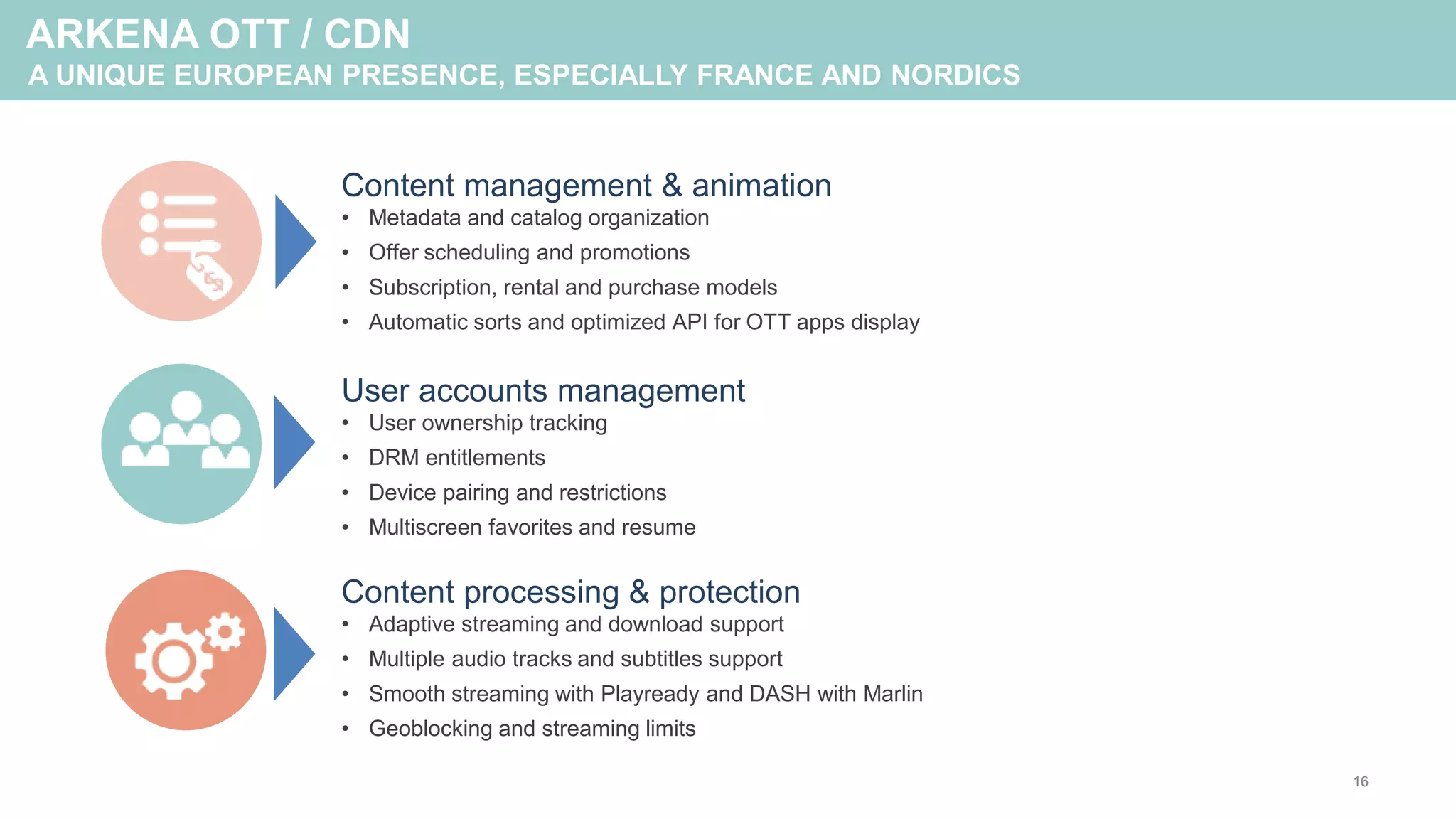

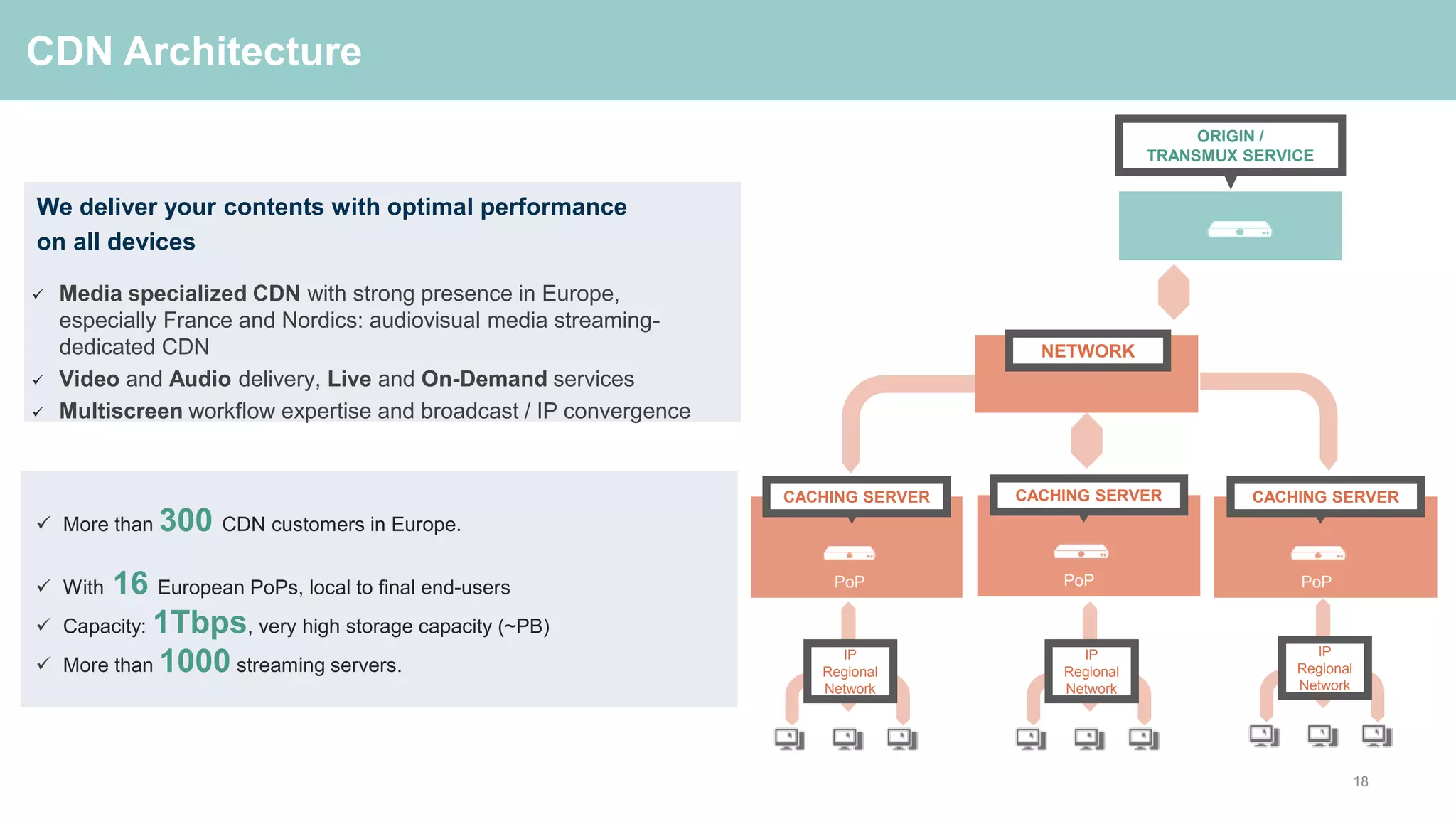

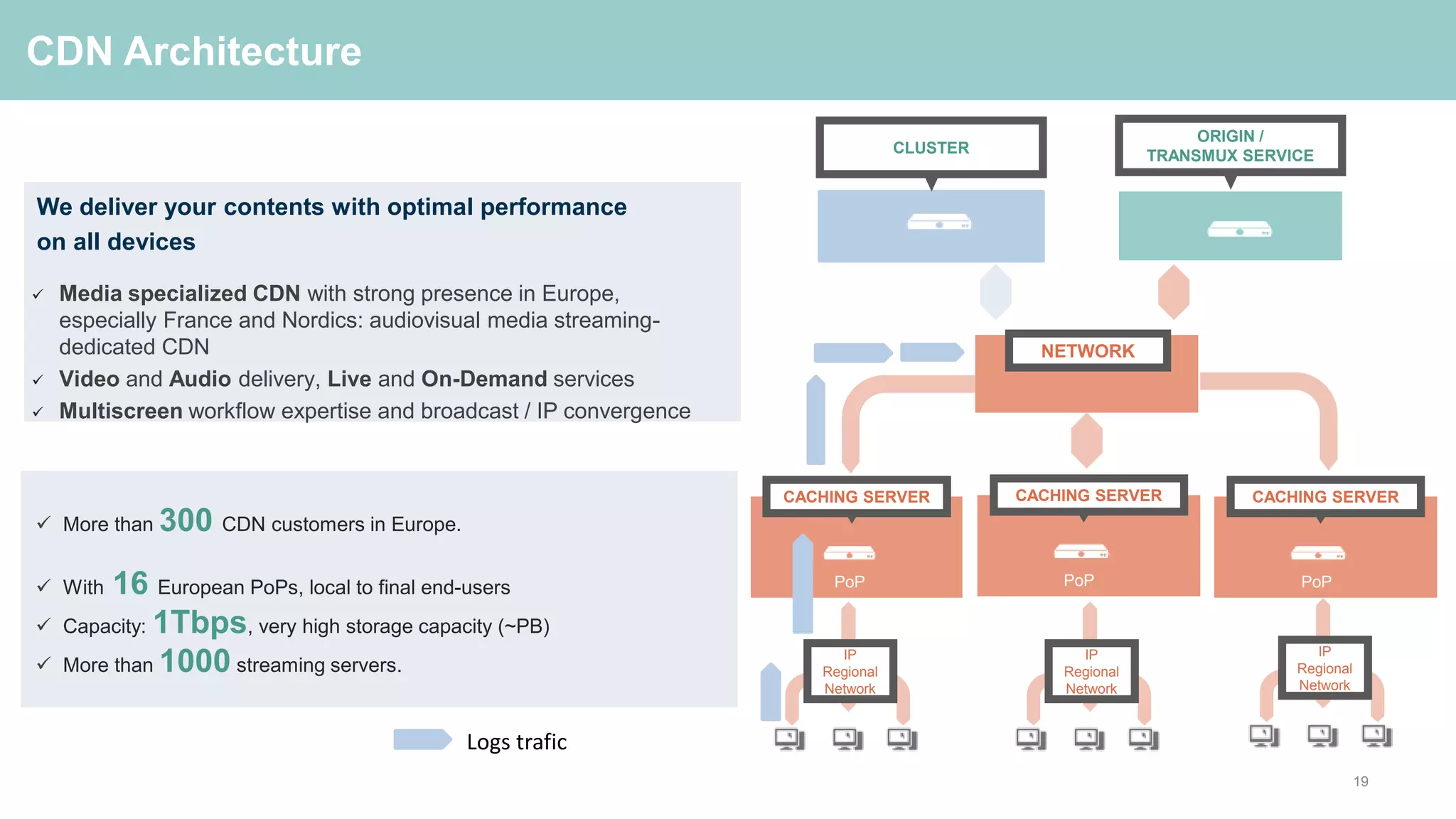

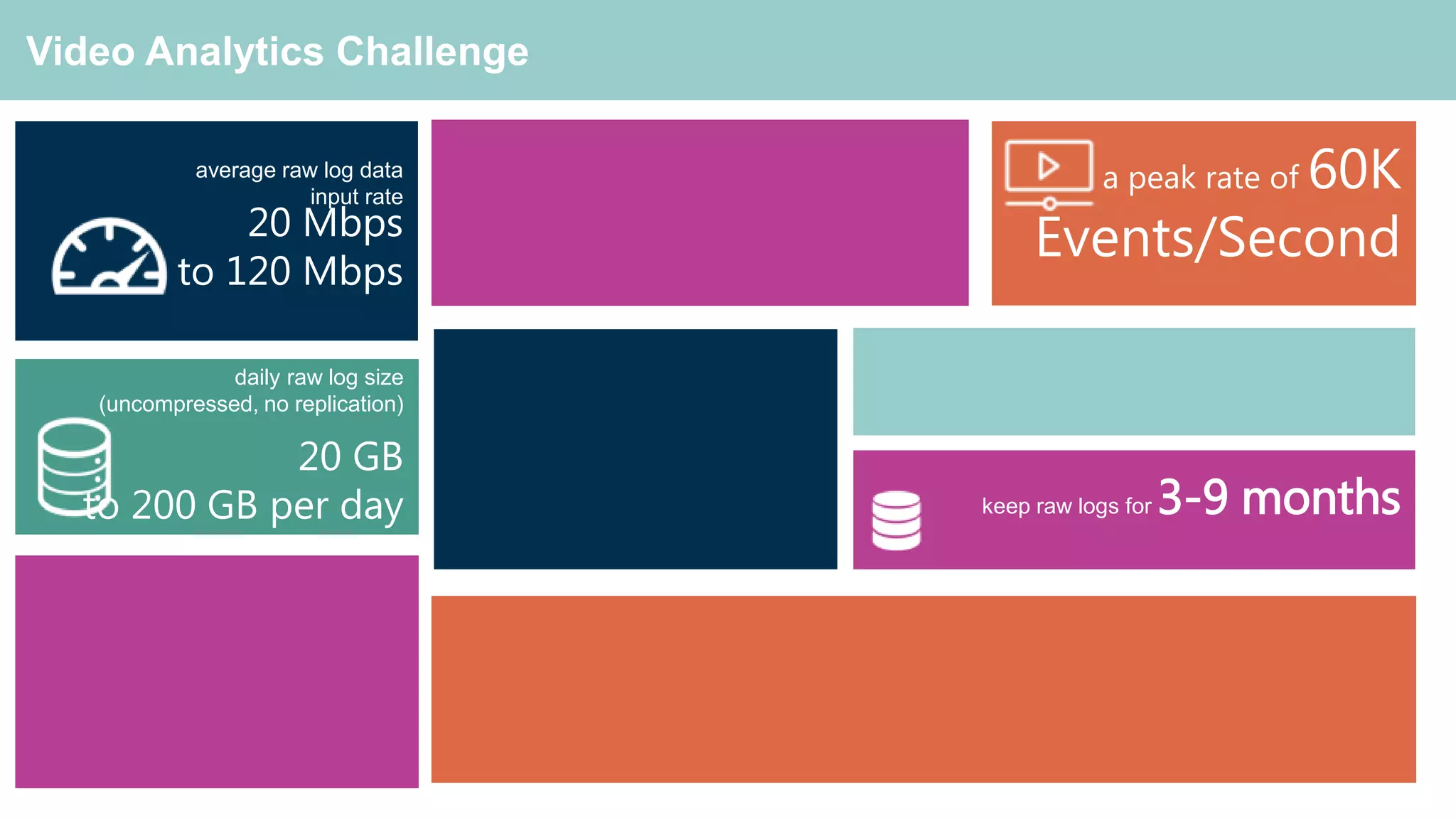

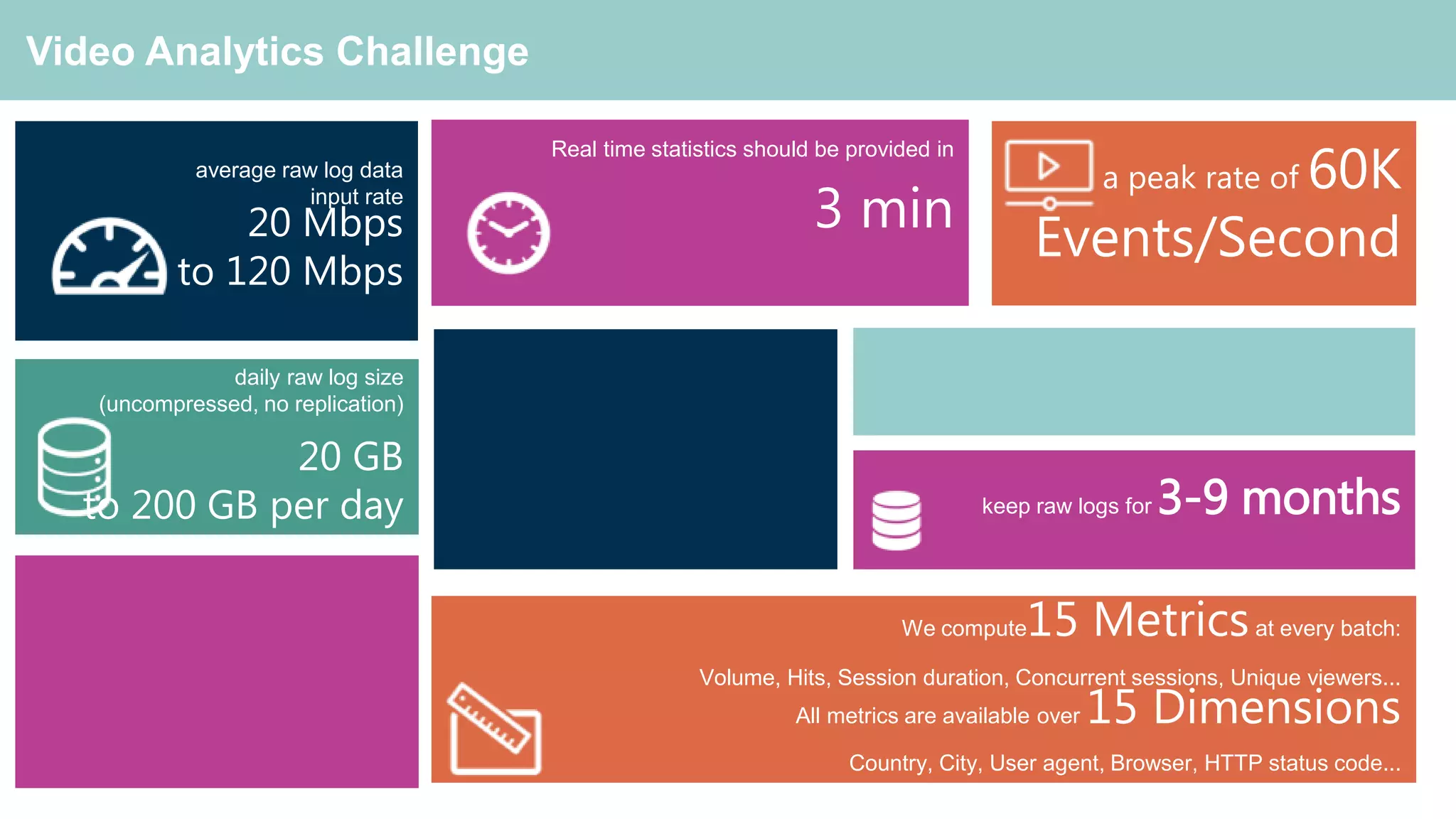

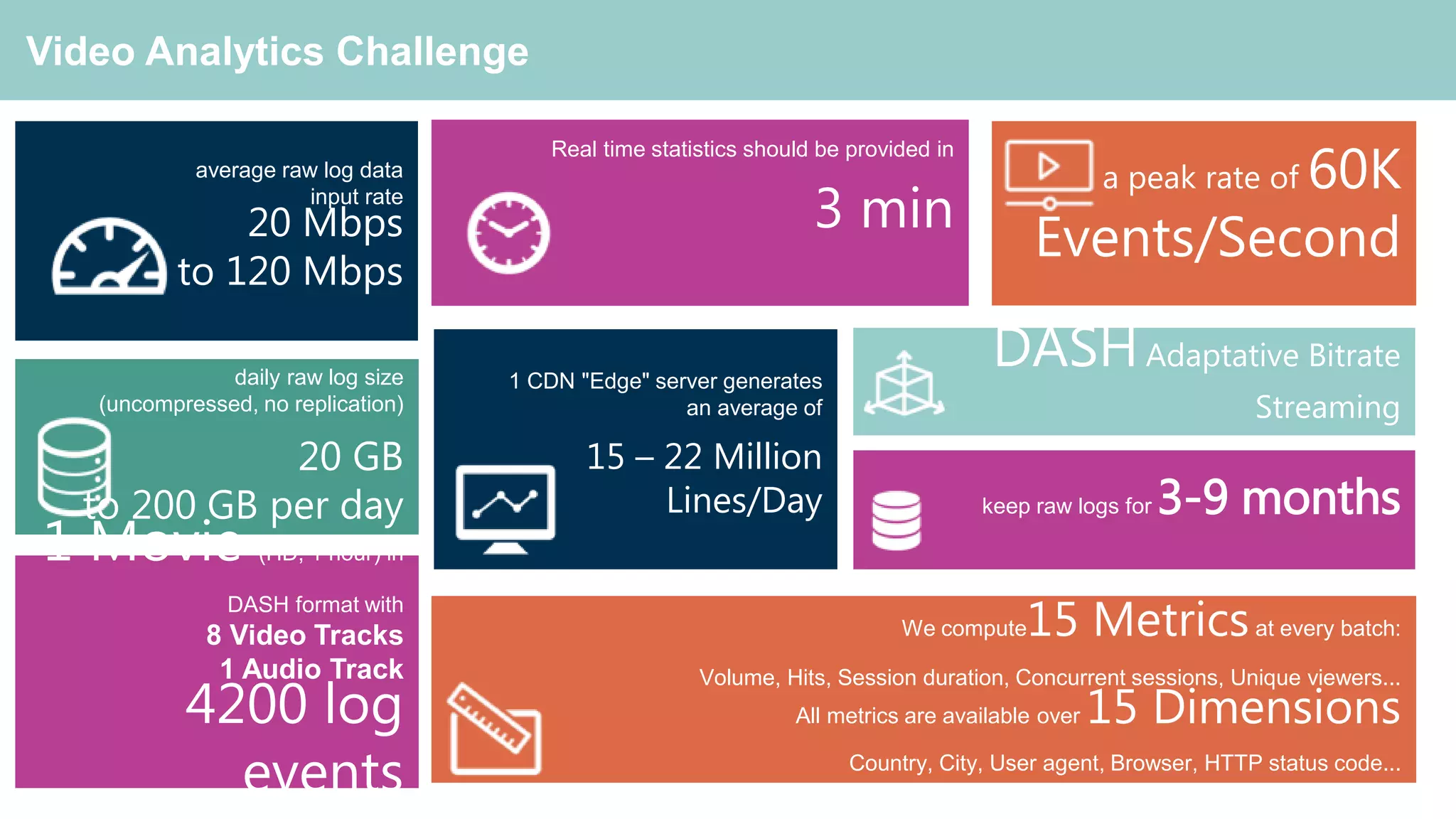

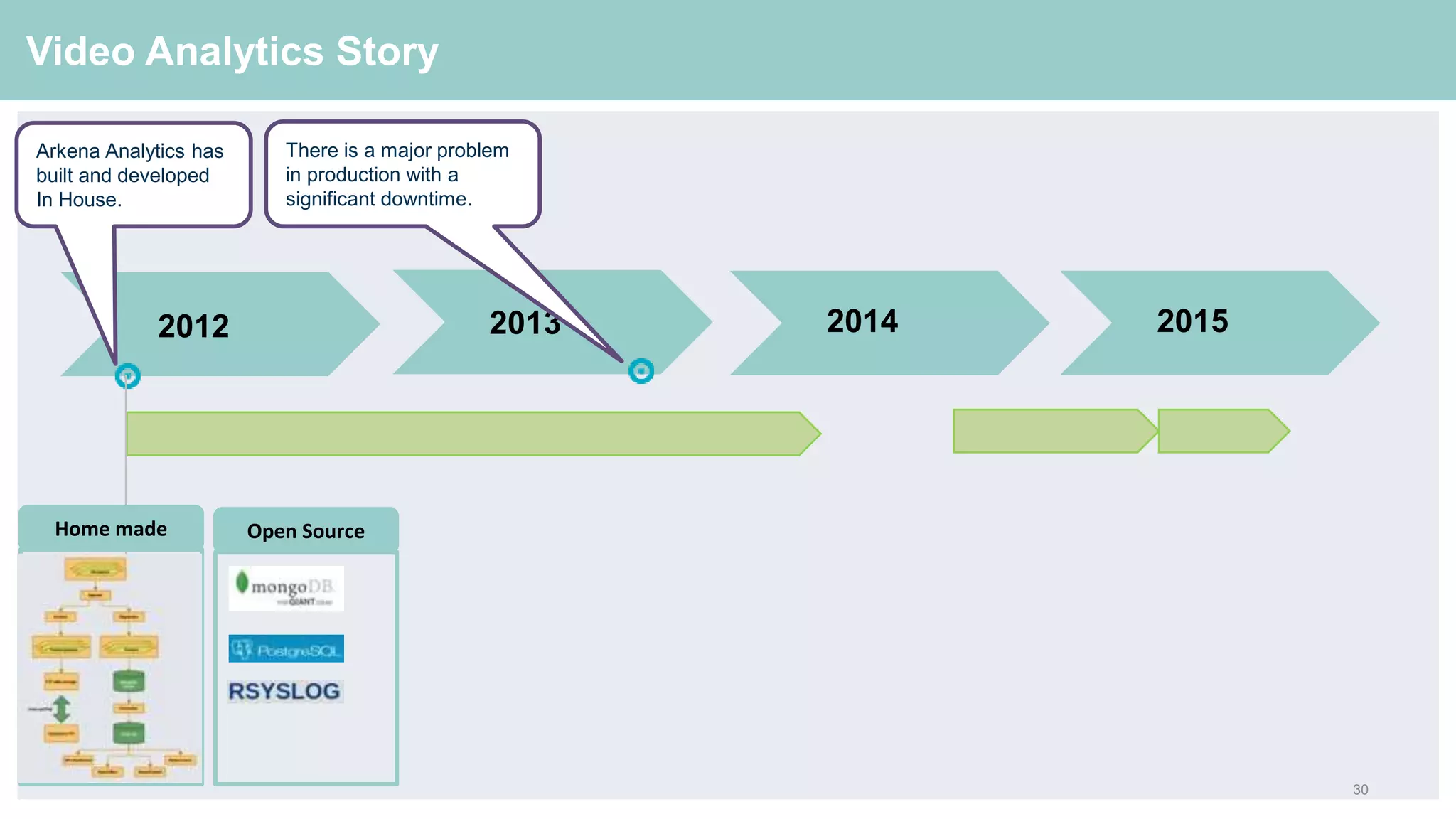

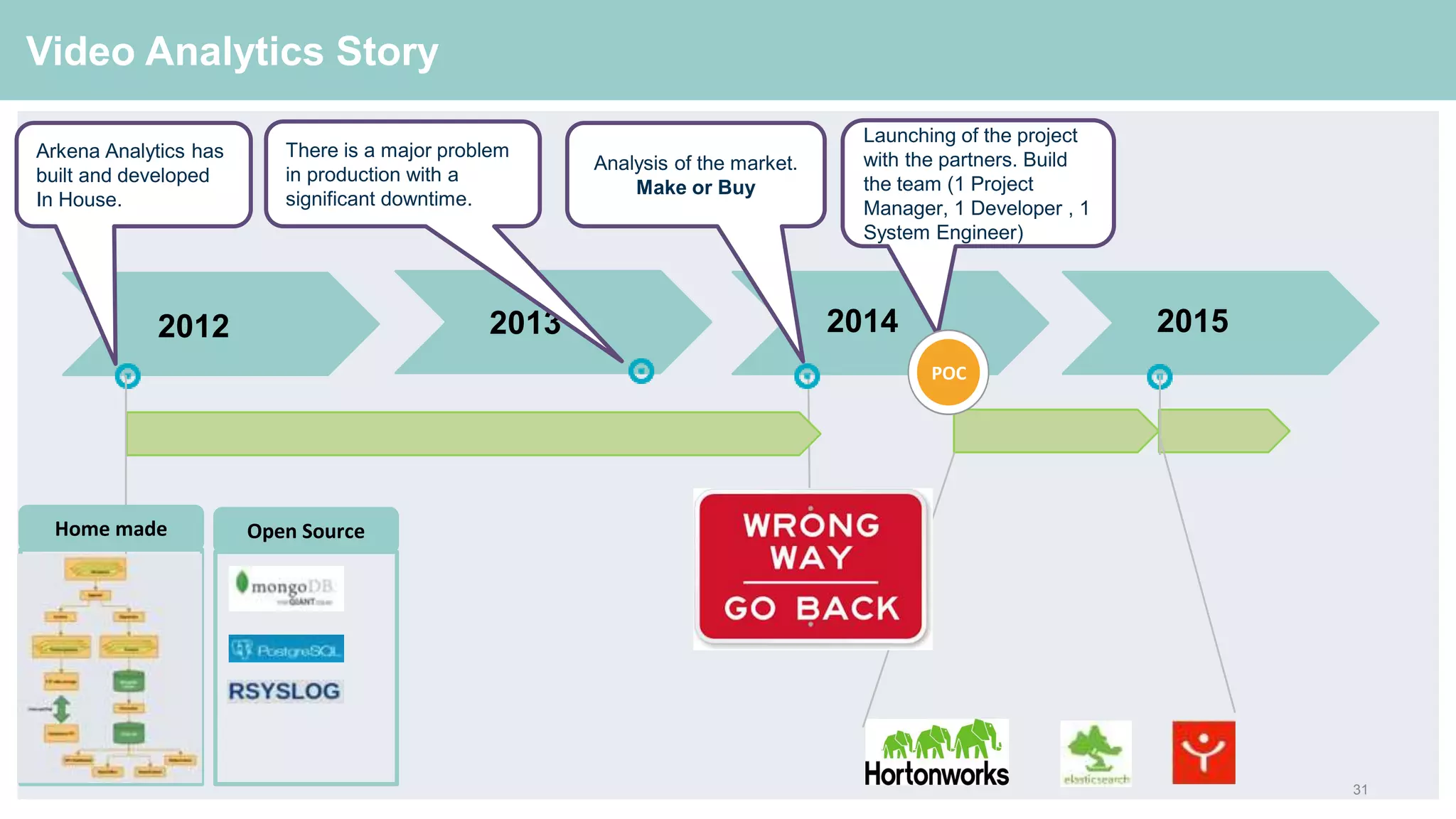

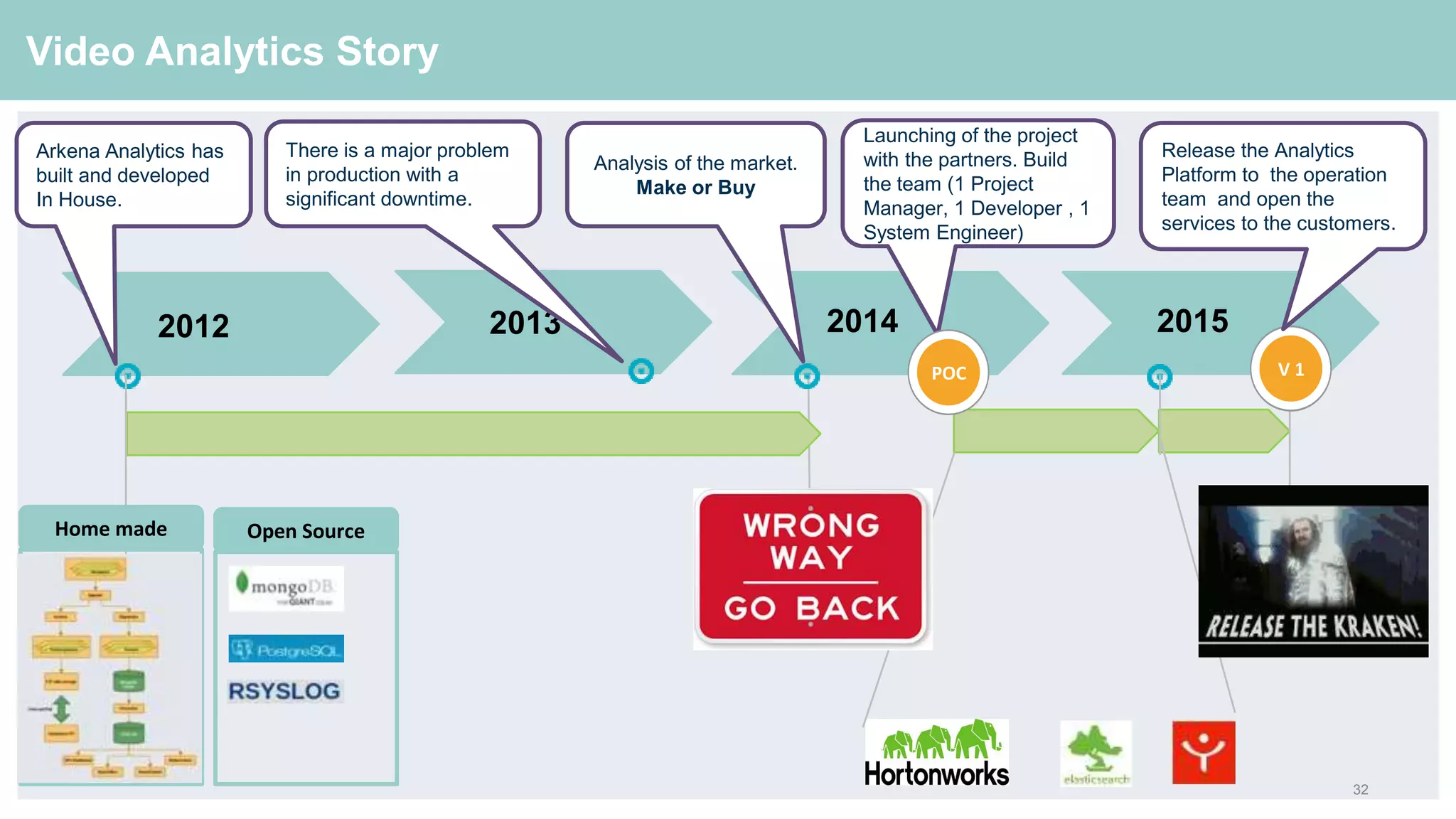

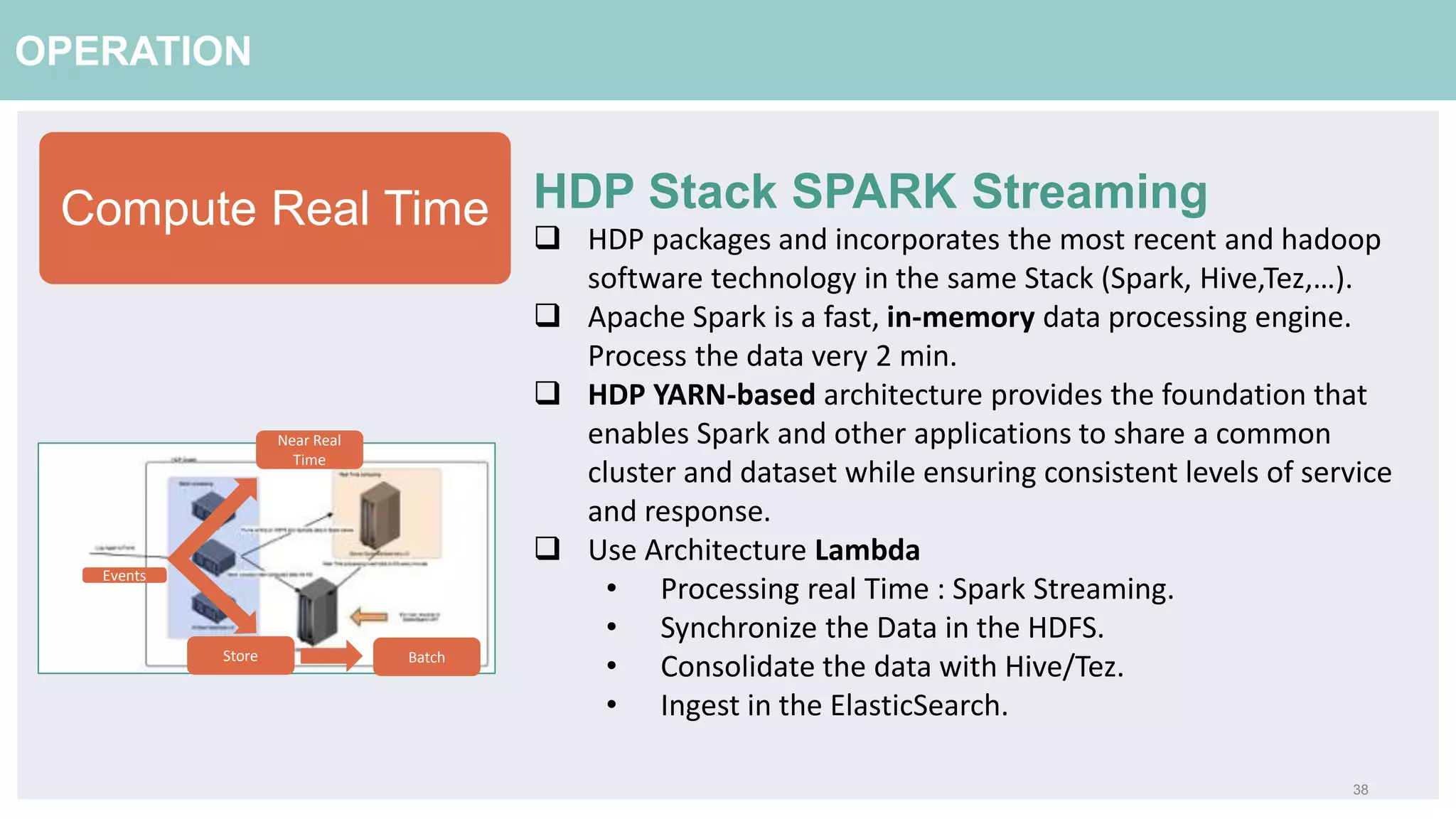

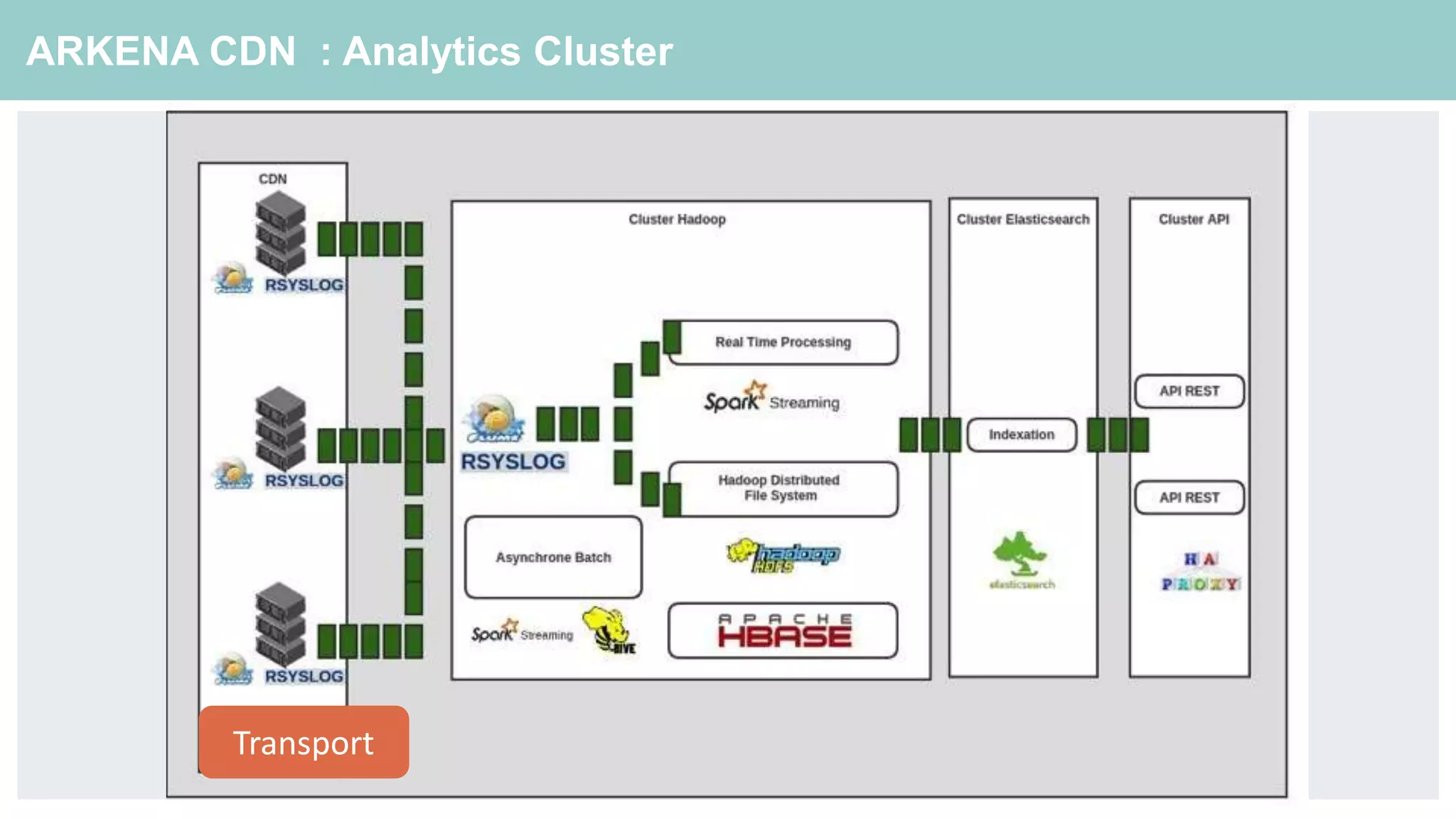

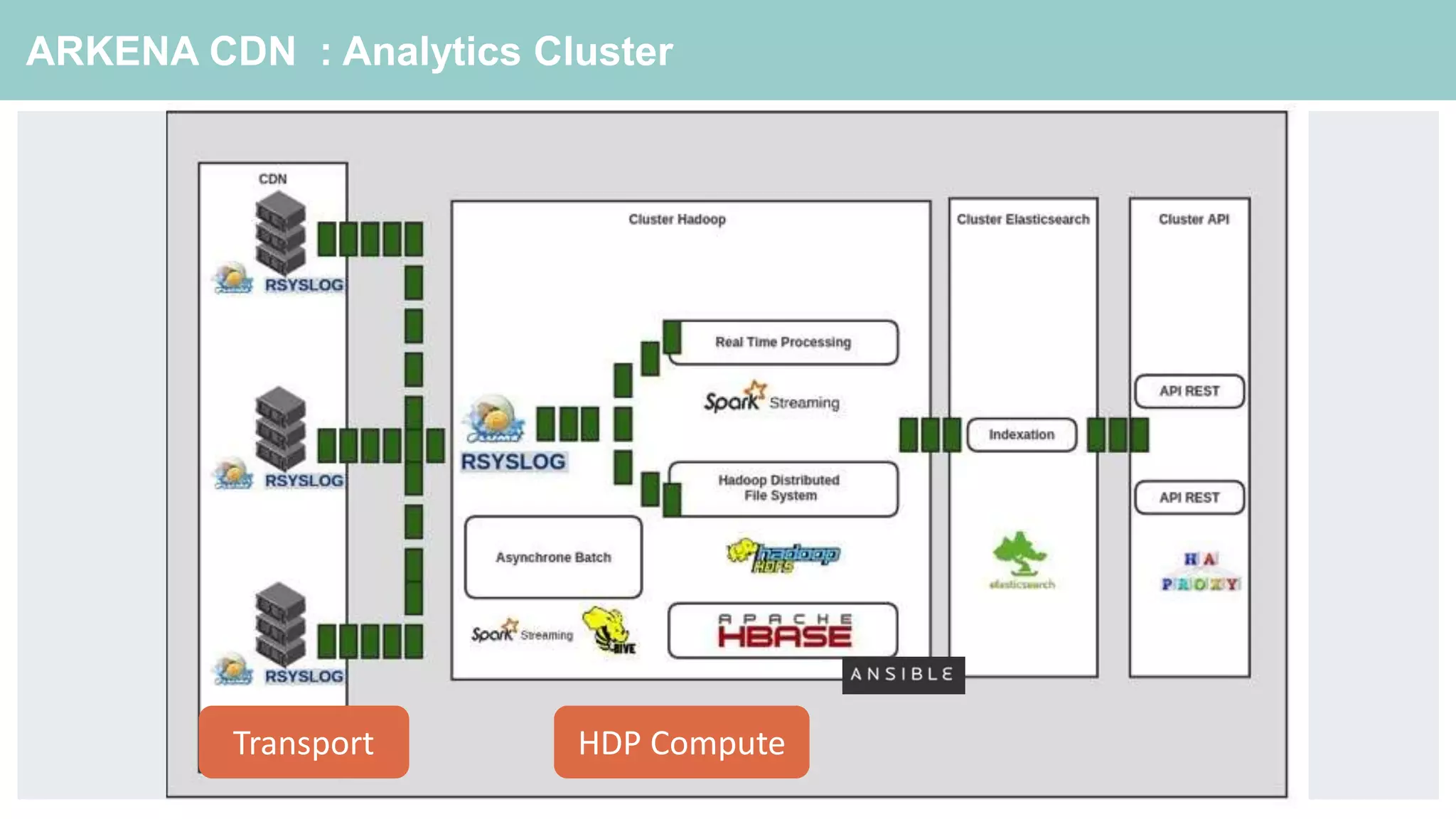

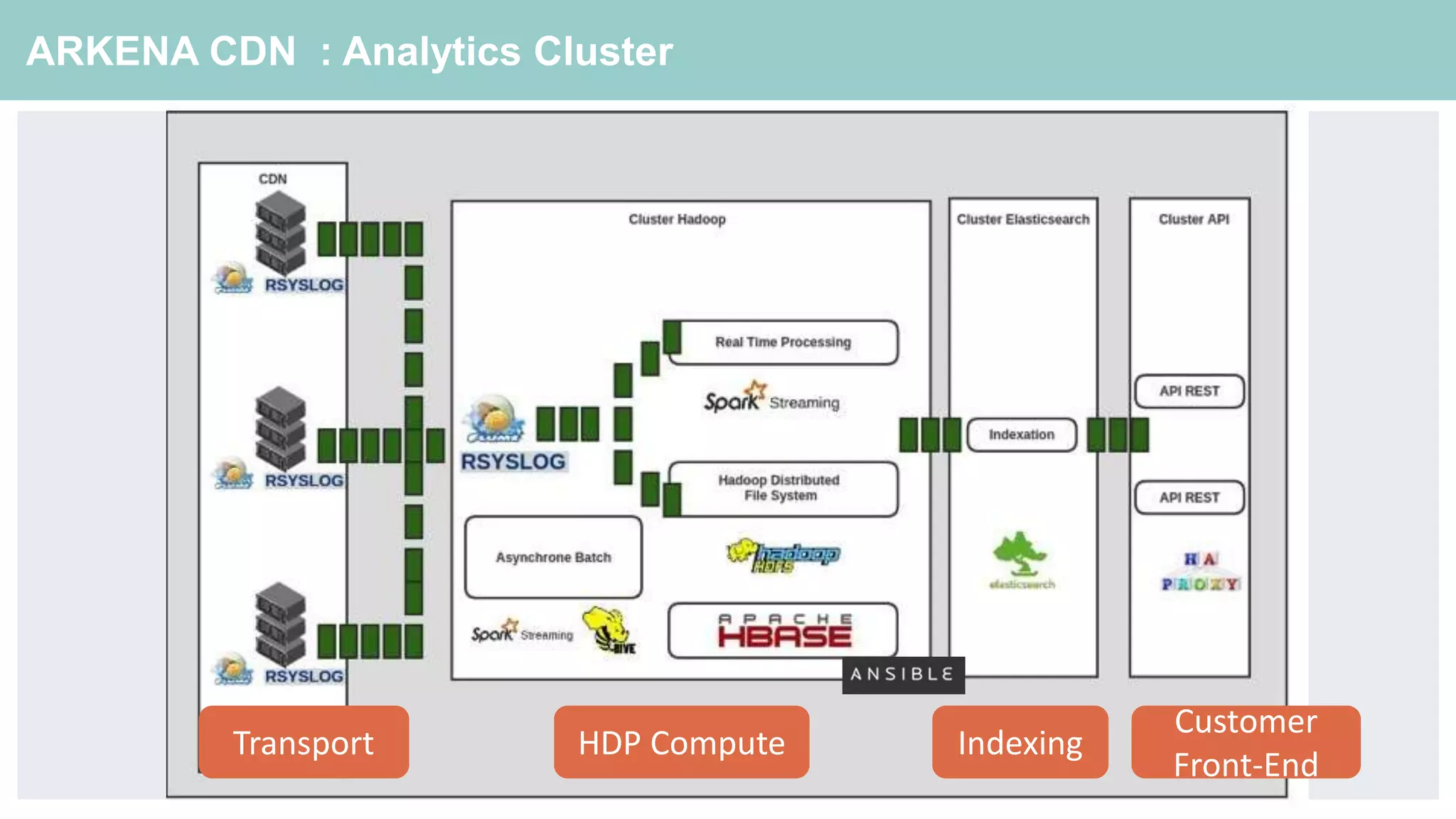

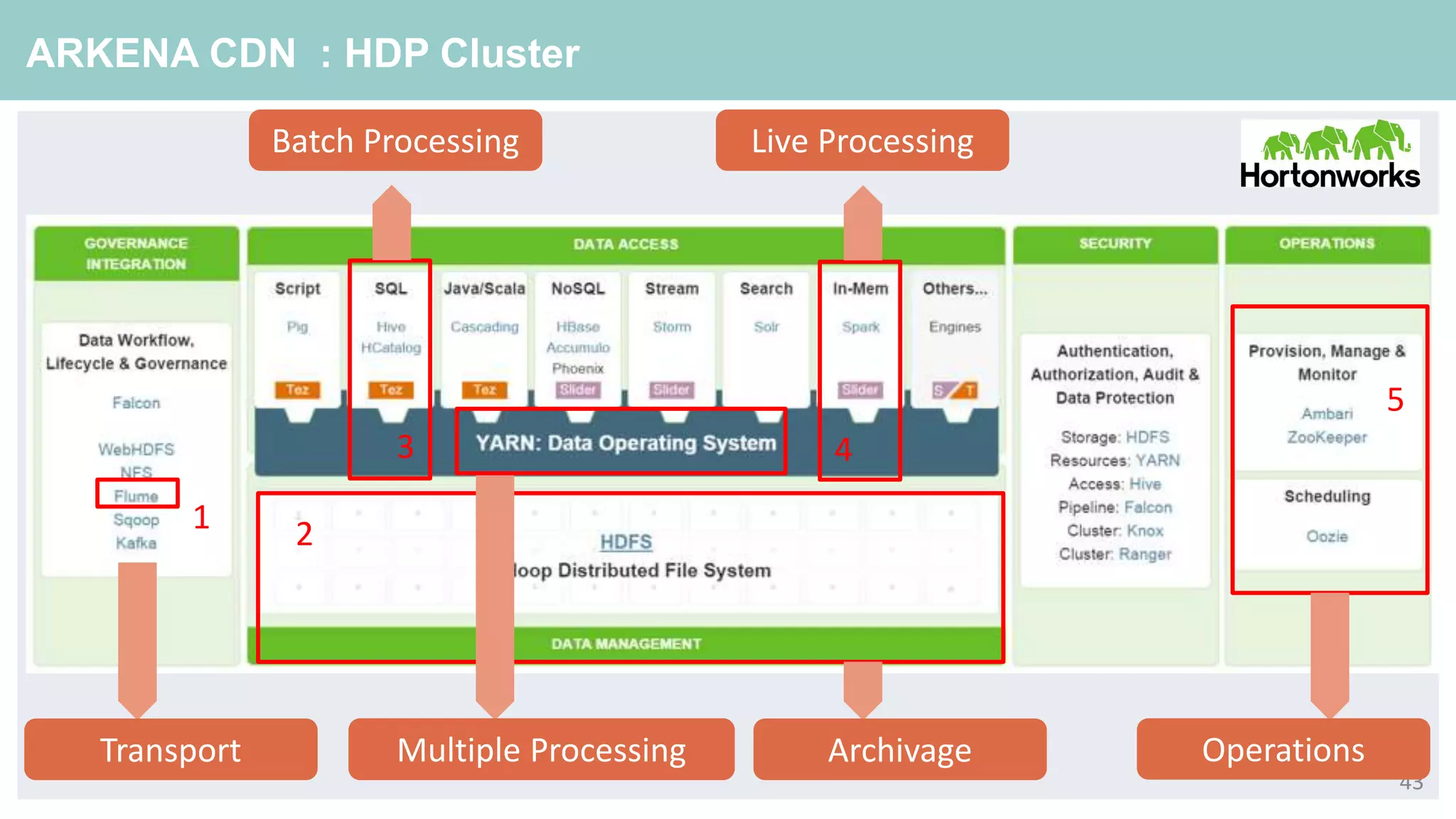

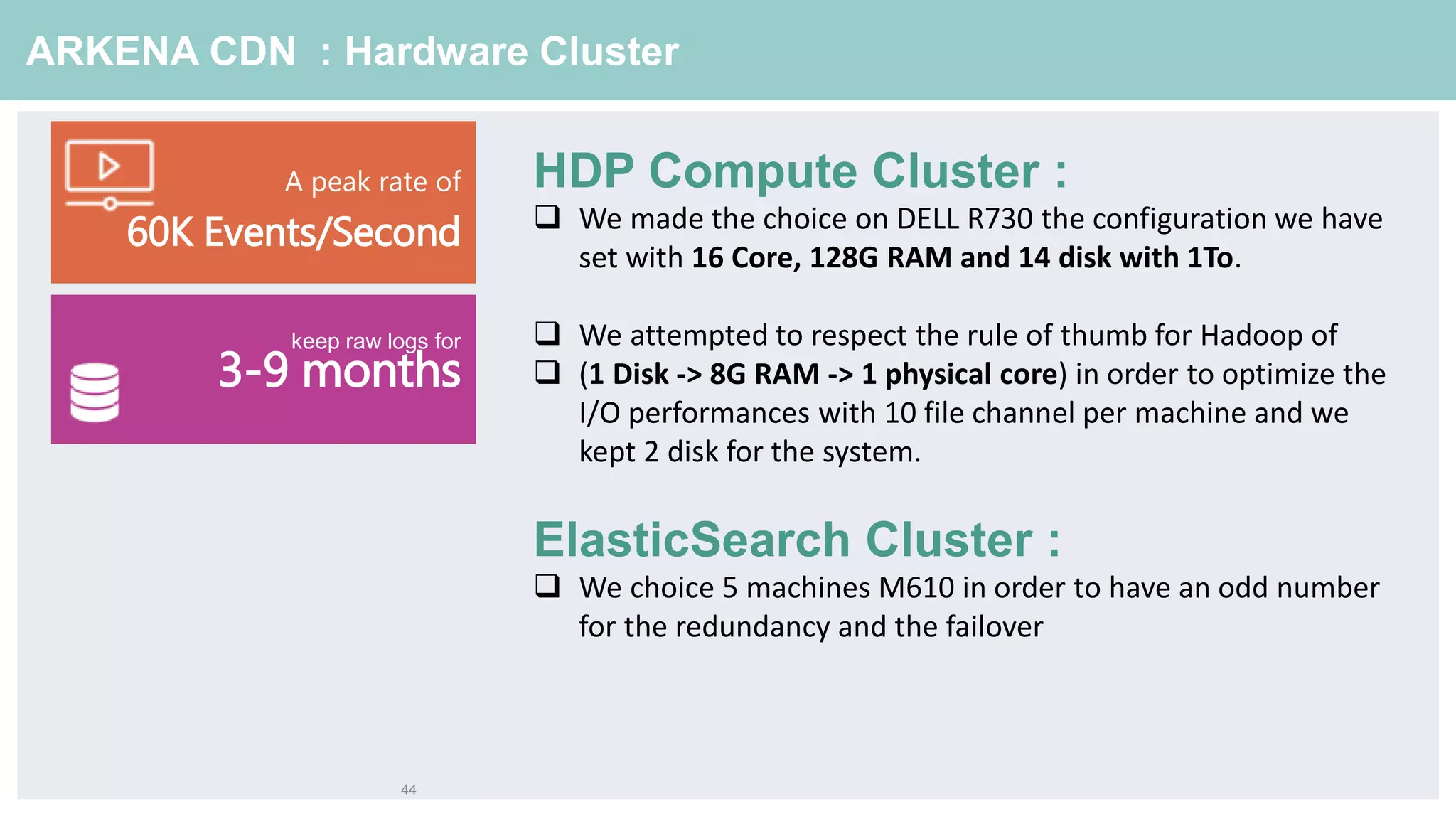

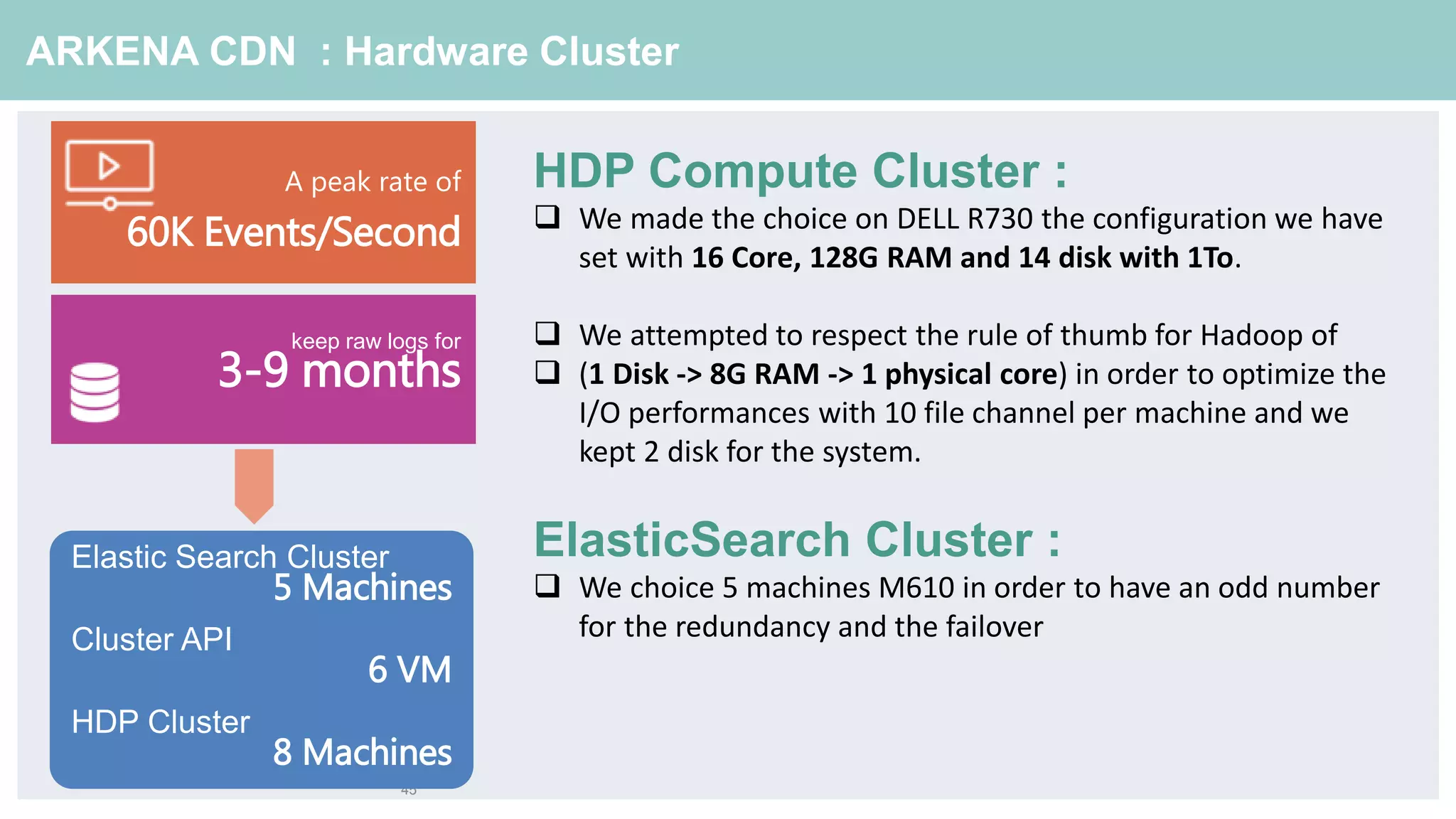

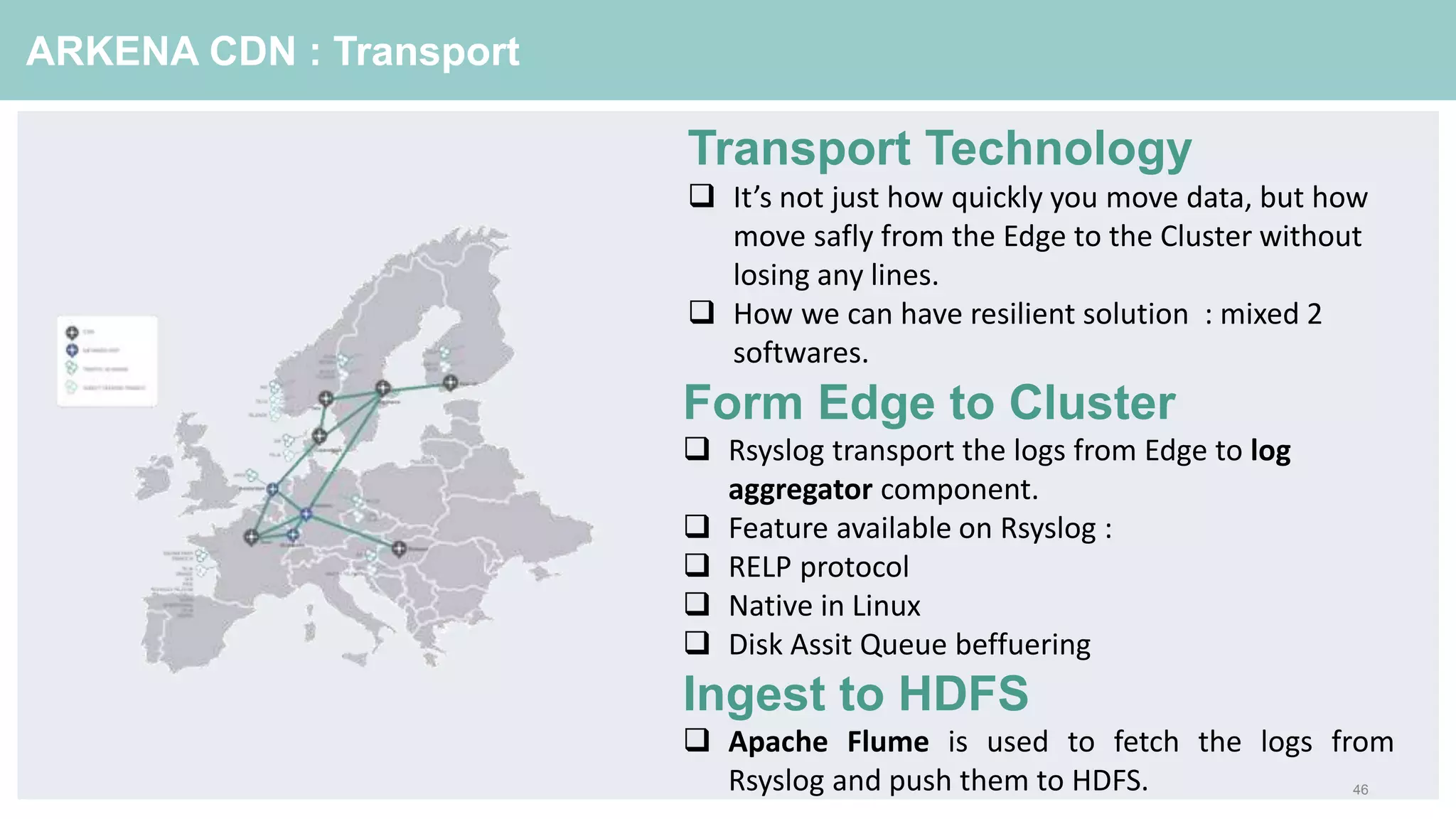

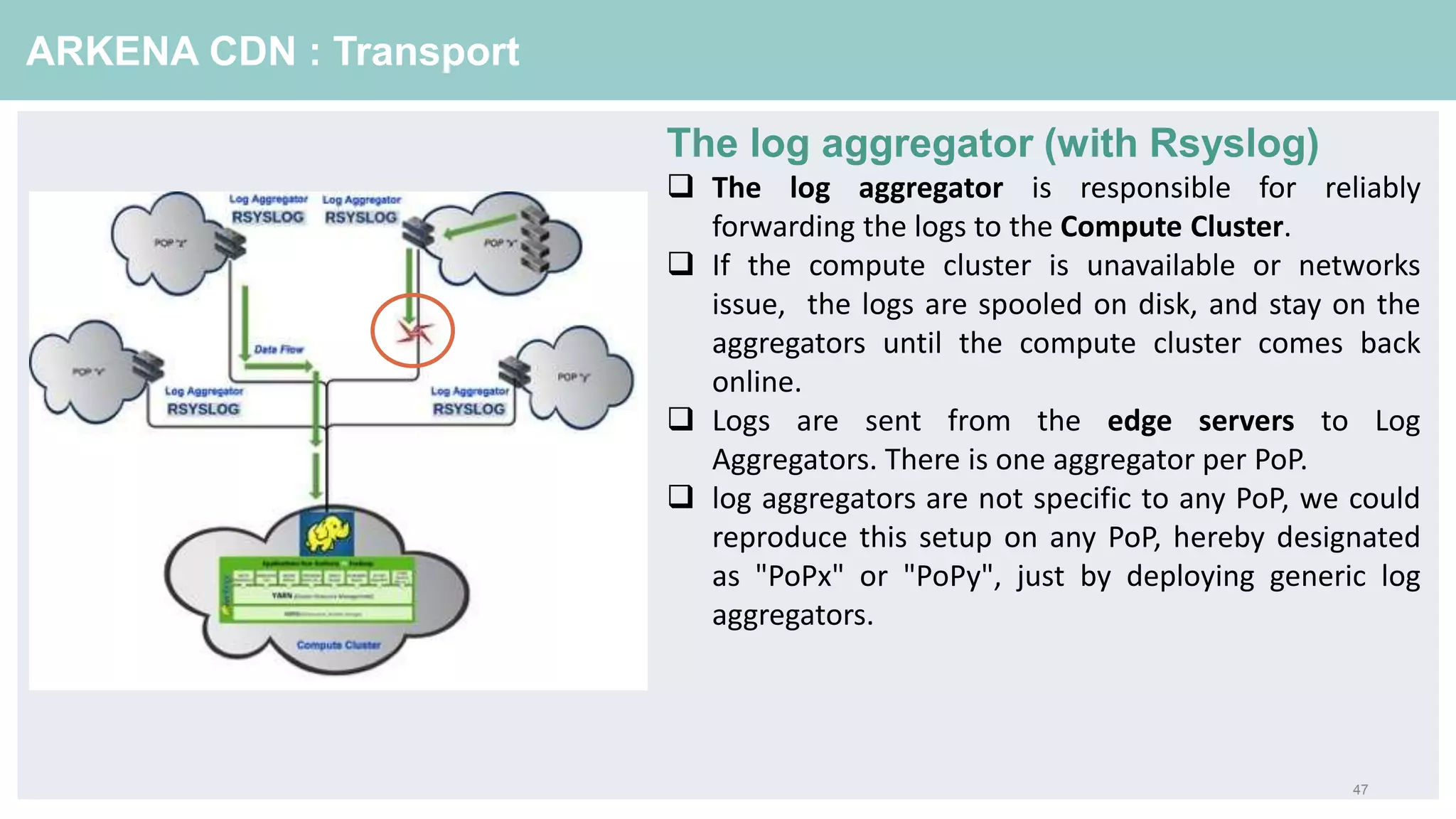

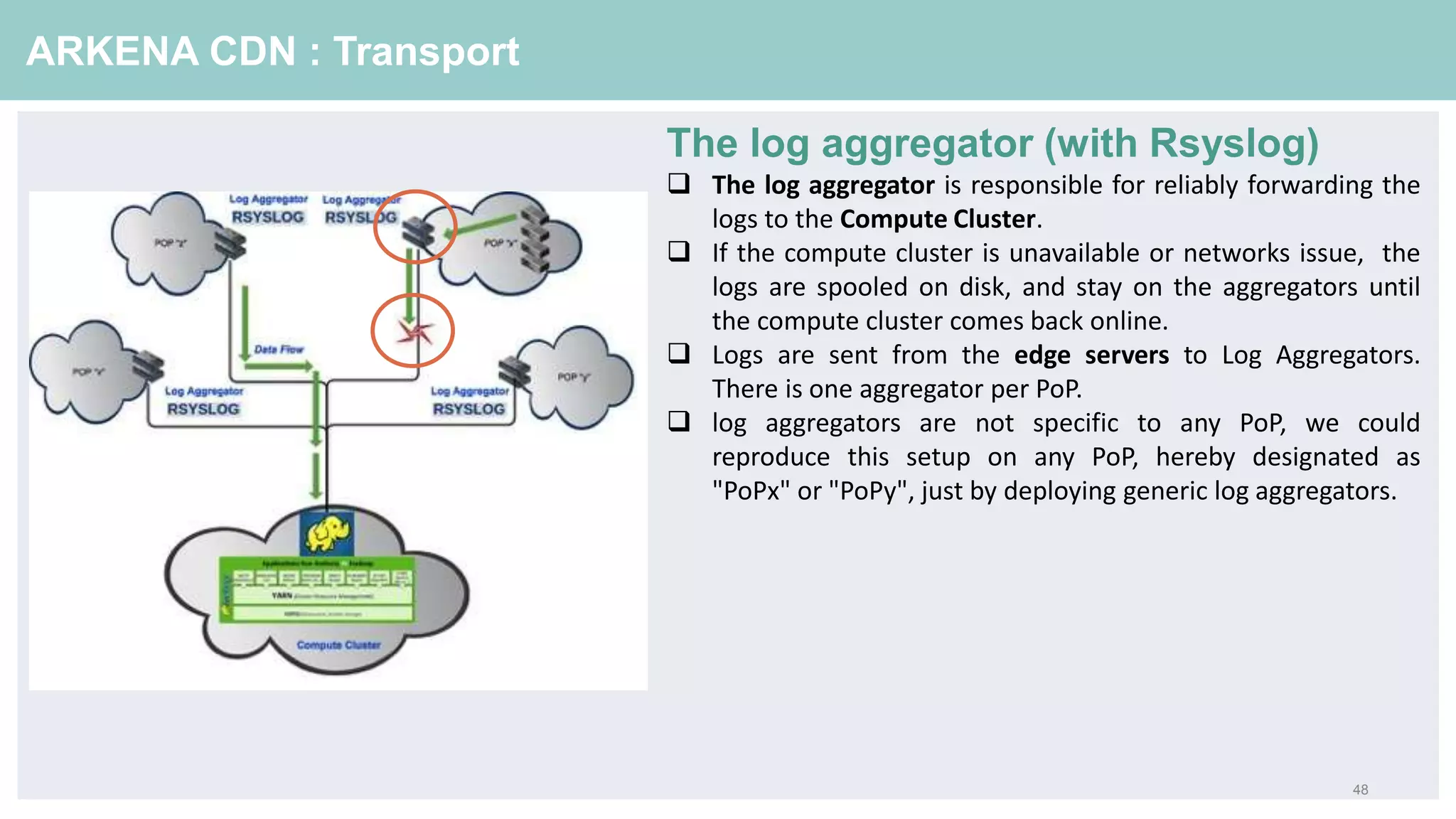

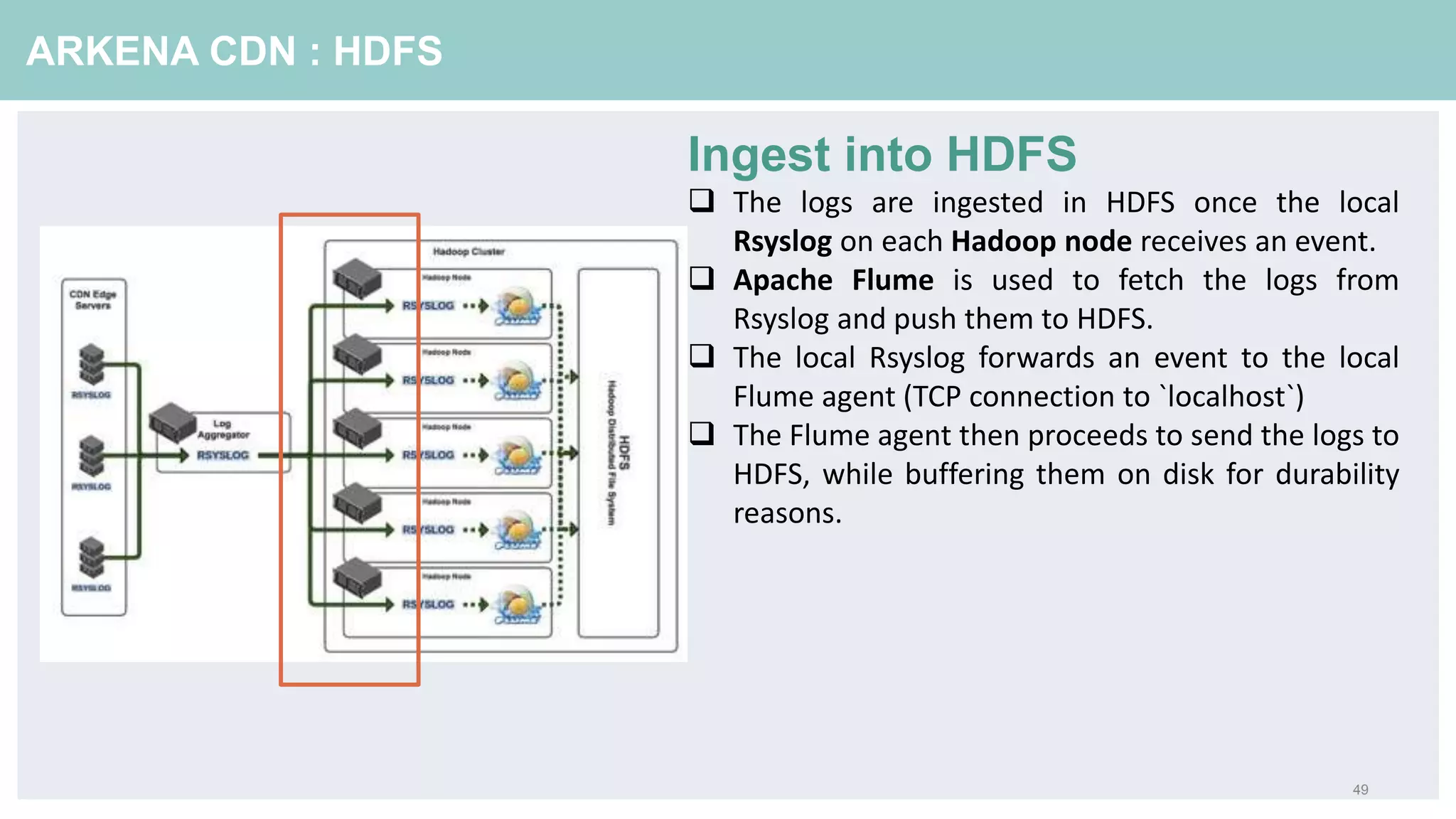

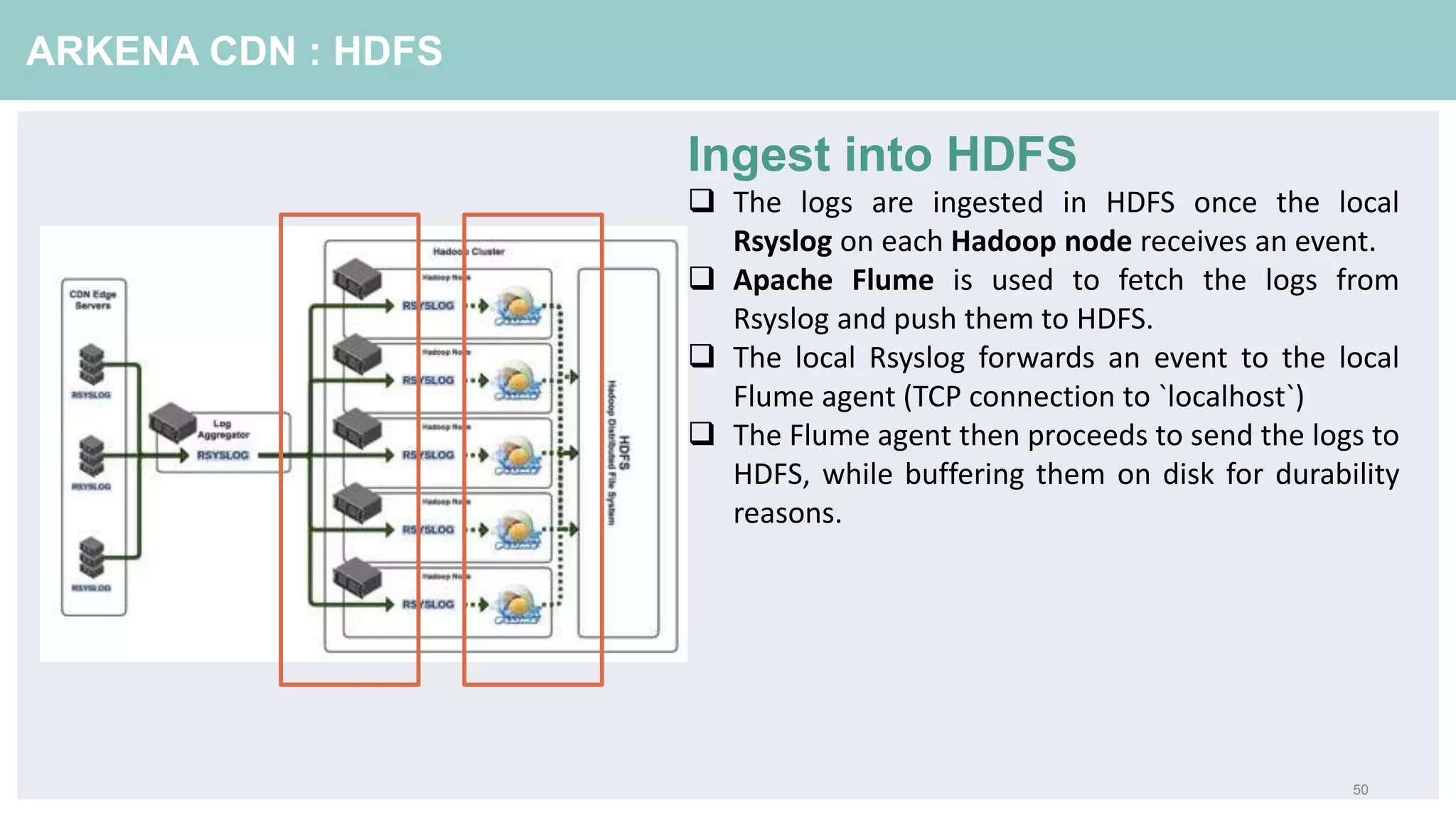

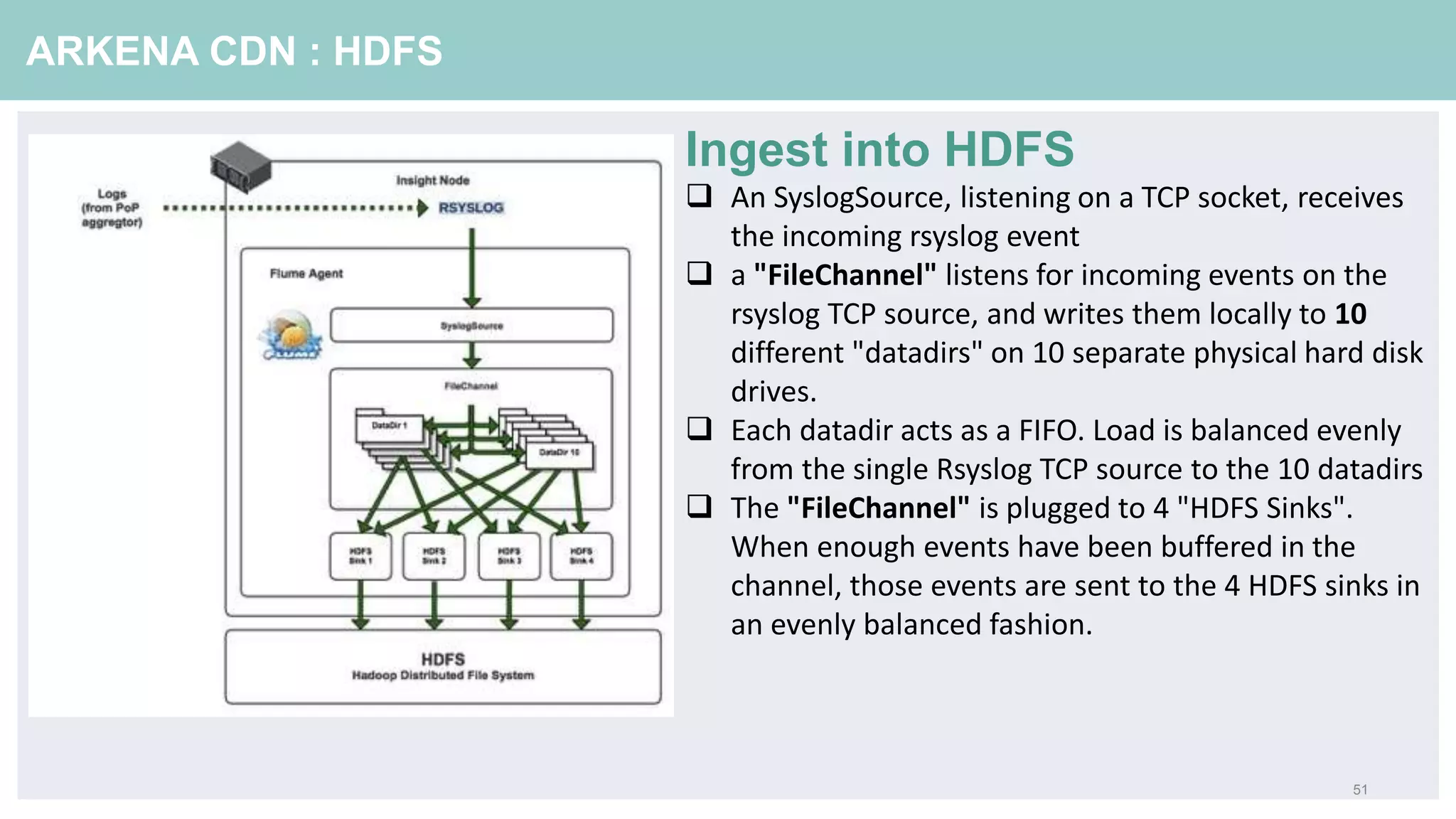

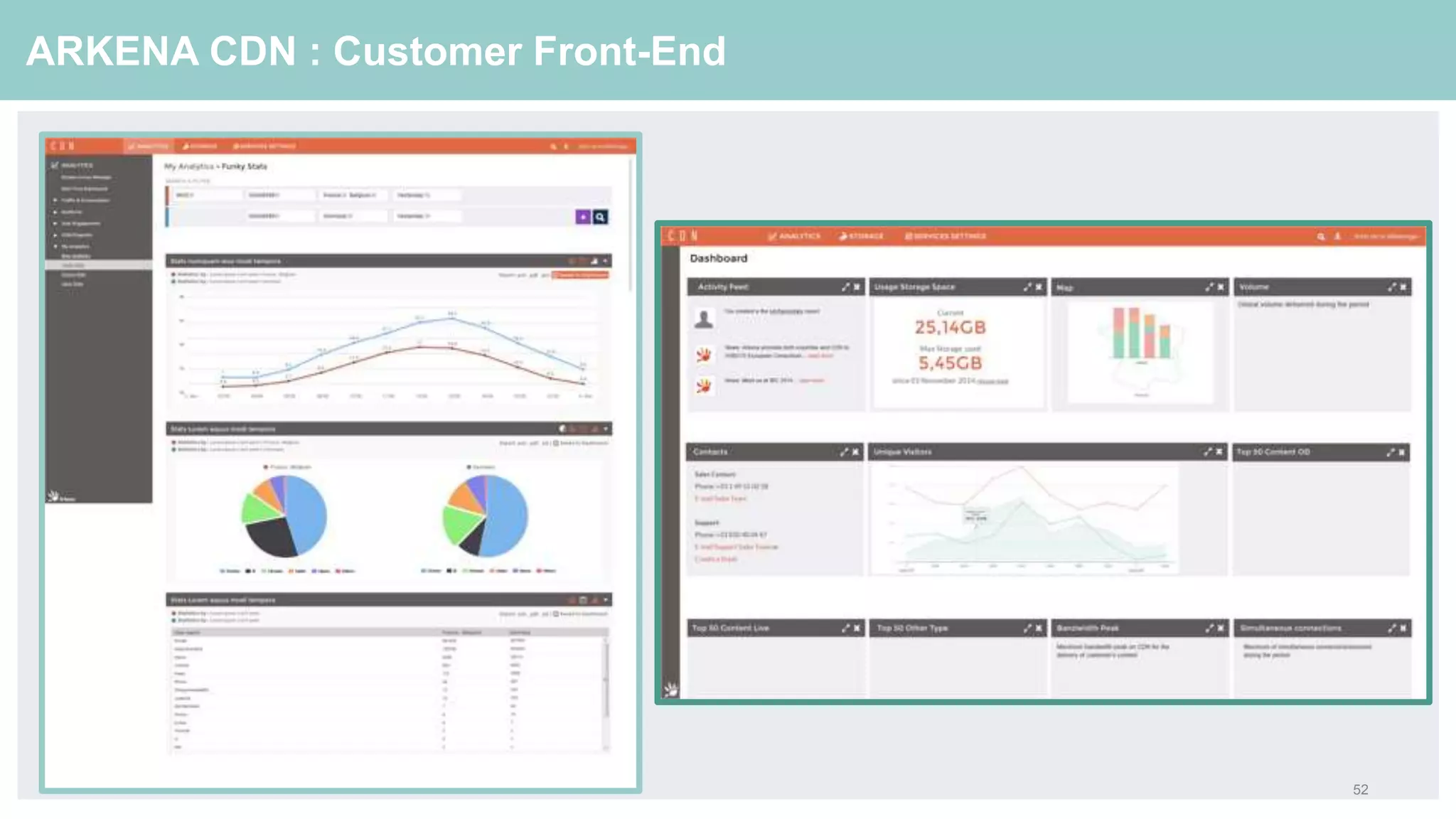

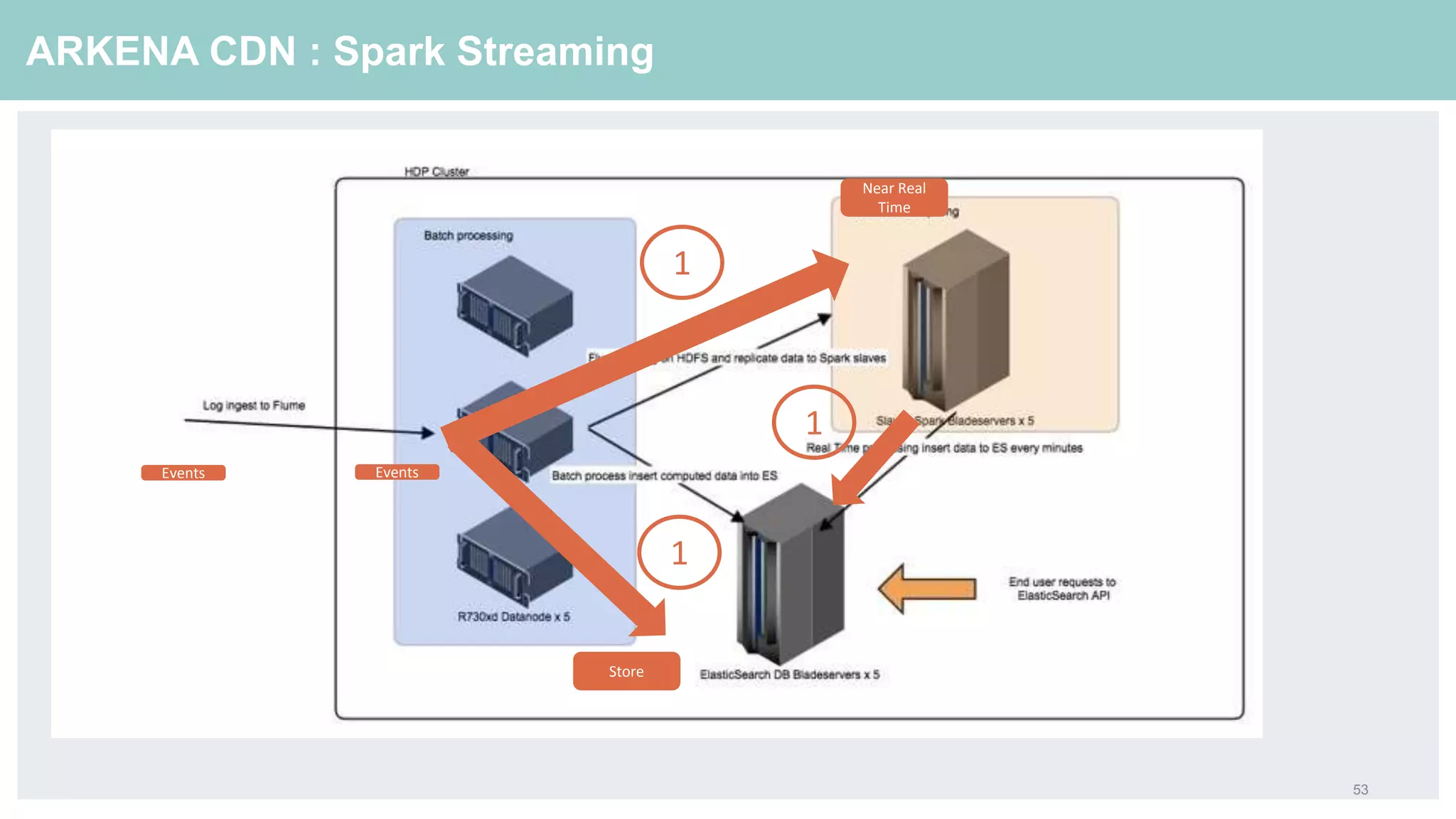

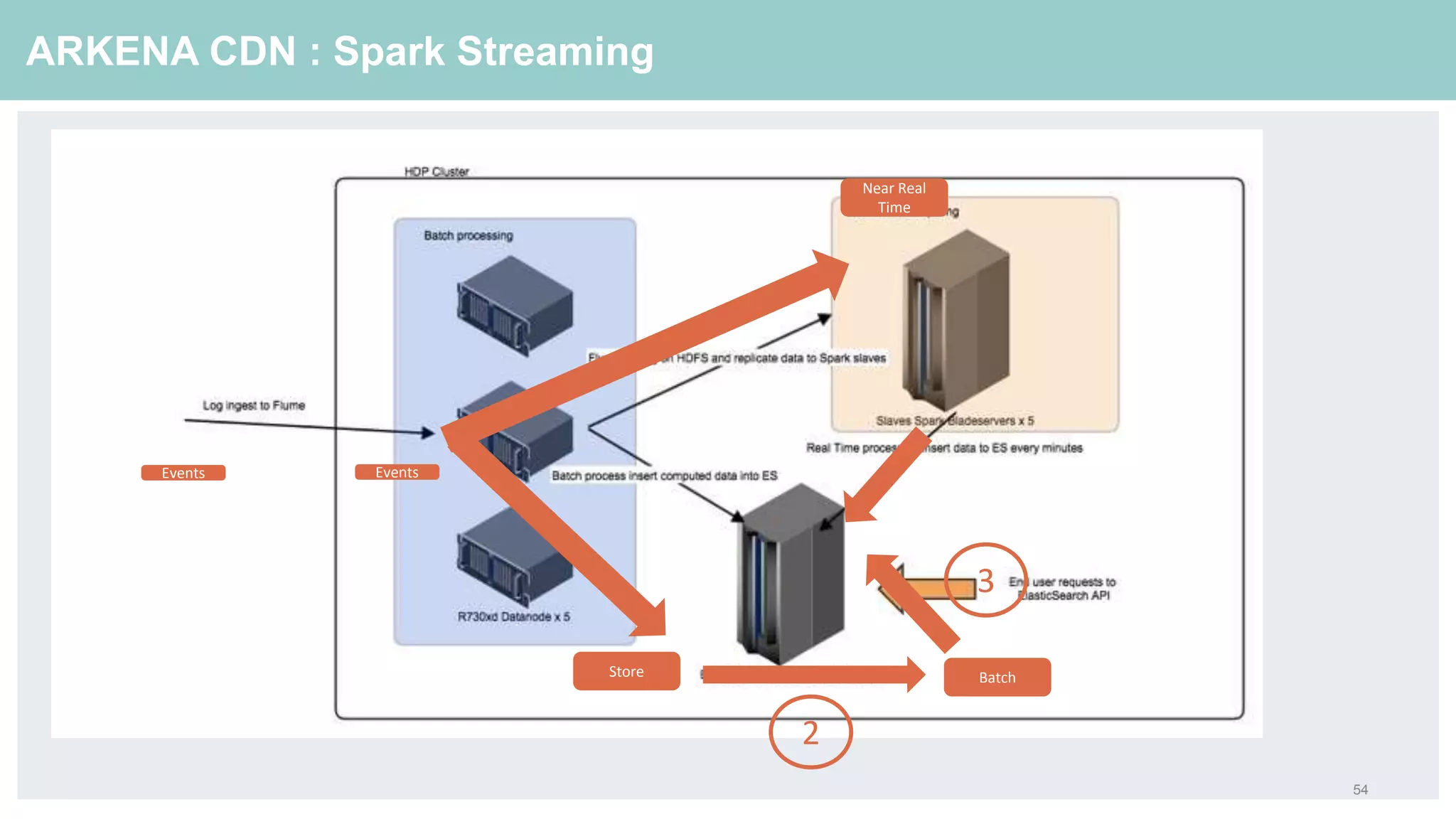

The document covers a webinar on how Arkena used Apache Hadoop to improve rich media experiences and video analytics. It explains why video analytics matter for content delivery networks (CDNs), describes the analytics challenges Arkena faced, and presents the infrastructure and technology choices it made to process and analyze media data efficiently. The discussion emphasizes the benefits of the Hortonworks Data Platform for scalability, reliability, and advanced analytics.