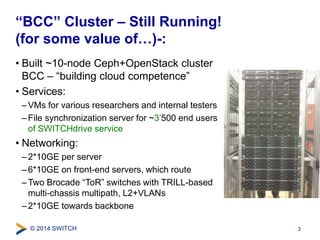

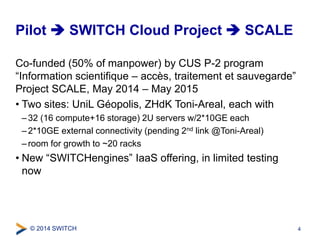

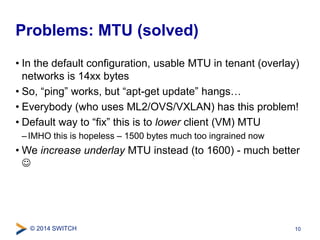

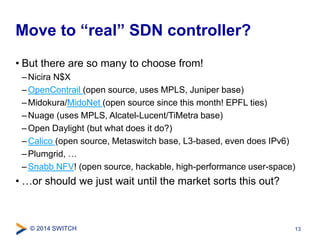

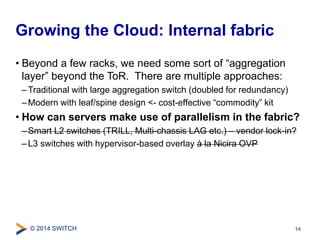

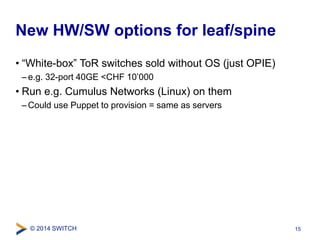

The document details updates on SDN applications and the SwitchCloud project as of November 2014, including progress on the 'bcc' cluster and plans for network scalability. It discusses performance problems and missing features in OpenStack networking, with a focus on exploring SDN controller options and the need for an aggregation layer in cloud structures. The outlook emphasizes the ongoing evolution of the internet toward a cloud-integrated future, with significant developments anticipated in 5G and NFV.