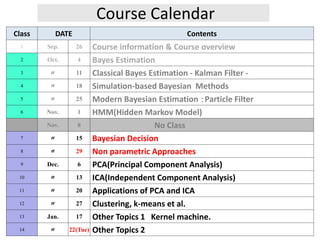

1. The document outlines the course calendar for a Bayesian estimation course, with topics including Bayes estimation, Kalman filters, particle filters, hidden Markov models, Bayesian decision theory, and applications of principal component analysis and independent component analysis.

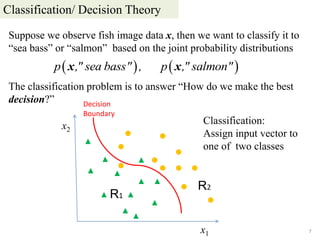

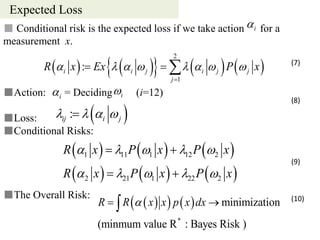

2. The lecture on Bayesian decision theory introduces classification and decision problems, Bayes' decision theory, discriminant functions, and the Gaussian case. It discusses how to classify observations into categories by using loss functions and the conditional risk, so that the overall risk is minimized.

3. Bayesian decision theory assigns each observation to a category so as to minimize the expected loss. It combines prior probabilities and likelihood functions into posterior probabilities, then applies a loss function to derive decision rules that minimize the risk or, under a zero-one loss, the probability of error.
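The decision rule summarized above can be illustrated with a minimal Python sketch. It is not taken from the slides: the function name `bayes_decision`, the two-class setup, and all numeric values are assumptions chosen for illustration. The sketch computes posteriors from priors and likelihoods via Bayes' rule, evaluates the conditional risk of each action, and picks the action with the smallest risk.

```python
def bayes_decision(priors, likelihoods, loss):
    """Pick the minimum-risk action for a single observation x.

    priors[j]      : P(w_j), prior probability of class j
    likelihoods[j] : p(x | w_j), likelihood of x under class j
    loss[i][j]     : cost of taking action i when the true class is j
    (All names and shapes here are illustrative assumptions.)
    """
    # Bayes' rule: posterior is proportional to prior times likelihood.
    joint = [p * l for p, l in zip(priors, likelihoods)]
    evidence = sum(joint)
    posteriors = [j / evidence for j in joint]

    # Conditional risk of action i: sum over classes of loss[i][j] * P(w_j | x).
    risks = [sum(l_ij * p_j for l_ij, p_j in zip(row, posteriors))
             for row in loss]

    # Bayes decision rule: choose the action with the smallest conditional risk.
    best_action = min(range(len(risks)), key=risks.__getitem__)
    return posteriors, risks, best_action


# Hypothetical two-class example.
priors = [0.7, 0.3]
likelihoods = [0.2, 0.6]

# Zero-one loss: minimizing risk is the same as picking the largest posterior.
zero_one = [[0, 1],
            [1, 0]]
post, risk_01, action_01 = bayes_decision(priors, likelihoods, zero_one)

# Asymmetric loss: wrongly choosing class 1 is five times as costly.
asymmetric = [[0, 1],
              [5, 0]]
_, risk_asym, action_asym = bayes_decision(priors, likelihoods, asymmetric)
```

With the zero-one loss the rule selects the class with the larger posterior (class 1 here), while the asymmetric loss shifts the decision to class 0 even though its posterior is smaller. This shows how the loss function, and not only the posterior, drives the decision.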

![Slide 3: 1. Introduction, 1.1 Pattern Recognition](https://image.slidesharecdn.com/2012mdsp-pr07bayesdecision-130701022252-phpapp01/85/2012-mdsp-pr07-bayes-decision-3-320.jpg)

The second part of this course is concerned with pattern recognition. Pattern recognition (machine learning) aims to give machines a high level of skill in sensing and taking actions, as humans do, according to what they observe.

Definitions of pattern recognition that have appeared in books:

"The assignment of a physical object or event to one of several pre-specified categories" (Duda et al. [1])

"The science that concerns the description or classification (recognition) of measurements" (Schalkoff, Wiley Online Library)