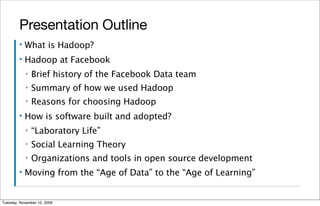

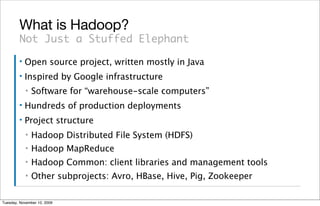

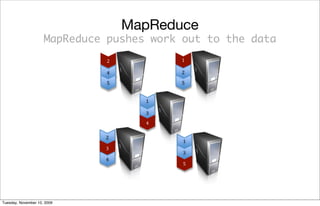

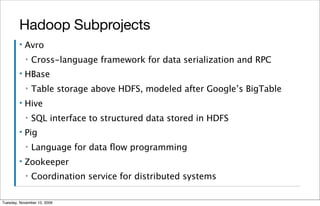

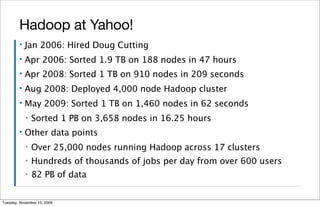

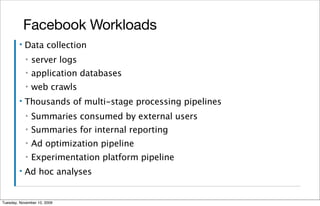

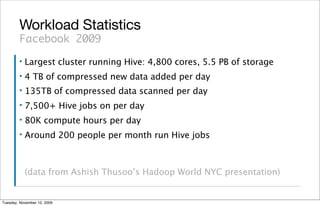

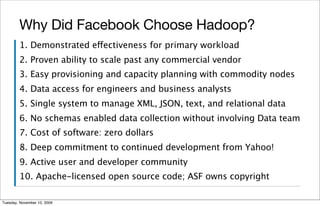

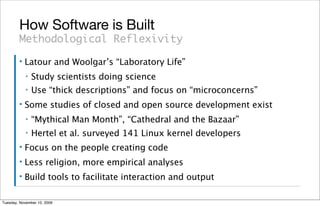

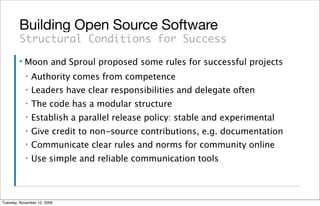

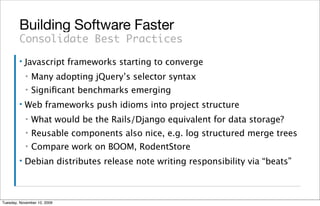

The document summarizes Jeff Hammerbacher's presentation "Socializing Big Data: Lessons from the Hadoop Community." The presentation gives an overview of Apache Hadoop and describes how Facebook adopted it to meet its large-scale data processing needs. It also discusses how open source software is built and adopted through open collaboration in communities such as the Hadoop community. The stated goal is to accelerate the evolution of data processing technologies.