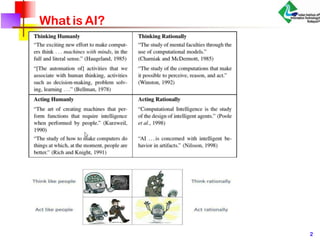

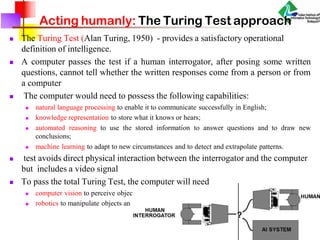

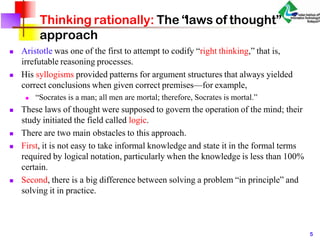

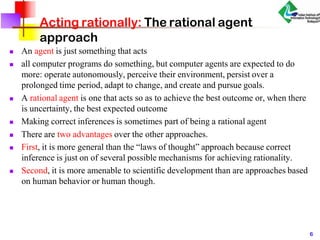

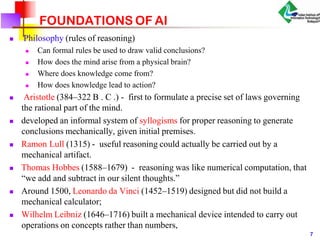

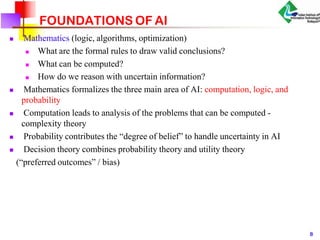

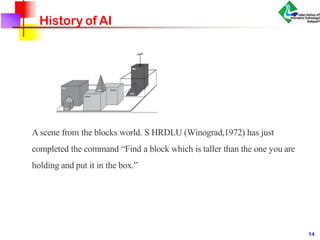

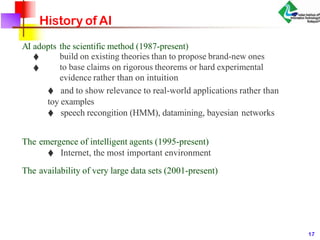

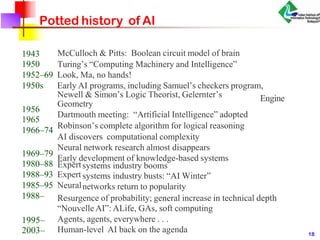

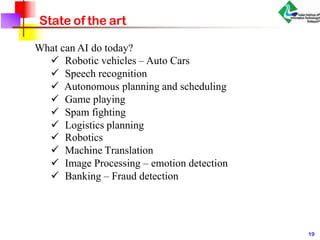

The document provides a comprehensive introduction to artificial intelligence (AI), covering foundational concepts such as the Turing Test and rational agents, along with the field's philosophical and scientific underpinnings. It outlines the historical development of AI, from early neural models and symbolic systems to contemporary applications in robotics, speech recognition, and machine learning. Finally, it highlights the challenges and critiques the field has faced as its technologies evolved over the decades.