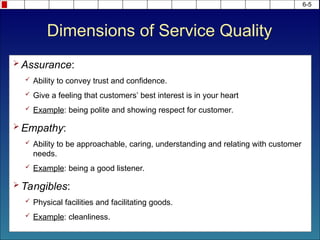

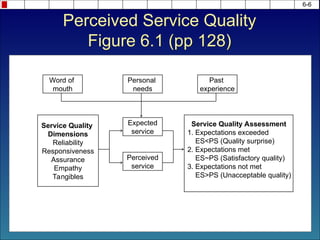

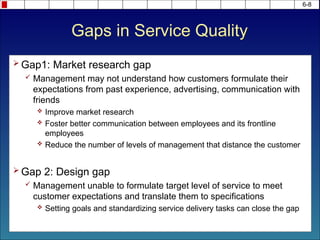

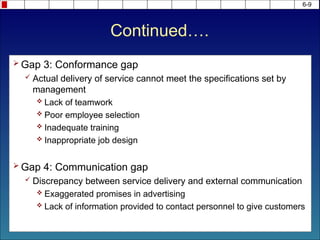

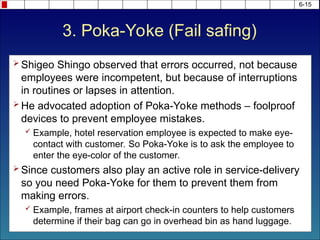

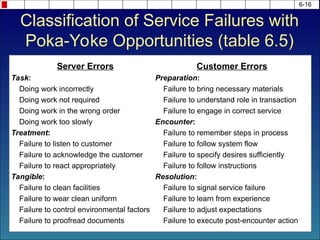

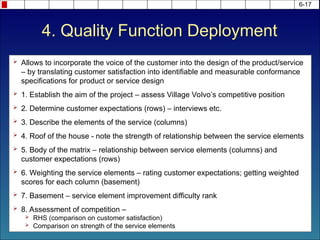

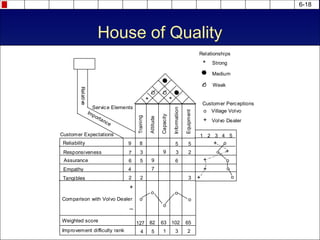

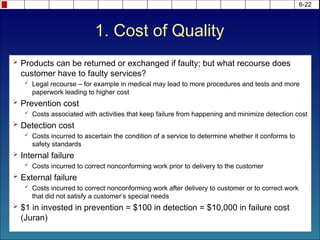

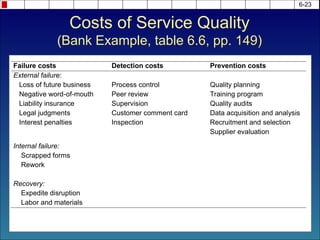

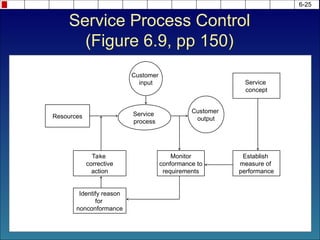

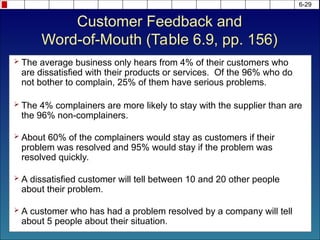

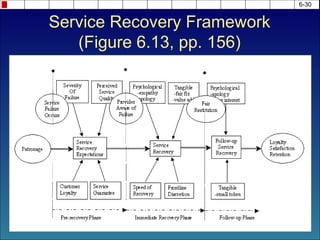

The document discusses service quality and outlines its five key dimensions: reliability, responsiveness, assurance, empathy, and tangibles. It presents the service quality gap model as a way to identify issues, and emphasizes the importance of service recovery and customer satisfaction. It also covers quality management methods, including Taguchi methods and poka-yoke, along with tools such as service audits and statistical process control for improving service delivery.