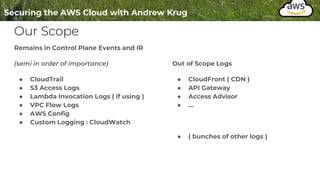

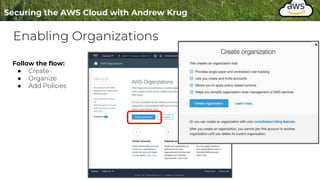

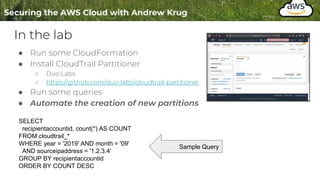

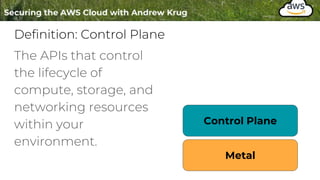

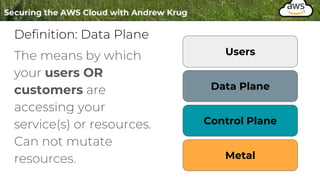

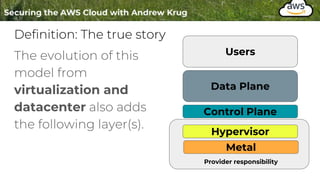

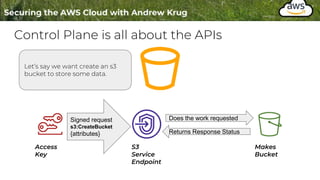

Logging is important for security, compliance, and operations in the cloud. There are two main types of logs: logs generated by the resources running in the cloud (the data plane) and logs of the API calls that create, modify, and delete those resources (the control plane). In AWS, CloudTrail is the primary way to log control-plane API calls. Each record captures who made the call, which resources were affected, and other context such as the time, region, and source IP address. When implementing logging, structure accounts with organizational units and logging policies so that every account is logged appropriately for security and compliance.
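The sketch below shows one way to consume CloudTrail control-plane records to answer "who changed what." It makes three assumptions. First, the log files have already been downloaded from S3 in the format CloudTrail delivers them: gzip-compressed JSON with a top-level `Records` array. Second, the helper names `load_records` and `who_changed_what` are hypothetical. Third, it relies only on a few standard record fields: `userIdentity.arn`, `eventSource`, `eventName`, `readOnly`, and `managementEvent`. Treat it as an illustration, not a complete analysis pipeline.

```python
import gzip
import json
from collections.abc import Iterator


def load_records(path: str) -> list[dict]:
    """Read one downloaded CloudTrail log file and return its event records.

    Assumes CloudTrail's S3 delivery format: a gzip-compressed JSON document
    whose top-level "Records" key holds the list of events.
    """
    with gzip.open(path, "rt", encoding="utf-8") as fh:
        return json.load(fh).get("Records", [])


def who_changed_what(records: list[dict]) -> Iterator[tuple[str, str, str]]:
    """Yield (caller ARN, service, action) for management events that may change state."""
    for rec in records:
        # Keep only control-plane (management) events; assumes the
        # managementEvent flag is present, as in the record on the next slide.
        if rec.get("managementEvent") is not True:
            continue
        # Skip calls explicitly marked read-only (Describe*, List*, Get* and similar).
        if rec.get("readOnly") is True:
            continue
        # Some callers (e.g. AWS services) may not carry an ARN; fall back to a marker.
        caller = rec.get("userIdentity", {}).get("arn", "unknown")
        yield caller, rec.get("eventSource", ""), rec.get("eventName", "")
```

Usage would look like `list(who_changed_what(load_records("trail-log.json.gz")))`, where the file name is only a placeholder. Skipping only records that are explicitly read-only is a deliberate choice. A record with a missing or unexpected `readOnly` value is still reported instead of being silently dropped, which errs toward visibility for security review.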

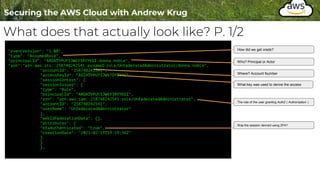

What does that actually look like? (part 2 of 2)

The second half of the CloudTrail record for an S3 `CreateBucket` call:

```json
"eventTime": "2021-02-13T19:20:22Z",
"eventSource": "s3.amazonaws.com",
"eventName": "CreateBucket",
"awsRegion": "us-west-2",
"sourceIPAddress": "68.185.27.210",
"userAgent": "[aws-cli/2.1.21 Python/3.9.1 Darwin/20.3.0 source/x86_64 prompt/off command/s3.mb]",
"requestParameters": {
    "CreateBucketConfiguration": {
        "LocationConstraint": "us-west-2",
        "xmlns": "http://s3.amazonaws.com/doc/2006-03-01/"
    },
    "bucketName": "examplebucket1.securingthecloud.local",
    "Host": "s3.us-west-2.amazonaws.com"
},
"responseElements": null,
"additionalEventData": {
    "SignatureVersion": "SigV4",
    "CipherSuite": "ECDHE-RSA-AES128-GCM-SHA256",
    "bytesTransferredIn": 153,
    "AuthenticationMethod": "AuthHeader",
    "x-amz-id-2": "p/jM0+HCYyqM8MBzIHAvzwtW1MFka3diEfclB0Xgi7femq+MiWYmfc9ndoe4GfKiL9Ydzcr75hc=",
    "bytesTransferredOut": 0
},
"requestID": "A57328BD8E3112D9",
"eventID": "875597d7-998a-4a16-ae48-b2d41dc59e85",
"readOnly": false,
"eventType": "AwsApiCall",
"managementEvent": true,
"eventCategory": "Management",
"recipientAccountId": "258748242541"
```

The fields annotated on the slide are:

- `eventSource`: the source (the AWS service that received the call)
- `eventName`: the verb (aka the action)
- `awsRegion`: the region
- `sourceIPAddress`: the caller's IP address
- `userAgent`: the user agent (what tool made the call)

The rest of the data is specific to this request or to this particular service; see `requestParameters`.

![What does that actually look like? P. 2/2](https://image.slidesharecdn.com/004-logginginthecloud-hide01-220809160823-cc919de0/85/004-Logging-in-the-Cloud-hide01-ir-pptx-12-320.jpg)
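The following minimal Python sketch pulls the annotated fields out of a record, along with `requestParameters`, which carries the request-specific data. It assumes the record has already been parsed into a dict. `RAW_EVENT` is a trimmed, hand-copied subset of the `CreateBucket` event from the slide, and `summarize_event` is a hypothetical helper name, not part of any AWS SDK.

```python
import json

# Trimmed subset of the slide's CreateBucket record (illustrative copy).
RAW_EVENT = """{
  "eventTime": "2021-02-13T19:20:22Z",
  "eventSource": "s3.amazonaws.com",
  "eventName": "CreateBucket",
  "awsRegion": "us-west-2",
  "sourceIPAddress": "68.185.27.210",
  "userAgent": "[aws-cli/2.1.21 Python/3.9.1 Darwin/20.3.0 source/x86_64 prompt/off command/s3.mb]",
  "requestParameters": {"bucketName": "examplebucket1.securingthecloud.local"}
}"""

# Fields annotated on the slide: source, verb (action), region, caller IP, user agent.
ANNOTATED_FIELDS = ("eventSource", "eventName", "awsRegion", "sourceIPAddress", "userAgent")


def summarize_event(event: dict) -> dict:
    """Return the common CloudTrail fields plus the request-specific parameters."""
    summary = {field: event.get(field) for field in ANNOTATED_FIELDS}
    # Everything else varies by service; requestParameters holds the call's inputs
    # (it can be null in a record, so normalize to an empty dict).
    summary["requestParameters"] = event.get("requestParameters") or {}
    return summary


if __name__ == "__main__":
    s = summarize_event(json.loads(RAW_EVENT))
    print(f'{s["eventName"]} on {s["eventSource"]} in {s["awsRegion"]} from {s["sourceIPAddress"]}')
```

Run as a script, it prints `CreateBucket on s3.amazonaws.com in us-west-2 from 68.185.27.210`. The five common fields have the same meaning for every service. What goes inside `requestParameters` differs from one API call to the next, so it is kept as an opaque dict here.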