Open Source For You, July 2017

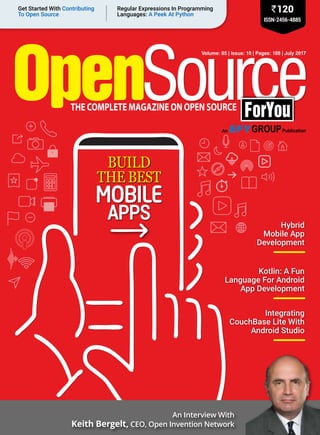

- 1. Volume: 05 | Issue: 10 | Pages: 108 | July 2017 | ISSN-2456-4885 | ₹120 | Regular Expressions in Programming Languages: A Peek at Python | Get Started with Contributing to Open Source | Hybrid Mobile App Development | Kotlin: A Fun Language for Android App Development | Integrating Couchbase Lite with Android Studio | Build the Best Mobile Apps | An Interview with Keith Bergelt, CEO, Open Invention Network


- 4.
  - Admin
    - 20 The DevOps Series: Deploying Graphite Using Ansible
    - 23 A Guide to Running OpenStack on AWS
  - Developers
    - 30 Using MongoDB to Improve the IT Performance of an Enterprise
    - 40 Building REST APIs with the LoopBack Framework
    - 44 Cross-Platform Mobile App Development Using the Meteor Framework
    - 50 Kotlin: A Fun Language for Android App Development
    - 56 Software Automation Testing Using Sikuli
    - 59 Developing HTML5 and Hybrid Apps for Mobile Devices
    - 62 Using the Onsen UI with Monaca IDE for Hybrid Mobile App Development
    - 65 Why We Should Integrate Couchbase Lite with Android Studio
    - 67 Eight Easy-to-Use Open Source Hybrid Mobile Application Development Platforms
    - 76 Regular Expressions in Programming Languages: A Peek at Python
    - 81 An Overview of Convertigo
  - For U & Me
    - 83 Get Started with Contributing to Open Source
    - 86 Browser Fingerprinting: How EFF Tools Can Protect You
    - 89 Mobile App Development in the Fast-changing Mobile Phone Industry
  - Regular Features
    - 06 FOSSBytes
    - 16 New Products
    - 104 Tips & Tricks
  - 71, 34: The Best Open Source Mobile App Development Frameworks; The AWK Programming Language: A Tool for Data Extraction

  ISSN-2456-4885. 4 | July 2017 | Open Source For You | www.OpenSourceForU.com

- 5. DVD of the Month (July 2017): Live GNOME, 64-bit. Here’s the latest and the most stable version of Debian. Recommended system requirements: P4, 1GB RAM, DVD-ROM drive. CD Team e-mail: cdteam@efy.in. In case this DVD does not work properly, write to us at support@efy.in for a free replacement.

  • Debian 9 Stretch GNOME Live 106 Keith Bergelt, CEO of Open Invention Network 26: “At the heart of the Open Invention Network is its powerful cross-licence”
  - 100 NetLogo: An Open Source Platform for Studying Emergent Behaviour
  - 94 An Introduction to Deep (Machine) Learning
  - 102 Caricaturing with Inkscape
  - Columns
    - 13 CodeSport
    - 18 Exploring Software: Connecting LibreOffice Calc with Base and MariaDB/MySQL

  Editor: Rahul Chopra

  Editorial, Subscriptions & Advertising: Delhi (HQ), D-87/1, Okhla Industrial Area, Phase I, New Delhi 110020. Ph: (011) 26810602, 26810603; Fax: 26817563. E-mail: info@efy.in

  Missing issues: E-mail: support@efy.in

  Back issues: Kits ‘n’ Spares, New Delhi 110020. Ph: (011) 26371661, 26371662. E-mail: info@kitsnspares.com

  Newsstand distribution: Ph: 011-40596600. E-mail: efycirc@efy.in

  Advertisements:
  - Mumbai: Ph: (022) 24950047, 24928520. E-mail: efymum@efy.in
  - Bengaluru: Ph: (080) 25260394, 25260023. E-mail: efyblr@efy.in
  - Pune: Ph: 08800295610/09870682995. E-mail: efypune@efy.in
  - Gujarat: Ph: (079) 61344948. E-mail: efyahd@efy.in
  - China: Power Pioneer Group Inc. Ph: (86755) 83729797, (86) 13923802595. E-mail: powerpioneer@efy.in
  - Japan: Tandem Inc. Ph: 81-3-3541-4166. E-mail: tandem@efy.in
  - Singapore: Publicitas Singapore Pte Ltd. Ph: +65-68362272. E-mail: publicitas@efy.in
  - Taiwan: J.K. Media. Ph: 886-2-87726780 ext. 10. E-mail: jkmedia@efy.in
  - United States: E & Tech Media. Ph: +18605366677. E-mail: veroniquelamarque@gmail.com

  Printed, published and owned by Ramesh Chopra. Printed at Tara Art Printers Pvt Ltd, A-46,47, Sec-5, Noida, on 28th of the previous month, and published from D-87/1, Okhla Industrial Area, Phase I, New Delhi 110020. Copyright © 2017. All articles in this issue, except for interviews, verbatim quotes, or unless otherwise explicitly mentioned, will be released under Creative Commons Attribution-NonCommercial 3.0 Unported License a month after the date of publication. Refer to http://creativecommons.org/licenses/by-nc/3.0/ for a copy of the licence. Although every effort is made to ensure accuracy, no responsibility whatsoever is taken for any loss due to publishing errors. Articles that cannot be used are returned to the authors if accompanied by a self-addressed and sufficiently stamped envelope. But no responsibility is taken for any loss or delay in returning the material. Disputes, if any, will be settled in a New Delhi court only.

  Subscription rates:

  | Year | Newsstand price (₹) | You pay (₹) | Overseas |
  |---|---|---|---|
  | Five | 7200 | 4320 | - |
  | Three | 4320 | 3030 | - |
  | One | 1440 | 1150 | US$ 120 |

  Kindly add ₹50/- for outside Delhi cheques. Please send payments only in favour of EFY Enterprises Pvt Ltd. Non-receipt of copies may be reported to support@efy.in; do mention your subscription number.

- 6. FOSSBytes. Compiled by: Jagmeet Singh

  NEC establishes centre for Big Data and analytics in India

  Japanese IT giant NEC Corporation has announced the launch of its Center of Excellence (CoE) in India to promote the adoption of Big Data and analytics solutions. Based in Noida, the new centre is the company’s first and is set to simplify digital transformation for clients across sectors such as telecom, retail, banking, financial services and government. NEC plans to invest US$ 10 million in the new CoE over the next three years, and expects it to generate revenue of US$ 100 million in the same period. While the Tokyo-headquartered company is initially targeting markets including Japan, India, Singapore, the Philippines and Hong Kong, the centre will expand its services throughout APAC and other regions.

  “The new CoE is an important step towards utilising Big Data analytics and NEC’s Data Platform for Hadoop to provide benefits for government bodies and enterprises in India and across the world,” said Tomoyasu Nishimura, senior vice president, NEC Corporation. NEC, in partnership with NEC India and NEC Technologies India (NTI), is leveraging Hadoop for its various Big Data and analytics developments. The company has implemented its hardware in partnership with Hortonworks and Red Hat.

  “The latest CoE is designed as a single platform that can be used to cater to the needs of processing structured and unstructured data,” Piyush Sinha, general manager for corporate planning and business management, NEC India, told Open Source For You. Sinha leads a team of around 20 people who are starting operations at the new centre. NEC plans to build a 100-member professional team to address requirements in the near future. There are also plans to hire fresh talent to enhance Big Data developments.

  The Data Platform for Hadoop (DPH) that NEC is using at the centre features a business intelligence layer in the background alongside the Hadoop deployment, relieving customers of the chore of searching for the individual building blocks of Big Data analytics. This combination also lets them generate value from enormous pools of data. NEC already has a record of contributing to national projects in the country. The company enabled the mass-level adoption of Aadhaar in partnership with the Unique Identification Authority of India (UIDAI) by providing a large-scale biometrics identification system. It is also supporting the Delhi-Mumbai Industrial Corridor (DMIC), which tracks nearly 70 per cent of container transactions in India.

  GNOME 3.26 desktop to offer enhanced Disk Utility

  While GNOME maintainers are gearing up with some major additions to the GNOME 3.26 desktop environment, the latest GNOME Disk Utility gives us a glimpse of the features that will enhance your experience. GNOME 3.25.2 was published recently, and came with updated components as well as apps. That same release included the first development release of GNOME Disk Utility 3.26. The new GNOME Disk Utility includes multiple mounting options along with an insensitive (disabled) auto-clear switch for unused loop devices. Also, there are clearer options for encryption. The GNOME team has additionally made certain visual changes. The new version allows you to create an empty disk image from the App Menu. Similarly, the behaviour of the file system dialogue has been improved, and the UUID (Universally Unique Identifier) of selected volumes is displayed by default. The update enables disk images to use MIME types. The disk mounting functionality has been improved, and now includes auto-clear handling for the lock and unmount buttons. Besides, the latest version prompts users when closing the app. GNOME Disk Utility 3.25.2 is presently available for public testing. It will be released along with GNOME 3.26 later this year. Those wanting to try the preview version can download and compile GNOME Disk Utility 3.25.2 for their GNU/Linux distro.

- 7. Google Photos gets AI integration to archive users’ pictures

  Google Photos has been enhanced with an AI-powered upgrade that suggests which trivial pictures from your collection should be archived. The latest development is a part of the Google Assistant support. The option to archive unwanted photos from your timeline is a welcome way to keep your albums clutter-free. But what is more interesting is the artificial intelligence (AI) that Google has used to deliver this feature. You might have a mixed collection of pictures that includes various memorable moments alongside some images that you no longer need. These could be photographs of documents, billboards or posters. The new AI integration within Google Photos detects the difference, while the Assistant tab helps you select the not-so-important ones for archiving. “You can review the suggestions and remove any photos you don’t want archived, and tap to confirm when you are done,” the Google Photos team wrote in a Google+ post, explaining the latest feature. Once archived, those unimportant pictures can be viewed in the ‘Archive’ tab. Google has started rolling out the new feature to the Android, iOS and desktop versions of the Photos app. Going forward, the machine learning input powering the archiving feature is likely to be made available to developers to test its effectiveness across new areas.

  Krita painting app enhances experience for GIMP files

  Krita, the open source digital painting app, has announced v3.1.4. The new version is especially designed to deliver an enhanced experience for GIMP files. Krita 3.1.3 was released in early May. And now, it’s already time for the new release. The new minor update has improved the loading time of GIMP 2.9 files.
  Such files used to crash the app when the user cycled quickly through layers that contained a colour tag. It is worth noting that the GIMP 2.9 file format is not officially supported by Krita. Nevertheless, its development team has fixed the crashing issue in v3.1.4. With the new version of Krita, you can no longer hide the template selector in the ‘New Image’ dialogue box. The menu entries of the macro recorder plugin are still visible, though you can expect them to be removed in subsequent updates. Krita 3.1.4 also has a patch for the crash that occurs while attempting to play an animation with the OpenGL option active. Among the other minor bug fixes, Krita has fixed a crash that could occur when closing the last view on the last document. Krita 3.1.4 also improves the rendering of animation frames. It is a stability release, and the update is recommended for all users. The latest version is available for Linux, Windows and macOS on the official website.

  Happiest Minds enters open source world by acquiring OSSCube

  Happiest Minds Technologies, a Bengaluru-headquartered emerging IT company, has acquired US-based digital transformation entity OSSCube. The acquisition is aimed at helping the Indian company enter the market for enterprise open source solutions and expand its presence in the US. With the latest deal, Happiest Minds is set to widen its portfolio of digital offerings and strengthen its business in North America, the market that OSSCube is focused on. The team of 240 from OSSCube will now be a part of Happiest Minds. The acquisition will bring the total workforce of Happiest Minds to 2,400 and its active customer base to 170. “We are delighted to welcome the OSSCube team into the Happiest Minds family,” said Ashok Soota, executive chairman, Happiest Minds, in a joint statement.
Founded in 2008 by Indian entrepreneurs Lavanya Rastogi and Vineet Agarwal, OSSCube is one of the leading open source companies around the globe. It is an exclusive enterprise partner for the open source platform Pimcore in North America. Apart from the open source developments, the company recently expanded the verticals it operates in to include the cloud, Big Data, e-commerce and enterprise mobility. “Coming together with Happiest Minds offers us the scale, global reach, complementary skills and expertise, enabling us to offer ever more innovative solutions to our global customers. We believe that the great cultural fit,

- 8. marketplace synergies, and critical mass of consulting and IP-led offerings will unlock tremendous value for all our stakeholders,” said Lavanya Rastogi, co-founder and CEO, OSSCube. Rastogi will join Happiest Minds’ DTES business as the CEO. The Houston, Texas-based OSSCube will operate as a subsidiary of Happiest Minds. This is not the first acquisition by Happiest Minds. The Indian company has already acquired IoT services startup Cupola Technology this year. With all these developments, Happiest Minds is moving forward to become the fastest Indian IT services company to reach a turnover of US$ 100 million. The company also plans to go public within the next three years. Happiest Minds presently operates in Australia, the Middle East, USA, UK and the Netherlands. It offers domain-centric solutions applying skills, IP and functional expertise in IT services, product engineering, infrastructure management and security using technologies such as Big Data analytics, Internet of Things, mobility, the cloud, and unified communications.

  Microsoft’s Cognitive Toolkit 2.0 debuts with open source neural library Keras

  Microsoft’s deep learning and artificial intelligence (AI) solution, Cognitive Toolkit, has reached version 2.0. The new update is designed to handle production-grade and enterprise-grade deep learning workloads. To support the development of deep learning applications, Cognitive Toolkit 2.0 supports Keras, an open source neural network library. The integration gives developers a faster and more reliable deep learning platform without any code changes. Chris Basoglu, a partner engineering manager at Microsoft who has played a key role in developing the Cognitive Toolkit builds, has tweaked the existing tools to make them accessible to enthusiasts with basic programming skills.
  There are also customisations available for highly skilled developers who want to accelerate training of their own deep neural networks with large data sets and across multiple servers. Cognitive Toolkit 2.0 supports the latest versions of the NVIDIA Deep Learning SDK and advanced GPUs like NVIDIA Volta. In addition to the Keras support, the latest Cognitive Toolkit comes with model evaluation using Java bindings. There are also a few other tools that compress trained models in real time. The new version is capable of compressing models even on resource-constrained devices such as smartphones and embedded hardware. Microsoft has designed the AI solution after observing the problems faced by organisations ranging from smart startups to large tech companies, government agencies and NGOs. The team, led by Basoglu, aims to make the major Cognitive Toolkit features accessible to a wider audience. Developers working with the previous release are advised to upgrade to Microsoft Cognitive Toolkit 2.0. You can find the code for the latest version in its GitHub repository.

  Raspberry Pi 3 can now monitor vital health signs

  Bengaluru-based hardware startup ProtoCentral has launched a multi-parameter patient monitoring add-on for Raspberry Pi 3. Called HealthyPi, the new development offers you a completely open source solution for monitoring vital health signs. The HealthyPi board comes with an Atmel ATSAMD21 Cortex-M0 MCU that is compatible with Arduino Zero, and can monitor health signs like ECG and respiration. Additionally, there is a pulse oximetry front-end along with an LED driver and 22-bit ADC. The board includes a three-electrode cable that has a button and stereo connector. Through the available 40-pin header connector, HealthyPi can connect with your Raspberry Pi 3. There is also a USB CDC device interface and a UART connector that enables connectivity with an external blood pressure module. It is worth noting here that the HealthyPi is not yet a certified medical device.

  Tor 7.0 debuts with sandbox feature

  Tor, the open source browser that is popular for maintaining user anonymity, has received an update to version 7.0, which includes a sandbox feature. The new version is designed for privacy-minded folks, offering them a more secure platform to surf the Web. The new sandbox integration hides your real IP and MAC address and even your files. The feature limits the information that the Tor browser learns about your computer. Moreover, it makes it harder for hackers leveraging Firefox exploits to learn about user identities. “We know there are people who try to de-anonymise Tor users by exploiting Firefox. Having the Tor browser run in a sandbox makes their life a lot harder,” Tor developer Yawning Angel wrote in a Q&A session. Angel has been experimenting with the sandbox feature since October 2016. The feature was in the unstable and testing phase back then. However, the new release for Linux and Mac is a stable version to protect user identities. In addition to the sandbox feature, the latest Tor version comes with Firefox 52 ESR. This new development includes tracking and fingerprinting resistance improvements such as the isolation of cookies, view-source requests and the permissions API to the first-party URL bar domain. It also disables or patches WebGL2 and the WebAudio, Social, SpeechSynthesis and Touch APIs. With the Firefox 52 ESR addition, the latest Tor build will not work on non-SSE2-capable Windows hardware. Users also need Mac OS X 10.9 or higher on Apple hardware, and need to use e10s on Linux and macOS systems to get started with the sandboxing feature.

- 10. Microsoft Azure gets an open source tool to streamline Kubernetes developments

  Microsoft has announced the first open source developer tool for Azure. Called Draft, the new tool is designed to help developers create cloud-native applications on Kubernetes. Draft lets developers get started with container-based applications without any knowledge of Kubernetes or Docker. There is no need to even install either of the two container-centric solutions to begin with the latest tool. “Draft targets the ‘inner loop’ of a developer’s workflow – while developers write code and iterate, but before they commit changes to version control,” said Gabe Monroy, the lead project manager for containers on Microsoft Azure, in a blog post. Draft uses a simple detection script that helps in identifying the application language. Thereafter, it writes out a simple Dockerfile and a Kubernetes Helm chart into the source tree. Developers can also customise Draft using configurable packs. The Azure team has specifically built Draft to support languages that include Python, Java, Node.js, Ruby, Go and PHP. The tool can be used for streamlining any application or service. Besides, it is well-optimised for a remote Kubernetes cluster. Monroy, who joined Microsoft as a part of the recent Deis acquisition, highlighted that hacking an application using Draft is as simple as typing ‘draft up’ on the screen. The command deploys the build in a development environment using the Helm chart. The overall packaging of the tool is similar to Platform-as-a-Service (PaaS) systems such as Cloud Foundry and Deis. However, it is not identical to build-oriented PaaS due to its ability to construct continuous integration (CI) pipelines. You can access Draft and its entire code as well as documentation from GitHub. To get started, you need to spin up a Kubernetes cluster on ACS (Azure Container Service).

  Toyota starts deploying Automotive Grade Linux

  While Apple’s CarPlay and Google’s Android Auto are yet to gain traction, Toyota has given a big push to Automotive Grade Linux (AGL) and launched the 2018 Toyota Camry as its first vehicle with the open source in-car solution. The Japanese company is set to launch an AGL-based infotainment platform across its entire new portfolio of cars later this year. “The flexibility of the AGL platform allows us to quickly roll out Toyota’s infotainment system across our vehicle line-up, providing customers with greater connectivity and new functionalities at a pace that is more consistent with consumer technology,” said Keiji Yamamoto, executive vice president of the connected company division, Toyota Motor Corporation. Hosted by the Linux Foundation, AGL presently has more than 100 members who are developing a common platform to serve as the de facto industry standard. The Linux-based operating system comes with an application framework that allows automakers and suppliers to customise the experience with their specific features, services and branding. According to Automotive Grade Linux executive director, Dan Cauchy, among all the AGL members, Toyota is one of the more active contributors. “Toyota has been a driving force behind the development of the AGL infotainment platform, and we are excited to see the traction that it is gaining across the industry,” said Cauchy. Around 70 per cent of the AGL-based platform on the latest Toyota Camry sedan is said to have generic coding, while the remaining 30 per cent includes customised inputs. There are features like multimedia access and navigation. In the future, the Linux Foundation and AGL partners are planning to deploy the open source platform for building even unmanned vehicles. This model could make competition tougher for emerging players including Google and Tesla, which are both currently testing their self-driving cars. In addition to automotive companies like Toyota and Daimler, AGL is supported by tech leaders such as Intel, Renesas, Texas Instruments and Qualcomm. The open source community built AGL Unified Code Base 3.0 in December last year to kickstart developments towards the next-generation auto space.

  Google releases open source platform Spinnaker 1.0

  Google has released v1.0 of Spinnaker, an open source, multi-cloud continuous delivery platform. The search giant started working on Spinnaker back in November 2015 along with Netflix. The tool was aimed at offering large companies fast, secure and repeatable production deployments. It has so far been used by organisations like Microsoft, Netflix, Waze, Target and Oracle. Spinnaker 1.0 comes with a rich UI dashboard and offers users the ability to install the tool on premise, on local infrastructure and in the cloud. You can run it from a virtual machine as well as using Kubernetes. The platform can be integrated with workflows like Git, Docker registries, Travis CI and pipelines. It can be used for best-practice deployment strategies as well. “With Spinnaker, you simply choose the deployment strategy you want to use for each environment, e.g., red/black for staging, rolling red/black for production, and it orchestrates the dozens of steps necessary under-the-hood,” said Christopher Sanson, the product manager for Google Cloud Platform. Google has optimised Spinnaker 1.0 for Google Compute Engine, Container Engine, App Engine, Amazon Web Services, Kubernetes, OpenStack and Microsoft Azure. The company plans to add support for Oracle Bare Metal and DC/OS in upcoming releases.

- 12. Perl 5.26.0 is now out with tons of improvements

  The Perl community has received version 26 of Perl 5. This new release is a collaborative effort from 86 authors. Perl 5.26.0 contains approximately 360,000 lines of changes across 2,600 files. Most of the changes in the latest version have originated in the CPAN (Comprehensive Perl Archive Network) modules.
  According to the official changelog, new variables such as @{^CAPTURE}, %{^CAPTURE} and %{^CAPTURE_ALL} are included in the Perl 5.26.0 release. “Excluding auto-generated files, documentation and release tools, there were approximately 230,000 lines of changes to 1,800 .pm, .t, .c and .h files,” Perl developer Sawyer X wrote in an email announcement. Among the improvements, here-docs can now be indented, with the leading whitespace stripped. The POSIX::tmpnam() function has been removed in favour of File::Temp. Moreover, lexical subroutines, which debuted in Perl 5.18, are no longer experimental.

  Google brings reCAPTCHA API to Android

  Ten years after beginning to offer protection to Web users, Google has now taken a step towards protecting mobile devices and brought its renowned reCAPTCHA API to Android. The move is meant to protect Android users from spam and abuse, and also to counter moves by its competitors in the smartphone space, which include Apple and Microsoft. “With this API, reCAPTCHA can better tell human and bots apart to provide a streamlined user experience on the mobile,” said Wei Liu, product manager for reCAPTCHA, Google, in a blog post. Available as a part of Google Play Services, the reCAPTCHA Android API comes along with Google SafetyNet, which provides services such as device attestation and safe browsing to protect mobile apps. The combination enables developers to perform both device and user attestations using the same API. Carnegie Mellon alumni Luis von Ahn, Ben Maurer, Colin McMillen, David Abraham and Manuel Blum built reCAPTCHA as a CAPTCHA-like system back in 2007. It was acquired by Google two years after its inception, in September 2009. The solution has benefited more than a billion users so far. Google is not limiting reCAPTCHA to Android; it also plans to bring the API to Apple’s iOS. It would be interesting to see how it is adopted by iOS developers.
Meanwhile, you can use the reCAPTCHA Android Library under the SafetyNet APIs to integrate the secured solution into your Android apps. For more news, visit www.opensourceforu.com

- 13. CODE SPORT Sandya Mannarswamy www.OpenSourceForU.com | OPEN SOURCE FOR YOU | JULY 2017 | 13 A s requested by a few of our readers, we decided to feature practice questions for computer science college students, for their upcoming on-campus and off-campus interviews. One small piece of advice to those preparing for interviews: Make sure that you are very clear about the fundamental concepts. Interviewers will not hold it against you if you don’t know a specific algorithm. But not being able to answer some of the key fundamental concepts in different areas of computer science would definitely be an obstacle to clearing interviews. Also, make sure that you have updated your GitHub and SourceForge pages on which you might have shared some of your coding projects. Contributing to open source and having interesting, even if small, coding projects online in your GitHub pages would definitely be a big plus. In this month’s column, we will discuss a medley of interview questions covering a wide range of topics such as algorithms, operating systems, computer architecture, machine learning and data science. 1. Given the advances in memory-driven computing, let us assume that you have been given an enterprise Class 16 core server with a huge 2TB RAM. You have been asked to design an intrusion detection system which can analyse the network logs of different systems and identify potential intrusion candidates. Note that you have to monitor close to 256 systems and analyse their network logs for potential issues. Let us also assume that you have been told which standard state-of-art approach (for intrusion detection) you would need to implement. Now the question is: Would you design the intrusion detection application as a scale up or a scale out application? Can you justify your choice? Would your choice change if, instead of the enterprise Class 16 server with 2TB RAM, you are given multiple standard workstations with 32GB RAM. 2. 
You have been asked to build a supervised binary classification system for detecting spam emails. Basically, given an email, your system should be able to classify it as spam or non-spam. You are given a training data set containing 400,000 rows and 10,000 columns (features). The 10,000 features include both numerical variables and categorical variables. Now, you need to create a model using this training data set. Consider the two options given in Question 1 above, namely a server with 2TB RAM or a simple workstation with 32GB RAM. For each of these two options, can you explain how your approach would change in training a model? (Clue: The workstation is memory constrained, hence you will need to figure out ways to reduce your data set. Think about dimensionality reduction, sampling, etc.) Now, assume that you are able to successfully create two different models — one for the enterprise server and another for the workstation. Which of these two models would do better on a held-out test data set? Is it always the case that the model you built for the enterprise class server with huge RAM would have a much lower test error than the model you built for the workstation? Explain the rationale behind your answer. Here is a tougher bonus question: For this specific computer system which has a huge physical memory, do you really need paging mechanisms in the operating system? If you are told that all your computer systems would have such huge physical memory, would you be able to completely eliminate the virtual memory In this month’s column, we discuss some computer science interview questions.

- 14. CodeSport Guest Column 14 | JULY 2017 | OPEN SOURCE FOR YOU | www.OpenSourceForU.com By: Sandya Mannarswamy The author is an expert in systems software and is currently working as a research scientist at Conduent Labs India (formerly, Xerox India Research Centre). Her interests include compilers, programming languages, file systems and natural language processing. If you are preparing for systems software interviews, you may find it useful to visit Sandya’s LinkedIn group Computer Science Interview Training India at http://www. linkedin.com/groups?home=HYPERLINK “http://www.linkedin. com/groups?home=&gid=2339182”&HYPERLINK “http://www. linkedin.com/groups?home=&gid=2339182”gid=2339182 (VM) sub-system from the operating system? If you cannot completely eliminate the VM sub-system, what are the changes in paging algorithms (both data structures and page replacement algorithms) that you would like to implement so that the cost of the virtual memory sub- system is minimised for this particular server? 3. You were asked to create a binary classification system using the supervised learning approach, to analyse X-ray images when detecting lung cancer. You have implemented two different approaches and would like to evaluate both to see which performs better. You find that Approach 1 has an accuracy of 97 per cent and Approach 2 has an accuracy of 98 per cent. Given that, would you choose Approach 2 over Approach 1? Would accuracy alone be a sufficient criterion in your selection? (Clue: Note that the cancer image data set is typically imbalanced – most of the images are non-cancerous and only a few would be cancerous.) 4. Now consider Question 3 again. Instead of getting accuracies of 98 per cent and 97, both your approaches have a pretty low accuracy of 59 per cent and 65 per cent. 
While you are debating whether to implement a totally different approach, your friend suggests that you use an ensemble classifier wherein you can combine the two classifiers you have already built and generate a prediction. Would the combined ensemble classifier be better in performance than either of the classifiers? If yes, explain why. If not, explain why it may perform poorly compared to either of the two classifiers. When is an ensemble classifier a good choice to try out when individual classifiers are not able to reach the desired accuracy?

5. You have built a classification model for email spam such that you are able to reach a training error which is very close to zero. Your classification model was a deep neural network with five hidden layers. However, you find that the validation error is not small but around 25. What is the problem with your model?

6. Regularisation techniques are widely used to avoid overfitting of the model parameters to training data so that the constructed network can still generalise well for unseen test data. Ridge regression and Lasso regression are two of the popular regularisation techniques. When would you choose to use ridge regression and when would you use Lasso? Specifically, you are given a data set, in which you know that out of 100 features, only 10 to 15 of them are significant for prediction, based on your domain knowledge. In that case, would you choose ridge regression over Lasso?

7. Now let us move away from machine learning and data science to computer architecture. You are given a computer system with 16 cores, 64GB RAM, 512KB per core L1 cache (both instruction and data caches are separate and each is of size 512KB), 2MB per core L2 cache, and 1GB L3 cache which is shared among all cores. L1 cache is direct-mapped whereas L2 and L3 caches are four-way set associative. Now you are told to run multiple MySQL server instances on this machine, so that you can use this machine as a common back-end system for multiple Web applications. Can you characterise the different types of cache misses that would be encountered by the MySQL instances?

8. Now consider Question 7 above, where you are not given the cache sizes of L1, L2 and L3, but have been asked to figure out the approximate sizes of L1, L2 and L3 caches. You are given the average cache access times (hit and miss penalty for each access) for L1, L2 and L3 caches. Other system parameters are the same. Can you write a small code snippet which can determine the approximate sizes of L1, L2 and L3 caches? Now, is it possible to figure out the cache sizes if you are not aware of the average cache access times?

9. Consider that you have written two different versions of a multi-threaded application. One version extensively uses linked lists and the second version uses mainly arrays. You know that the memory accesses performed by your application do not exhibit good locality due to their inherent nature. You also know that your memory accesses are around 75 per cent reads. Now you have the choice between two computer systems: one with an L3 cache size of 24MB shared across all eight cores, and another with an L3 cache size of 2MB independent for each of the eight cores. Are there any specific reasons why you would choose System 1 rather than System 2 for each of your application versions?

10. Can you explain the stochastic gradient descent algorithm? When would you prefer to use the coordinate gradient descent algorithm instead of the regular stochastic gradient algorithm? Here’s a related question. You are told that the cost function you are trying to optimise is non-convex. Would you still be able to use the coordinate descent algorithm?
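Question 3 hinges on why accuracy alone can mislead on an imbalanced data set. The sketch below illustrates the point with made-up confusion-matrix counts (a hypothetical test set of 1,000 X-rays, 30 of them cancerous); the numbers are assumptions chosen to reproduce the 97 per cent and 98 per cent accuracies in the question, not results from any real classifier.

```python
def metrics(tp, fp, fn, tn):
    """Return (accuracy, precision, recall) from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Hypothetical counts: 30 cancerous and 970 non-cancerous images in each case.
approach_1 = metrics(tp=27, fp=27, fn=3, tn=943)   # finds 27 of the 30 cancers
approach_2 = metrics(tp=10, fp=0, fn=20, tn=970)   # finds only 10 of the 30

for name, (acc, prec, rec) in (("Approach 1", approach_1),
                               ("Approach 2", approach_2)):
    print("%s: accuracy=%.2f precision=%.2f recall=%.2f" % (name, acc, prec, rec))
```

With these assumed counts, Approach 2 has the higher accuracy but misses two-thirds of the cancerous images, which is why recall (and precision) on the rare class should be examined alongside accuracy.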
If you have any favourite programming questions/software topics that you would like to discuss on this forum, please send them to me, along with your solutions and feedback, at sandyasm_AT_yahoo_DOT_com. Till we meet again next month, wishing all our readers a wonderful and productive month ahead!

- 16. New Products

Headphones with active noise cancellation from Sennheiser

German audio company Sennheiser has unveiled a pair of UC-certified headsets that employ adaptive active noise cancellation (AANC), called the MB 660 UC and MB 660 UC MS. AANC muffles the background sounds to a certain extent, offering a more pleasant user experience. The headphones offer stereo audio and CD quality streaming with aptX. They come with Sennheiser’s unique SpeakFocus technology, delivering crystal clear sound even in noisy offices or play areas. The headphones are designed with a touchpad to access controls like answer/end call, reject, hold, mute, redial, change track, volume, etc. The pillow soft leather ear pads and ear shaped cups are designed with premium quality material to ensure maximum comfort and style. The MB 660 UC series provides up to 30 hours of battery back-up. The two variants – MB 660 UC and MB 660 UC MS – are available via any authorised Sennheiser distribution channel.

Price: ₹ 41,990 for MB 660 UC and ₹ 60,094 for MB 660 UC MS

Address: Sennheiser Electronics India Pvt Ltd, 104, A, B, C First Floor, Time Tower, M.G. Road, Sector 28, Gurugram, Haryana – 122002

Wireless Bluetooth headset for sports enthusiasts

Sony has launched its wireless Bluetooth headset, the NW-WS623, a model specially designed for sports enthusiasts. Weighing just 32 grams, the NW-WS623 has a battery life of up to 12 hours once fully charged. As per company claims, the device comes with the quick charge capability, charging the device for one hour of playback in just three minutes. Enabled with NFC and Bluetooth, the device allows users to connect their headset to their smartphones or any other device. The NW-WS623 comes with 4GB built-in storage. It has IP65/IP68 certification for water and dust resistance, making it suitable for use in outdoor sports activities. It can also endure extreme temperatures from -5 degrees to 45 degrees Celsius, allowing it to be used while hiking or climbing at high and very cold altitudes. The device features an ergonomic, slim and light design, and comes with the ambient sound mode, enabling users to hear external sounds without having to take off the headset. The Sony NW-WS623 is available in black colour via Sony Centres and other electronics retail stores.

Price: ₹ 8,990

Address: Sony India Pvt Ltd, A-18, Mathura Road, Delhi – 110044; Ph: 91-11-66006600

Connect between the digital and analogue worlds with Electropen 4

Portable devices manufacturer, Portronics, has launched its latest digital pen, the Electropen 4, which allows users to write notes or make sketches on paper and then convert these into a document or JPEG image on their smartphone, laptop or computer. The digital pen has an in-built Flash memory to store A4 sized pages and later sync or transfer these to the smartphone or tablet, eliminating the need to carry a mobile device or laptop to meetings for taking notes. In addition, the Bluetooth digital pen uses the microphone of the smartphone via an app that allows users to record an audio clip while they take notes. The user can also erase or edit the notes taken with or sketches made using Electropen 4. The digital pen is compatible with Bluetooth 4.1 and is powered by a Li-polymer rechargeable battery. The Portronics Electropen 4 is available in black colour via online and retail stores.

Price: ₹ 6,999

Address: Portronics Digital Pvt Ltd, 4E/14 Azad Bhavan, Jhandewalan, New Delhi – 110055; Ph: 91-9555245245

- 17. Samsung launches its new Tizen smartphone

Samsung has recently unveiled its latest smartphone, the Samsung Z4, with the Tizen OS. The device is equipped with an 11.43cm (4.5 inch) screen, allowing users to enjoy games and other multimedia with a superior resolution. Designed with 2.5D glass, the phone has a comfortable grip. It comes with a 1.5GHz quad-core processor, 1GB RAM and 8GB internal memory, which is further expandable up to 128GB via microSD card. It is backed with a 2050mAh battery boosted by Samsung’s unique ‘Ultra Power Saving Mode’. According to company sources, the Tizen OS gives users an effortless mobile experience as it comes with features developed specifically for Indian consumers. The smartphone offers two 5MP cameras – front and rear, both with flash. The 4G VoLTE smartphone is available with a gold back cover and an additional back cover via mobile stores.

Price: ₹ 5,790

Address: Samsung India Electronics Pvt Ltd, 20th to 24th Floors, Two Horizon Centre, Golf Course Road, Sector-43, DLF PH-V, Gurugram, Haryana 122202

Enjoy loud, clear and uniform sound with Bose’s 360 degree speakers

International audio equipment manufacturer, Bose, has launched its latest mid-range Bluetooth speakers – SoundLink Revolve and SoundLink Revolve +. The speakers are designed to offer clear sound in all directions, giving uniform coverage. The aluminium covered cylindrical speakers feature a ¼-20 thread to make it easy to mount them on a tripod, in yards or outdoors. As they are IPX4 rated, they can withstand spills, rain and pool splashes. The SoundLink Revolve measures 15.24cm (6 inch) in height, 8.25cm (3.25 inch) in depth and weighs 680 grams. The SoundLink Revolve + is bigger at 18.41cm (7.25 inch) in height, 8.25cm (3.25 inch) in depth and weighs 907 grams. The Revolve + promises up to 16 hours of playback, while the Revolve offers up to 12 hours of play time. Both the variants can be charged via micro-B USB port and can be paired via NFC. The integrated microphones allow the speakers to be used as a speakerphone or with Siri and Google Assistant. The speakers are available in Triple Black and Lux Gray colours via Bose stores.

Price: ₹ 19,900 for SoundLink Revolve and ₹ 24,500 for SoundLink Revolve +

Address: Bose Corporation India Pvt Ltd, 3rd Floor, Salcon Aurum, Plot No. 4, Jasola District Centre, New Delhi – 110025

The prices, features and specifications are based on information provided to us, or as available on various websites and portals. OSFY cannot vouch for their accuracy.

Compiled by: Aashima Sharma

- 18. Guest Column: Exploring Software

Anil Seth

Connecting LibreOffice Calc with Base and MariaDB/MySQL

This article demonstrates how using LibreOffice Calc with Base and a Structured Query Language (SQL) database like MariaDB or MySQL can help anyone who wishes to keep track of mutual fund investments. The convenience afforded is amazing!

Let’s assume that you have a list of mutual funds that you can easily manage in a spreadsheet. You will need to check the NAV (net asset value) of the funds occasionally to keep track of your assets. You can find out the NAV of all the mutual funds (MFs) you have invested in at one convenient spot: the website of the Association of Mutual Funds, amfiindia.com. As time goes by, your list of funds keeps increasing and at some point you will want to automate the process of keeping track of them. The first step is to download the NAV for all the funds in a single text file; you may use wget to do so:

    $ wget http://www.amfiindia.com/spages/NAVAll.txt

The downloaded file is a text file with fields separated by ‘;’. It can easily be loaded into a spreadsheet and examined.

Creating a usable format

Each mutual fund can be identified by an International Securities Identification Number (ISIN). Hence, ISIN (the second column) can be the key to organise the data. The minimum additional data you need is the NAV for the day (the fifth column) for each security. LibreOffice has a Base module, which I had never used and which works well with any SQL database using ODBC/JDBC drivers. If you are using Linux, you may already have installed MariaDB or MySQL. Python will easily work with MySQL as well. So, you create a MySQL database navdb and a table called navtable:

    CREATE TABLE navtable (isin char(12) primary key, nav real);

The following code is a minimal Python program to load the data into a MySQL table while skipping all the extraneous rows and rows for which no NAV is available.

    import MySQLdb

    # Parameterised query: the driver quotes the values itself
    SQL_INSERT = "INSERT INTO navtable(isin, nav) VALUES (%s, %s)"

    db = MySQLdb.connect("localhost", "anil", "secretpw", "navdb")
    c = db.cursor()
    try:
        # File name as saved by the wget command above
        with open('NAVAll.txt') as navfile:
            for line in navfile:
                cols = line.split(';')
                # Keep rows with a 12-character ISIN and a NAV that is not text
                if len(cols) > 4 and len(cols[1]) == 12 and not cols[4][0].isalpha():
                    c.execute(SQL_INSERT, (cols[1], cols[4]))
        db.commit()
    except Exception:
        db.rollback()
    finally:
        db.close()

Linking the MySQL database and LibreOffice Base

In addition to LibreOffice Base, you will need to install the MySQL JDBC driver. On Fedora, the package you need is mysql-connector-java. LibreOffice needs to be able to find this driver. For Fedora, add the archive /usr/share/java/mysql-connector-java.jar in the class path of Java Options. You can find this option in Tools/Options/LibreOffice/Advanced. The next step is to enable this as a data source in LibreOffice, as follows.

1. Create a new database.
2. Connect to an existing database using MySQL JDBC connection.
3. The database is navdb on localhost.
4. Enter the credentials to access the database.
5. You will be prompted to register the database with LibreOffice.
6. You will be prompted for the password.

You may create additional tables, views, and SQL queries on the database, which may then be accessed through Calc.

Access the data through Calc

Your personal table of your funds will have at least the

- 19. Guest Column: Exploring Software

following columns:

| ISIN | No. of units | NAV | Current value |
| --- | --- | --- | --- |
| INF189A01038 | 500 | To get from navtable | =B2*C2 |

Ideally, Calc should have had a function to select the NAV from the navtable using the ISIN value as the key. Unfortunately, that is not possible. The simplest option appears to be to use a Pivot Table. So, after opening your spreadsheet, take the following steps:

1. Insert the Pivot Table.
2. Select Data Source registered with LibreOffice.
3. Choose NAVDB that you had registered above and the table navdb.navtable.
4. After a while, you will be asked to select the fields for the Pivot Table layout. Select both isin and nav as row fields.

It will create a new sheet with the values. For the sake of simplicity, rename the sheet as ‘Pivot’. Now, go back to your primary sheet and define a lookup function in the NAV column. For example, for the second row, it will be:

    =VLOOKUP(A2, Pivot.A:B, 2)

This function will search for the value in A2 in the first column of the range A:B of the Pivot sheet and return the corresponding value from the second column.

The process of using Base turned out to be a lot more complex than I had anticipated. However, the same complexity also suggests that using a spreadsheet as a front-end to a database is a very attractive option for decision taking, especially knowing how often one suffers trying to understand the unexpected results only to find that a value in some cell had been accidentally modified!

By: Dr Anil Seth. The author has earned the right to do what interests him. You can find him online at http://sethanil.com, http://sethanil.blogspot.com, and reach him via email at anil@sethanil.com.
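The row filter that the article's loader relies on (a 12-character ISIN in the second column and a usable NAV in the fifth) can also be written as a small standalone function, which makes it easy to try out without a database. This is only a sketch: the function name `parse_nav_line` and the sample lines are made up for illustration, and the real NAVAll.txt layout may differ from what is assumed here.

```python
def parse_nav_line(line):
    """Return (isin, nav) for a usable data row, or None for rows to skip."""
    cols = line.strip().split(';')
    if len(cols) <= 4:
        return None                 # blank lines, fund-house names and so on
    isin, nav = cols[1].strip(), cols[4].strip()
    if len(isin) != 12:
        return None                 # header row or a row without a valid ISIN
    try:
        return isin, float(nav)
    except ValueError:
        return None                 # NAV missing or given as text such as 'N.A.'


# Made-up sample lines in the assumed layout (fields separated by ';')
samples = [
    "Scheme Code;ISIN Div Payout/ISIN Growth;ISIN Div Reinvestment;Scheme Name;Net Asset Value;Date",
    "100001;INF189A01038;-;Sample Fund - Growth;12.3456;31-Jul-2017",
    "100002;INF189A01046;-;Sample Fund - Dividend;N.A.;31-Jul-2017",
    "Sample Mutual Fund",
]
rows = [parsed for parsed in map(parse_nav_line, samples) if parsed is not None]
print(rows)
```

Converting the NAV with `float()` rather than checking only its first character is a design choice made for this sketch; it also rejects empty NAV fields, which the first-character test would not handle.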

- 20. Admin: How To

The DevOps Series: Deploying Graphite Using Ansible

In this fifth article in the DevOps series we will learn to install and set up Graphite using Ansible.

Graphite is a monitoring tool that was written by Chris Davis in 2006. It has been released under the Apache 2.0 licence and comprises three components:

1. Graphite-Web
2. Carbon
3. Whisper

Graphite-Web is a Django application and provides a dashboard for monitoring. Carbon is a server that listens to time-series data, while Whisper is a database library for storing the data.

Setting it up

A CentOS 6.8 virtual machine (VM) running on KVM is used for the installation. Please make sure that the VM has access to the Internet. The Ansible version used on the host (Parabola GNU/Linux-libre x86_64) is 2.2.1.0. The ansible/ folder contains the following files:

    ansible/inventory/kvm/inventory
    ansible/playbooks/configuration/graphite.yml
    ansible/playbooks/admin/uninstall-graphite.yml

The IP address of the guest CentOS 6.8 VM is added to the inventory file as shown below:

    graphite ansible_host=192.168.122.120 ansible_connection=ssh ansible_user=root ansible_password=password

Also, add an entry for the graphite host in the /etc/hosts file as indicated below:

    192.168.122.120 graphite

Graphite

The playbook to install the Graphite server is given below:

    ---
    - name: Install Graphite software
      hosts: graphite
      gather_facts: true
      tags: [graphite]

      tasks:
        - name: Import EPEL GPG key
          rpm_key:
            key: http://dl.fedoraproject.org/pub/epel/RPM-GPG-KEY-EPEL-6
            state: present

        - name: Add YUM repo
          yum_repository:
            name: epel
            description: EPEL YUM repo
            baseurl: https://dl.fedoraproject.org/pub/epel/$releasever/$basearch/
            gpgcheck: yes

- 21. Admin: How To

        - name: Update the software package repository
          yum:
            name: '*'
            update_cache: yes

        - name: Install Graphite server
          package:
            name: "{{ item }}"
            state: latest
          with_items:
            - graphite-web

We first import the keys for the Extra Packages for Enterprise Linux (EPEL) repository and update the software package list. The ‘graphite-web’ package is then installed using Yum. The above playbook can be invoked using the following command:

    $ ansible-playbook -i inventory/kvm/inventory playbooks/configuration/graphite.yml --tags "graphite"

MySQL

A backend database is required by Graphite. By default, the SQLite3 database is used, but we will install and use MySQL as shown below:

    - name: Install MySQL
      hosts: graphite
      become: yes
      become_method: sudo
      gather_facts: true
      tags: [database]

      tasks:
        - name: Install database
          package:
            name: "{{ item }}"
            state: latest
          with_items:
            - mysql
            - mysql-server
            - MySQL-python
            - libselinux-python

        - name: Start mysqld server
          service:
            name: mysqld
            state: started

        - wait_for:
            port: 3306

        - name: Create graphite database user
          mysql_user:
            name: graphite
            password: graphite123
            priv: '*.*:ALL,GRANT'
            state: present

        - name: Create a database
          mysql_db:
            name: graphite
            state: present

        - name: Update database configuration
          blockinfile:
            path: /etc/graphite-web/local_settings.py
            block: |
              DATABASES = {
                'default': {
                  'NAME': 'graphite',
                  'ENGINE': 'django.db.backends.mysql',
                  'USER': 'graphite',
                  'PASSWORD': 'graphite123',
                }
              }

        - name: syncdb
          shell: /usr/lib/python2.6/site-packages/graphite/manage.py syncdb --noinput

        - name: Allow port 80
          shell: iptables -I INPUT -p tcp --dport 80 -m state --state NEW,ESTABLISHED -j ACCEPT

        - name: Allow read access in graphite-web.conf
          lineinfile:
            path: /etc/httpd/conf.d/graphite-web.conf
            insertafter: ' # Apache 2.2'
            line: ' Allow from all'

        - name: Start httpd server
          service:
            name: httpd
            state: started

As a first step, let’s install the required MySQL dependency packages and the server itself. We can then start the server and wait for it to listen on port 3306. A graphite user and database are created for use with the Graphite Web application. For this example, the password is provided as plain text. In production, use an encrypted Ansible Vault password. The database configuration file is then updated to use the MySQL credentials. Since Graphite is a Django application, the manage.py script with syncdb needs to be executed to create the necessary tables. We then allow port 80 through the firewall in order to view the Graphite dashboard. The graphite-web.conf file is updated to allow read access, and the

- 22. Admin: How To

Apache Web server is started. The above playbook can be invoked as follows:

    $ ansible-playbook -i inventory/kvm/inventory playbooks/configuration/graphite.yml --tags "database"

Carbon and Whisper

The Carbon and Whisper Python bindings need to be installed before starting the carbon-cache script.

    - name: Install Carbon and Whisper
      hosts: graphite
      become: yes
      become_method: sudo
      gather_facts: true
      tags: [carbon]

      tasks:
        - name: Install carbon and whisper
          package:
            name: "{{ item }}"
            state: latest
          with_items:
            - python-carbon
            - python-whisper

        - name: Start carbon-cache
          shell: /etc/init.d/carbon-cache start

The above playbook is invoked as follows:

    $ ansible-playbook -i inventory/kvm/inventory playbooks/configuration/graphite.yml --tags "carbon"

The Graphite dashboard

You can open http://192.168.122.120 in the browser on the host to view the Graphite dashboard. A screenshot of the Graphite Web application is shown in Figure 1.

Figure 1: Graphite Web

Uninstalling Graphite

An uninstall script to remove the Graphite server and its dependency packages is required for administration. The Ansible playbook for the same is available in the playbooks/admin folder and is given below:

    ---
    - name: Uninstall Graphite and dependencies
      hosts: graphite
      gather_facts: true
      tags: [remove]

      tasks:
        - name: Stop the carbon-cache server
          shell: /etc/init.d/carbon-cache stop

        - name: Uninstall carbon and whisper
          package:
            name: "{{ item }}"
            state: absent
          with_items:
            - python-whisper
            - python-carbon

        - name: Stop httpd server
          service:
            name: httpd
            state: stopped

        - name: Stop mysqld server
          service:
            name: mysqld
            state: stopped

        - name: Uninstall database packages
          package:
            name: "{{ item }}"
            state: absent
          with_items:
            - libselinux-python
            - MySQL-python
            - mysql-server
            - mysql
            - graphite-web

The script can be invoked as follows:

    $ ansible-playbook -i inventory/kvm/inventory playbooks/admin/uninstall-graphite.yml

By: Shakthi Kannan. The author is a free software enthusiast and blogs at shakthimaan.com.
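Once carbon-cache is running, one way to check the installation end to end is to send it a test data point and look for the metric in the dashboard. The sketch below assumes that Carbon's plaintext listener is enabled on TCP port 2003, which is Carbon's usual default but is not configured or mentioned in the article; the metric name `test.osfy.demo` is made up, and the article's firewall rule opens only port 80, so the script may need to be run on the VM itself.

```python
import socket
import time


def format_metric(path, value, timestamp=None):
    """Build one plaintext-protocol line: metric path, value and Unix timestamp."""
    if timestamp is None:
        timestamp = int(time.time())
    return "%s %s %d\n" % (path, value, timestamp)


def send_metric(line, host="192.168.122.120", port=2003):
    """Send one formatted line to carbon-cache (host and port are assumptions)."""
    sock = socket.create_connection((host, port), timeout=5)
    try:
        sock.sendall(line.encode("ascii"))
    finally:
        sock.close()


if __name__ == "__main__":
    line = format_metric("test.osfy.demo", 42)
    print(line, end="")
    # Uncomment on a machine that can reach the Carbon port:
    # send_metric(line)
```

The network call is left commented out so that the script can be run anywhere to inspect the line it would send.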

- 23. Admin: How To

A Guide to Running OpenStack on AWS

OpenStack is a cloud operating system that controls vast computing resources through a data centre, while AWS (Amazon Web Services) offers reliable, scalable and inexpensive cloud computing services. The installation of OpenStack on AWS is an instance of Cloud-on-a-Cloud.

This article will guide you on installing OpenStack on top of AWS EC2. Installing OpenStack on a nested hypervisor environment is not a big deal when a QEMU emulator is used for launching virtual machines inside the virtual machine (VM). However, unlike the usual nested hypervisor set-up, installing OpenStack on AWS EC2 instances has a few restrictions on the networking side, for the OpenStack set-up to work properly. This article outlines the limitations and the solutions to run OpenStack on top of the AWS EC2 VM.

Limitations

The AWS environment will allow the packets to flow in their network only when the MAC address is known or registered in the AWS network environment. Also, the MAC address and the IP address are tightly mapped. So, the AWS environment will not allow packet flow if the MAC address registered for the given IP address is different. You may wonder whether the above restrictions will impact the OpenStack set-up on AWS EC2. Yes, they certainly will! While configuring Neutron networking, we create a virtual bridge (say, br-ex) for the provider network, where all the VM’s traffic will reach the Internet via the external bridge, followed by the actual physical NIC (say, eth1). In that case, we usually configure the external interface (NIC) with a special type of configuration, as given below.

The provider interface uses a special configuration without an IP address assigned to it. Configure the second interface as the provider interface. Replace INTERFACE_NAME with the actual interface name, for example, eth1 or ens224. Next, edit the /etc/network/interfaces file to contain the following:

    # The provider network interface
    auto INTERFACE_NAME
    iface INTERFACE_NAME inet manual
    up ip link set dev $IFACE up
    down ip link set dev $IFACE down

You can go to http://docs.openstack.org/mitaka/install-guide-ubuntu/environment-networking-controller.html for more details.

Due to this special type of interface configuration, the restriction in AWS will hit OpenStack networking. In the mainstream OpenStack set-up, the above-mentioned provider

- 24. Admin: How To

interface is configured with a special NIC configuration that will have no IP for that interface, and will allow all packets via that specially configured NIC. Moreover, the VM packets reaching the Internet via this specially configured NIC will have the OpenStack tenant router’s gateway IP address as the source in each packet. As I mentioned earlier, in the limitations above, AWS will only allow the packet flow when the MAC address is known/registered in its environment. Also, the IP address must match the MAC address. In our case, the packet from the above-mentioned OpenStack tenant router will have the IP address of the router’s gateway in every single packet, and the packet source’s MAC address will be the MAC address of the router’s interface.

Note: These details are available if you use ip netns show followed by ip netns exec qrouter-<router_ID> ifconfig commands in the OpenStack controller’s terminal.

Since the MAC address is unknown or not registered in the AWS environment, the packets will be dropped when they reach the AWS switch. To allow the VM packets to reach the Internet via the AWS switch, we need to use some tricks/hacks in our OpenStack set-up.

Making use of what we have

One possible way is to register the router’s MAC address and its IP address with the AWS environment. However, this is not feasible. As of now, AWS does not have the feature of registering any random MAC address and IP address inside the VPC. Moreover, allowing this type of functionality will be a severe security threat to the environment. The other method is to make use of what we have. Since we have used a special type of interface configuration for the provider NIC, you will note that the IP address assigned to the provider NIC (say, eth1) is left unused. We could use this available/unused IP address for the OpenStack router’s gateway. The command given below will do the trick:

    neutron router-gateway-set router provider --fixed-ip ip_address=<Registered_IP_address*>

IP address and MAC address mismatches

After configuring the router gateway with the AWS registered IP address, each packet from the router’s gateway will have the AWS registered IP address as the source IP address, but with the unregistered MAC address generated by the OVS. As I mentioned earlier, while discussing the limitations of AWS, the IP address must match the MAC address registered; else, all the packets with the mismatched MAC and IP address will be dropped by the AWS switch. To make the registered MAC address match with the IP address, we need to change the MAC address of the router’s interface. The following steps will do the magic.

Step 1) Install the macchanger tool.
Step 2) Note down the actual/original MAC address of the provider NIC (eth1).
Step 3) Change the MAC address of the provider NIC (eth1).
Step 4) Change the MAC address of the router’s gateway interface to the original MAC address of eth1.
Step 5) Now, try to ping 8.8.8.8 from the router namespace.

If you get a successful ping response, then we are done with the Cloud-on-the-Cloud set-up.

Key points to remember

Here are some important things that one needs to keep track of.

Changing the MAC address: In my case, I had used the Ubuntu 14.04 LTS server, with which there was no issue in changing the MAC address using the macchanger tool. However, when I tried the Ubuntu 16.04 LTS, I got an error saying, “No permission to modify the MAC address.” I suspect the error was due to the cloud-init tool not allowing the MAC address’ configuration. So, before setting up OpenStack, try changing the MAC address of the NIC.

Floating IP disabled: Associating a floating IP to any of OpenStack’s VMs will send the packet via the router’s gateway with the source IP address as the floating IP’s IP address.
This will make the packets hit the AWS switch with a non-registered IP and MAC address, which results in the packets being dropped. So, I could not use the floating IP functionality in this set-up. However, I could access the VM publicly using the following NAT process.

Using NAT to access OpenStack’s VM: As mentioned earlier, I could access the OpenStack VM publicly using the registered IP address that was assigned to the router’s gateway. Use the following NAT command to access the OpenStack VM using the AWS EC2 instance’s elastic IP:

    $ ip netns exec qrouter-f85bxxxx-61b2-xxxx-xxxx-xxxxba0xxxx iptables -t nat -A PREROUTING -p tcp -d 172.16.20.101 --dport 522 -j DNAT --to-destination 192.168.20.5:22

Note: In the above command, I had NAT forwarding for all packets for 172.16.20.101 with Port 522. Using the above NAT command, all the packets reaching 172.16.20.101 with Port number 522 are forwarded to 192.168.20.5:22. Here, 172.16.20.101 is the registered IP address of the AWS EC2 instance which was assigned to the router’s gateway. 192.168.20.5 is the local IP of the OpenStack VM. Notably, 172.16.20.101 already has NAT with the AWS elastic IP, which means all the traffic that comes to the elastic IP (public IP) will be forwarded to this VPC

- 25. Admin: How To

local IP (172.16.20.101). In short:

    [Elastic IP]:522 → 172.16.20.101:522 → 192.168.20.5:22

This means that you can SSH the OpenStack VM globally by using the elastic IP address and the respective port number.

Elastic IP address: For this type of customised OpenStack installation, we require at least two NICs for an AWS EC2 instance. One is for accessing the VM terminal, for installation and for accessing the dashboard. In short, it acts as a management network, a VM tunnel network or an API network. The other is for an external network with a unique type of interface configuration and is mapped with the provider network bridge (say br-ex with eth1). AWS will not allow any packets to travel out of the VPC unless the elastic IP is attached to that IP address. To overcome this problem, we must attach the elastic IP to this NIC. The packets of the OpenStack VM then reach the OpenStack router’s gateway, where they are given the registered MAC address and the matching IP address as their source. They then reach the AWS switch (VPC environment) via br-ex and eth1 (a special type of interface configuration), and hit the actual AWS VPC gateway. From there, the packets will reach the Internet.

Other cloud platforms

In my analysis, I noticed that most of the cloud providers like Dreamhost and Auro-cloud have the same limitations for OpenStack networking. So we could use the tricks/hacks mentioned above in any of those cloud providers to run an OpenStack cloud on top of them.

Note: Since we are using the QEMU emulator without KVM for the nested hypervisor environment, the VM’s performance will be slow.

If you want to try OpenStack on AWS, you can register for CloudEnabler’s Cloud Lab-as-a-Service offering available at https://claas.corestack.io/ which provides a consistent and on-demand lab environment for OpenStack in AWS.
By: Vinoth Kumar Selvaraj. The author is a DevOps engineer at Cloudenablers Inc. You can visit his LinkedIn page at https://www.linkedin.com/in/vinothkumarselvaraj/. He has a website at http://www.hellovinoth.com and you can tweet to him @vinoth6664.

- 26. For U & Me: Interview

Keith Bergelt, CEO of Open Invention Network

The Open Invention Network was set up in 2005 by IBM, Novell, Philips, Red Hat and Sony. As open source software became the driving force for free and collaborative innovation over the past decades, Linux and associated open source applications faced increased litigation attacks from those who benefited from a patent regime. The OIN effectively plays the role of protecting those working in Linux. It is the largest patent non-aggression community, with more than 2,200 corporate participants and financial supporters such as Google, NEC and Toyota. But how exactly does it protect and support liberty for all open source adopters? Jagmeet Singh of OSFY finds the answer to that in an exclusive conversation with Keith Bergelt, CEO of Open Invention Network. Edited excerpts...

Q: What does the Open Invention Network have for the open source community?

The Open Invention Network (OIN) is a collaborative enterprise that enables innovation in Linux and other open source projects by leveraging a portfolio of more than 1,200 strategic, worldwide patents and applications. That is paired with our unique, royalty-free licence agreement. While our patent portfolio is worth a great deal (in the order of hundreds of millions of dollars), any person or company can gain access to OIN’s patents for free, as long as they agree not to sue anyone in the collection of software packages that we call the ‘Linux System’. A new licensee also receives royalty-free access to the Linux System patents of the 2,200 other licensees. In essence, a licensee is agreeing to

- 27. support patent non-aggression in Linux. Even if an organisation has no patents, by taking an OIN licence, it gains access to the OIN patent portfolio and an unrestricted field of use.
Q: How has OIN grown since its inception in November 2005?
Since the launch of OIN, Linux and open source have become more widely used and are incredibly prevalent. The average person today has an almost countless number of touch points with Linux and open source software on a daily basis. Search engines, smartphones, cloud computing, financial and banking networks, mobile networks and automobiles, among many other industries, are all leveraging Linux and open source. As Linux development, distribution and usage have grown, so too has the desire of leading businesses and organisations to join OIN. In addition to the benefit of gaining access to patents worth hundreds of millions of dollars, becoming an OIN community member publicly demonstrates an organisation’s commitment to Linux and open source. These factors have led OIN to grow at a very significant pace over the last five years. We are now seeing new community members from across all geographic regions as well as new industries and platforms that include vehicles, NFV (network function virtualisation) telecommunications services, Internet of Things, blockchain digital ledgers and embedded parts.
Q: How did you manage to build a patent ‘no-fly’ zone around Linux?
At the heart of OIN is its powerful cross-licence. Any organisation, business or individual can join OIN and get access to its patents without paying a royalty, even if the organisation has no patents. The key stipulation in joining is that community members agree not to sue anyone based on a catalogue of software libraries we call the Linux System.
Q: What were the major challenges you faced in building OIN up as the largest patent non-aggression community?
There is no analogy in the history of the technology industry for OIN.
Where people really struggled was in understanding our business model. Because we do not charge a fee to join or access our patents, it was challenging for executives to grasp the fact that OIN was created to help all companies dedicated to Linux. For the first few years of our existence, this aspect created uncertainty, as it is not common for leaders like IBM, Red Hat, SUSE, Google and Toyota to come together to contribute capital to support a common good with no financial return. But instead of seeking a financial return, these companies have had the foresight to understand that the modalities of collaborative invention will benefit all those who currently rely on, as well as those who will come to rely on, open source software. In this transformation arise market opportunities and a new way of creating value in the new economy, which is open to all who have complementary technology, talent and compelling business models.
Q: What practices does OIN encourage to eliminate low-quality patents for open source solutions?
Low-quality patents are the foodstuff of NPEs (non-practising entities) and organisations looking to hinder their competitors, because their products are not competitive in the marketplace. OIN has taken a multi-pronged approach towards eliminating low-quality patents. We have counselled, and we applaud the efforts of, the USPTO (United States Patent and Trademark Office), other international patent assigning entities and various government agencies in the EU and Asia that are working to create a higher bar for granting a patent. Additionally, in the US, Asia and the EU, there are pre-patent peer review organisations that are working to ensure that prior art is well catalogued and available for patent examiners. These initiatives are increasingly effective.
Q: What are the key steps an organisation should take to protect itself from a patent infringement claim related to an open source deployment?
A good first step is to join the Open Invention Network.
This gives Linux and open source developers, distributors and users access to very strategic patents owned directly by OIN, and cross-licensed with thousands of other OIN community members. Another significant step is to understand the various licences used by the open source community. These can be found at the Open Source Initiative.
Q: How do resources like Linux Defenders help organisations to overcome patent issues?
We encourage the open source community to contribute to defensive publications, as that will help to improve patent examination and therefore will limit the future licensing of other patents. Linux Defenders aims to submit prior art on certain patents while they are at the application stage to prevent or restrict the issuance of these patents. Finally, we educate the open source community on other defensive intellectual property strategies that can be employed to complement their membership in OIN.

- 28. Q: Unlike the proprietary world, awareness regarding the importance of patents and licensing structures is quite low in the open source space. How does OIN help to enhance such knowledge?
We conduct a fairly extensive educational programme in addition to spreading the word through interviews and media coverage. We have speakers who participate in numerous open source events around the globe. Also, we help to educate intellectual property professionals through our support of the European Legal Network and the creation of the Asian Legal Network, including regular Asian Legal Network meetings in India.
Q: In what way does OIN help developers opting to use open source technologies?
We believe that all products and services, and in particular those based on open source technologies, should be able to compete on a level playing field. We help ensure this by significantly reducing the risk of patent litigation from companies that want to hinder new open source-based entrants. We also provide developers, distributors and users of Linux and other open source technologies that join OIN with access to valuable patents owned by us directly, and cross-licensed by our community members.
Q: As over 2,200 participants have already joined in with the founding members, have patent threats to Linux reduced now?
There will always be the threat of patent litigation by bad actors. OIN was created to neutralise patent lawsuit threats against Linux, primarily through patent non-aggression community building and the threat of mutually assured destruction in patent litigation. As the Open Invention Network community continues to grow, the amount of intellectual property that is available for cross-licensing will continue to grow. This will ensure that innovation in the core will continue, allowing organisations to focus their energies and resources higher in the technology stack, where they can significantly differentiate their products and services.
Q: Where do you see major patent threats coming from: are they from the cloud world, mobility or the emerging sectors like Internet of Things and artificial intelligence?
Linux and open source technology adoption will increase as more general computing technologies become the key building blocks in areas like the automotive industry. Many of the computing vendors that have been aggressive or litigious historically may try to use a similar strategy to extract licensing fees related to Linux. This is one of the reasons that companies like Toyota, Daimler-Benz, Ford, General Motors, Subaru and Hyundai-Kia, among many others, have either joined OIN or are very close to signing on. In fact, Toyota made a significant financial contribution to OIN and is our latest board member.
Q: Don’t you think that ultimately, this is a war with just Microsoft?
In the last few years, Microsoft has taken some positive steps towards becoming a good open source citizen. We have encouraged it, and will continue to encourage it, to join OIN and very publicly demonstrate its commitment to shared innovation and patent non-aggression in Linux and open source.
Q: How do you view the integration of open source into major Microsoft offerings?
We see all this as recognition by Microsoft that its success does not have to come solely from proprietary software and standards. If it becomes a good open source citizen, it can also reap the benefits of innovation and cost efficiencies derived through the development, distribution and use of Linux. We have cheered, and will continue to cheer, Microsoft on to become an OIN licensee. This would publicly and tangibly demonstrate its support and commitment to patent non-aggression in Linux and open source.
Q: In addition to your present role at OIN, you have successfully managed IP strategies at various tech companies. What is unique about developing an IP strategy for an open source company?
Over the last few years, as market forces have shifted, new legislation has been passed, and judicial precedents have been set, all technology companies have had to review their IP strategies. With the rapid adoption of open source by most technology vendors, it is becoming increasingly challenging to bifurcate organisations as open source vs non-open source. We believe that today and in the future, successful companies, whether they are hybrid or pure-play open source companies, will take advantage of the benefits of shared core technology innovation. They will look to invest the majority of their resources higher in the technology stack. This move will help ensure the continued sales of their higher-value products through an IP strategy employing patents, defensive publications, or both, and by participating in an organisation like OIN.
Q: Lastly, could it be an entirely patent-free future for open source in the coming years?
While software patents are not part of the landscape in India, they are part of the landscape elsewhere in the world. We don’t foresee this changing anytime soon.

- 30. In the last decade or so, the amount of data generated has grown exponentially. The ways to store, manage and visualise data have shifted from the old legacy methods to new ways. There has been an explosion in the number and variety of open source databases. Many are designed to provide high scalability and fault tolerance, and to offer core ACID database features. Each open source database has some special features and, hence, it is very important for a developer or any enterprise to choose with care and analyse each specific problem statement or use case independently. In this article, let us look at one of the open source databases that we evaluated and adopted in our enterprise ecosystem to suit our use cases. MongoDB, as defined in its documentation, is an open source, cross-platform, document-oriented database that provides high performance, high availability and easy scalability. MongoDB works with the concept of collections, which you can associate with the table in an RDBMS like MySQL and Oracle. Each collection is made up of documents (like XML, HTML or JSON), which are the core entity in MongoDB and can be compared to a logical row in Oracle databases. MongoDB has a flexible schema as compared to the normal Oracle DB. In the latter, we need to have a definite table with well-defined columns, and all the data needs to fit the table row type. However, MongoDB lets you store data in the form of documents, in JSON format and in a non-relational way. Each document can have its own format and structure, and be independent of others. The trade-off is the inability to perform joins on the data.
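The idea of a collection whose documents do not share one fixed structure can be sketched in a few lines of Python. This is only an in-memory illustration that uses plain dictionaries; it does not call the MongoDB driver, and the `employees` collection, its field names and values, and the `find` and `field_names` helpers are all hypothetical names chosen for this example.

```python
import json

# Hypothetical "employees" collection, modelled as a plain list of dicts.
# In-memory illustration only; this is not the MongoDB driver API.
employees = [
    {"_id": 1, "name": "Asha", "dept": "IT"},
    # The second document carries fields the first one does not have,
    # including a nested sub-document and an array.
    {"_id": 2, "name": "Ravi", "skills": ["java", "mongodb"],
     "address": {"city": "Pune", "pin": "411001"}},
]


def find(collection, **criteria):
    """Return documents whose top-level fields equal every given criterion."""
    return [doc for doc in collection
            if all(doc.get(key) == value for key, value in criteria.items())]


def field_names(collection):
    """List the sorted top-level field names of each document."""
    return [sorted(doc) for doc in collection]


if __name__ == "__main__":
    # Each document serialises to JSON regardless of its shape.
    print(json.dumps(find(employees, name="Ravi"), indent=2))
    # The two documents expose different sets of fields.
    print(field_names(employees))
```

Note how the address is embedded inside the second document rather than kept in a separate table; with no joins to fall back on, related data is typically stored together in this way.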
One of the major shifts that we as developers or architects had to go through while adopting MongoDB was the mindset shift: moving from being used to storing normalised data and getting rid of redundancy, to a world where we need to store all the possible data in the form of documents and handle the problems of concurrency. The horizontal scalability factor is fulfilled by the ‘sharding’ concept, where the data is split across different machines and partitions called shards, which help further scaling. The fault tolerance capabilities are enabled by replicating data on different machines or data centres, thus making the data available in case of server failures.
Using MongoDB to Improve the IT Performance of an Enterprise
This article targets developers and architects who are looking for open source adoption in their IT ecosystems. The authors describe an actual enterprise situation, in which they adopted MongoDB in their workflow to speed up processes.
Also,