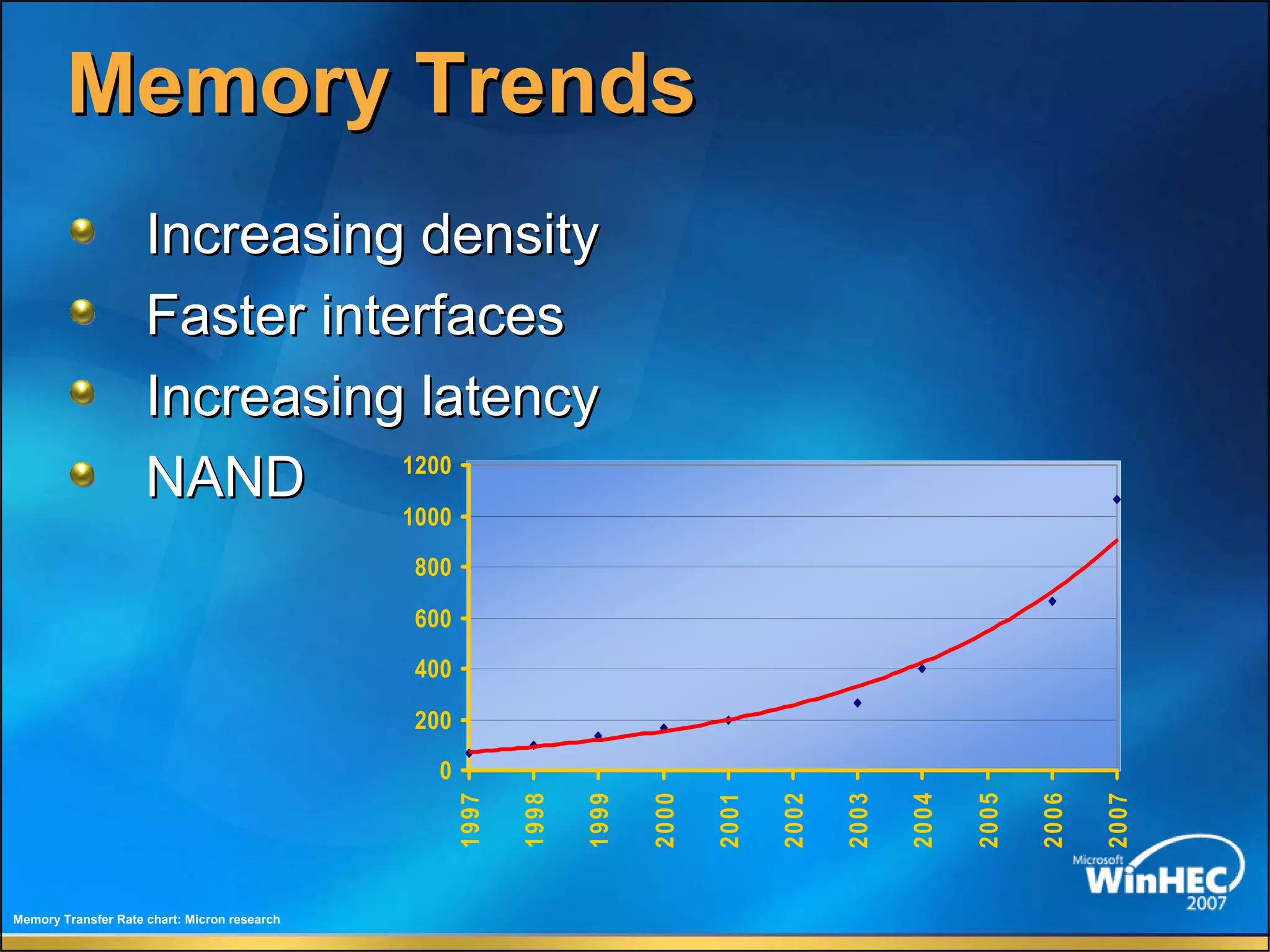

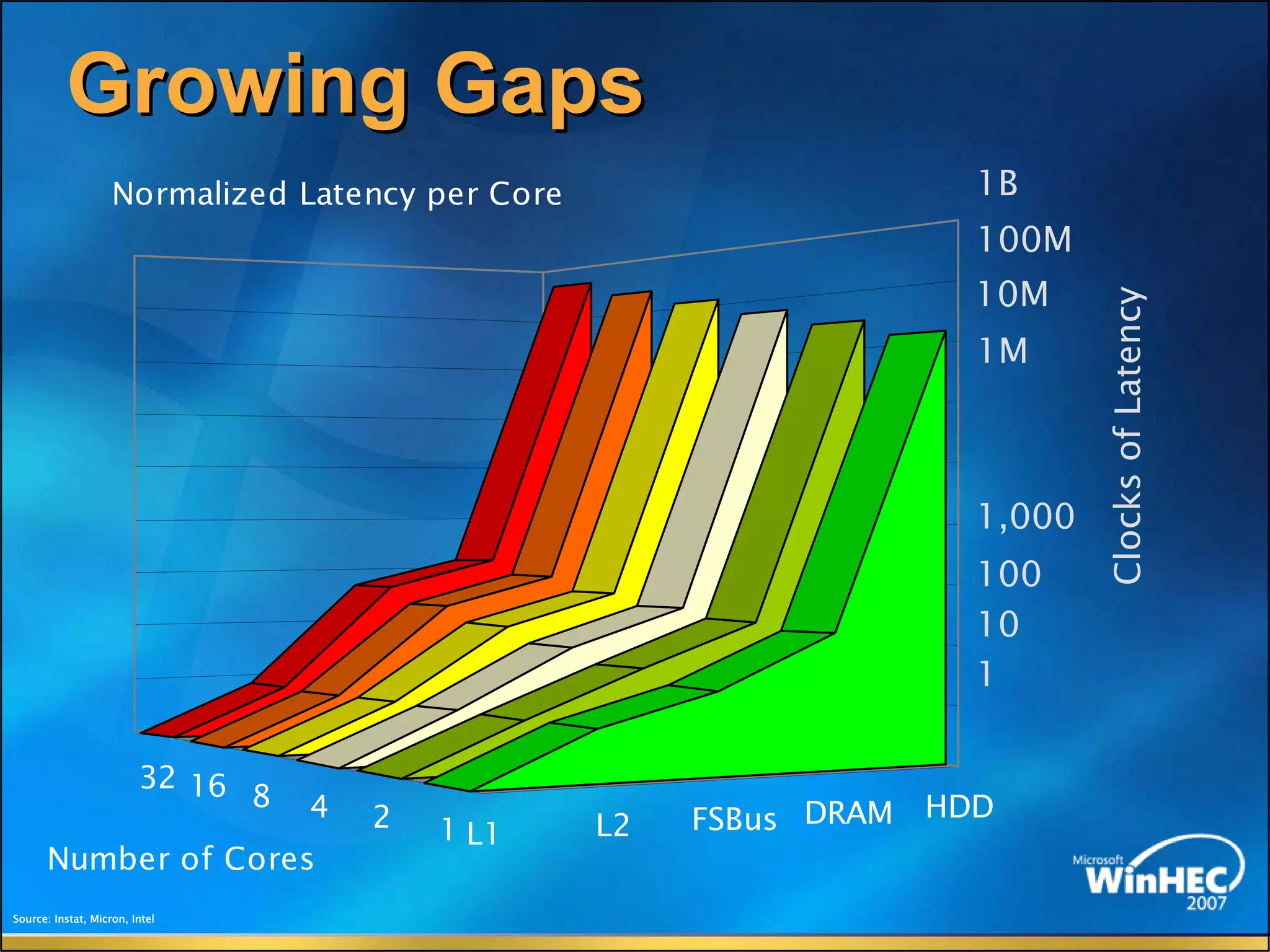

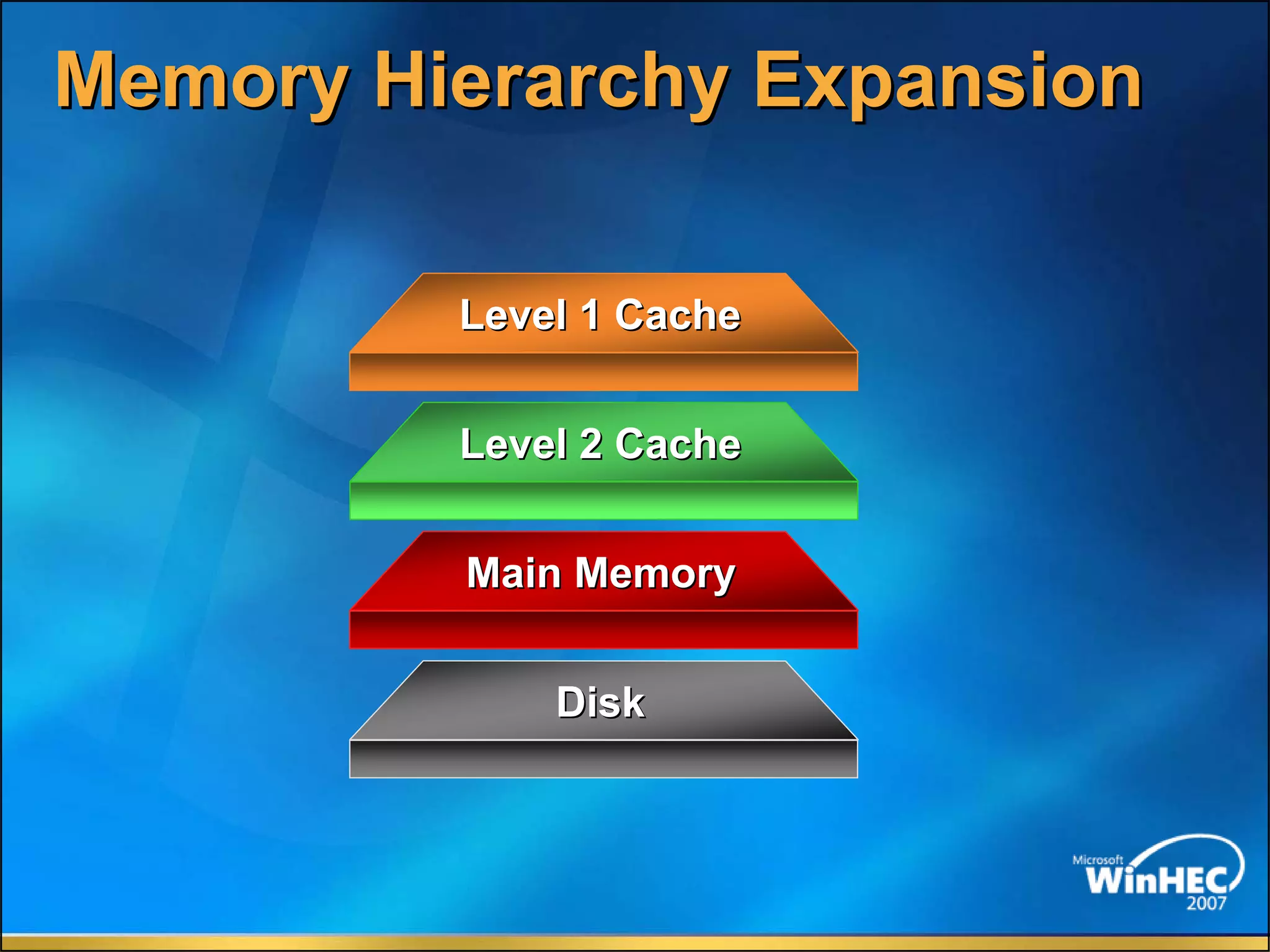

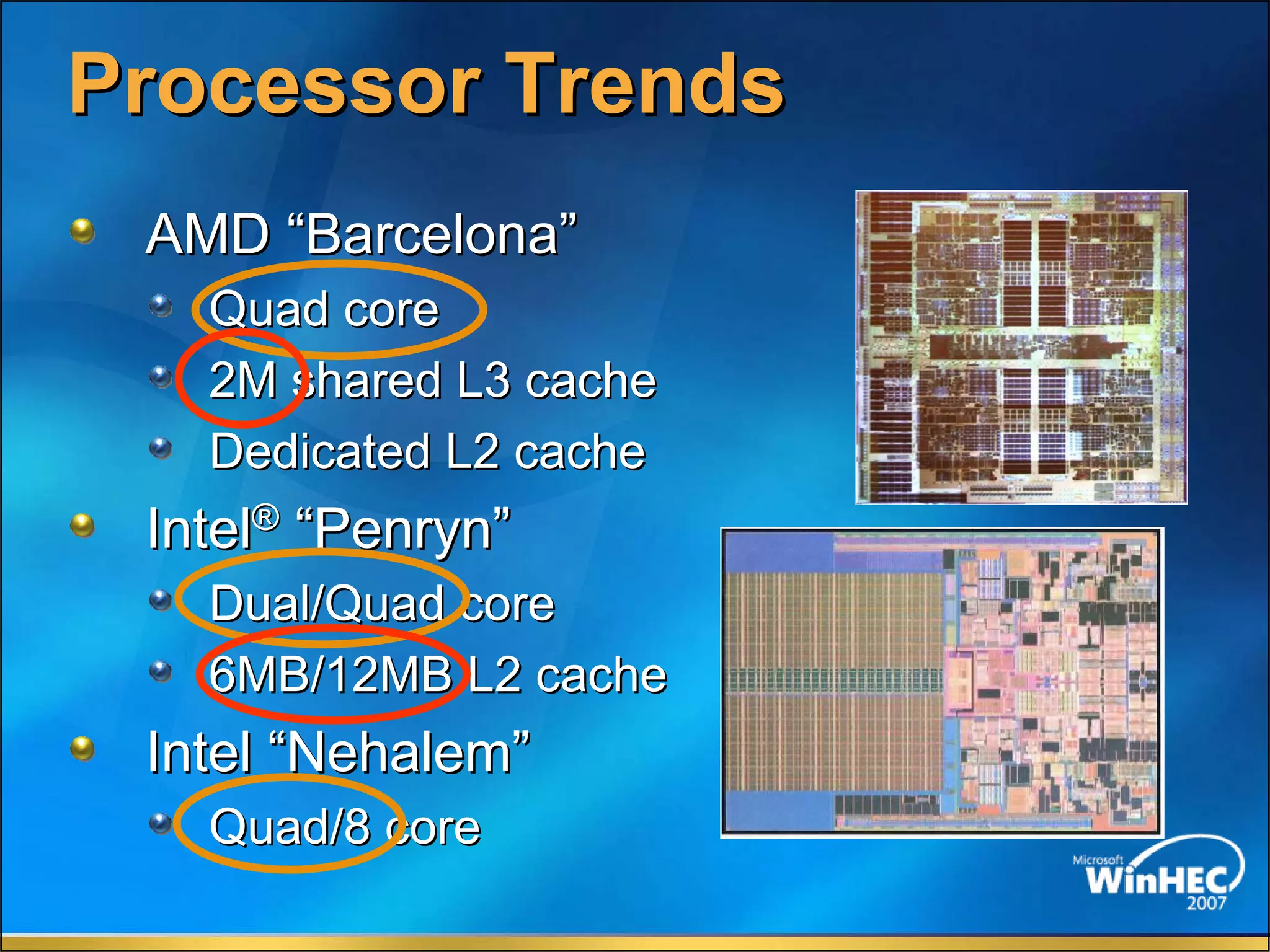

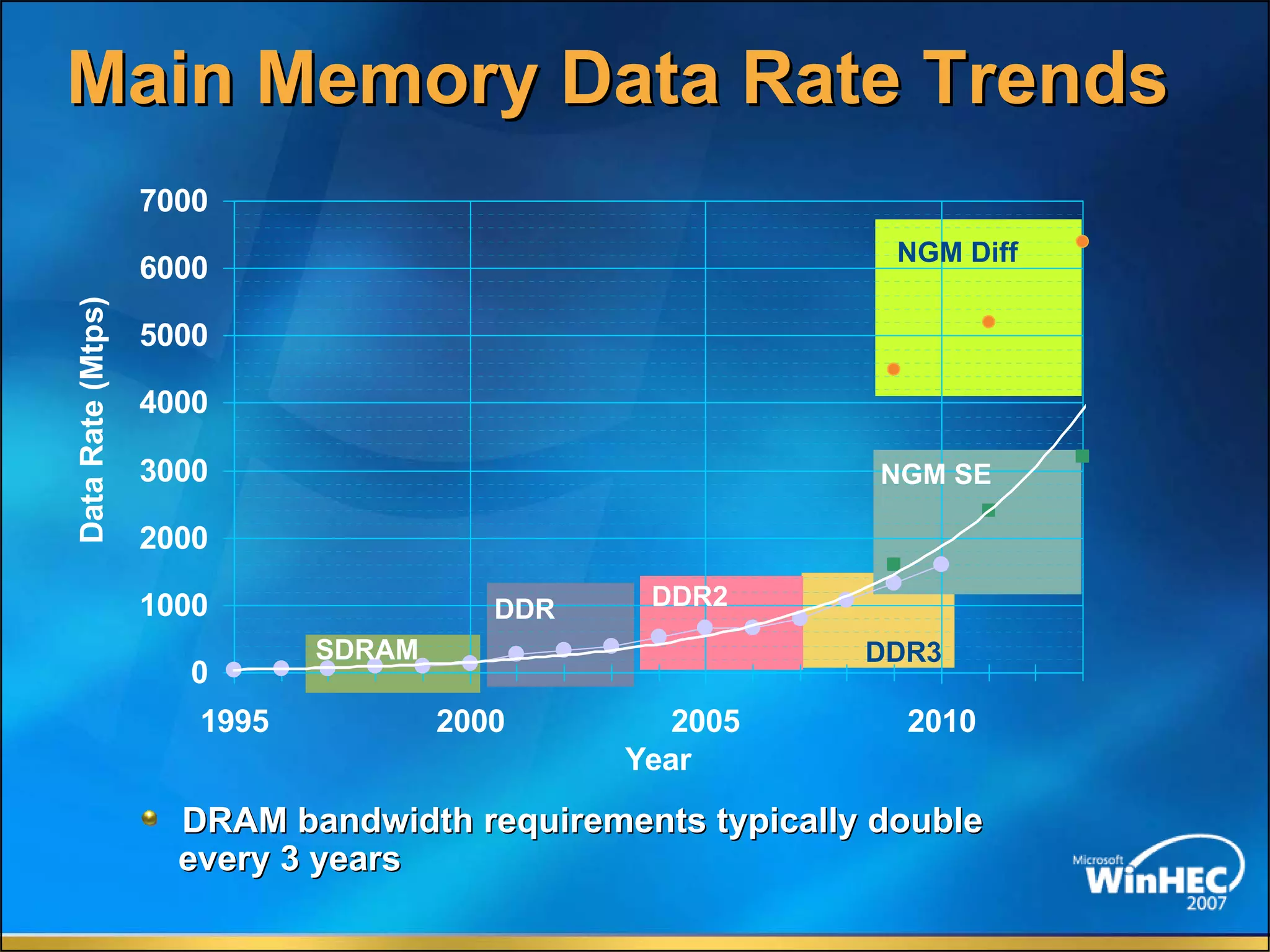

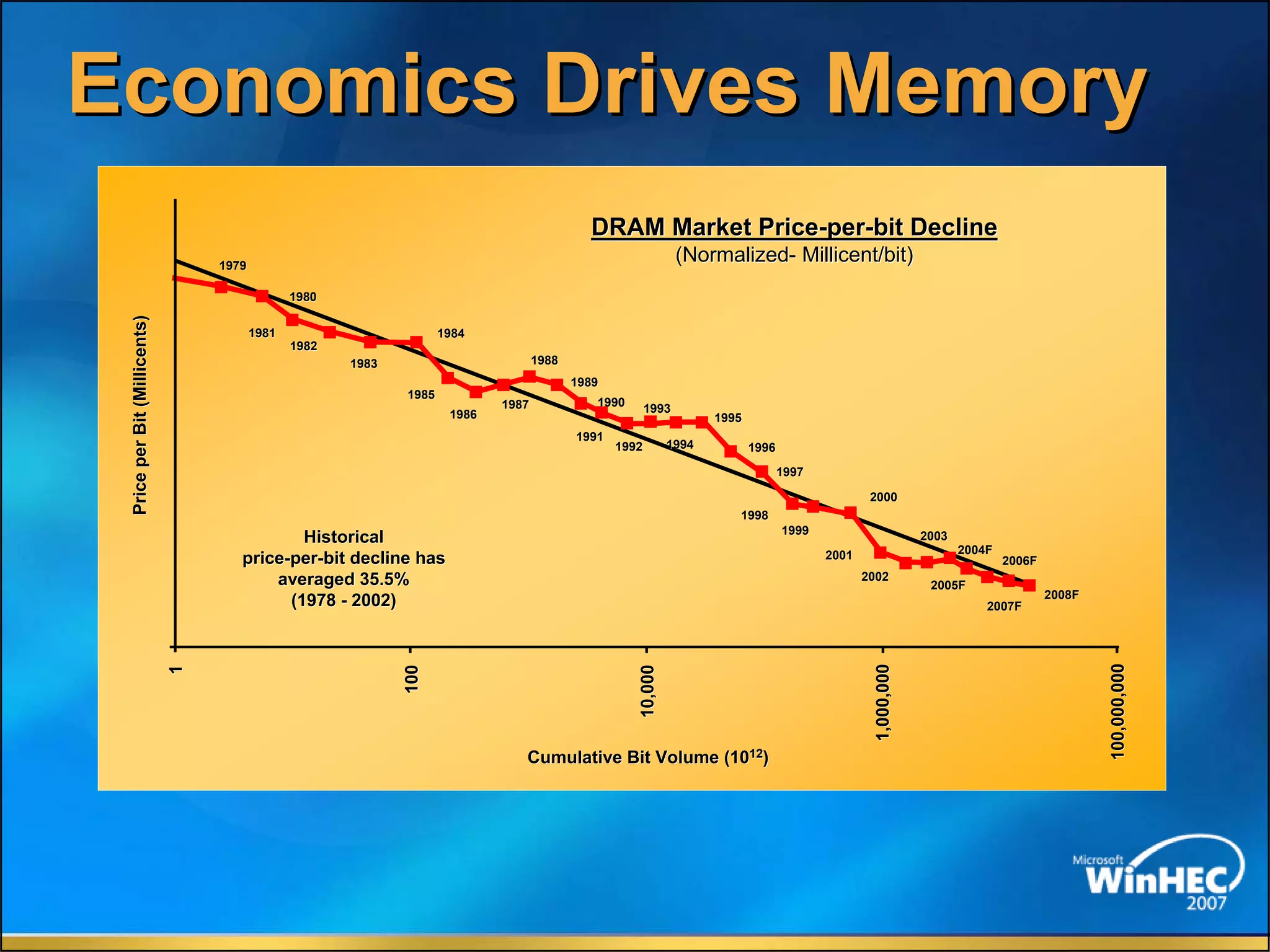

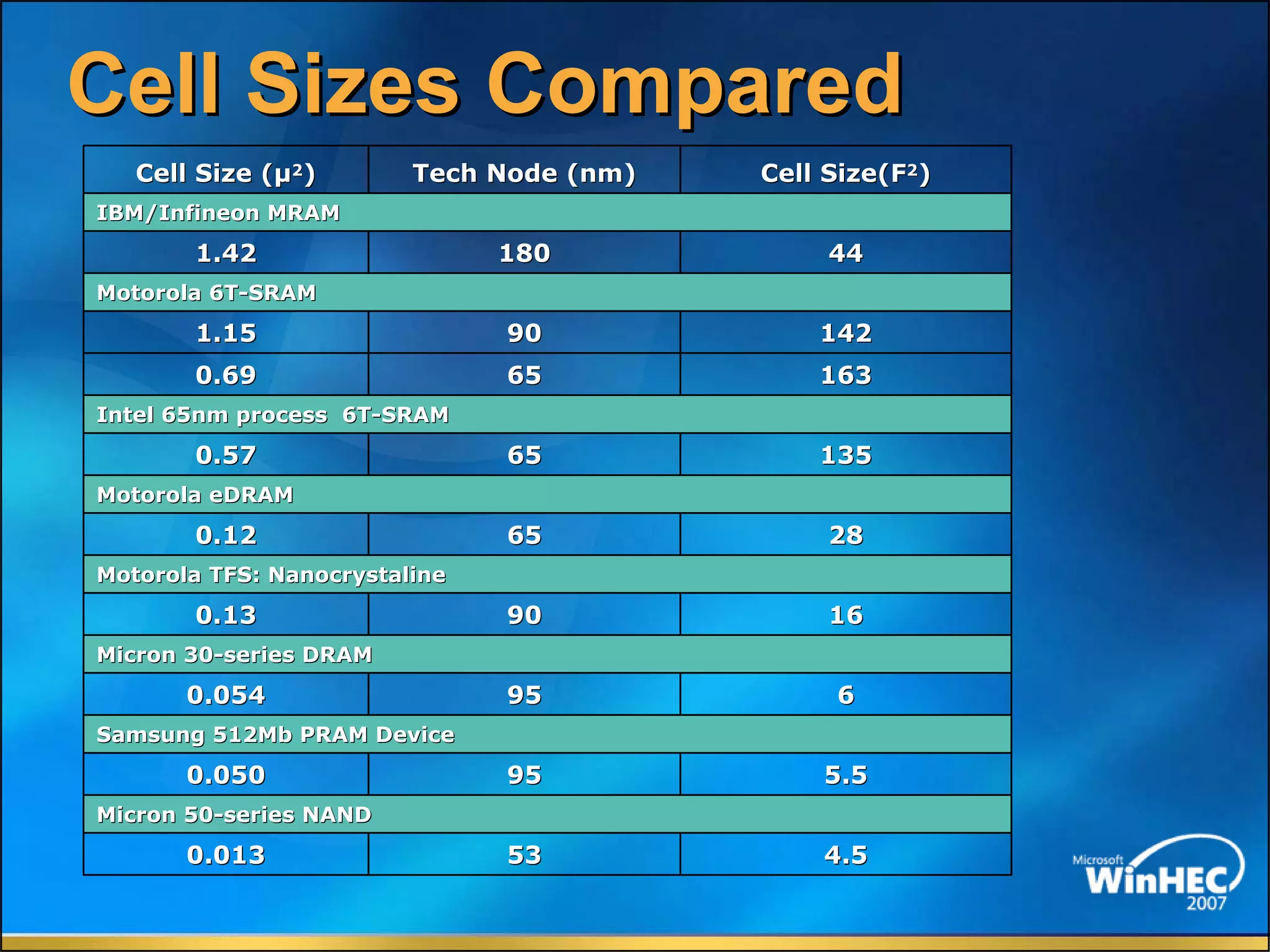

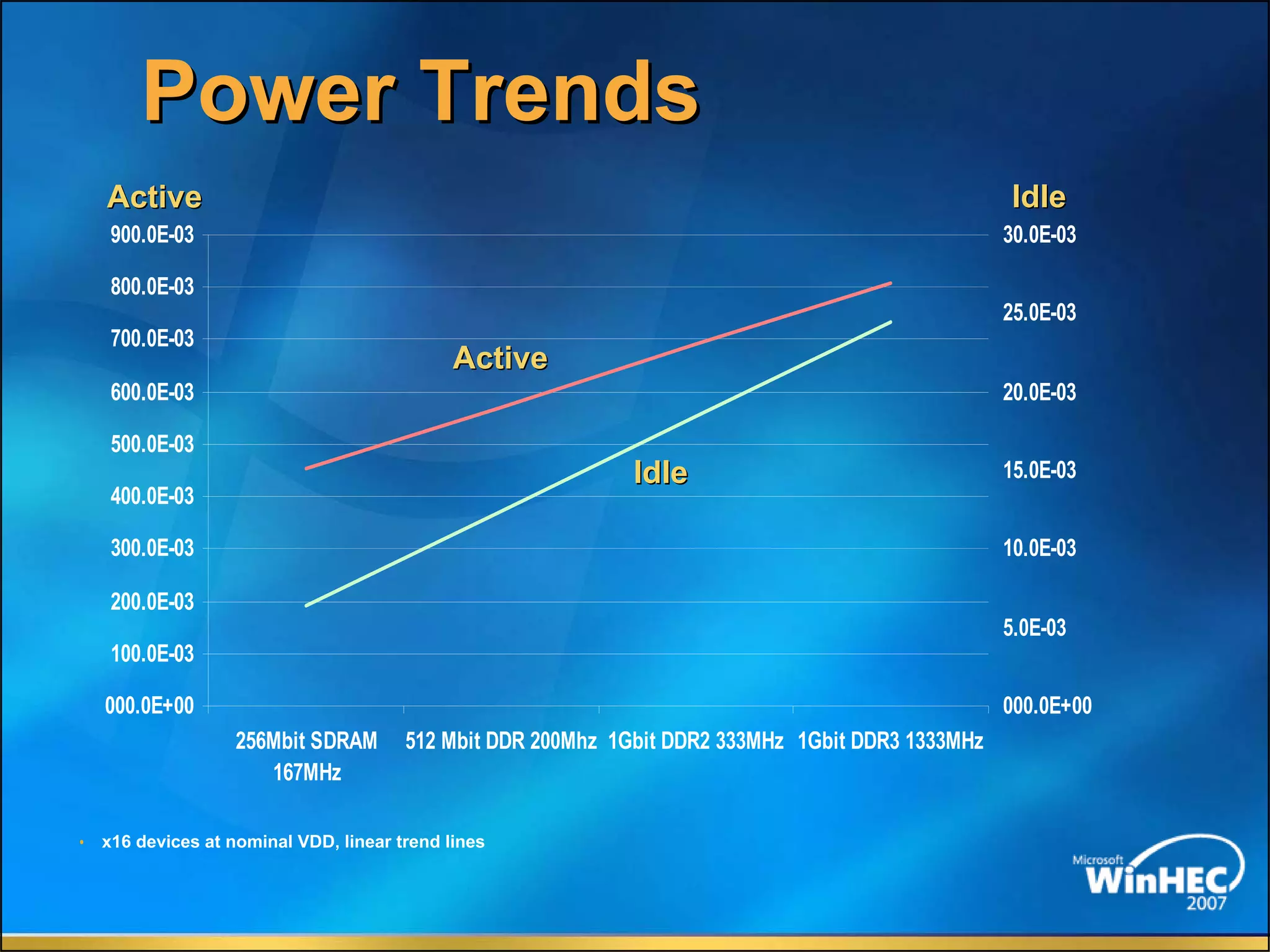

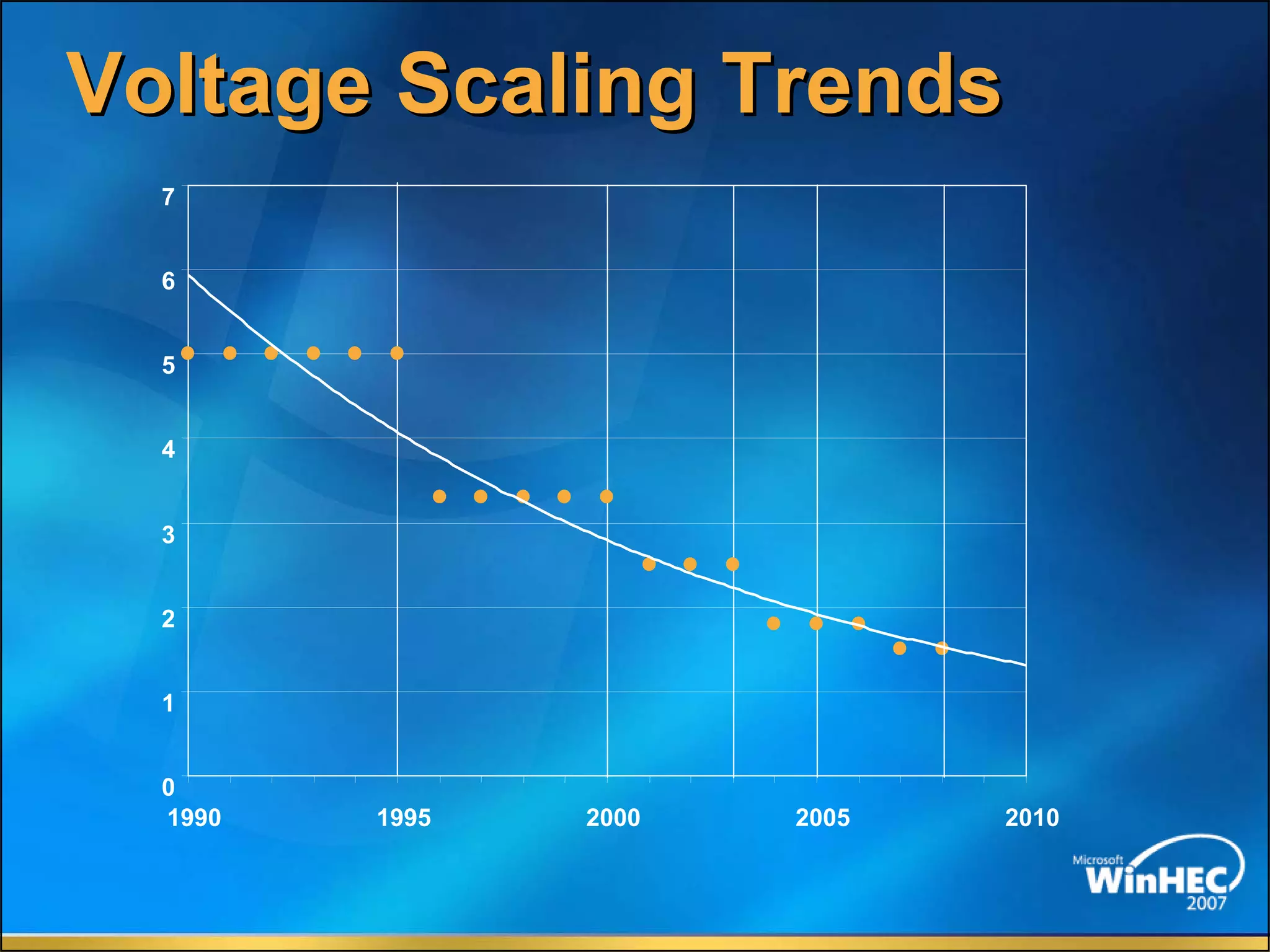

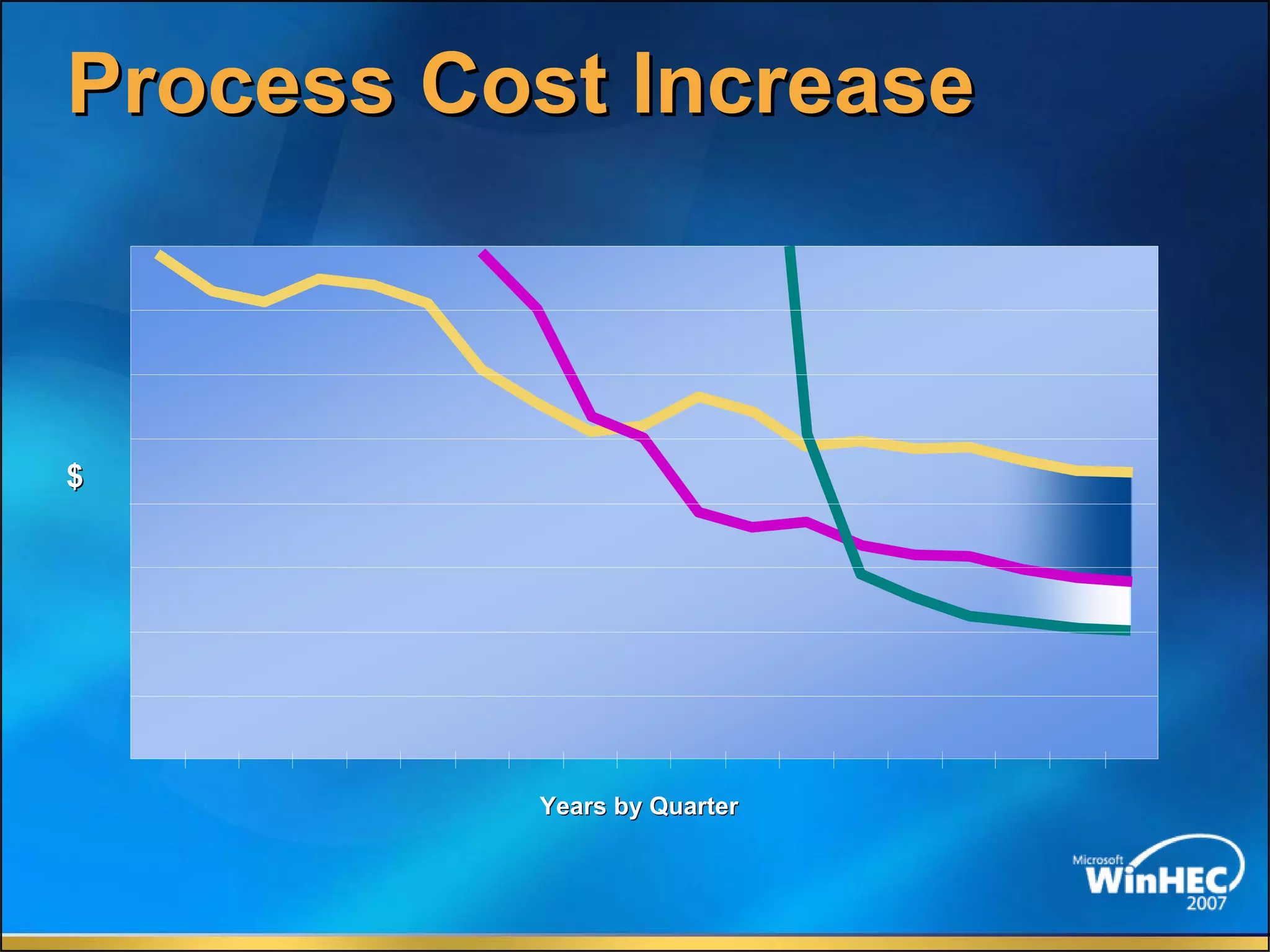

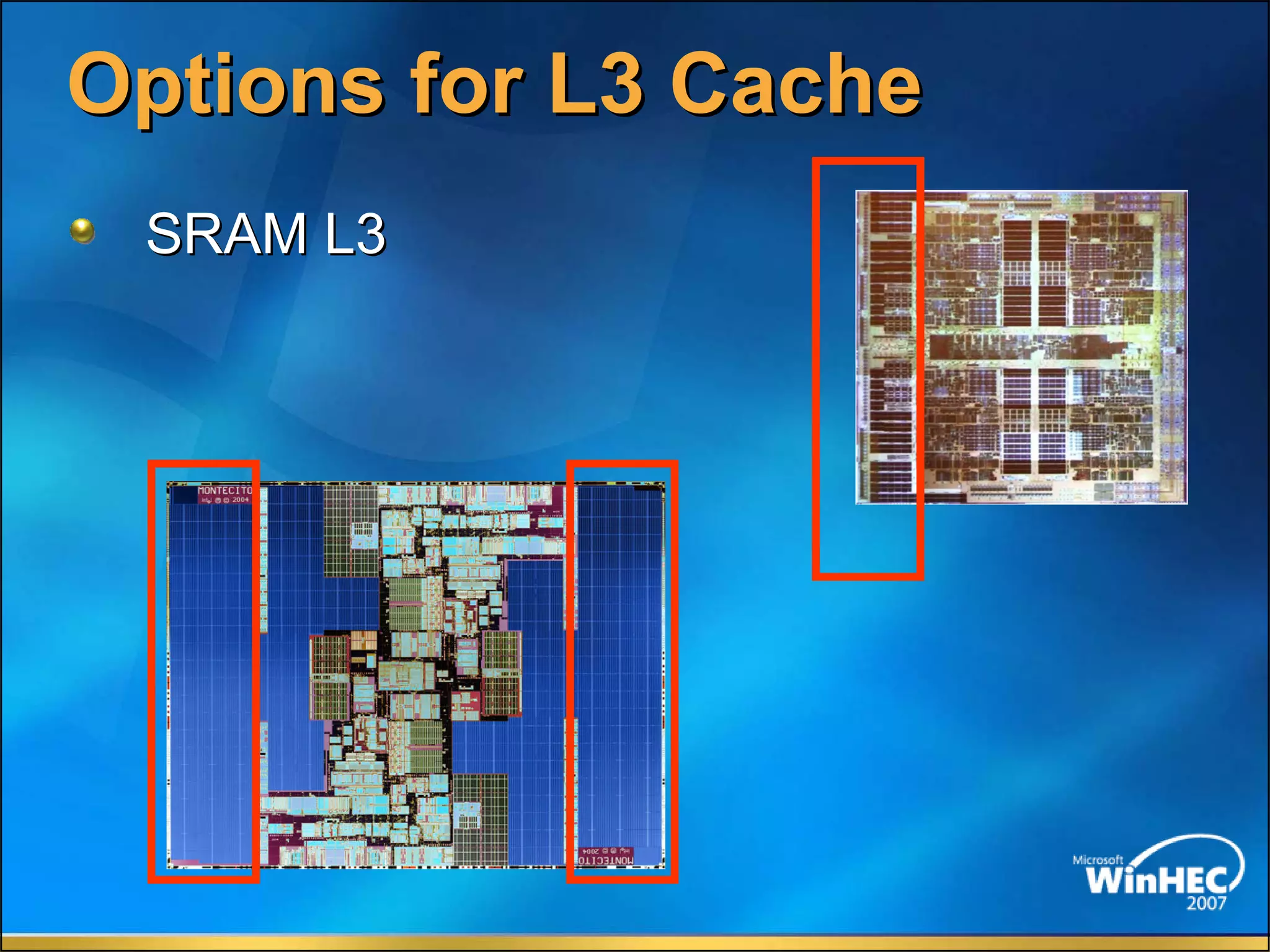

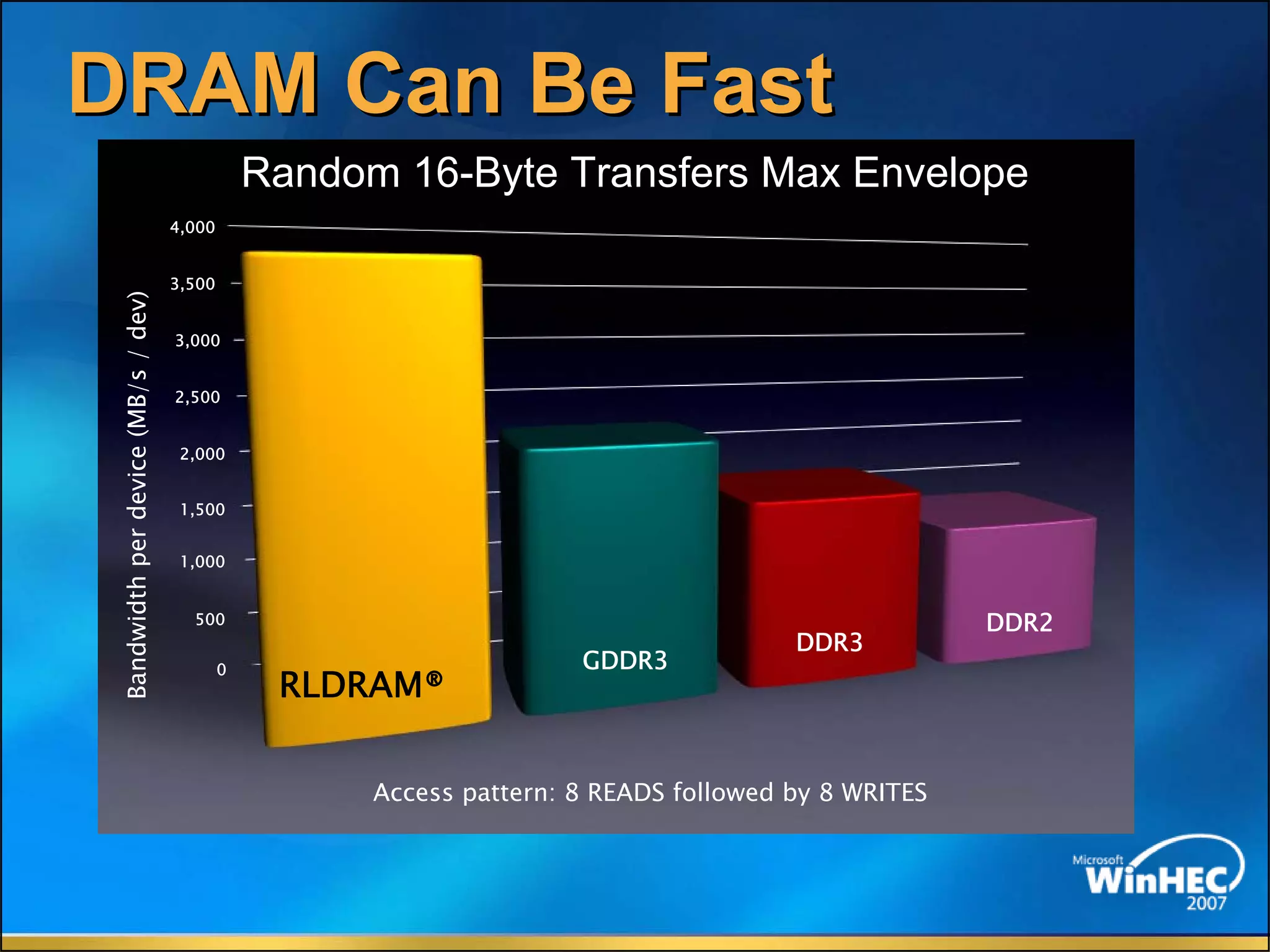

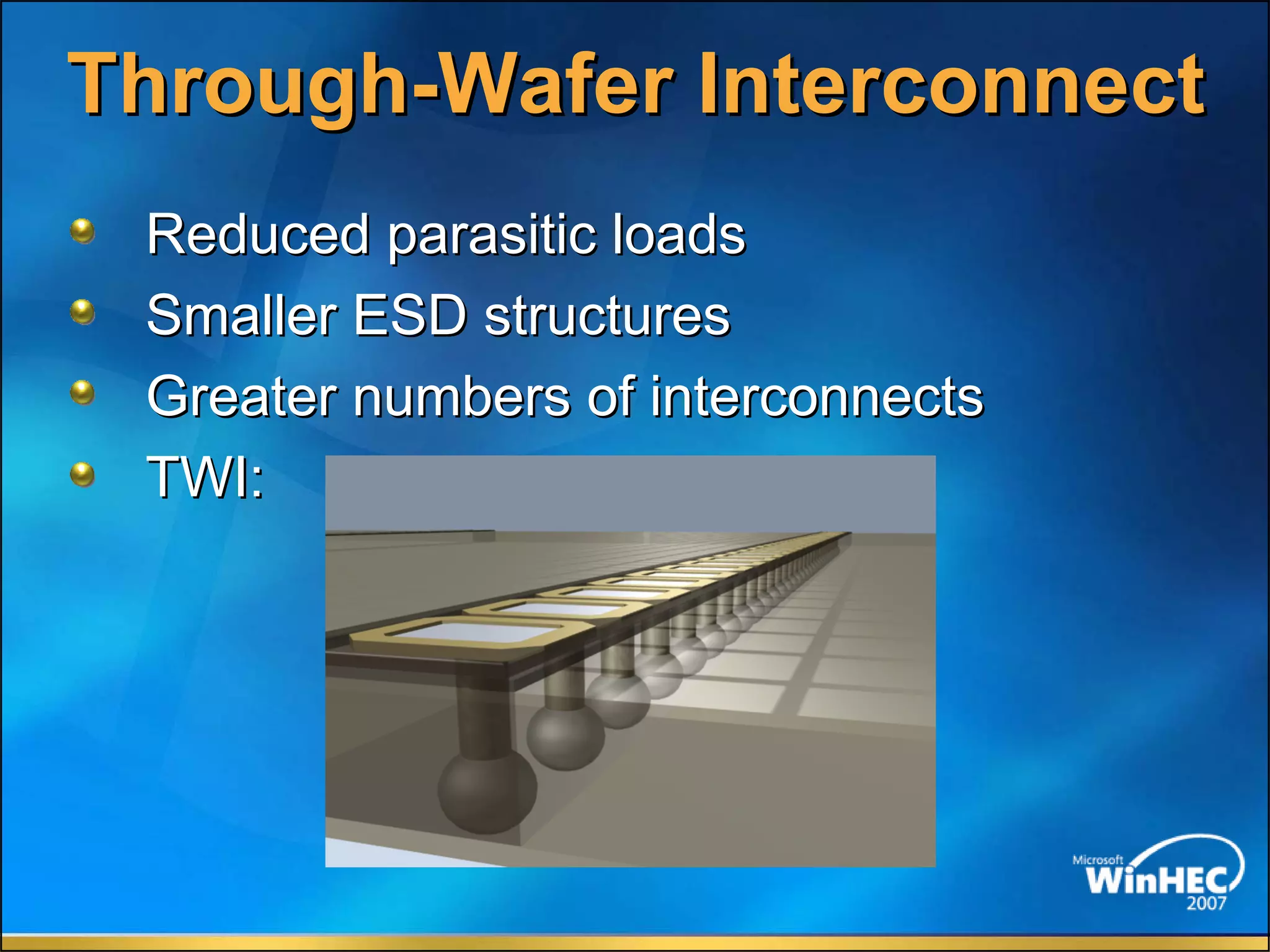

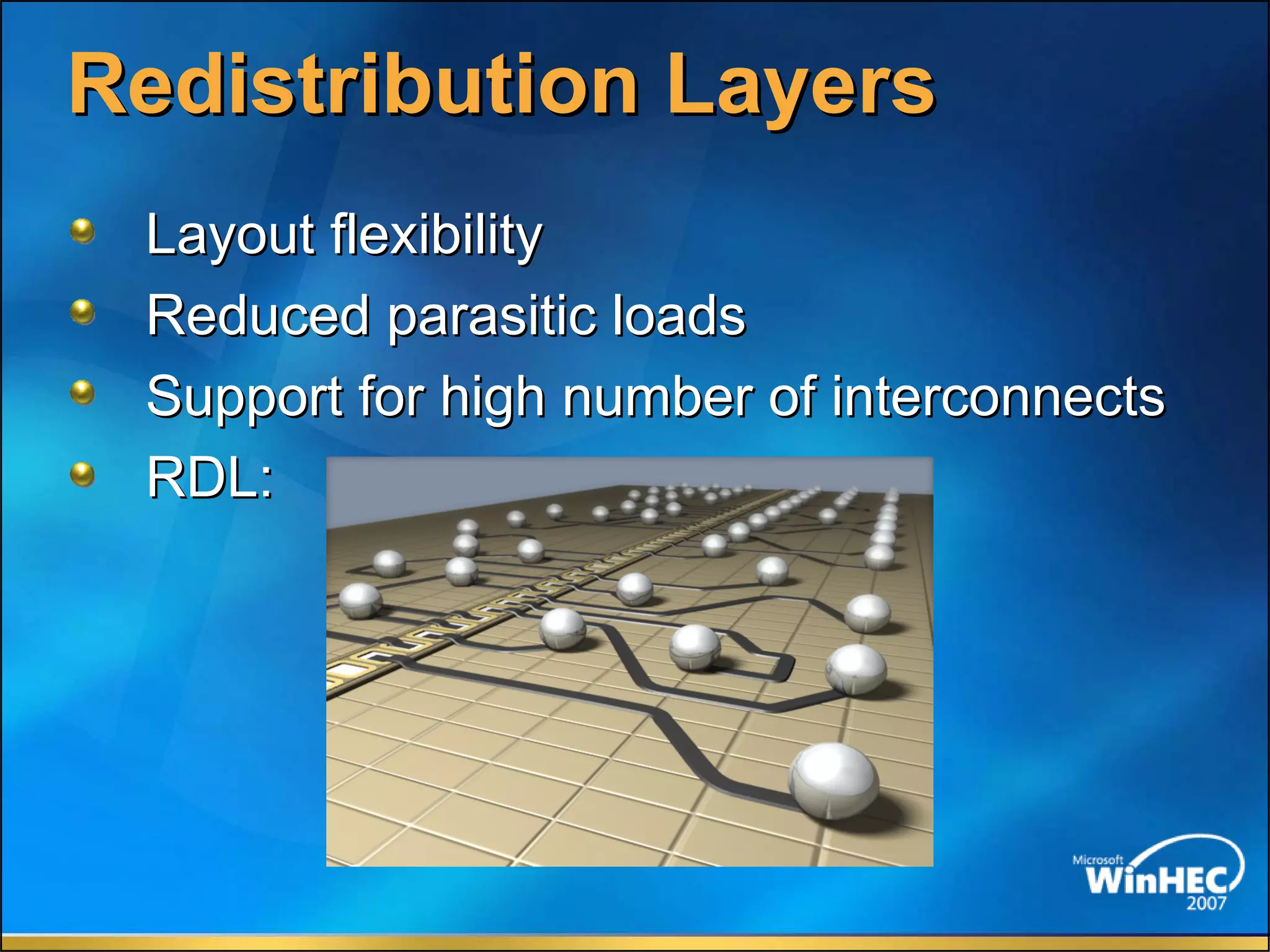

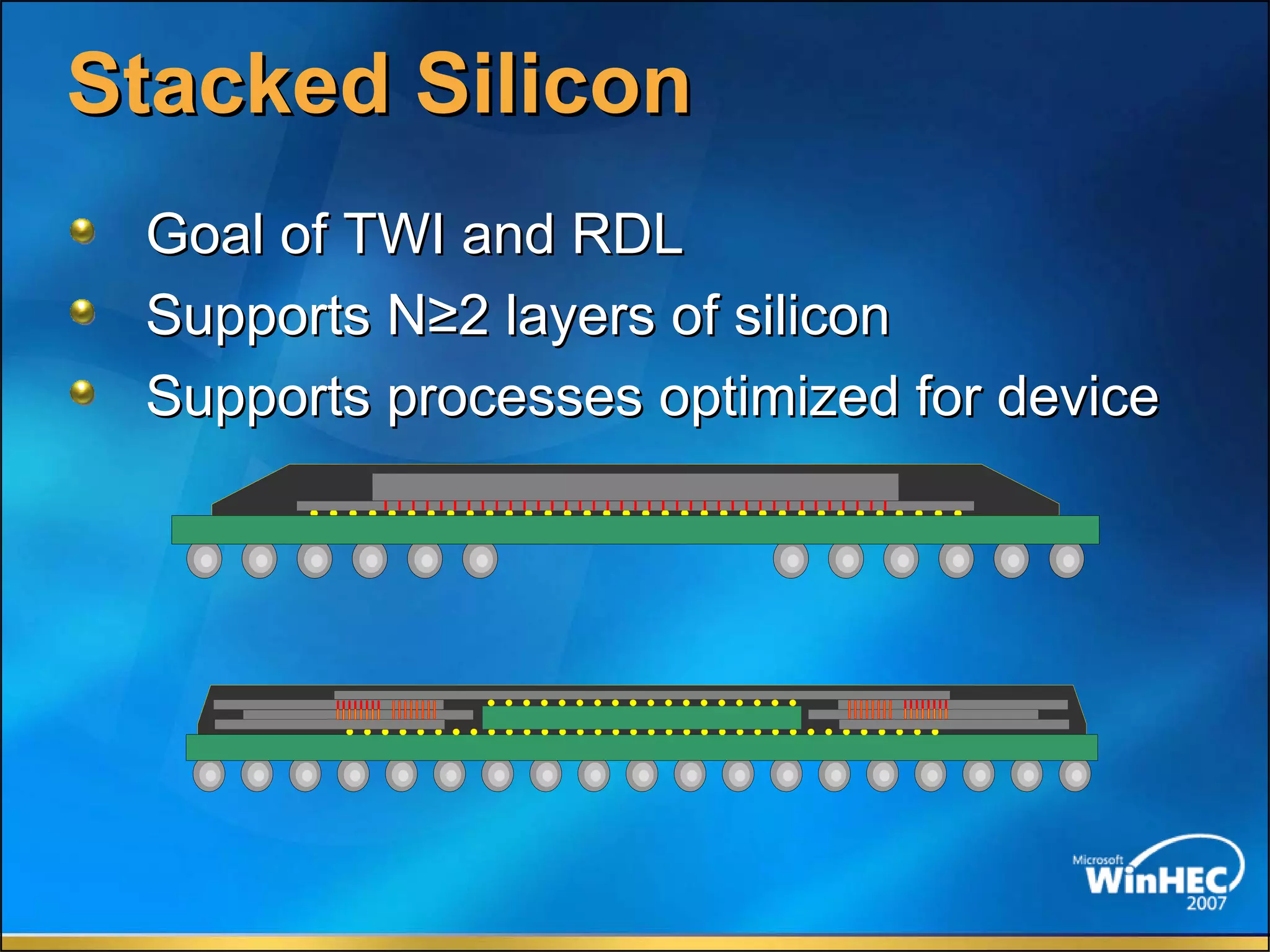

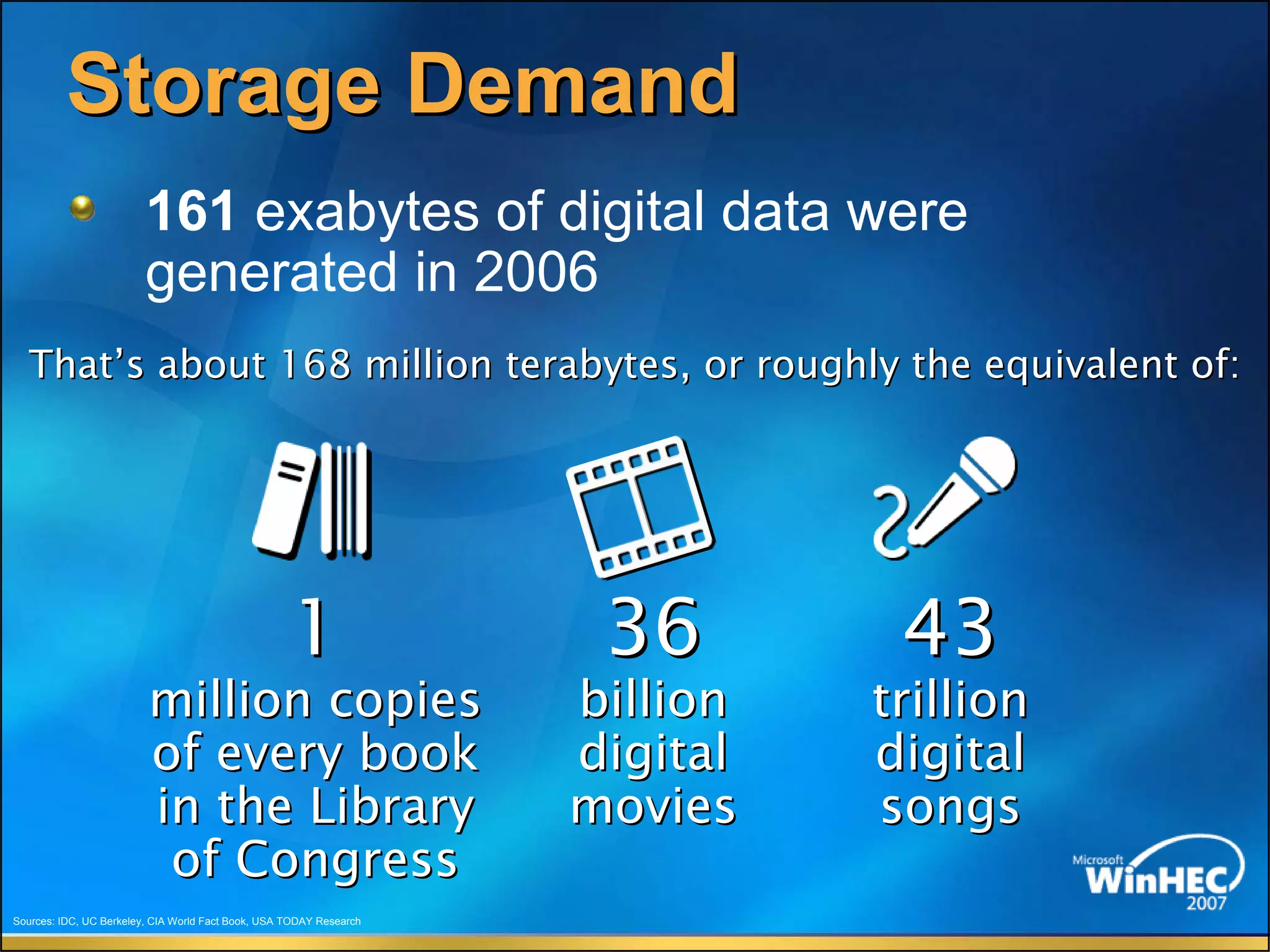

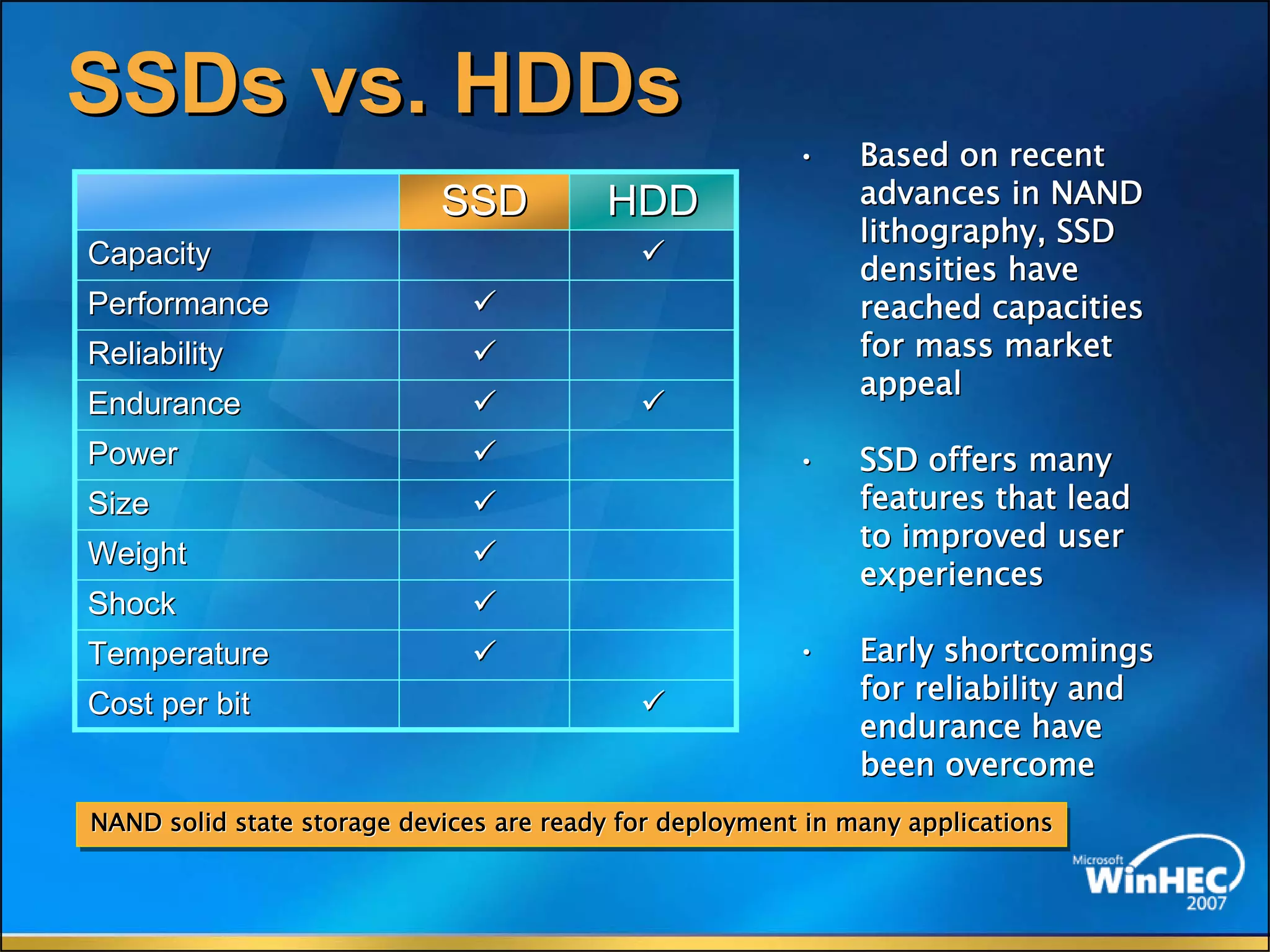

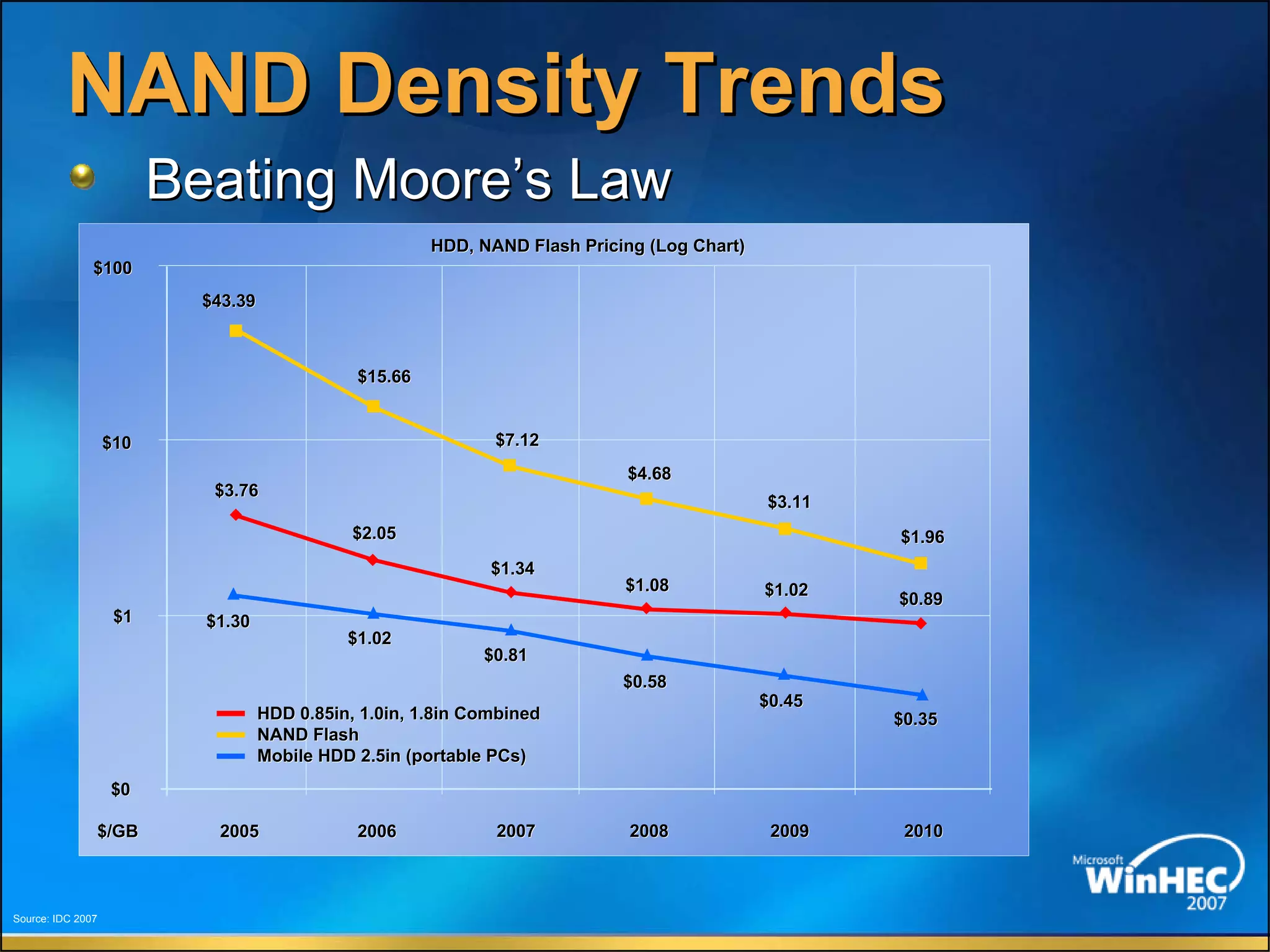

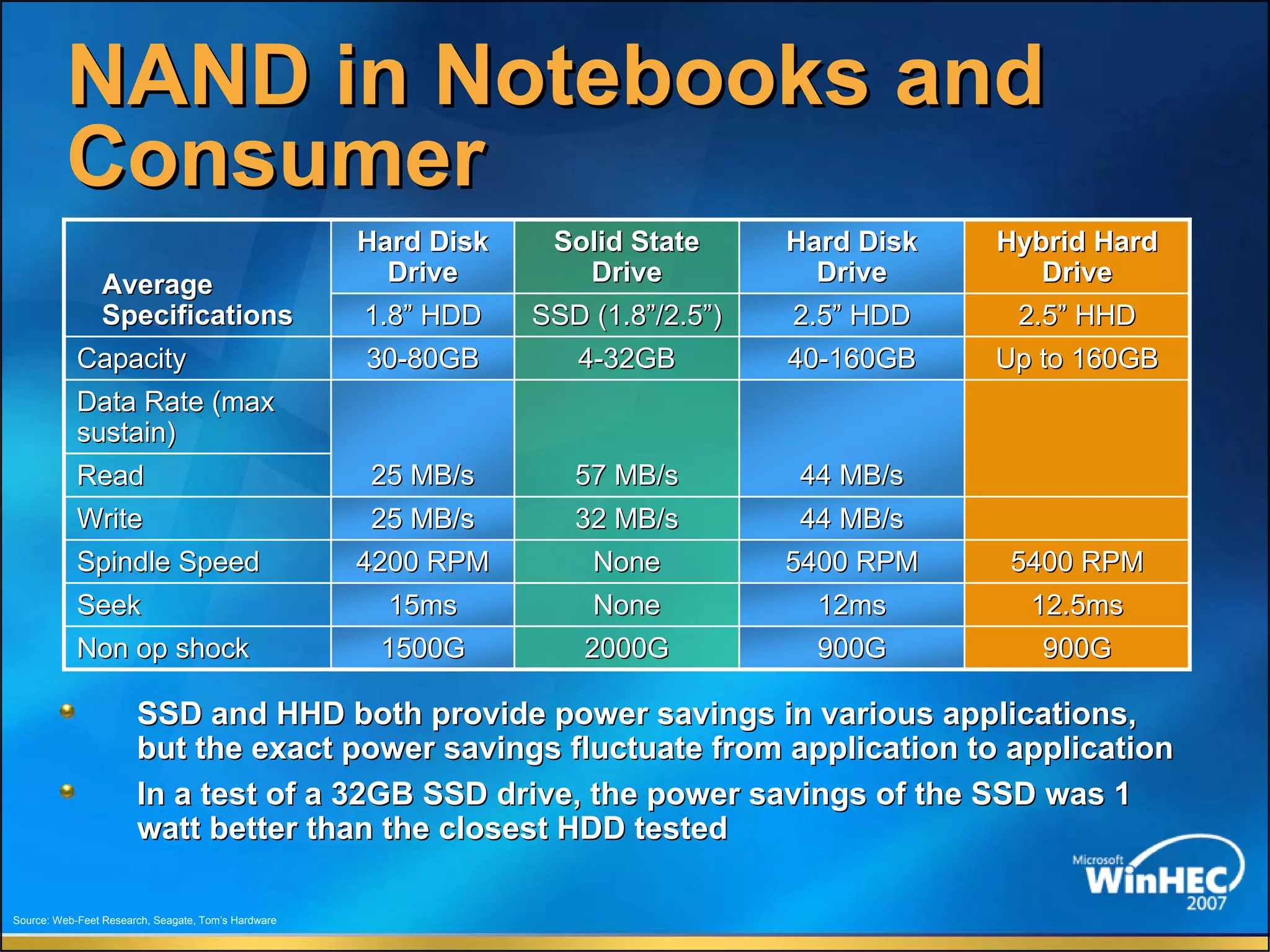

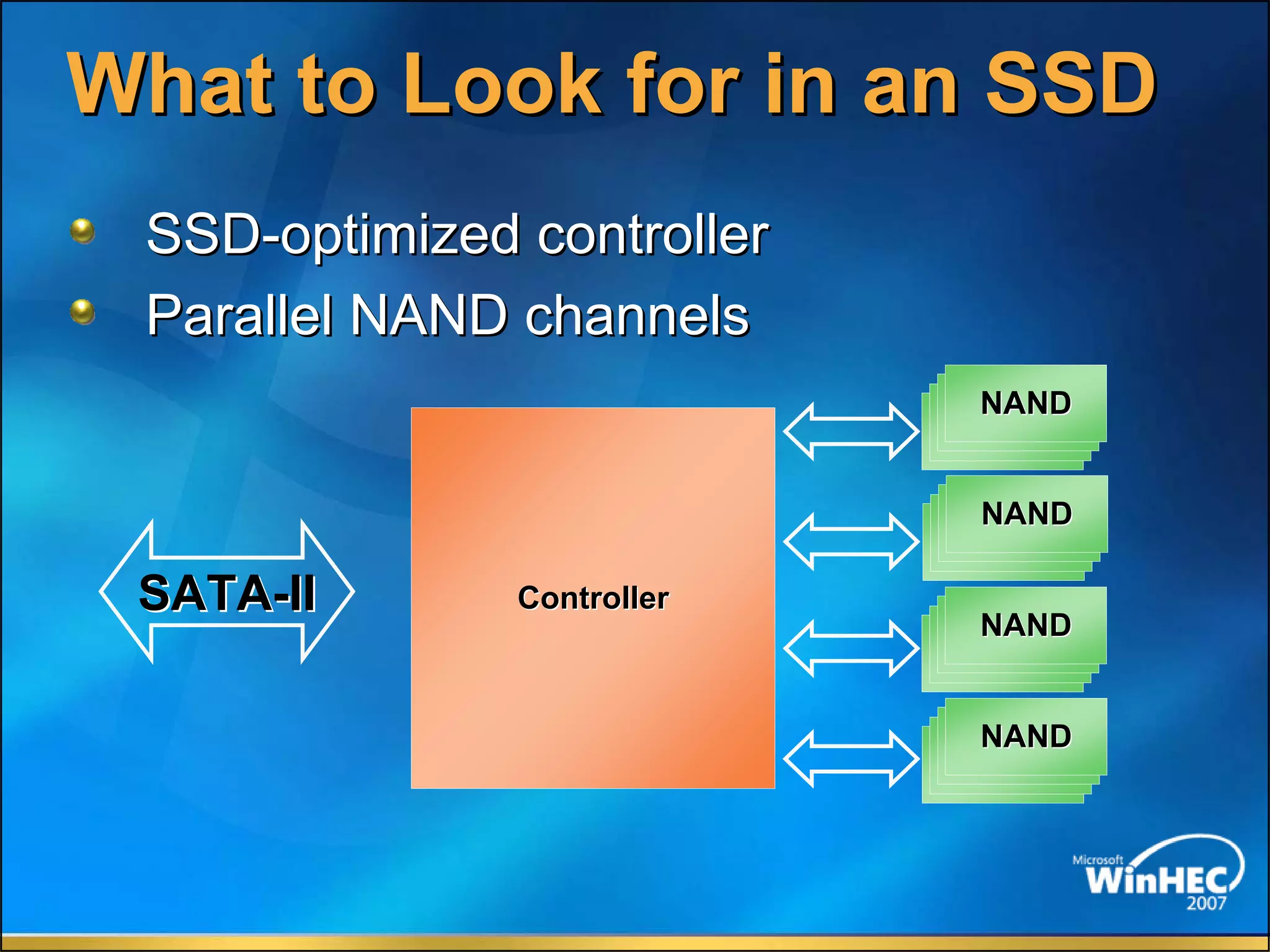

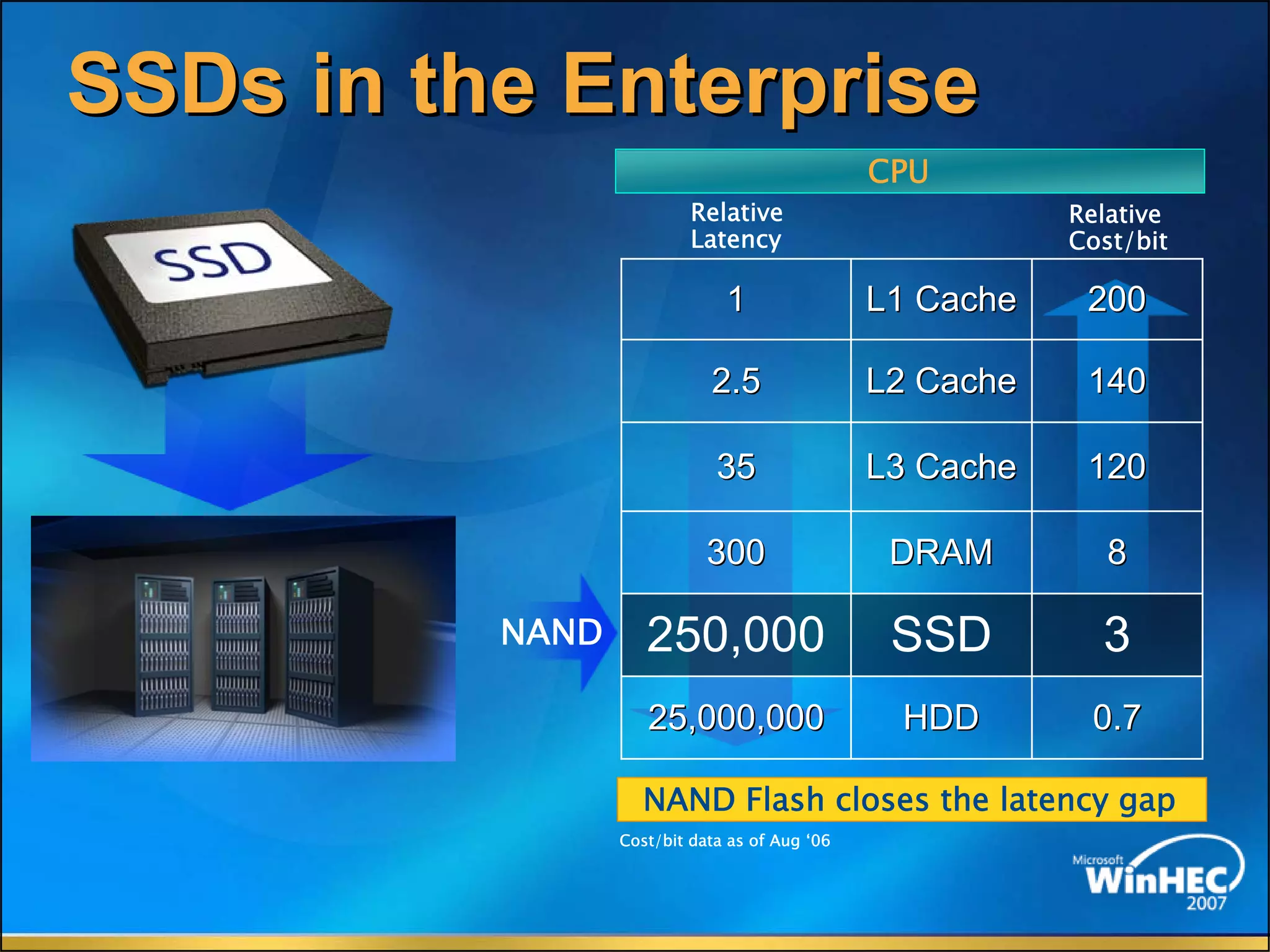

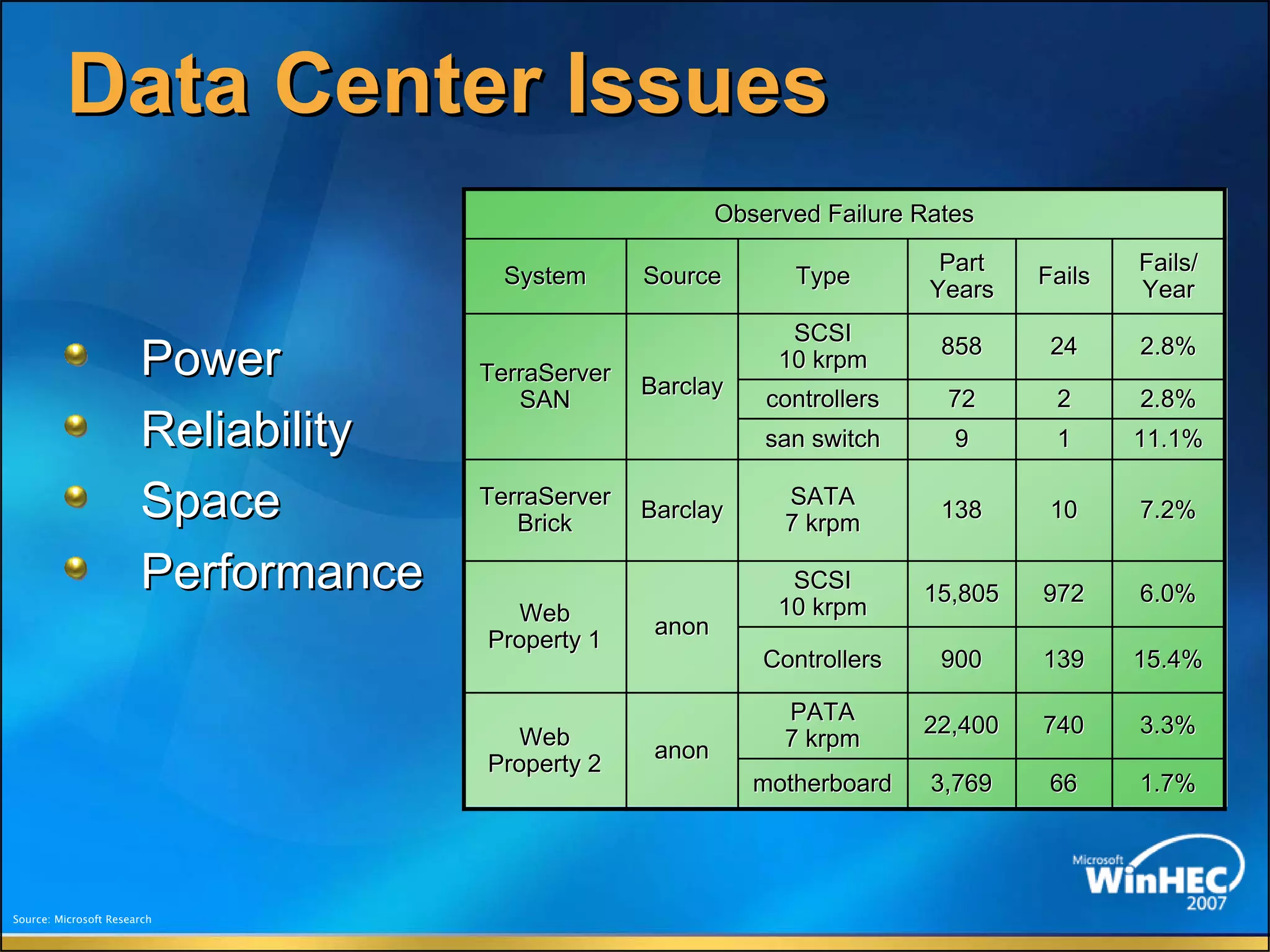

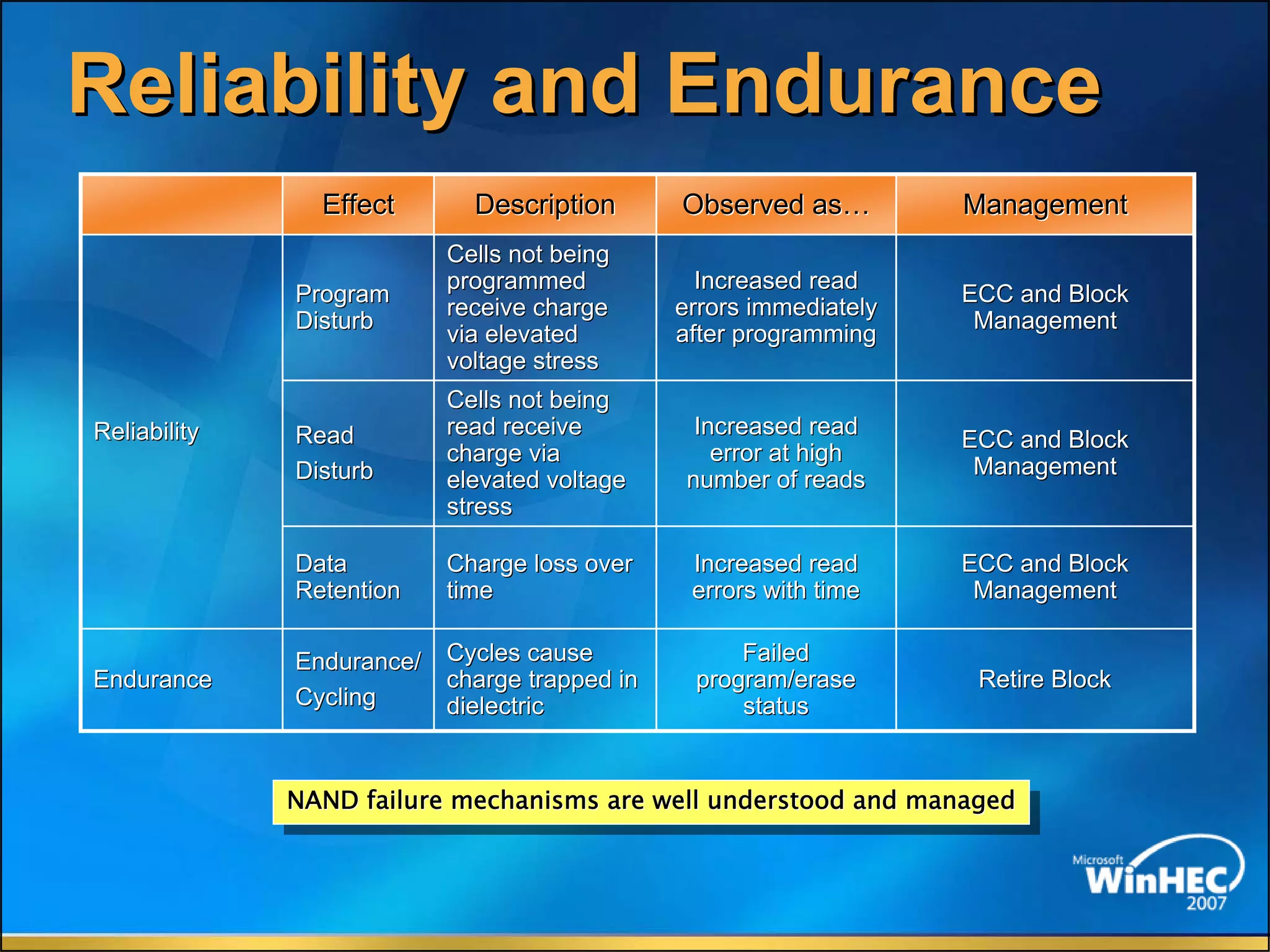

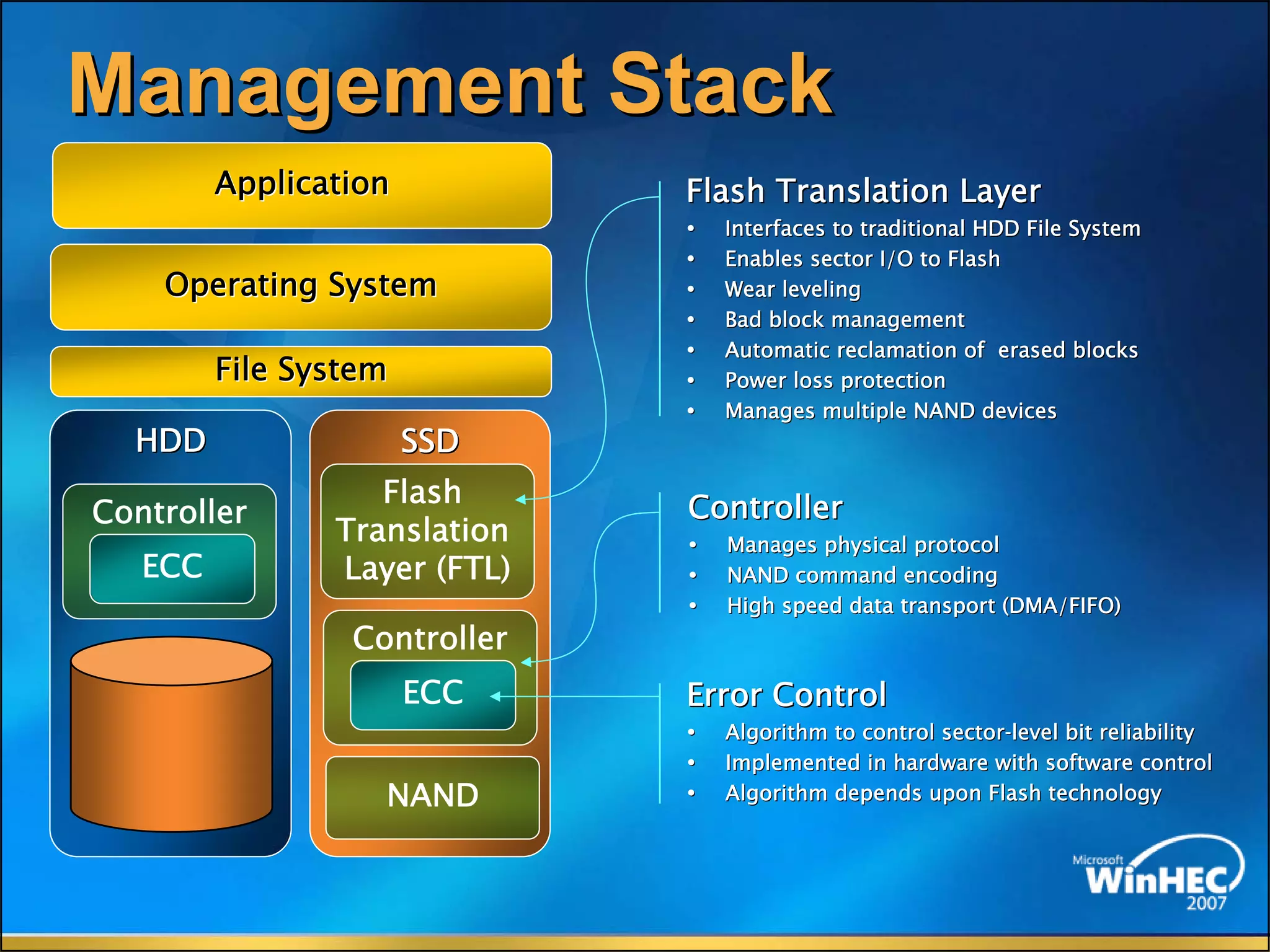

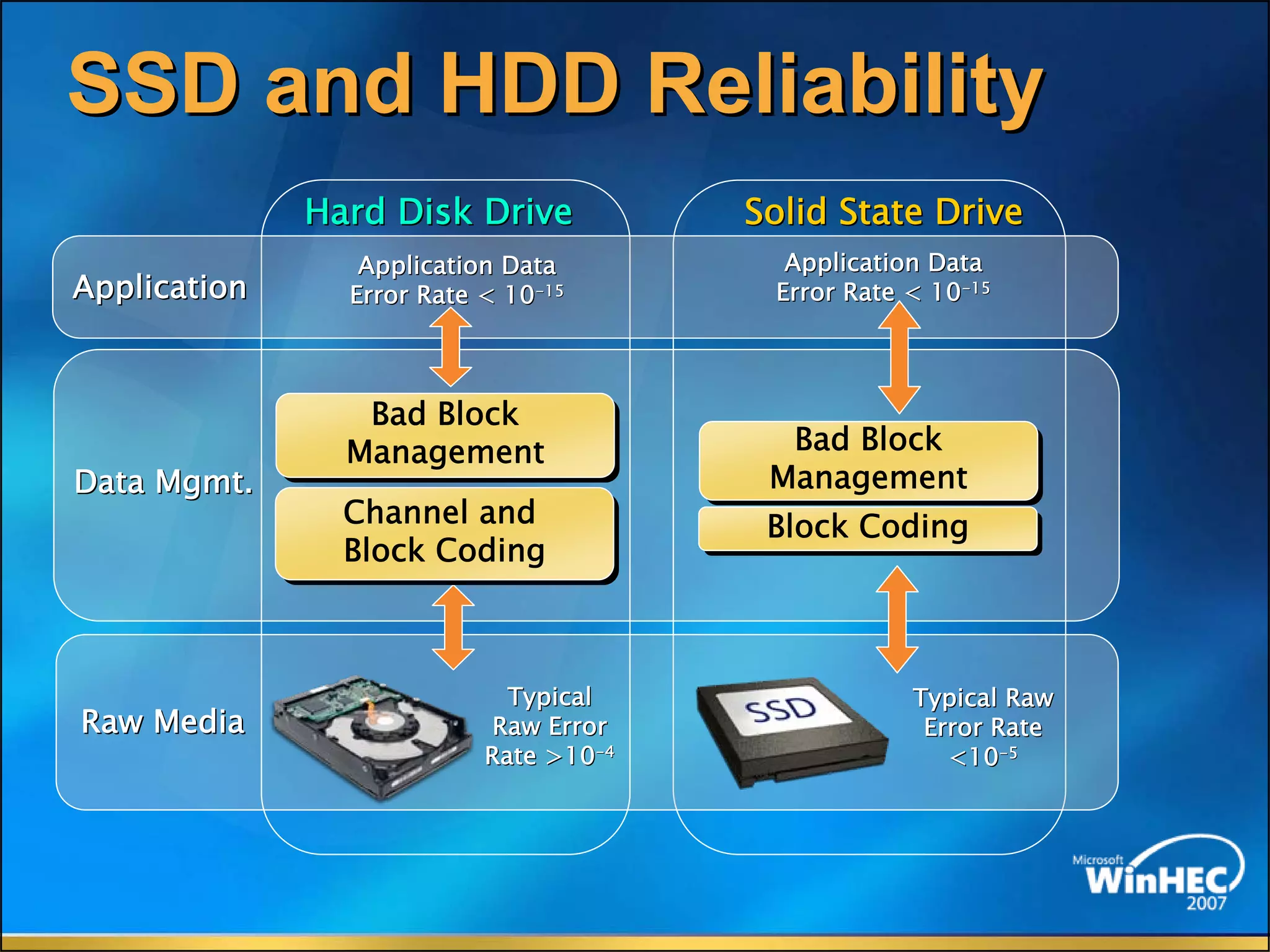

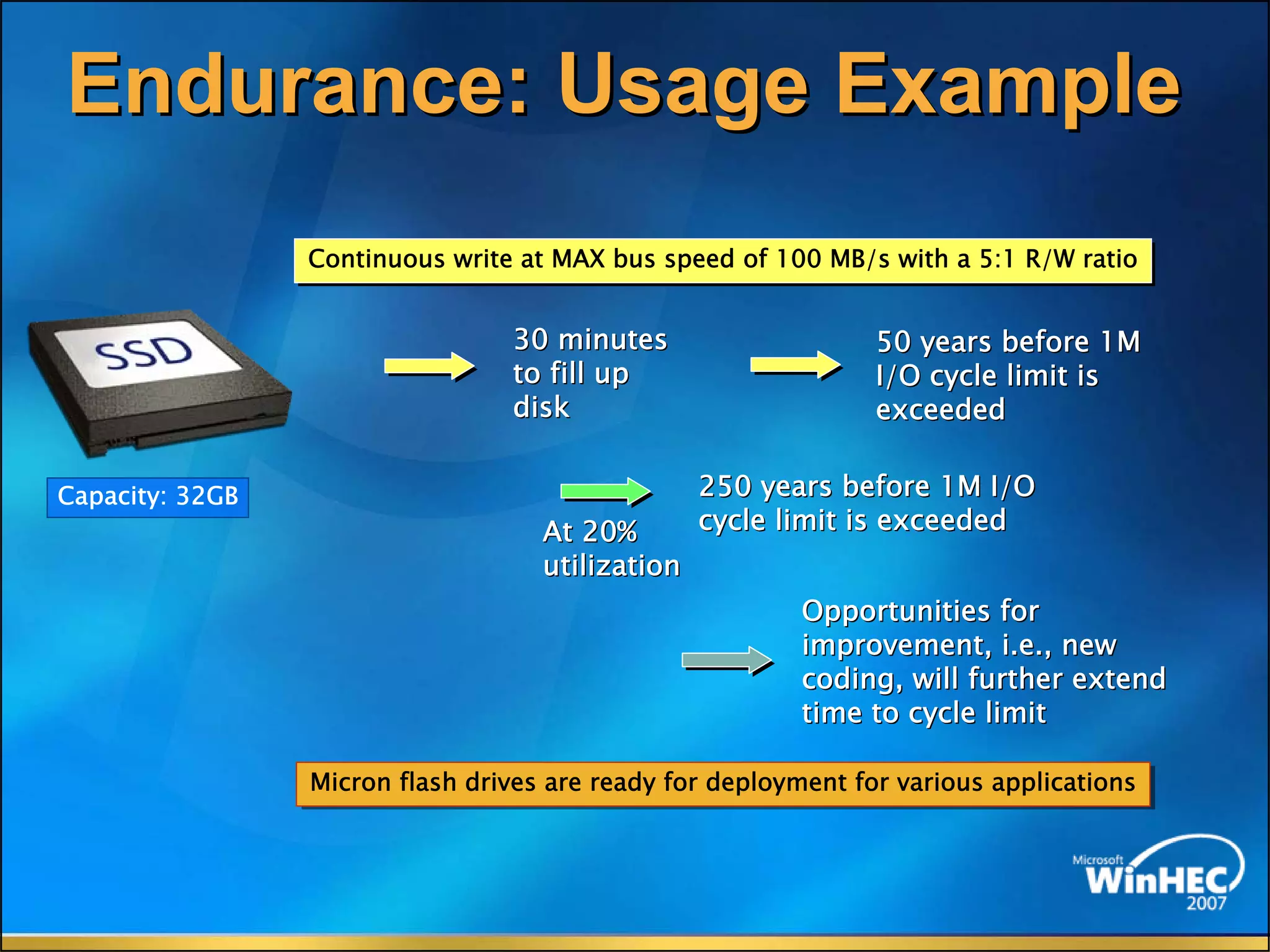

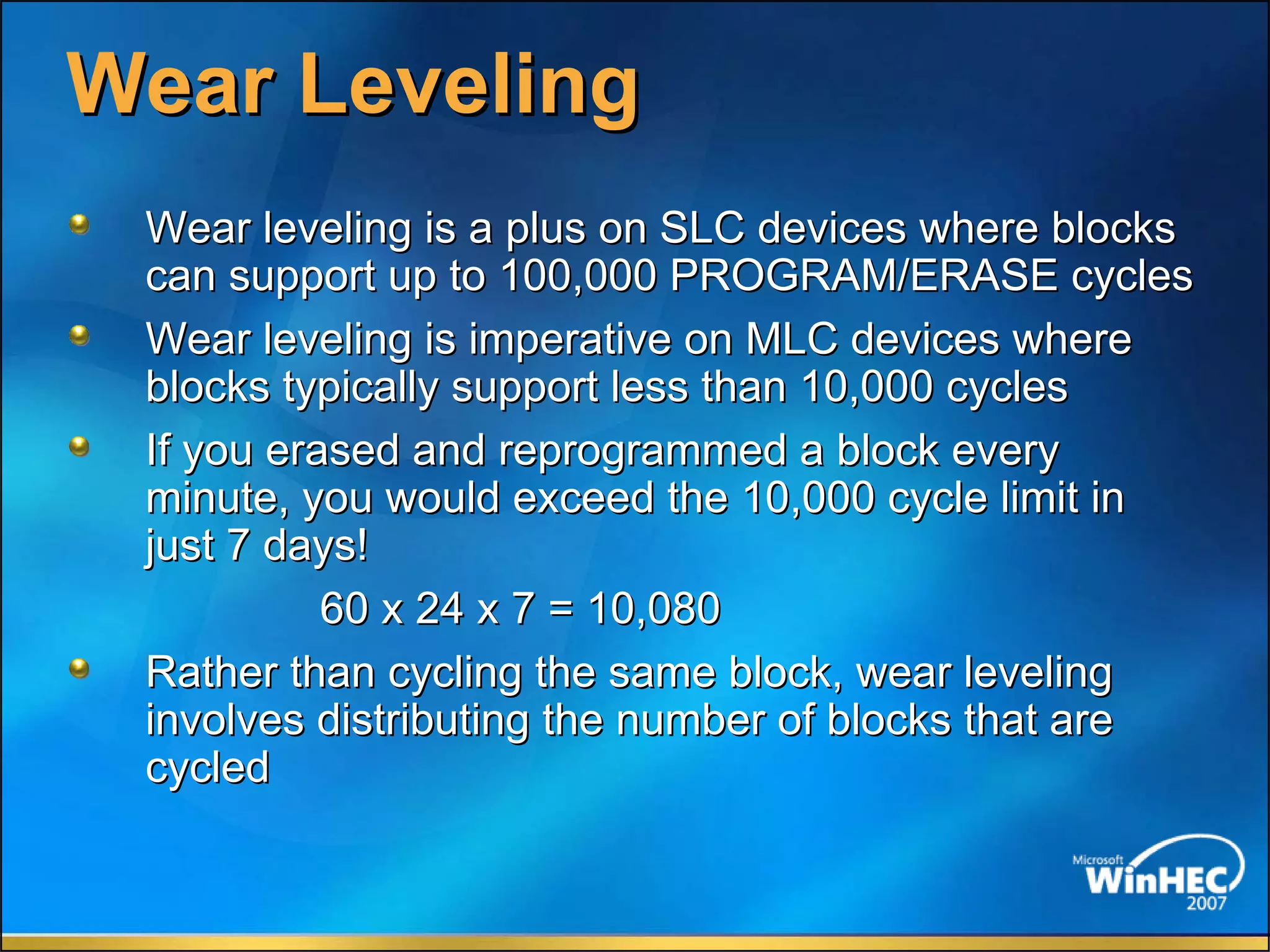

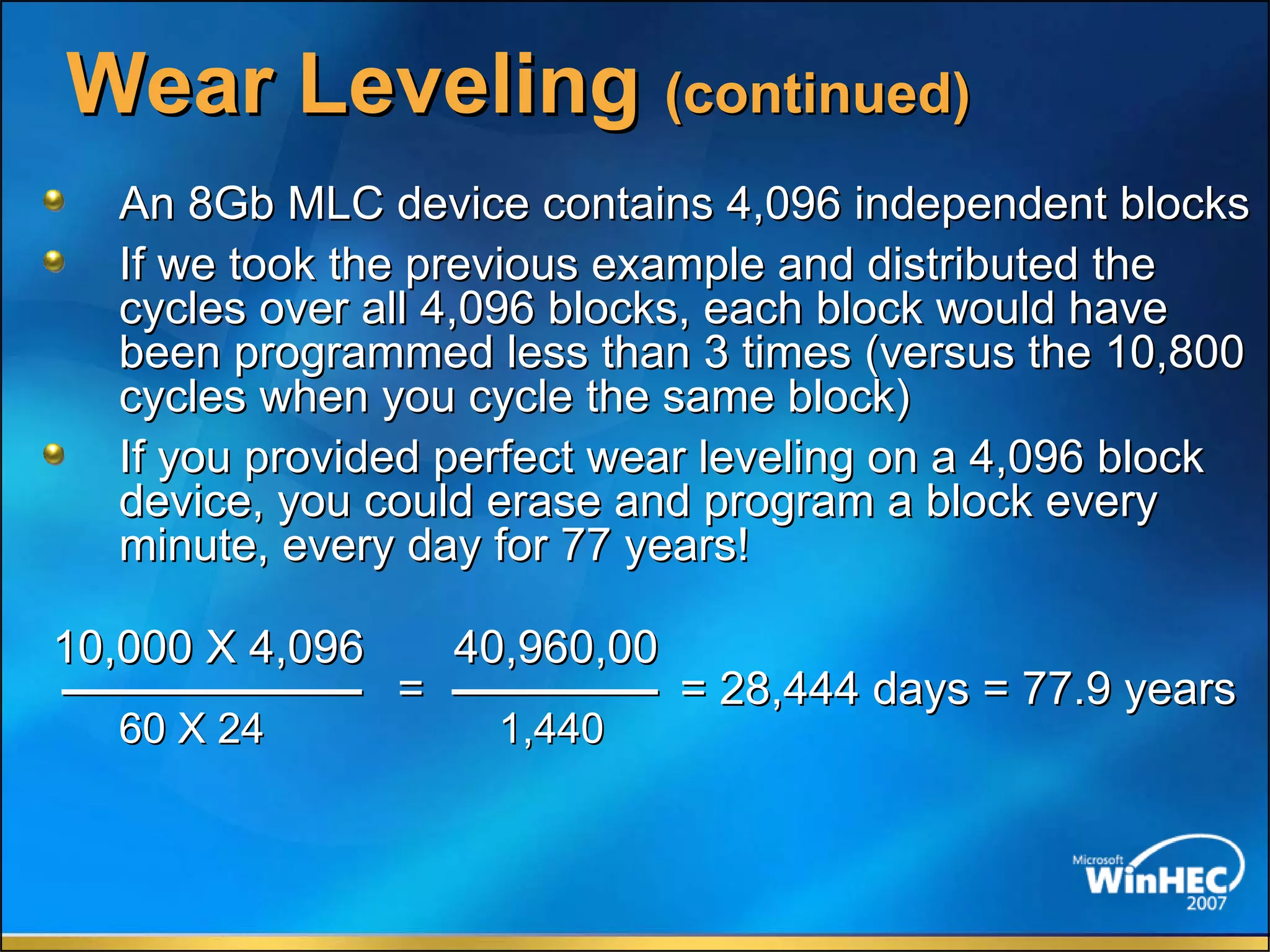

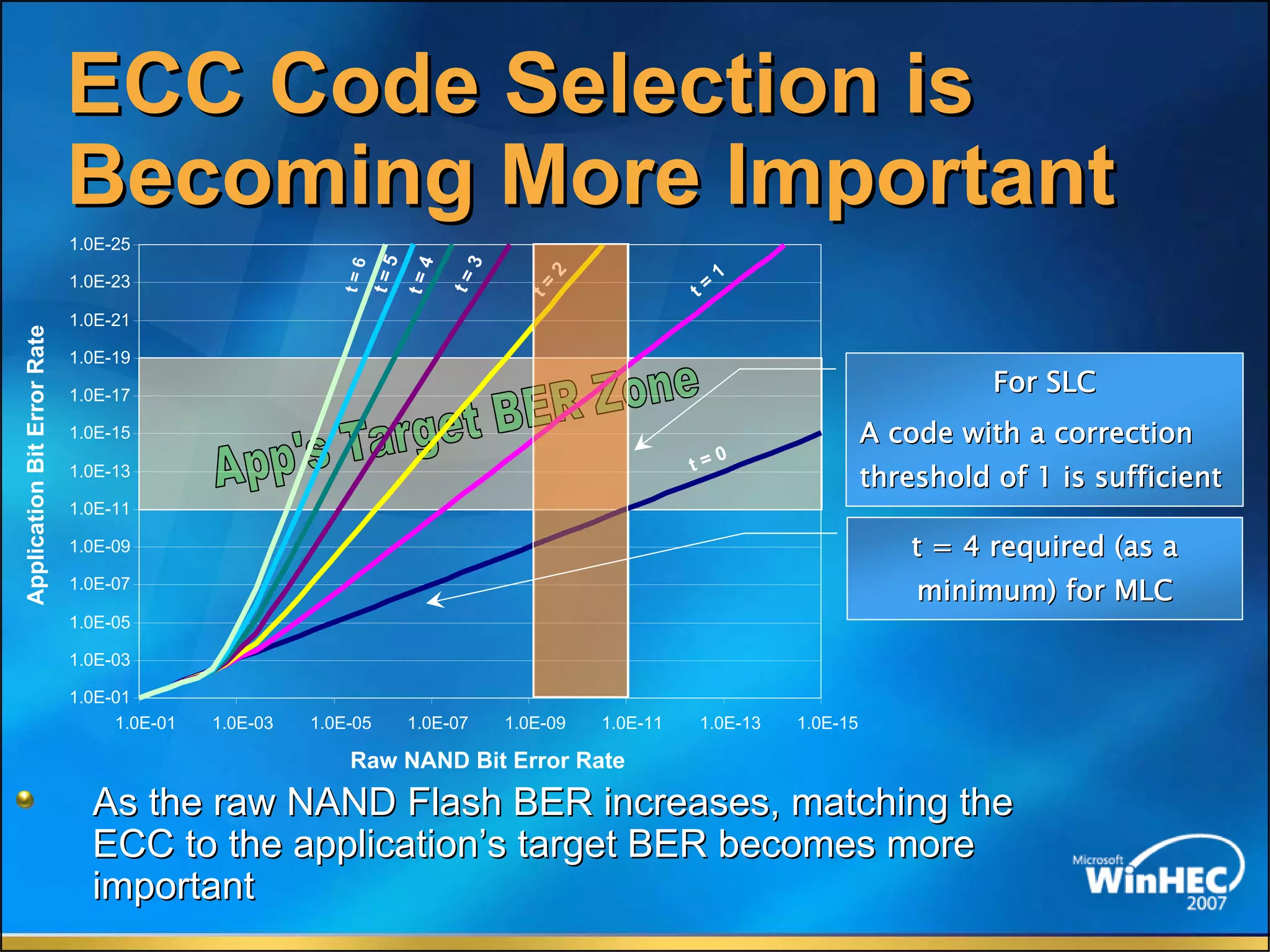

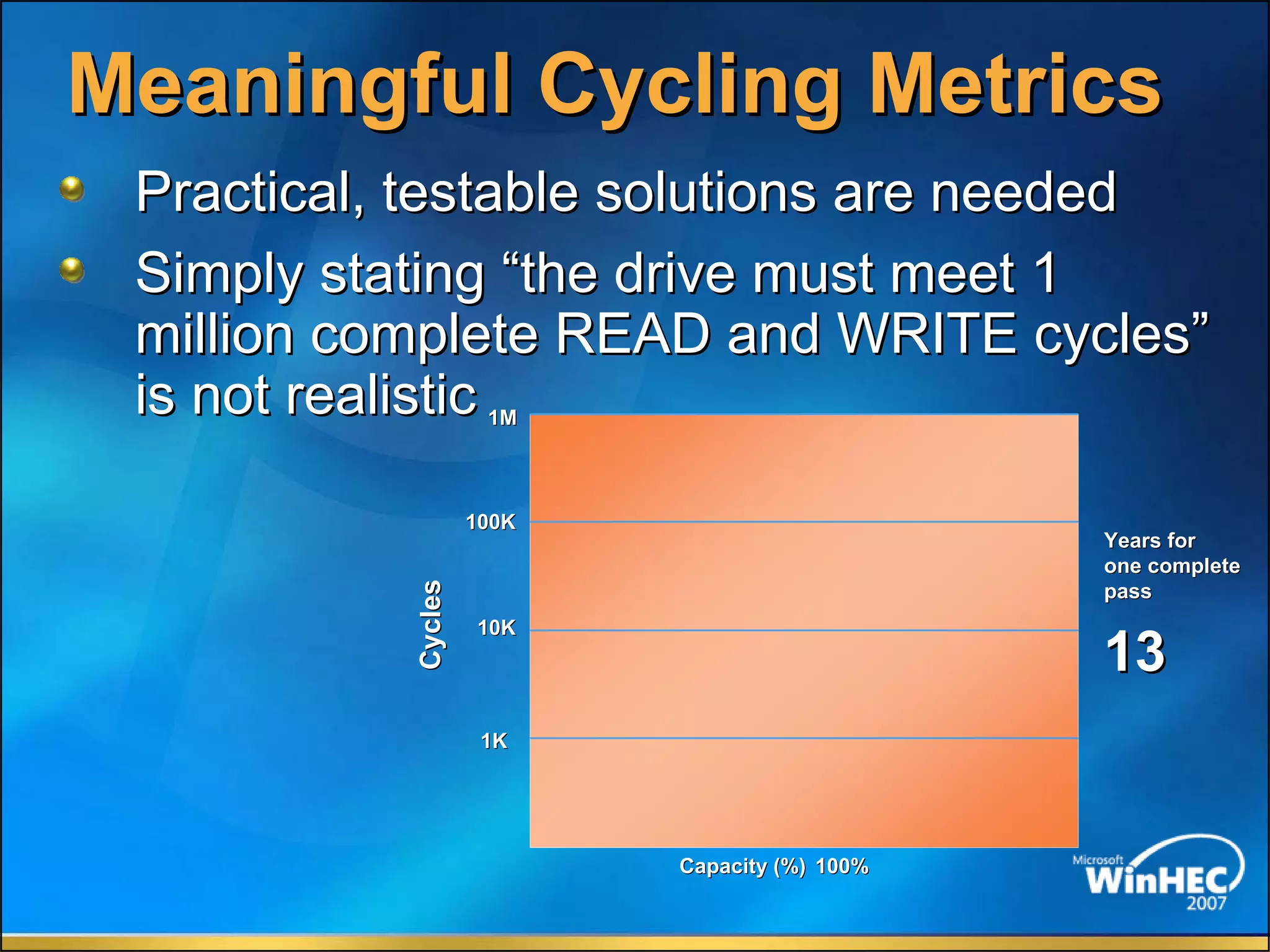

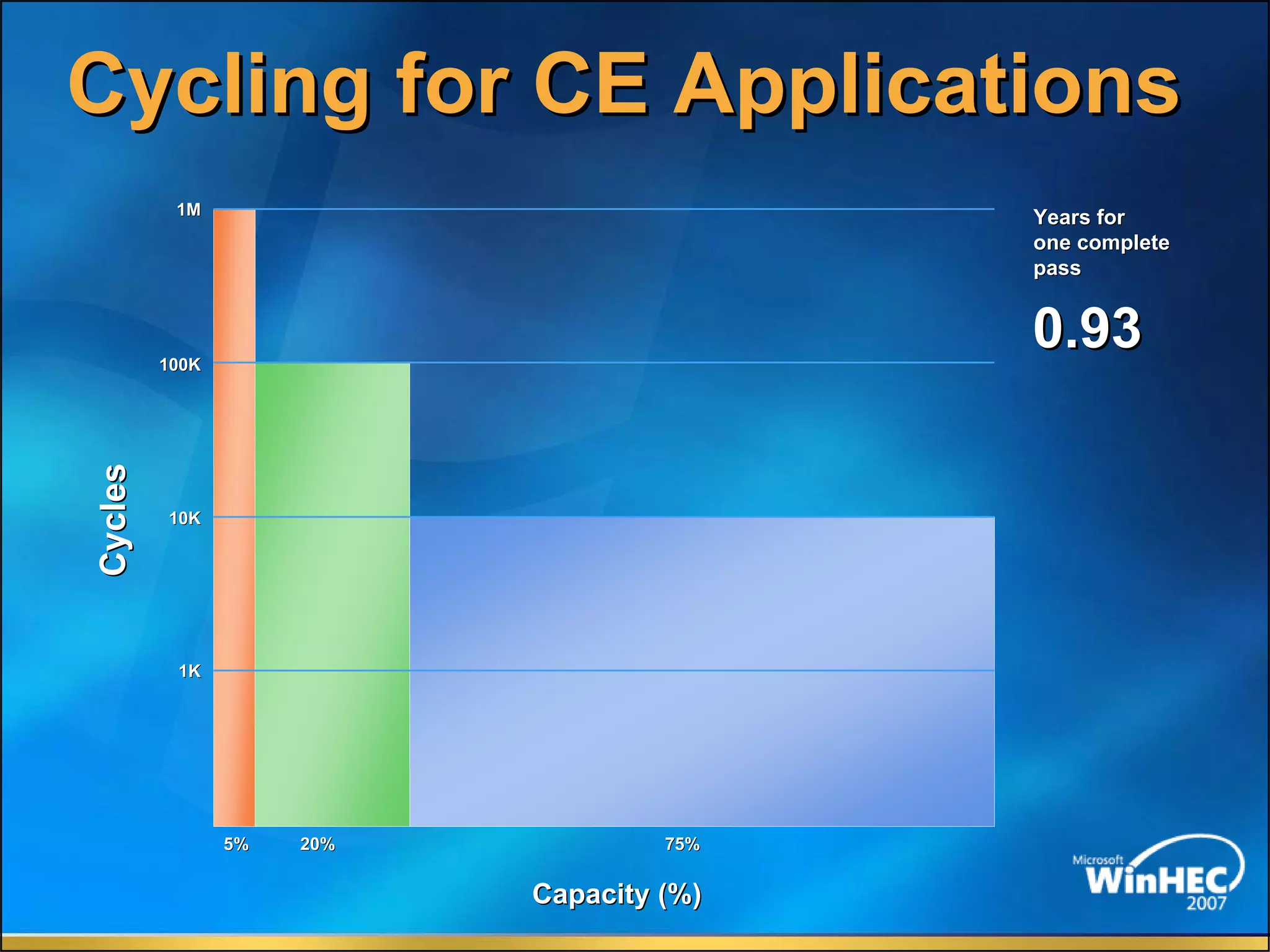

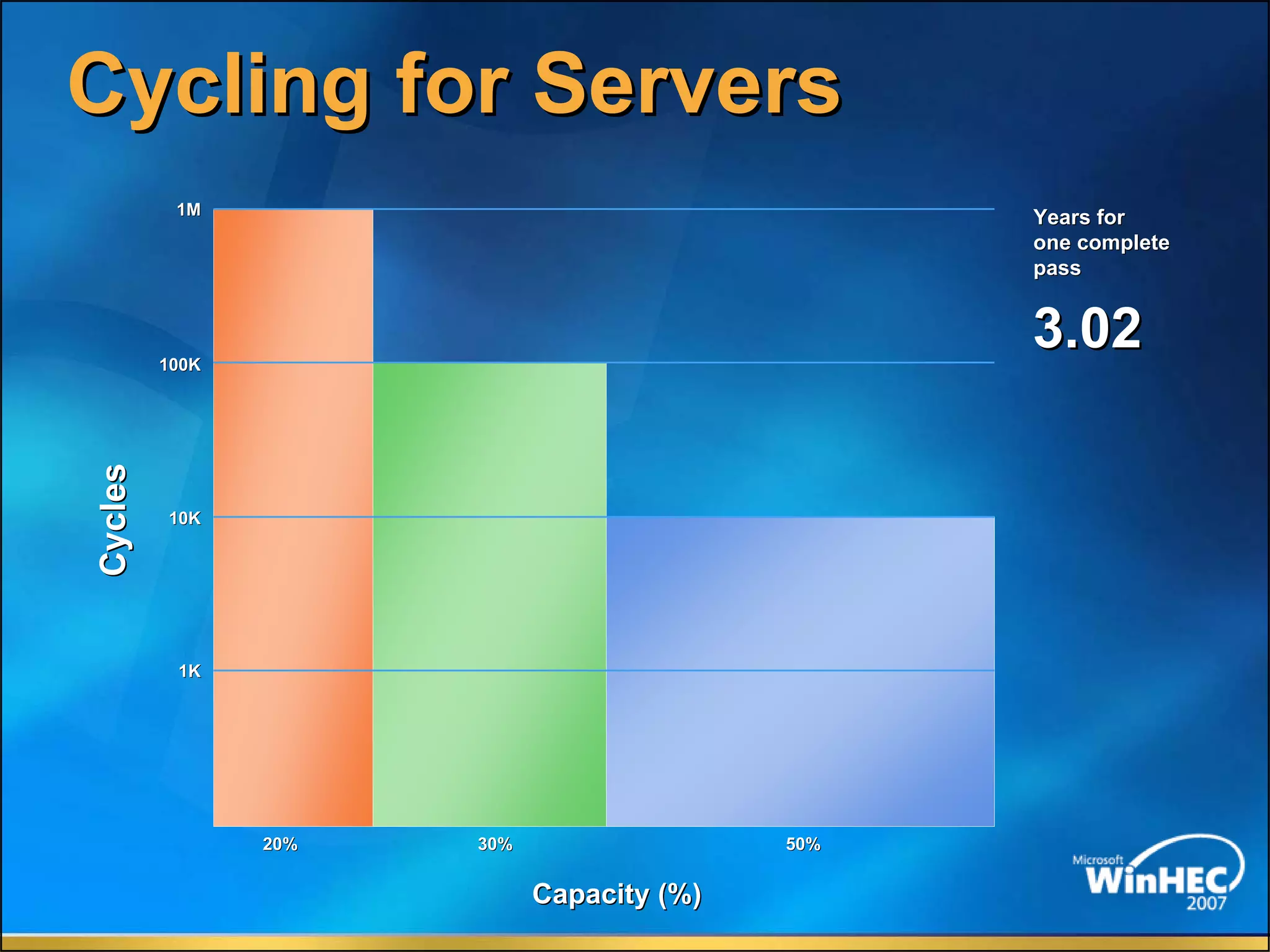

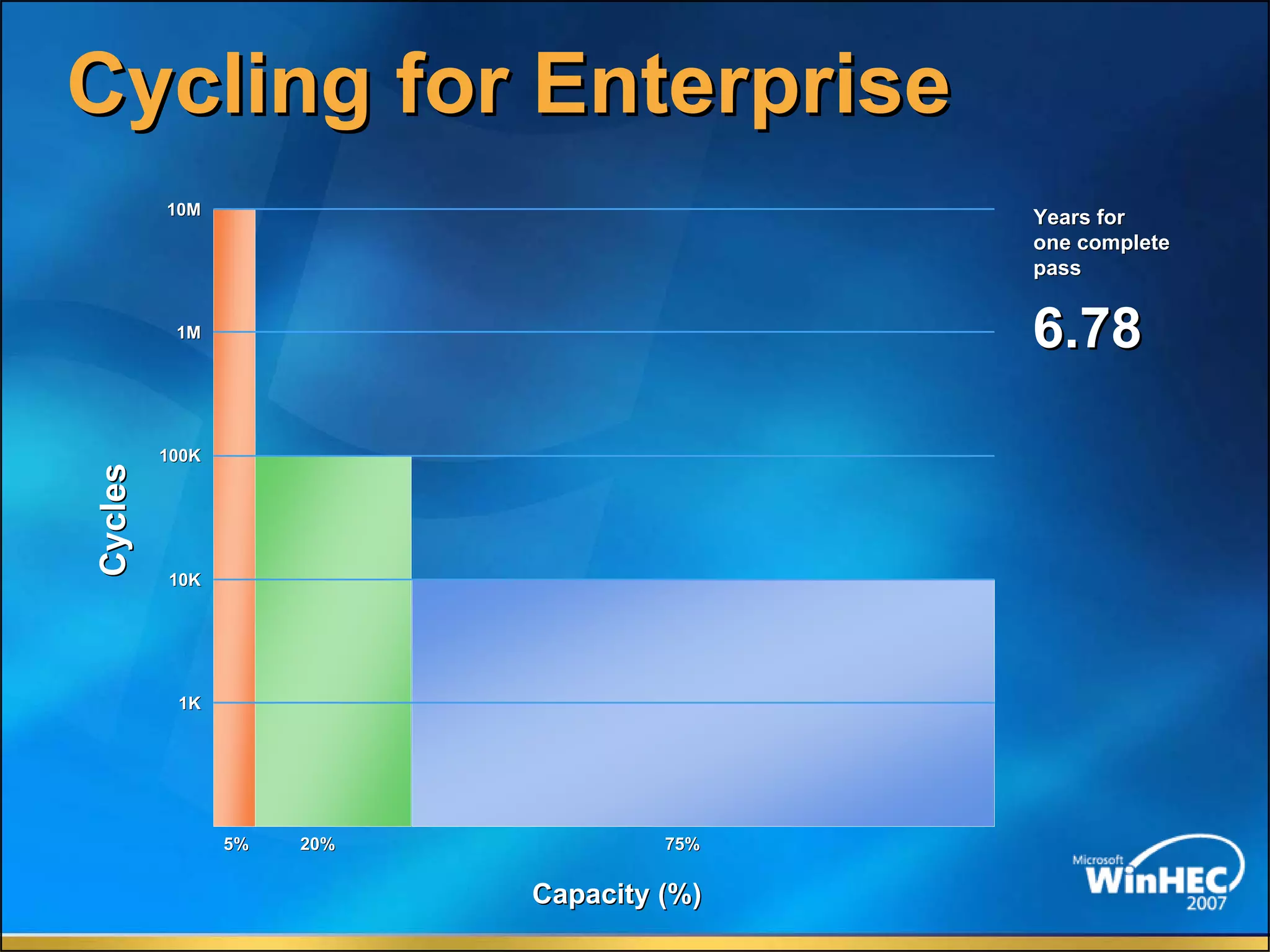

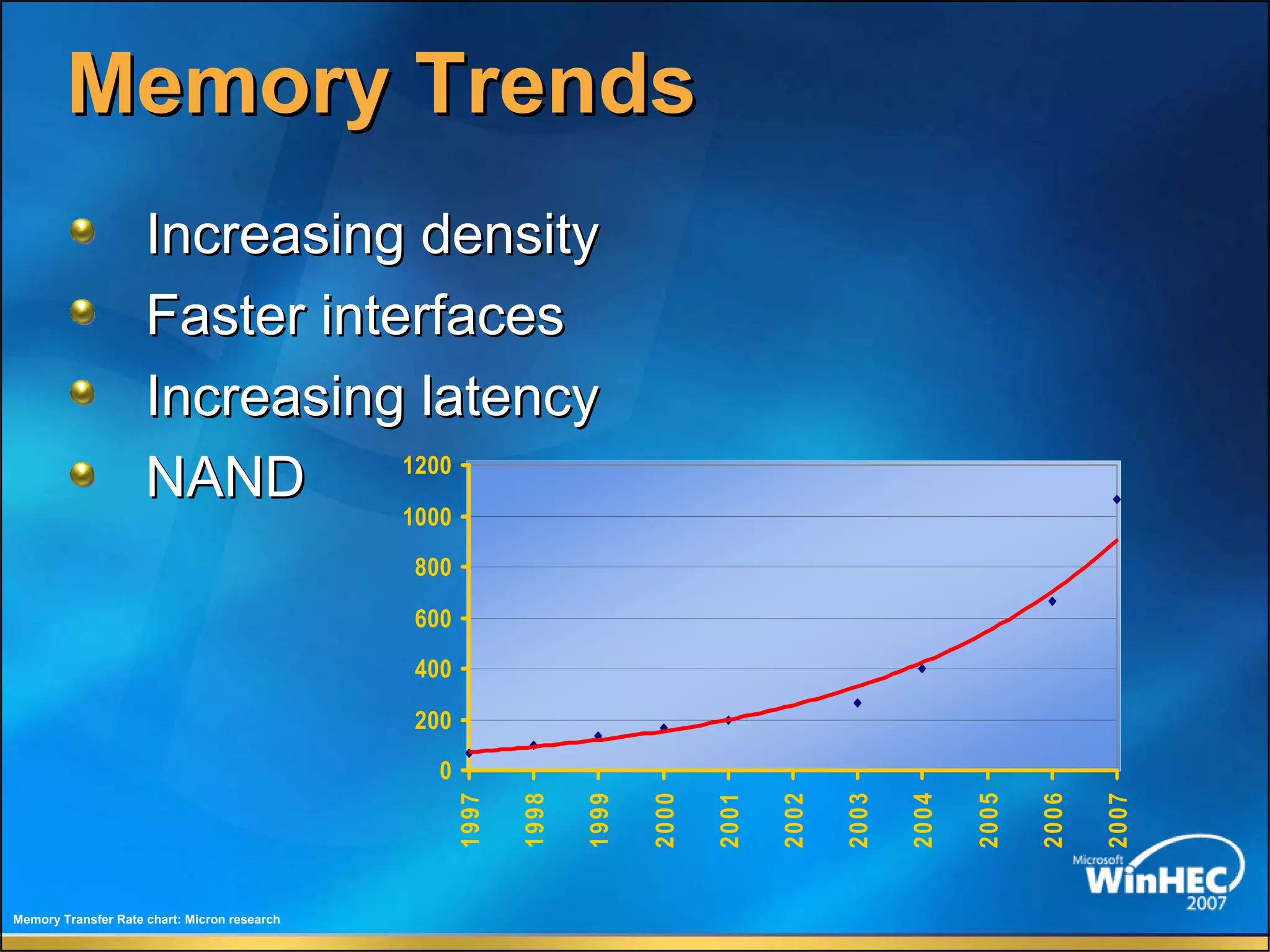

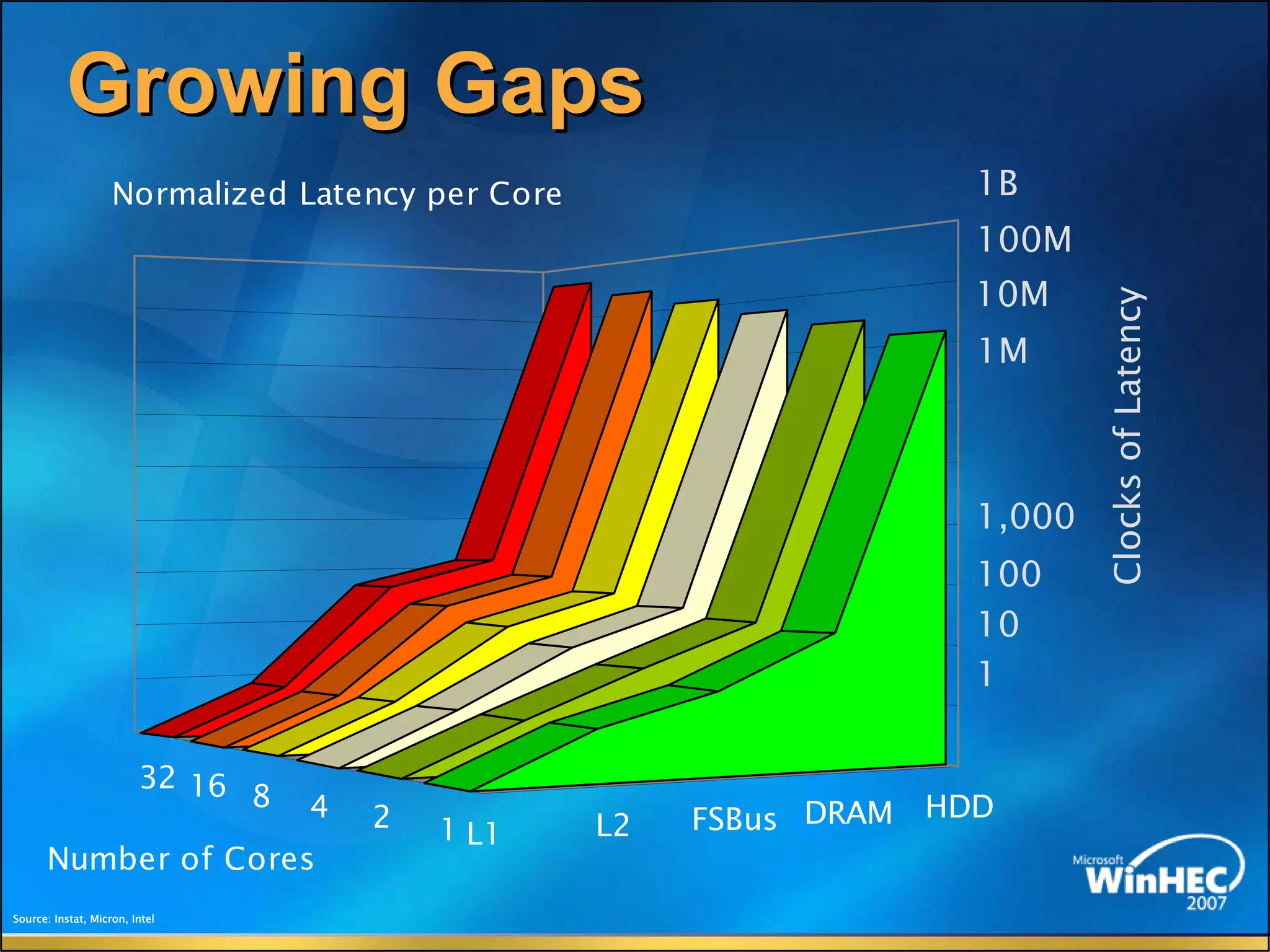

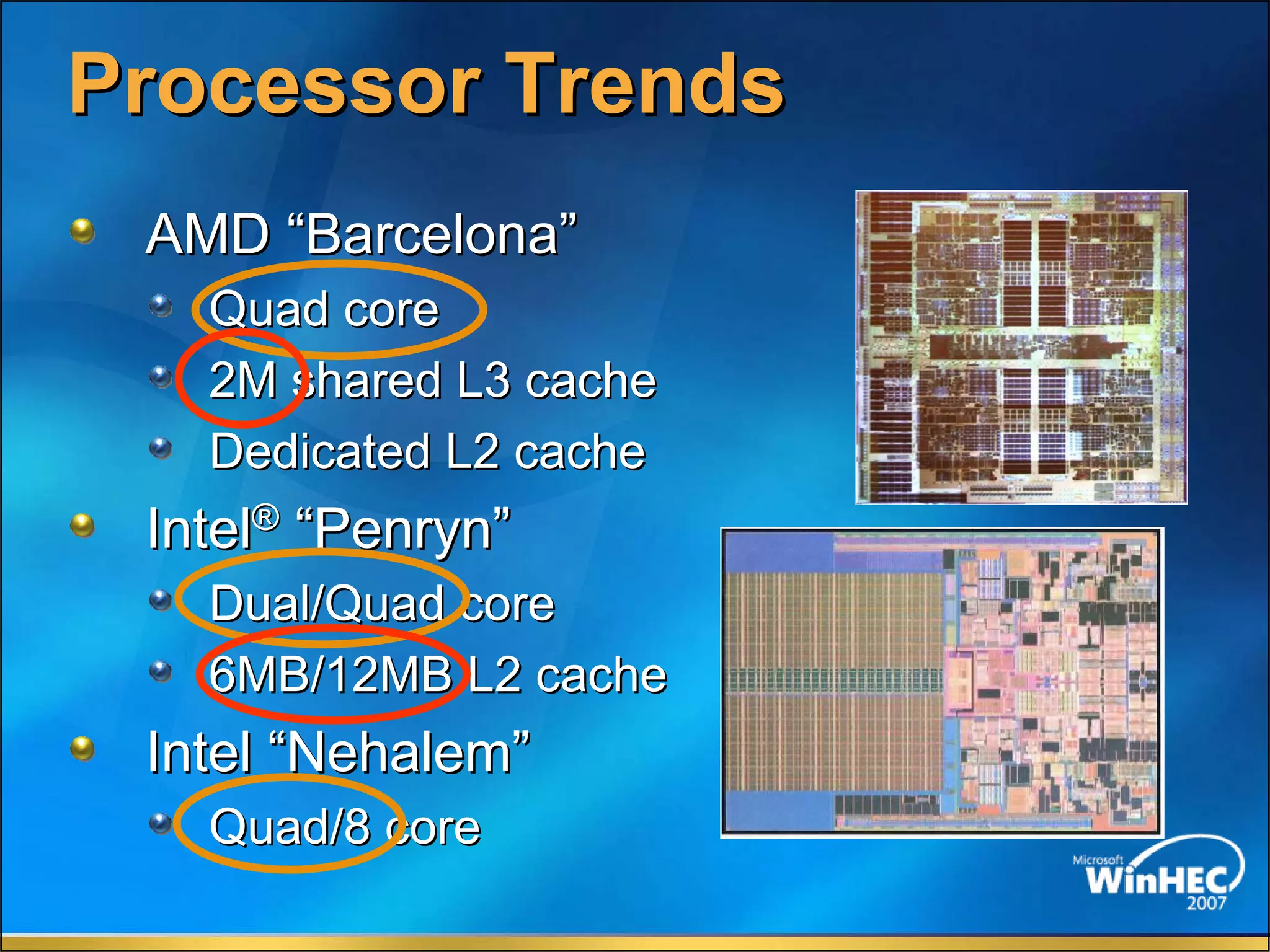

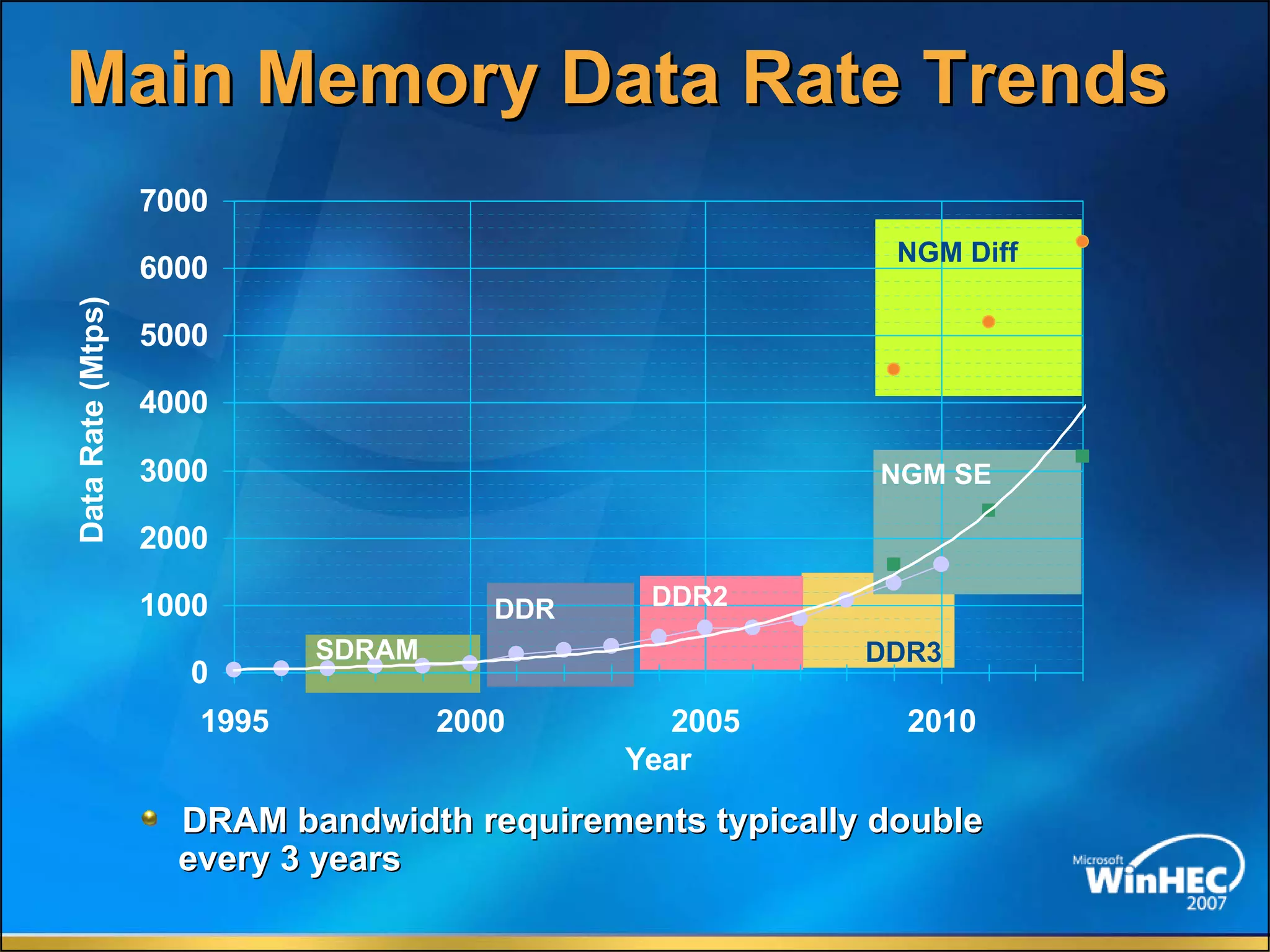

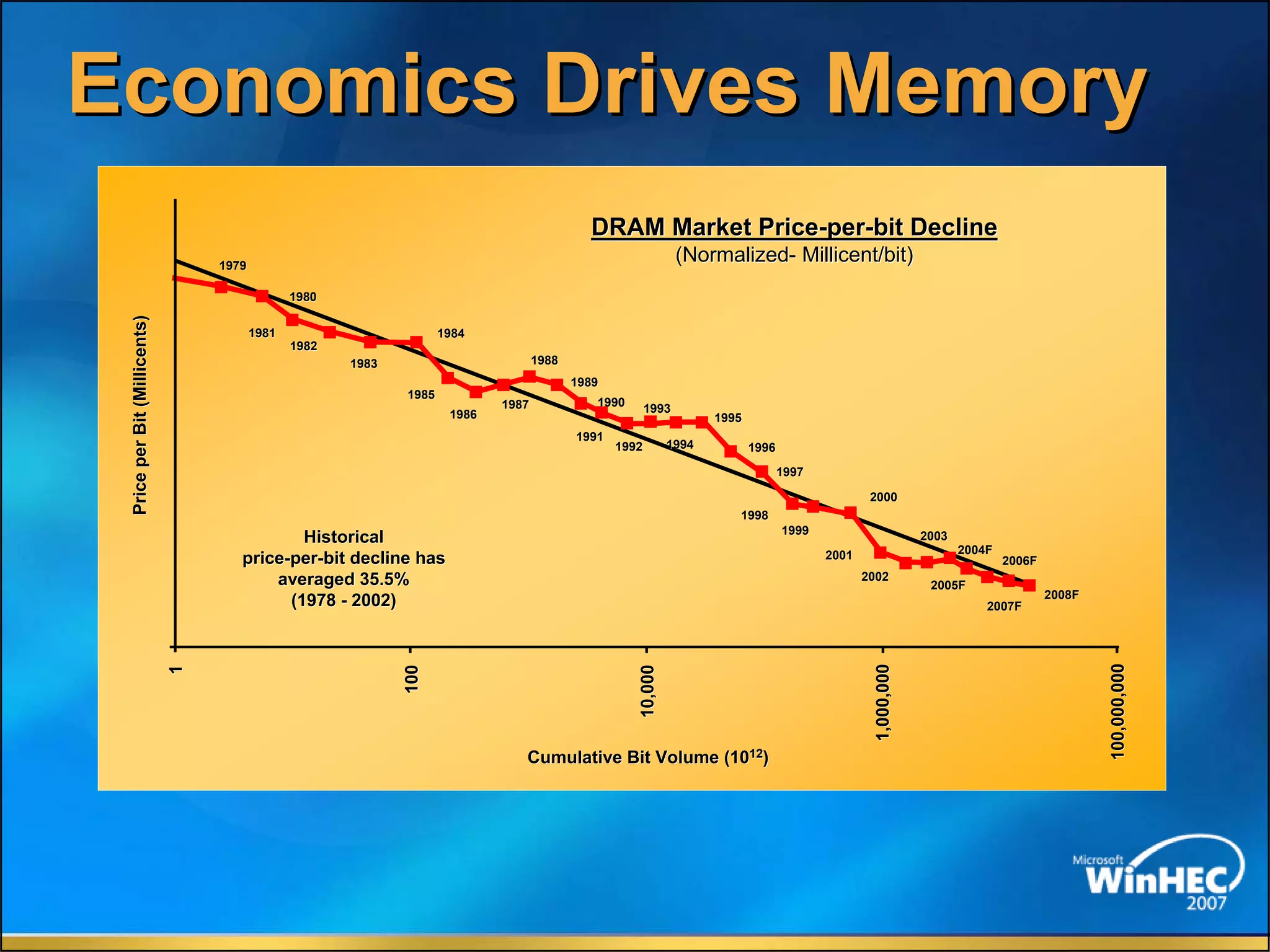

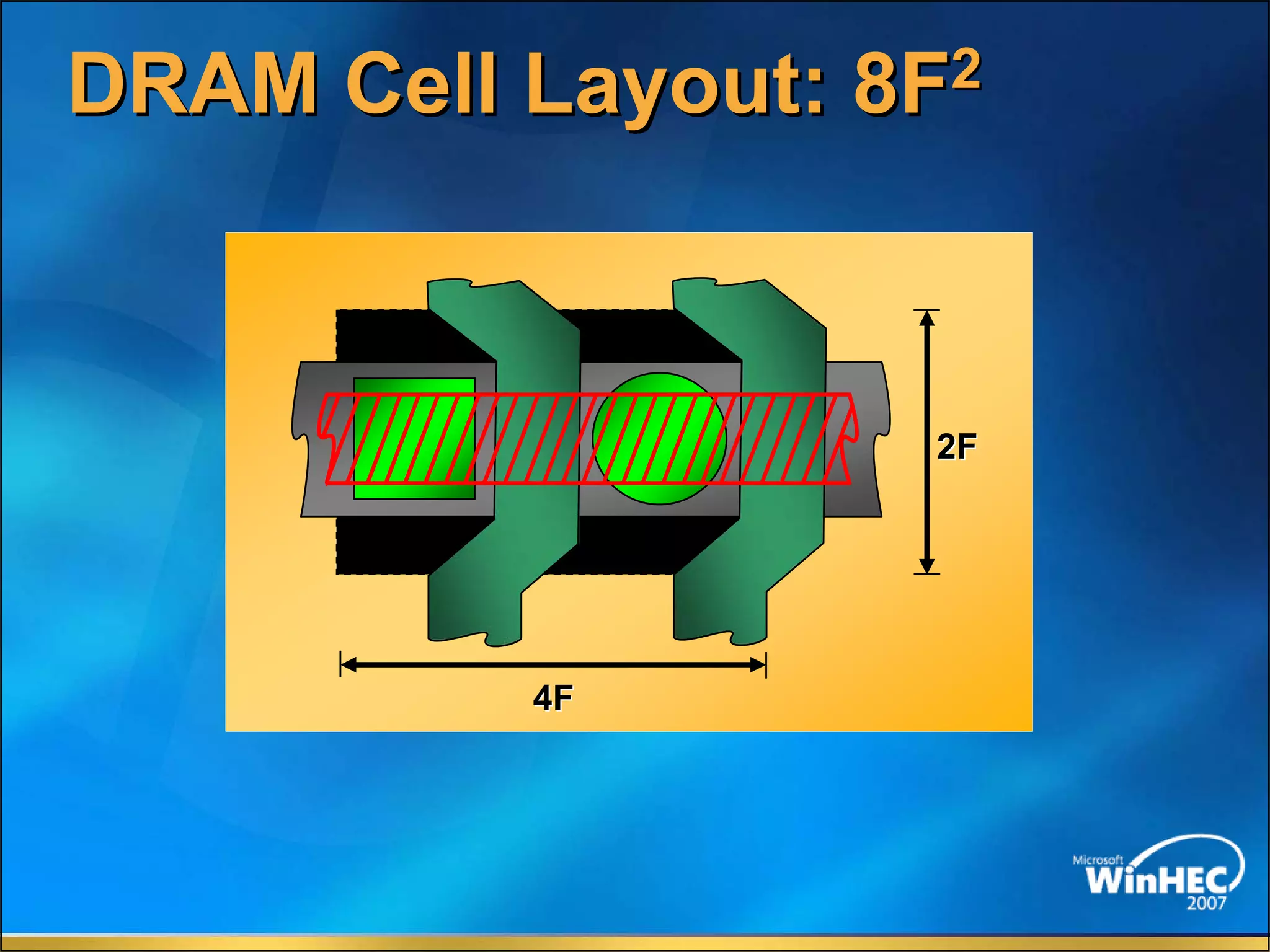

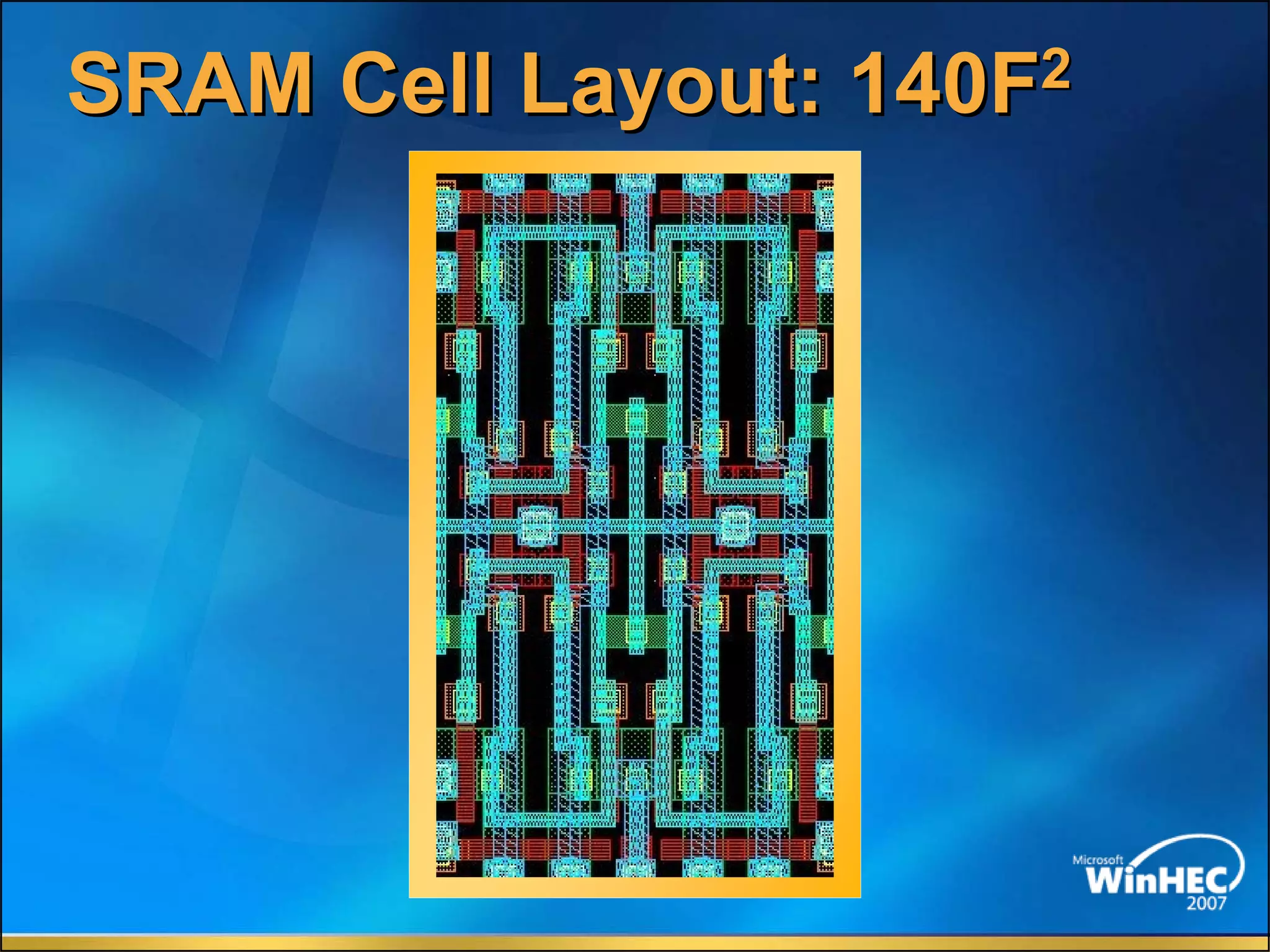

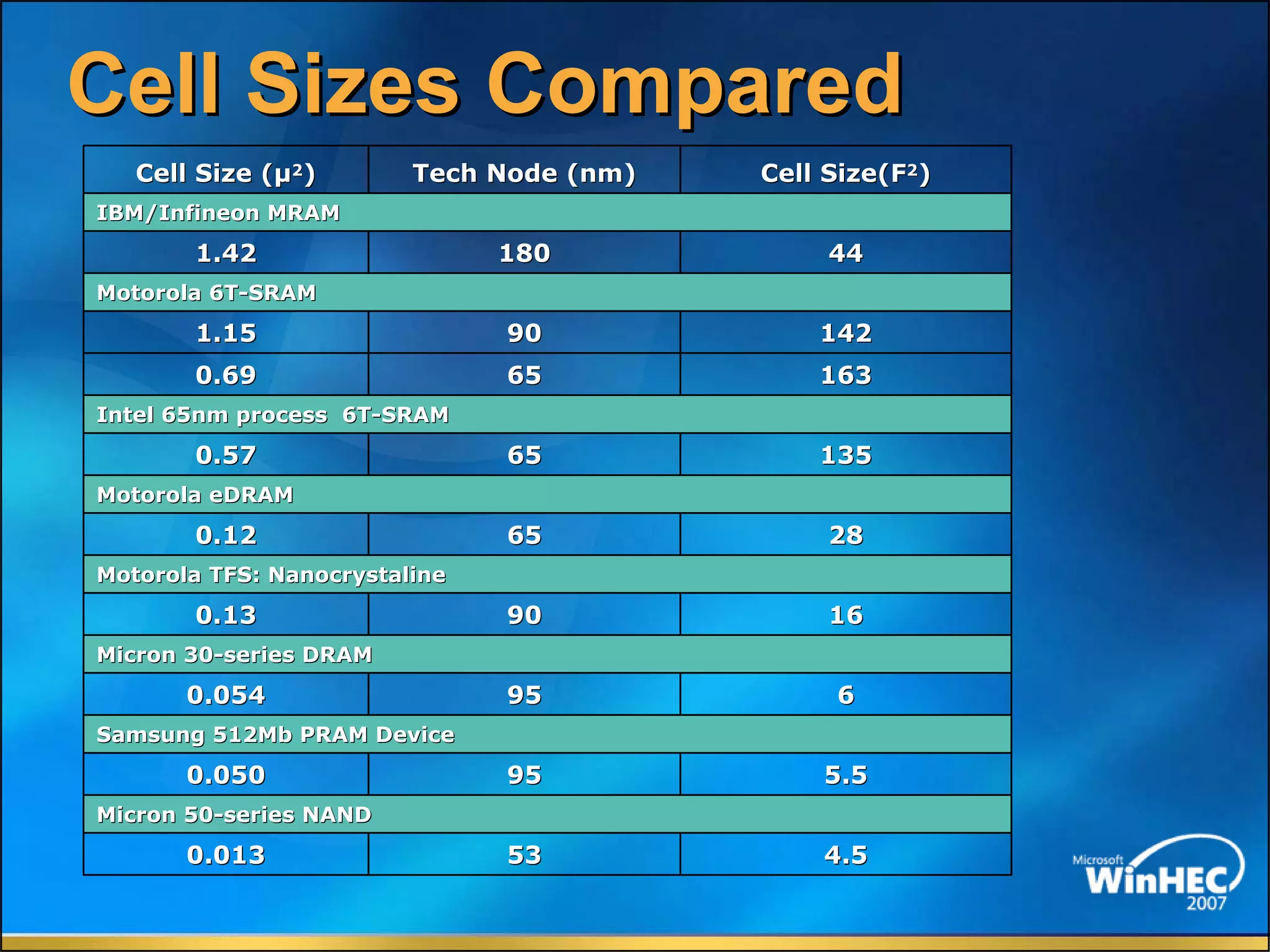

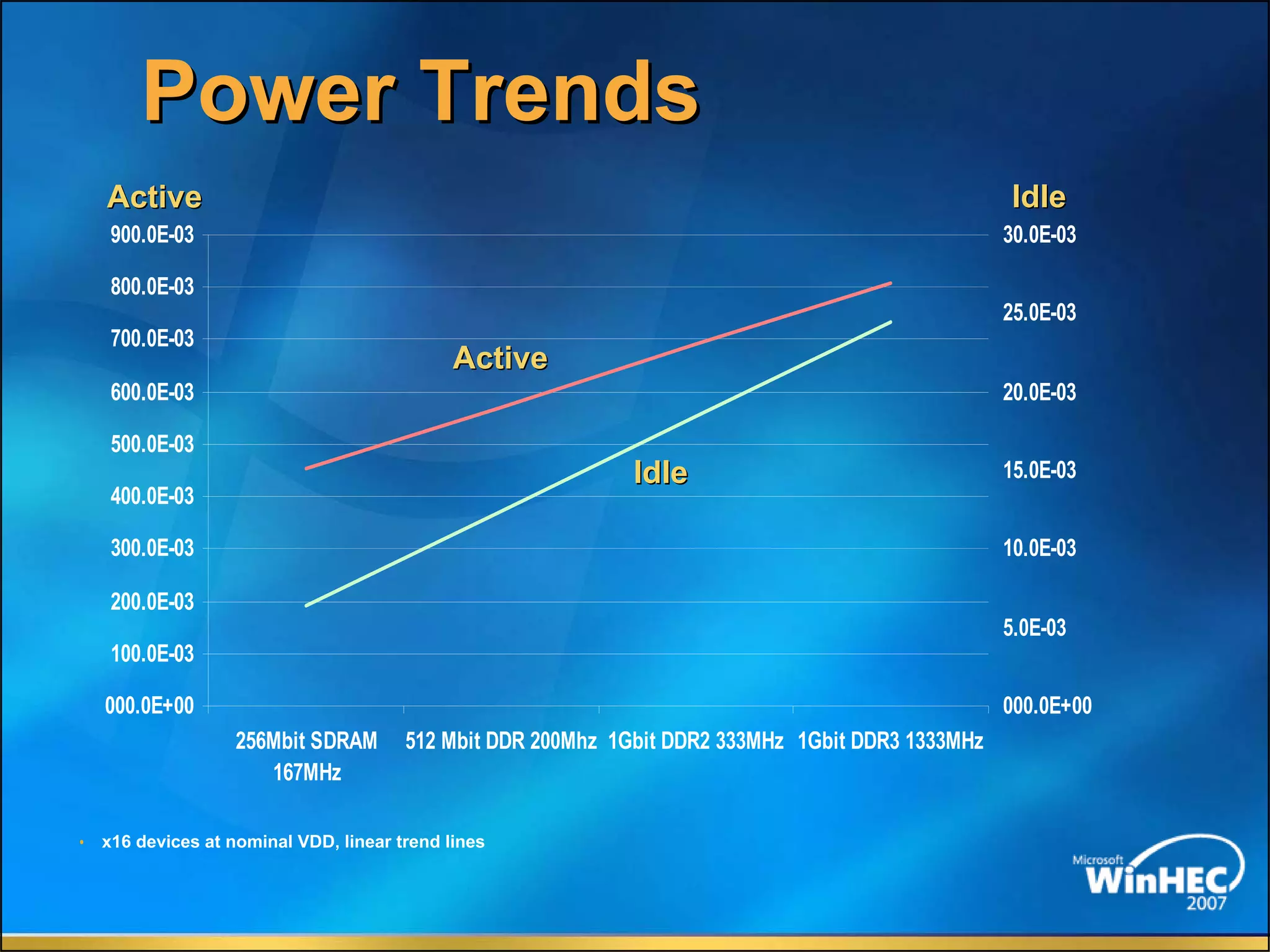

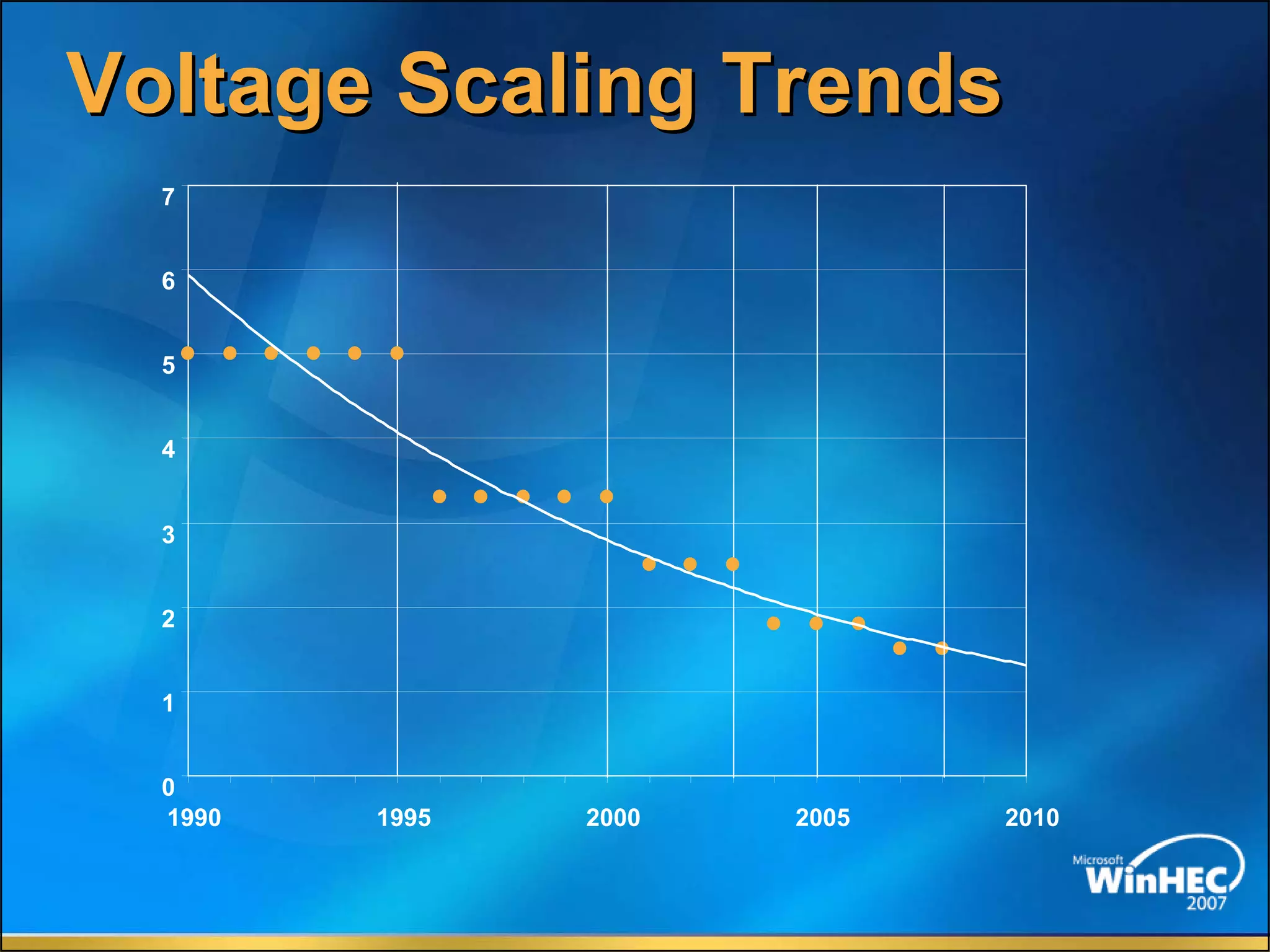

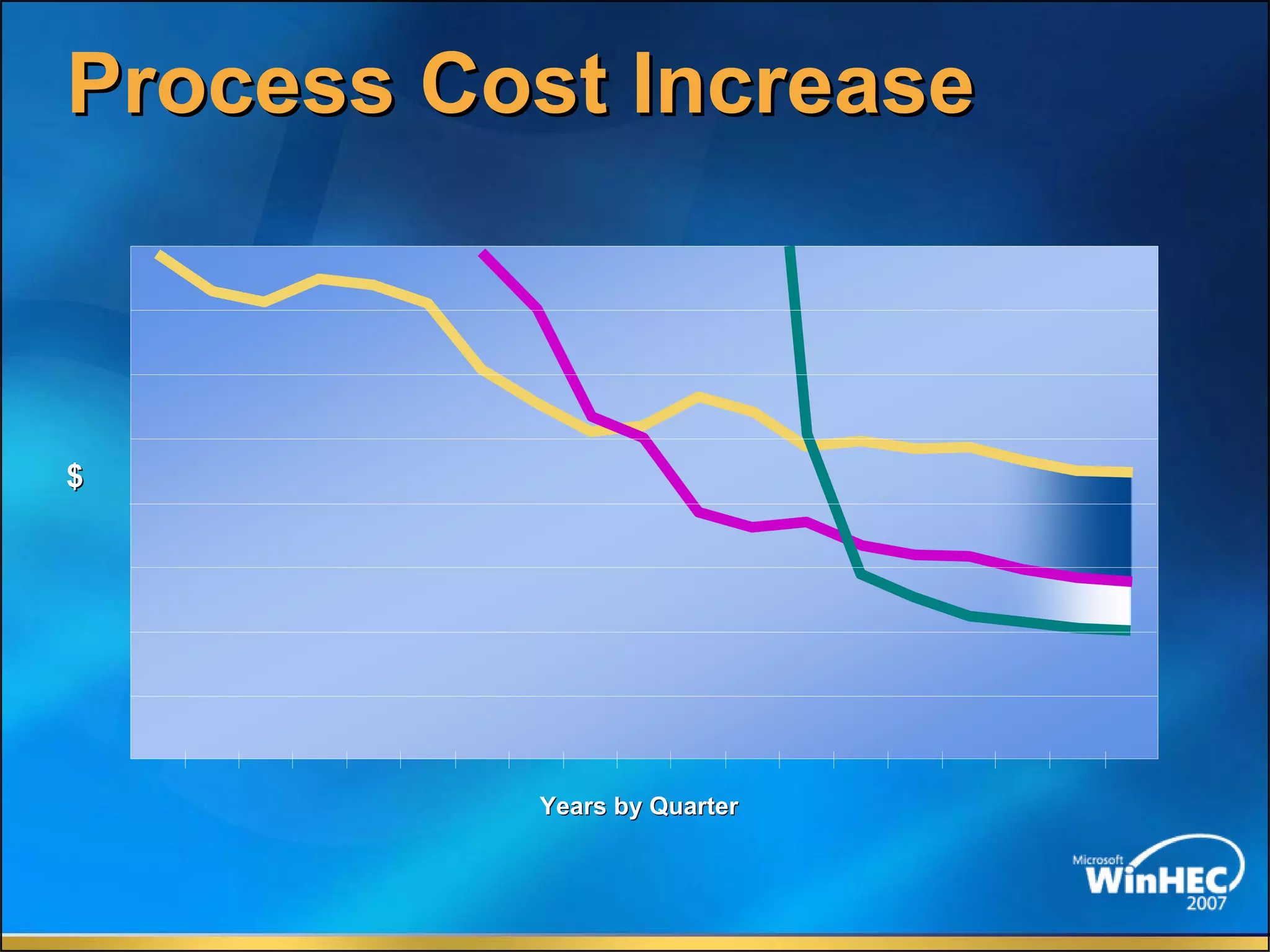

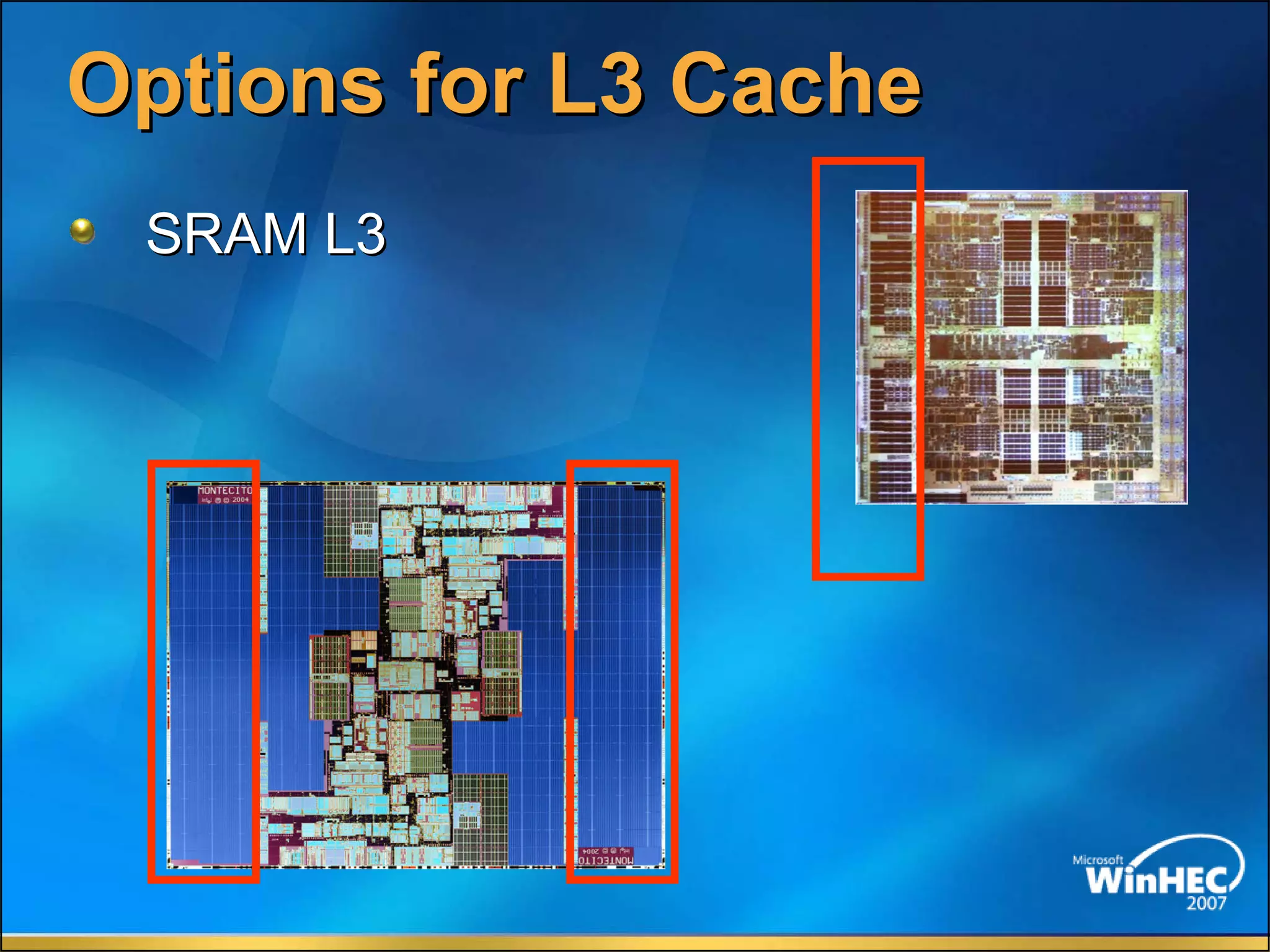

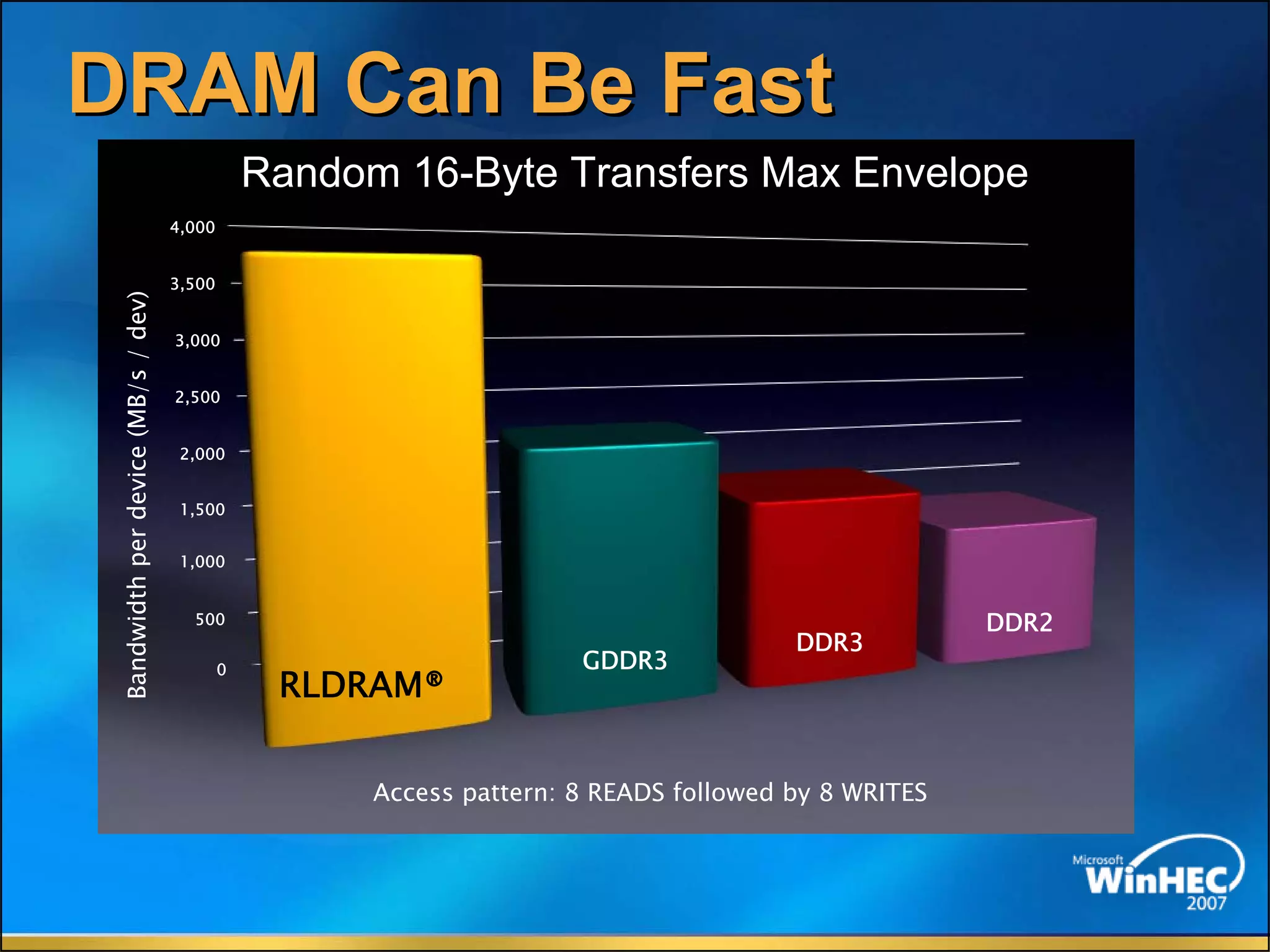

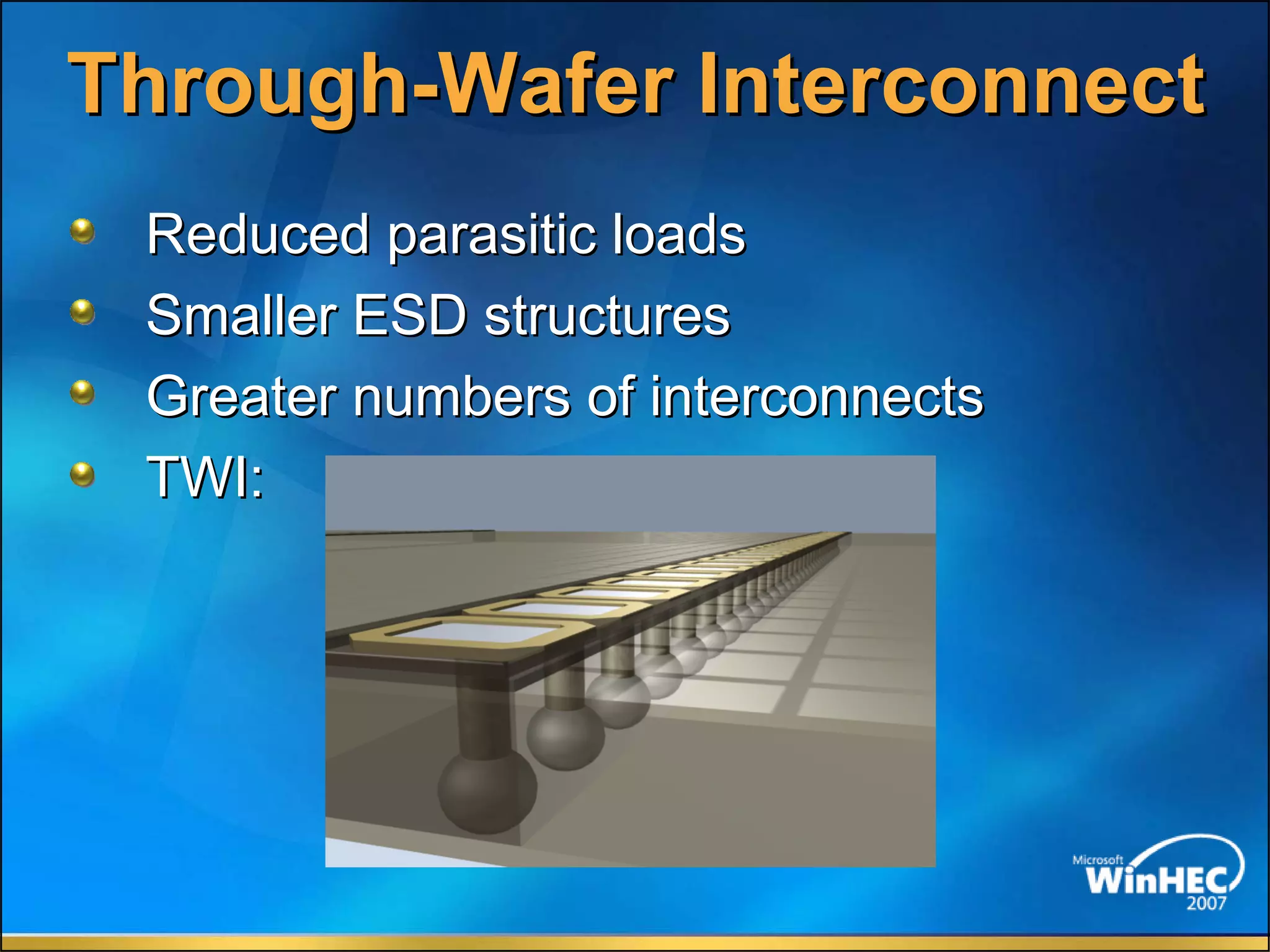

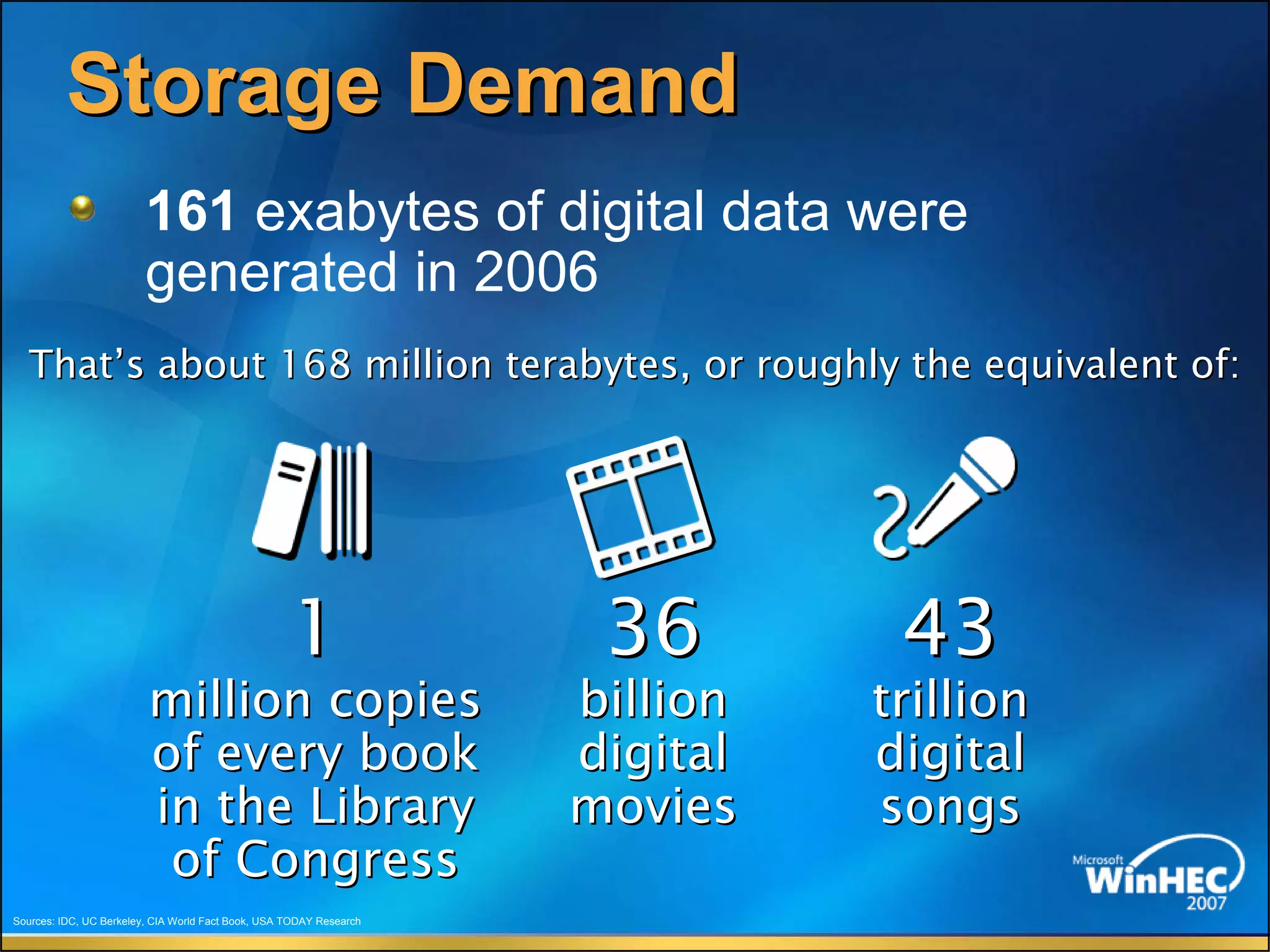

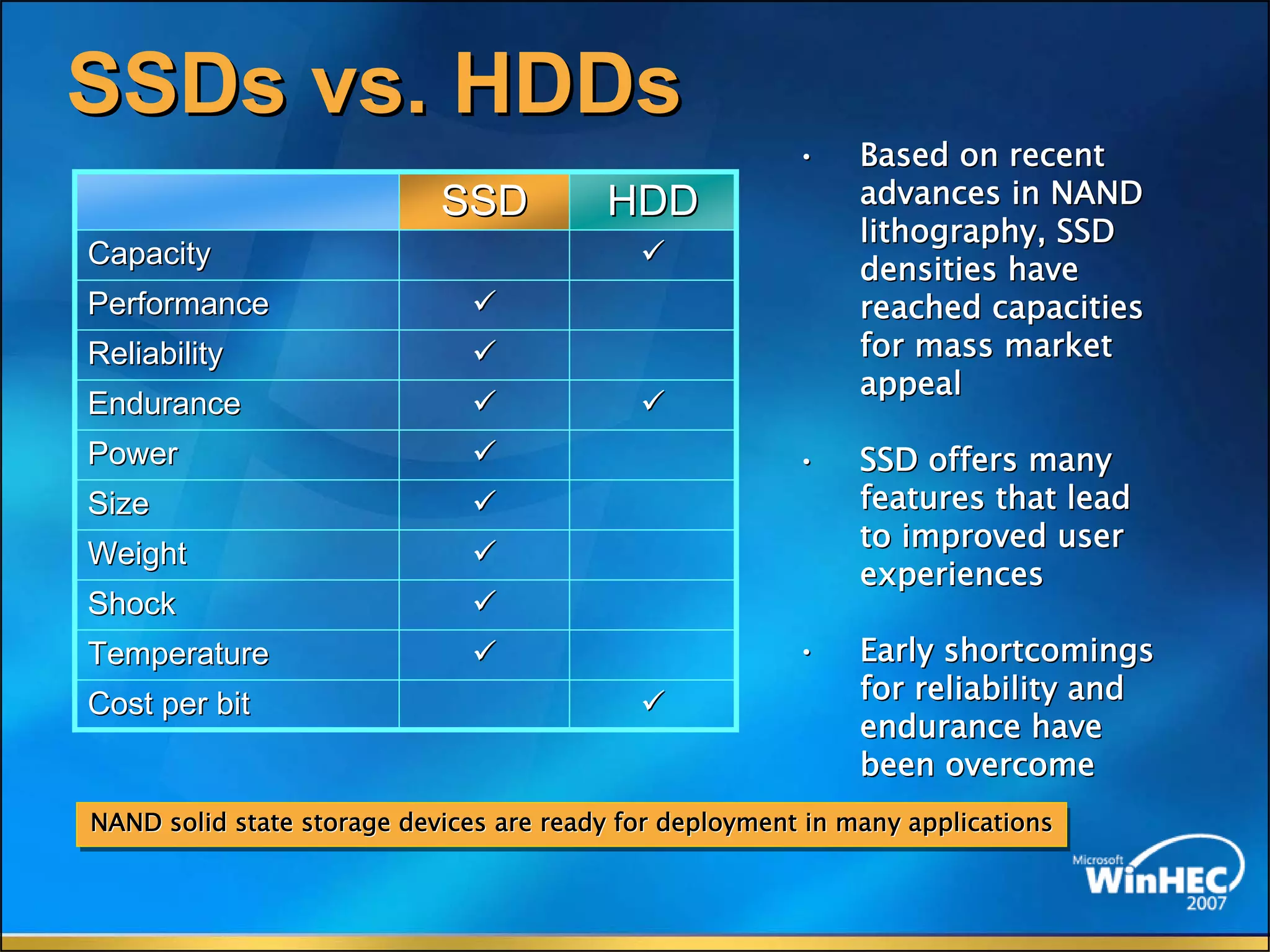

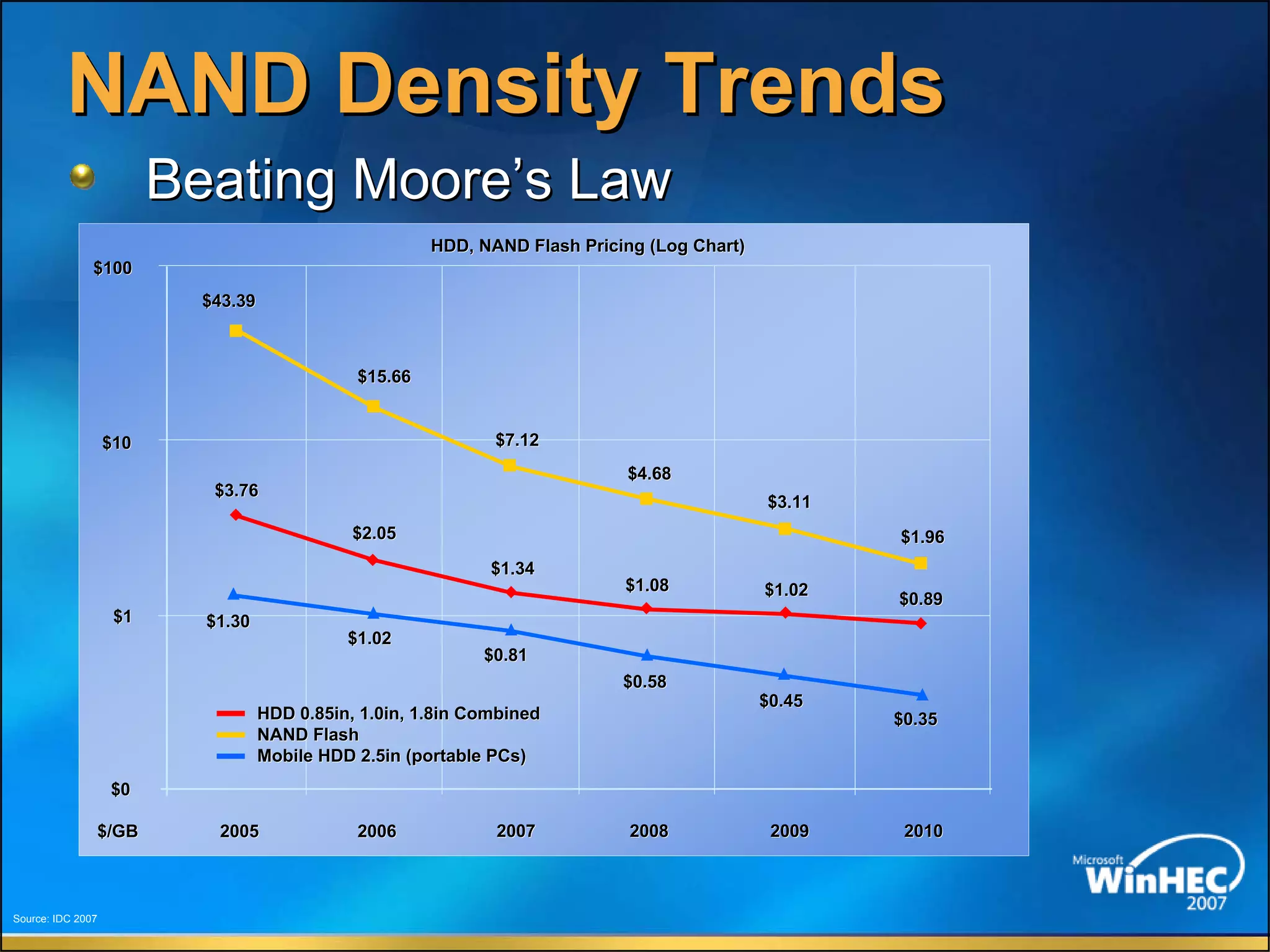

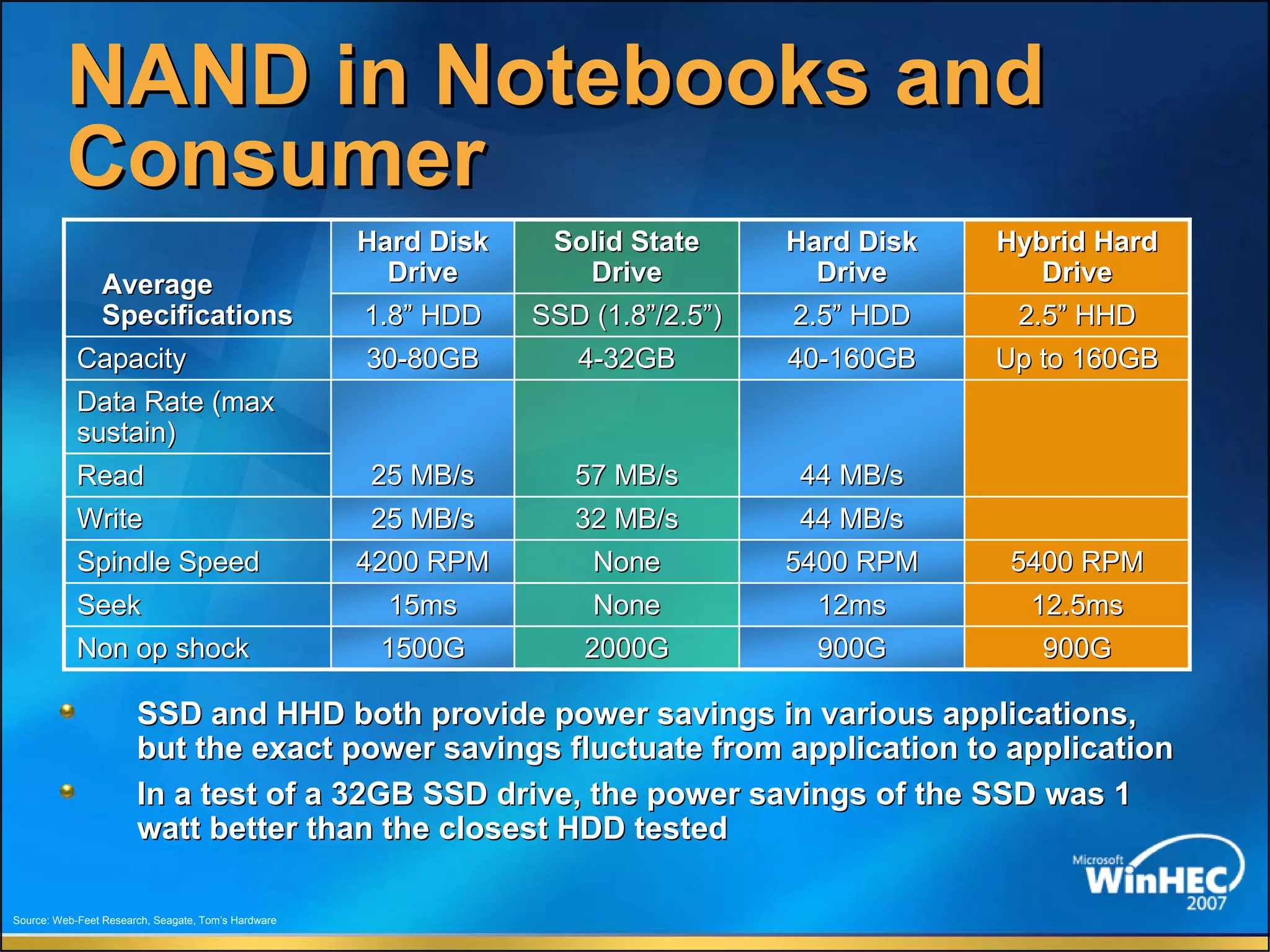

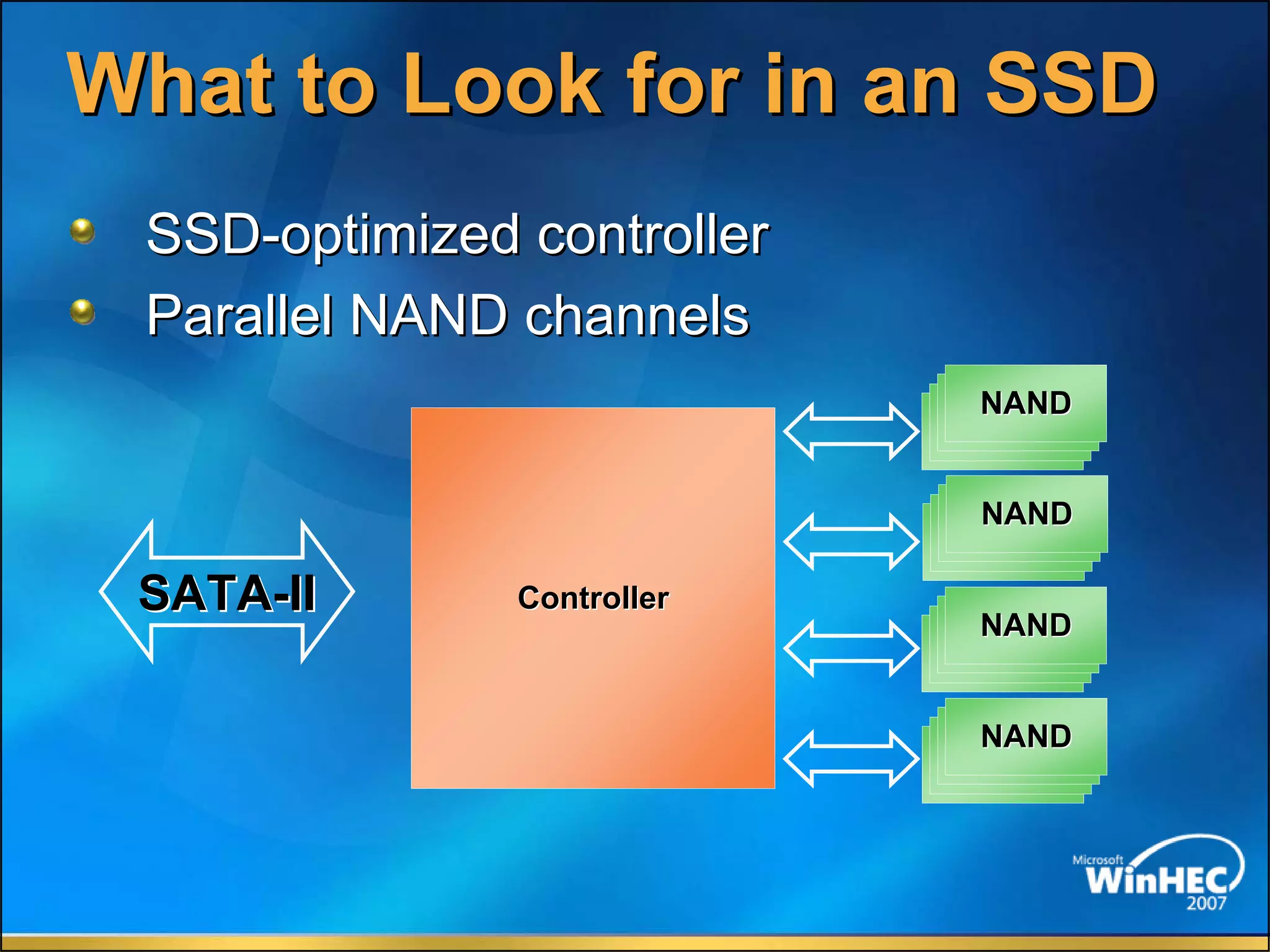

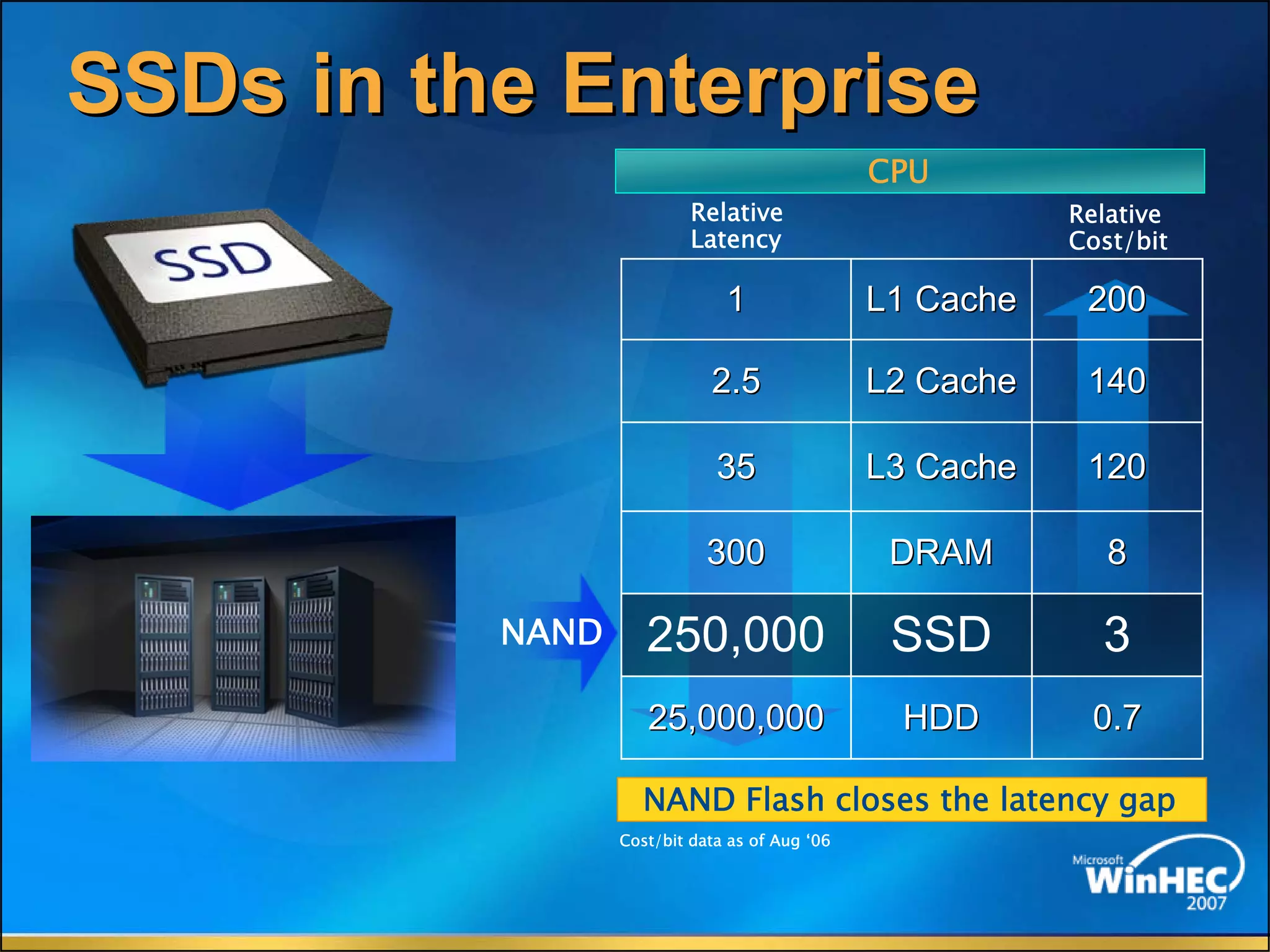

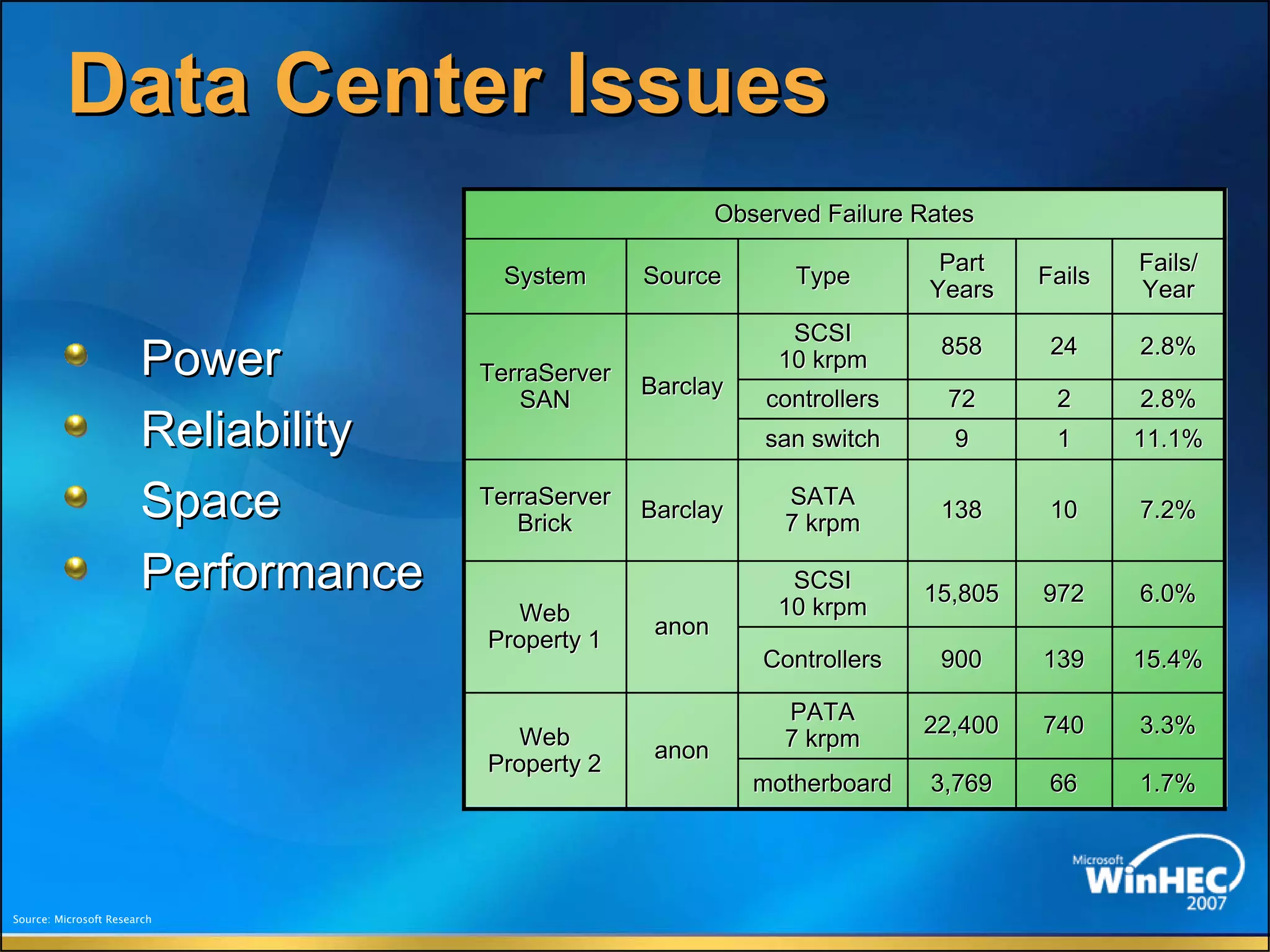

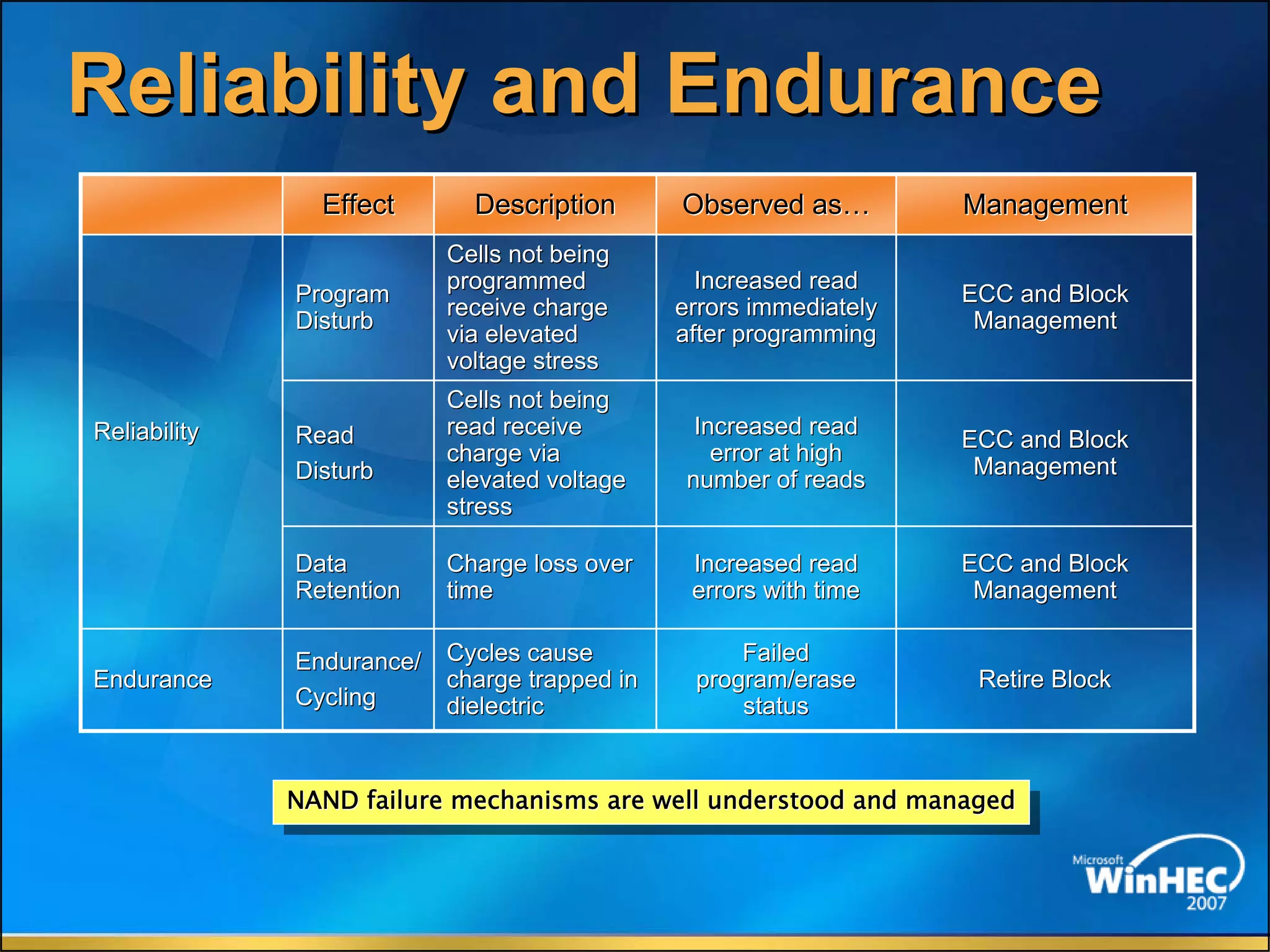

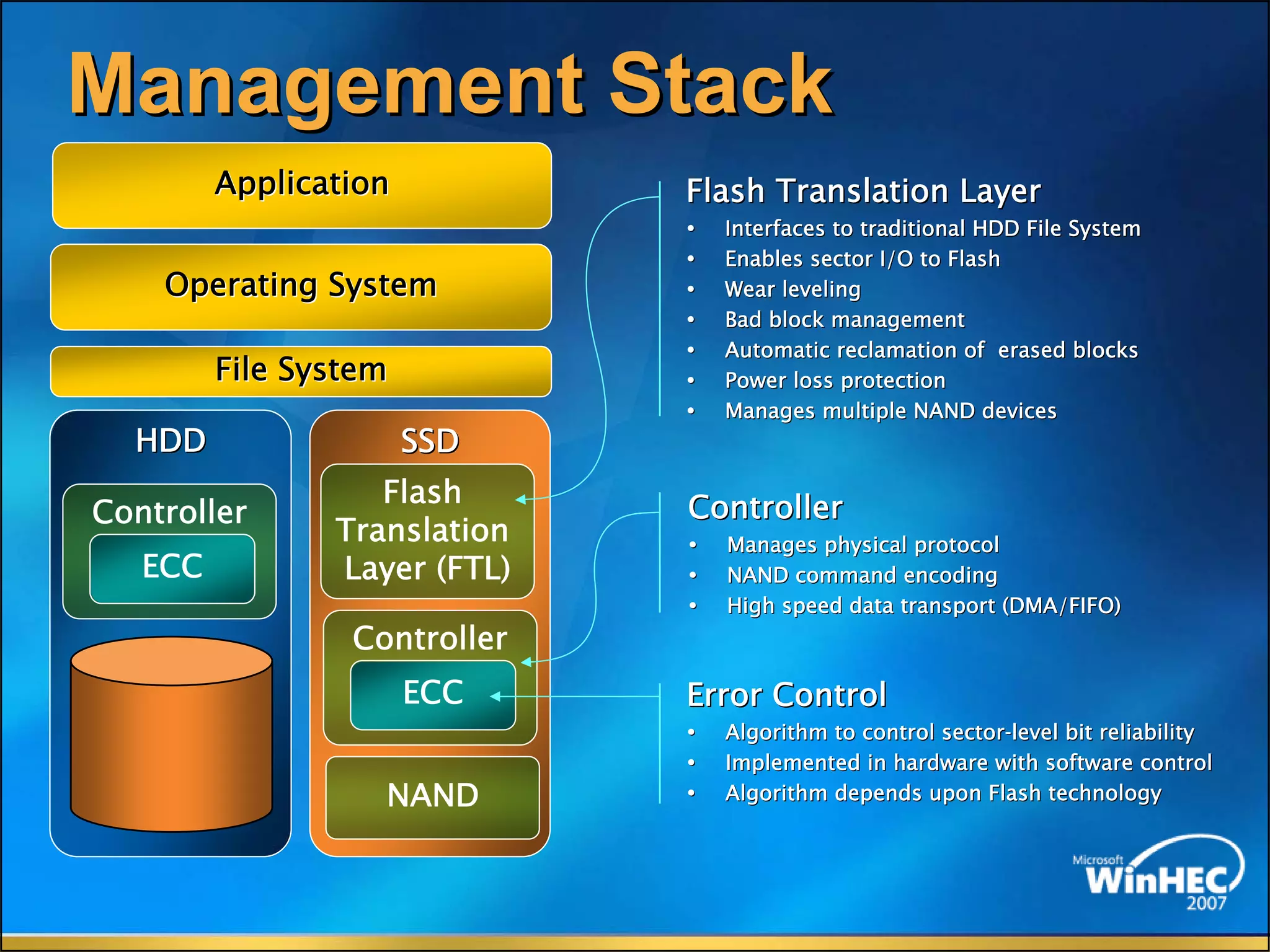

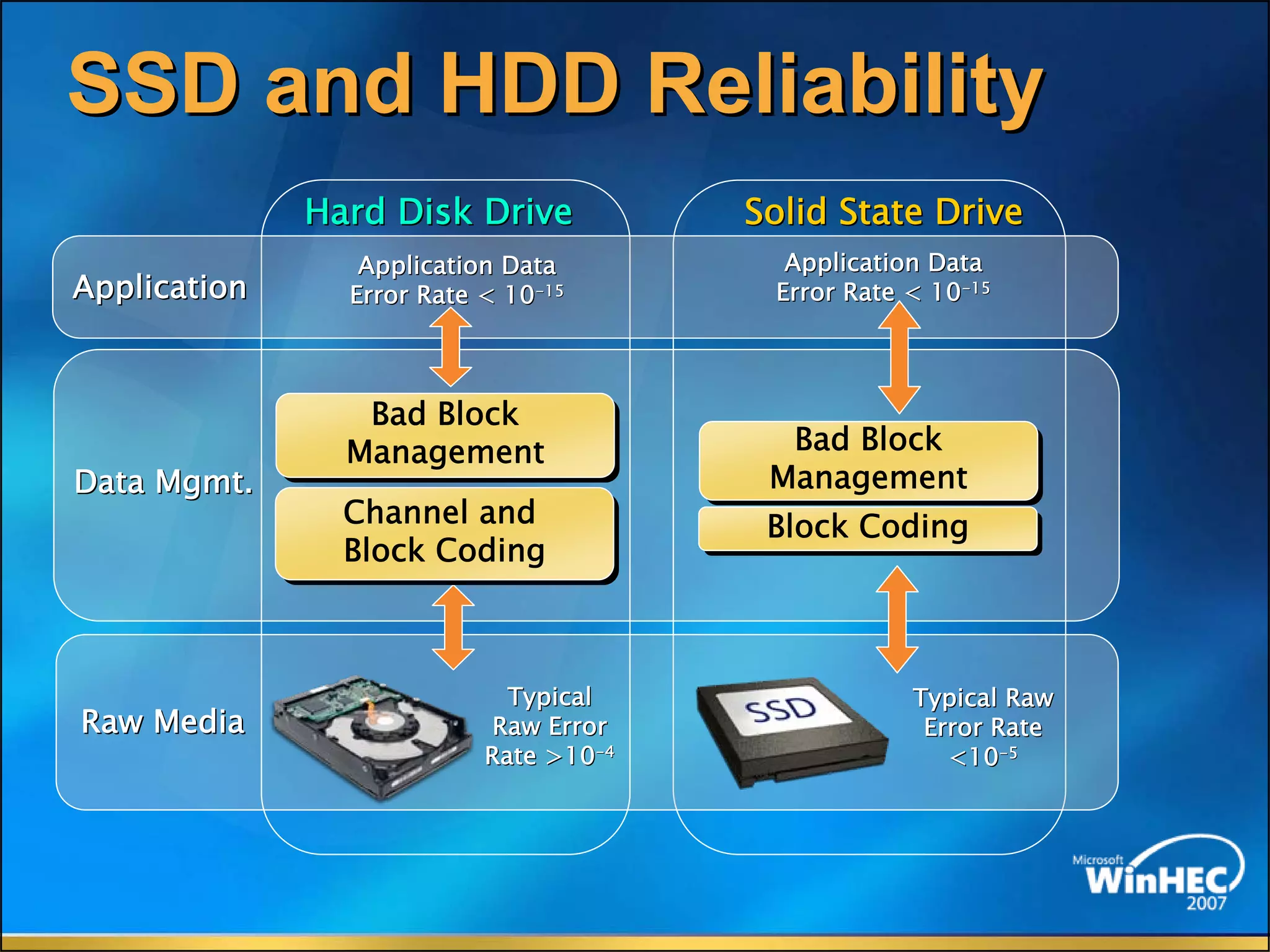

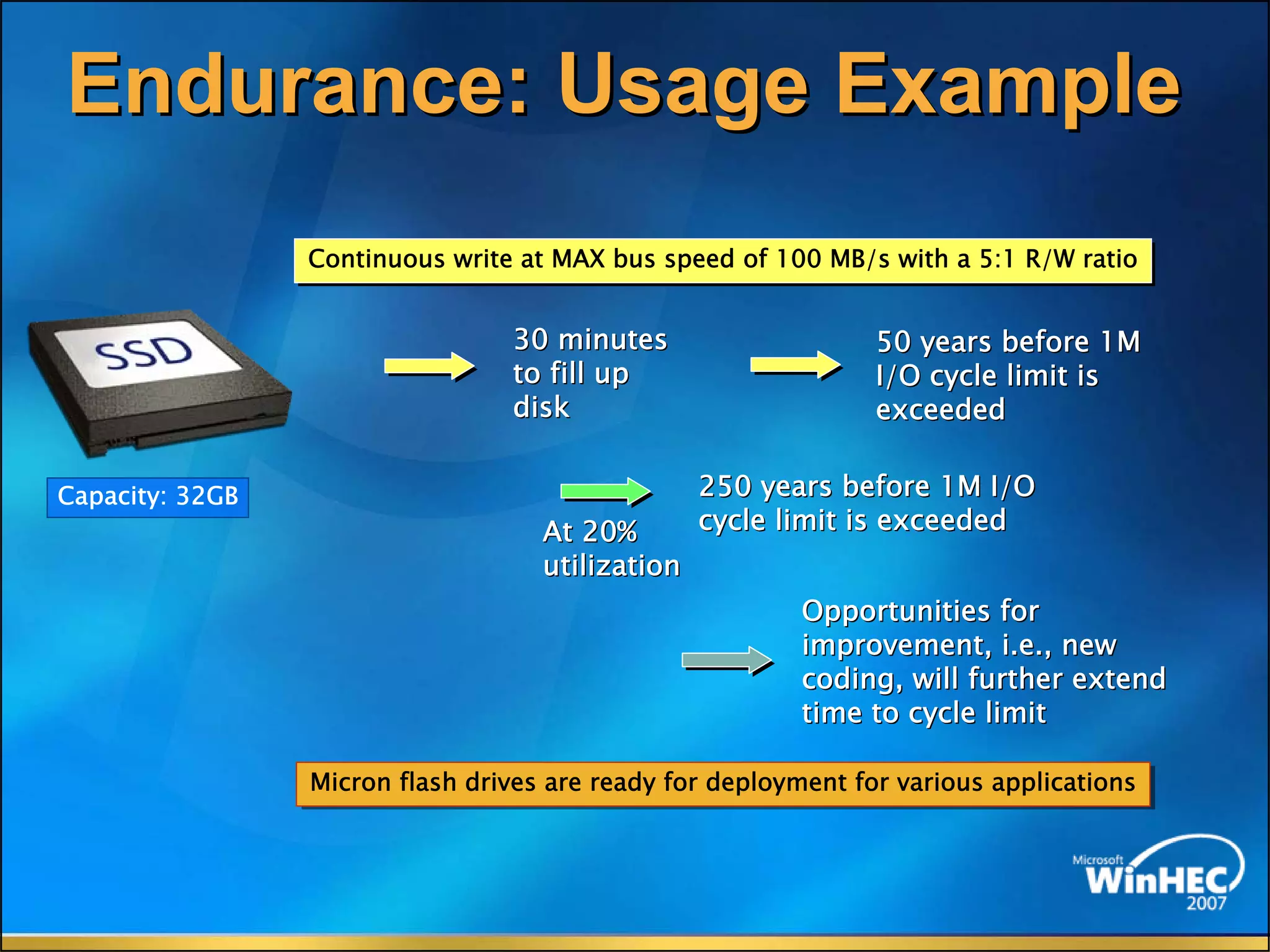

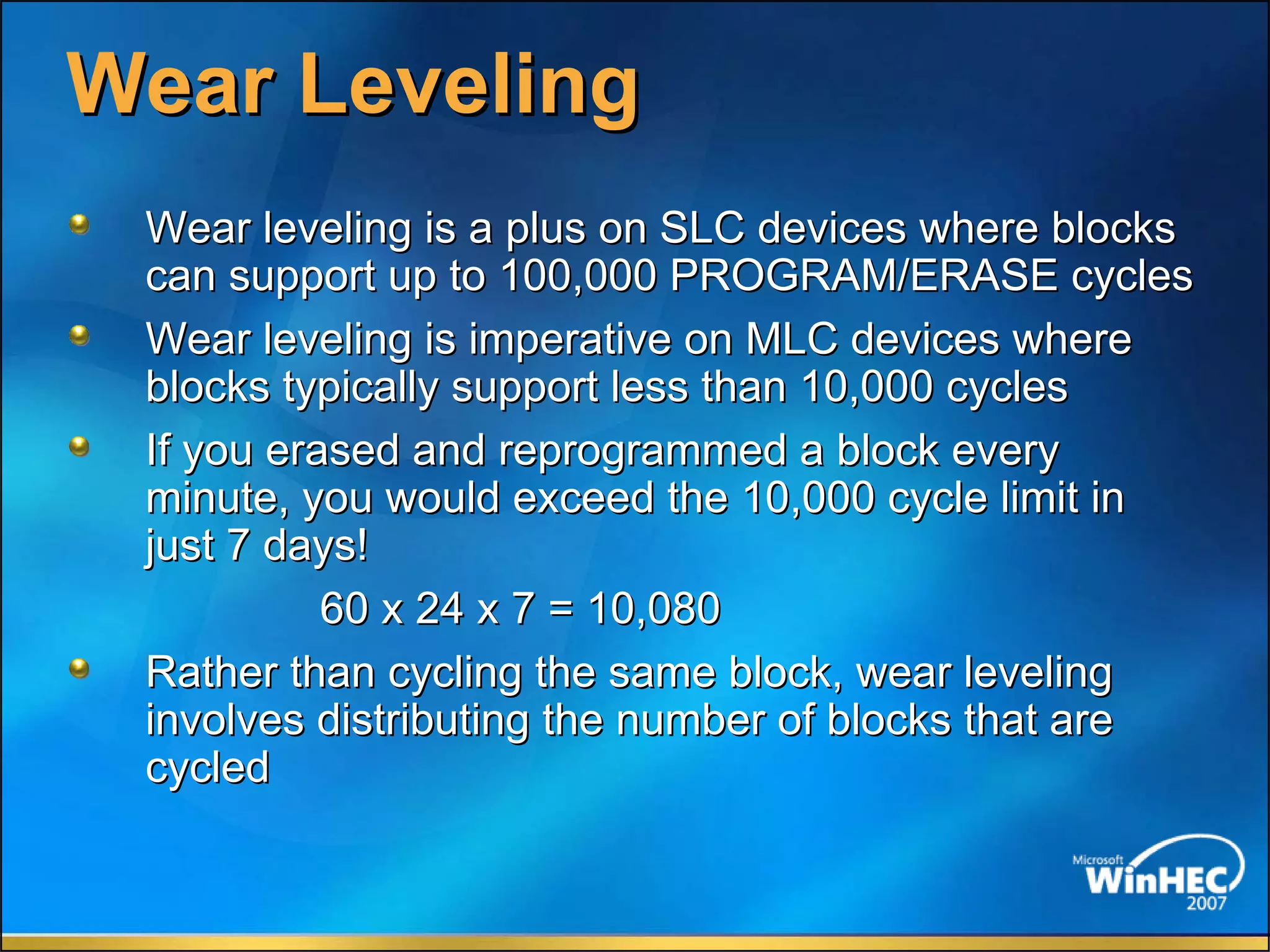

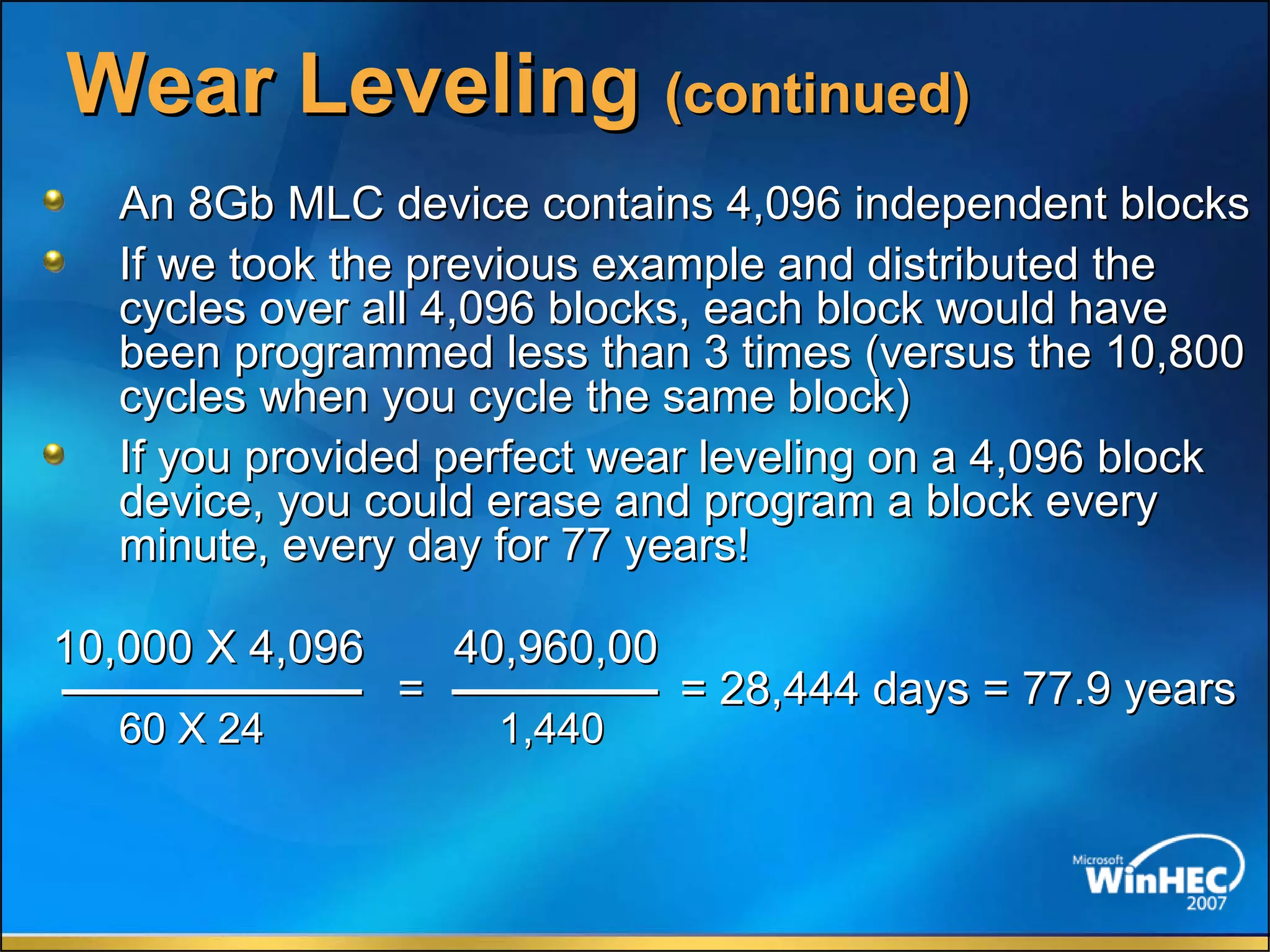

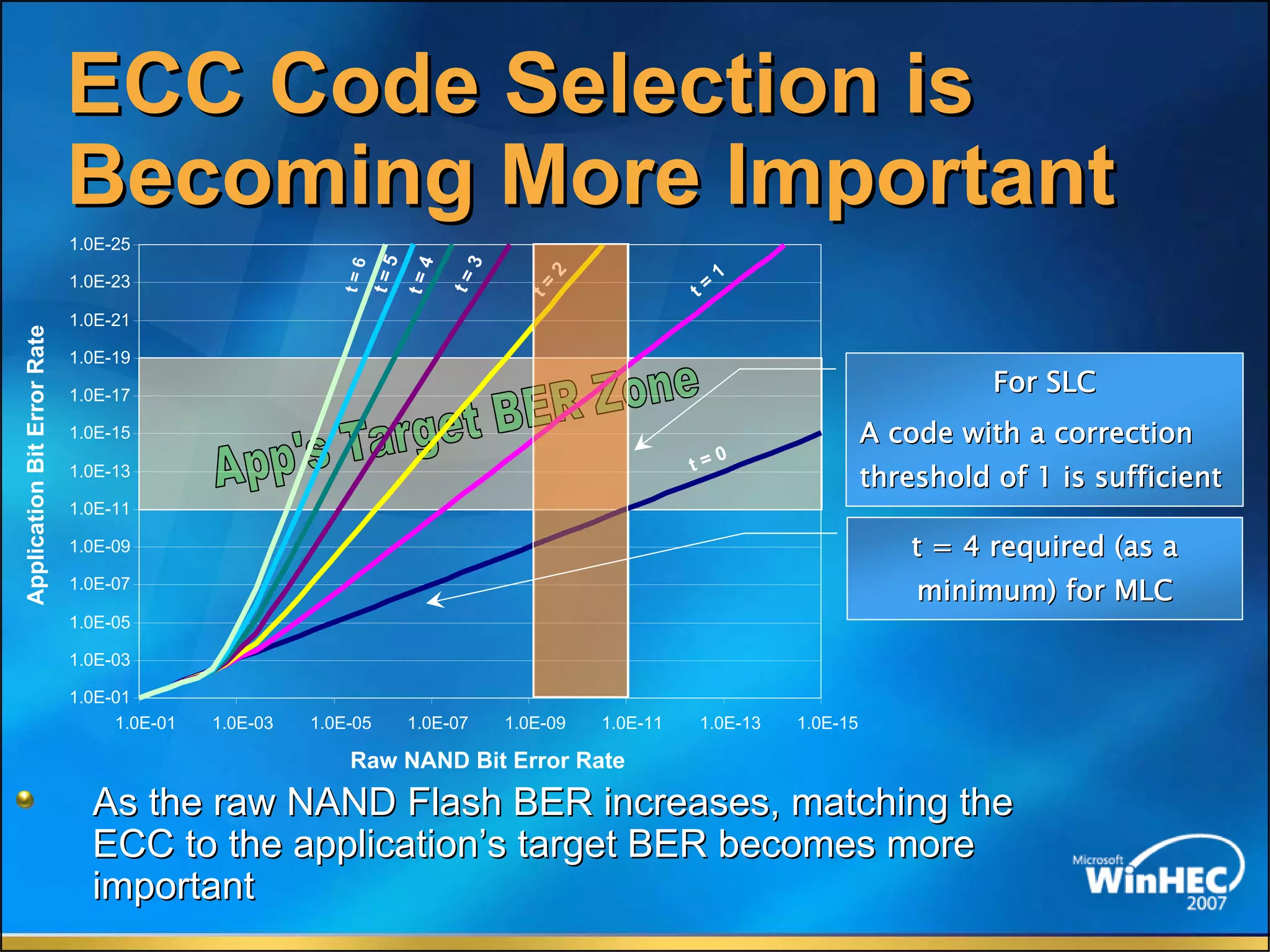

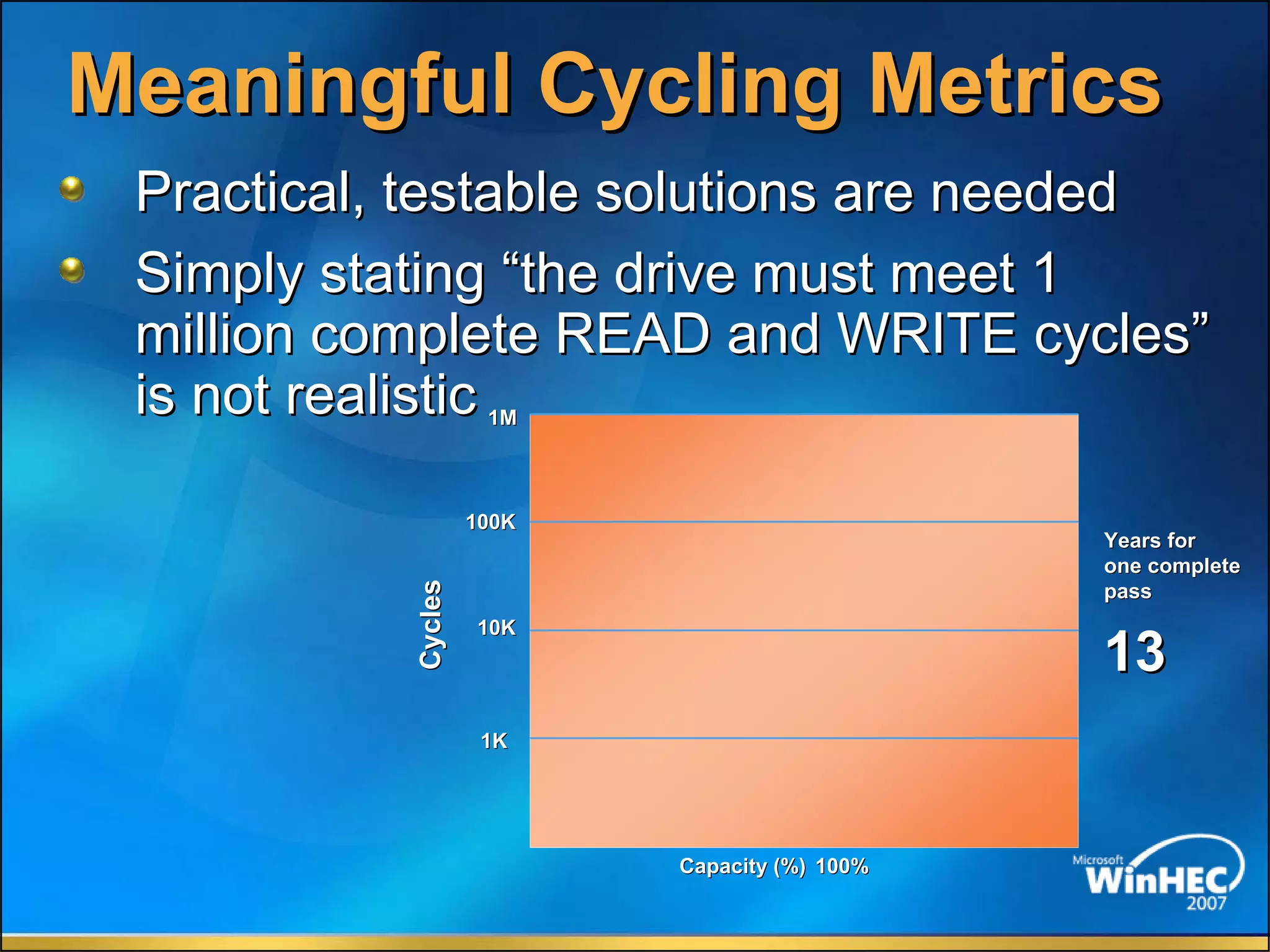

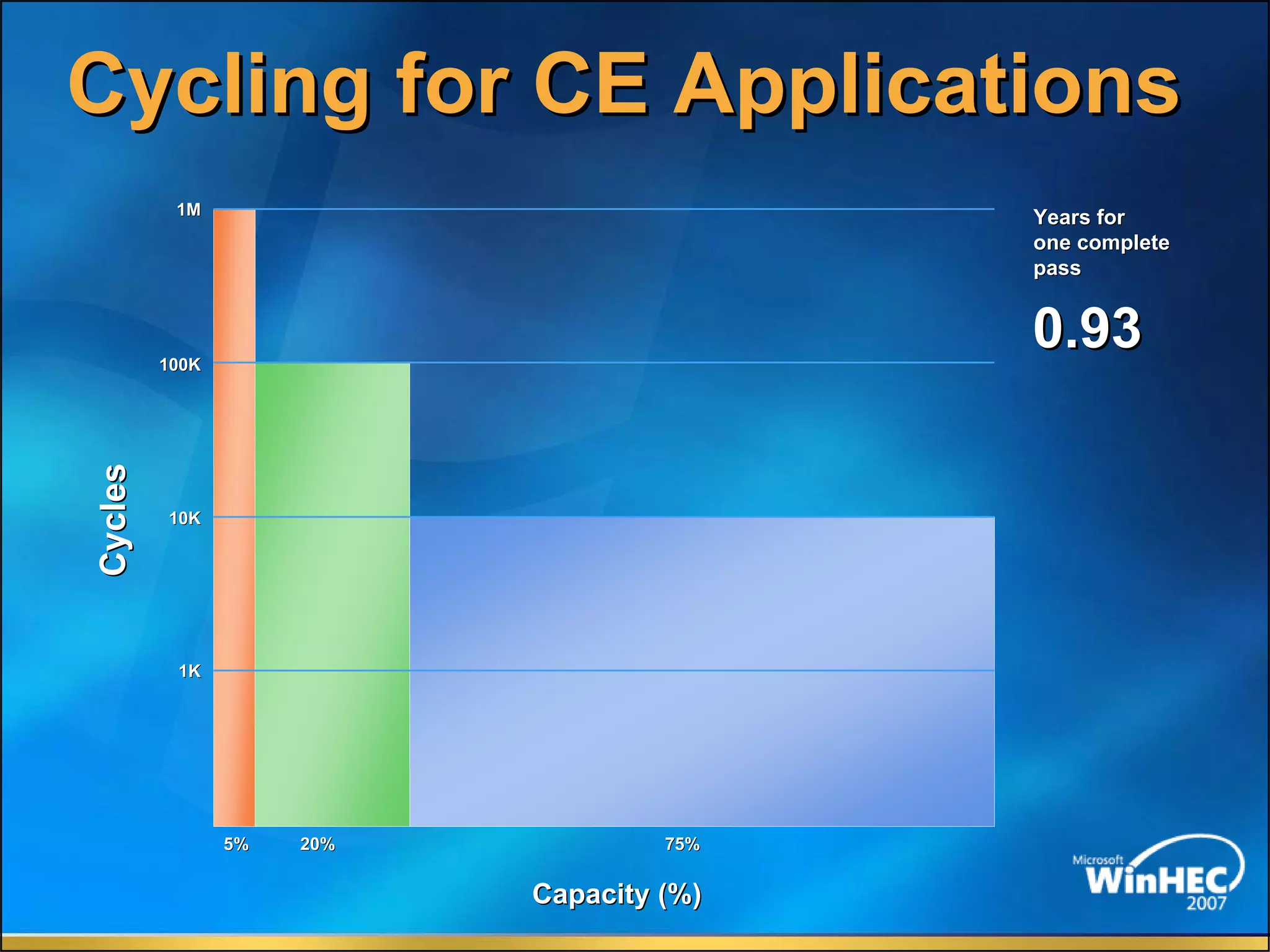

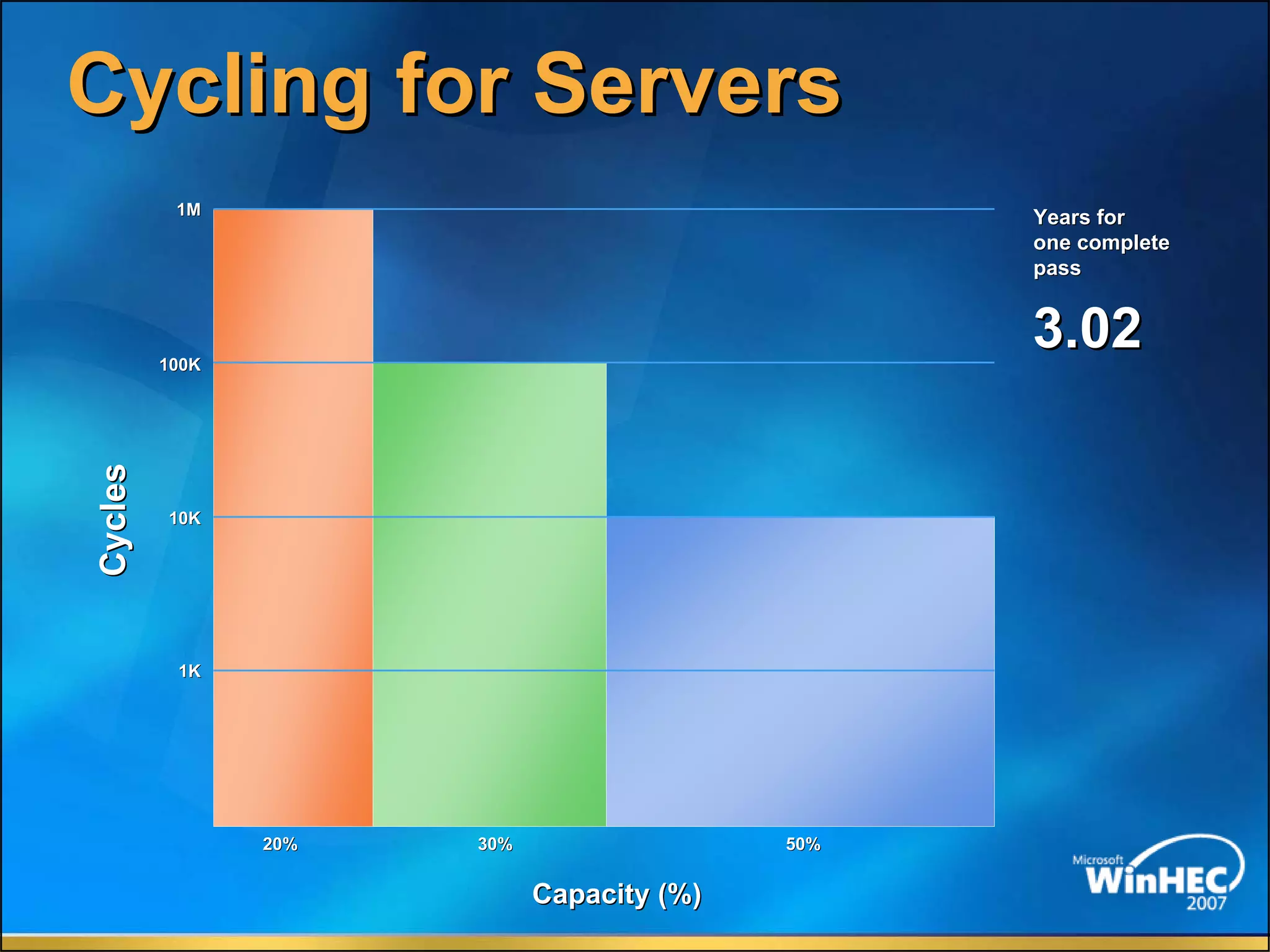

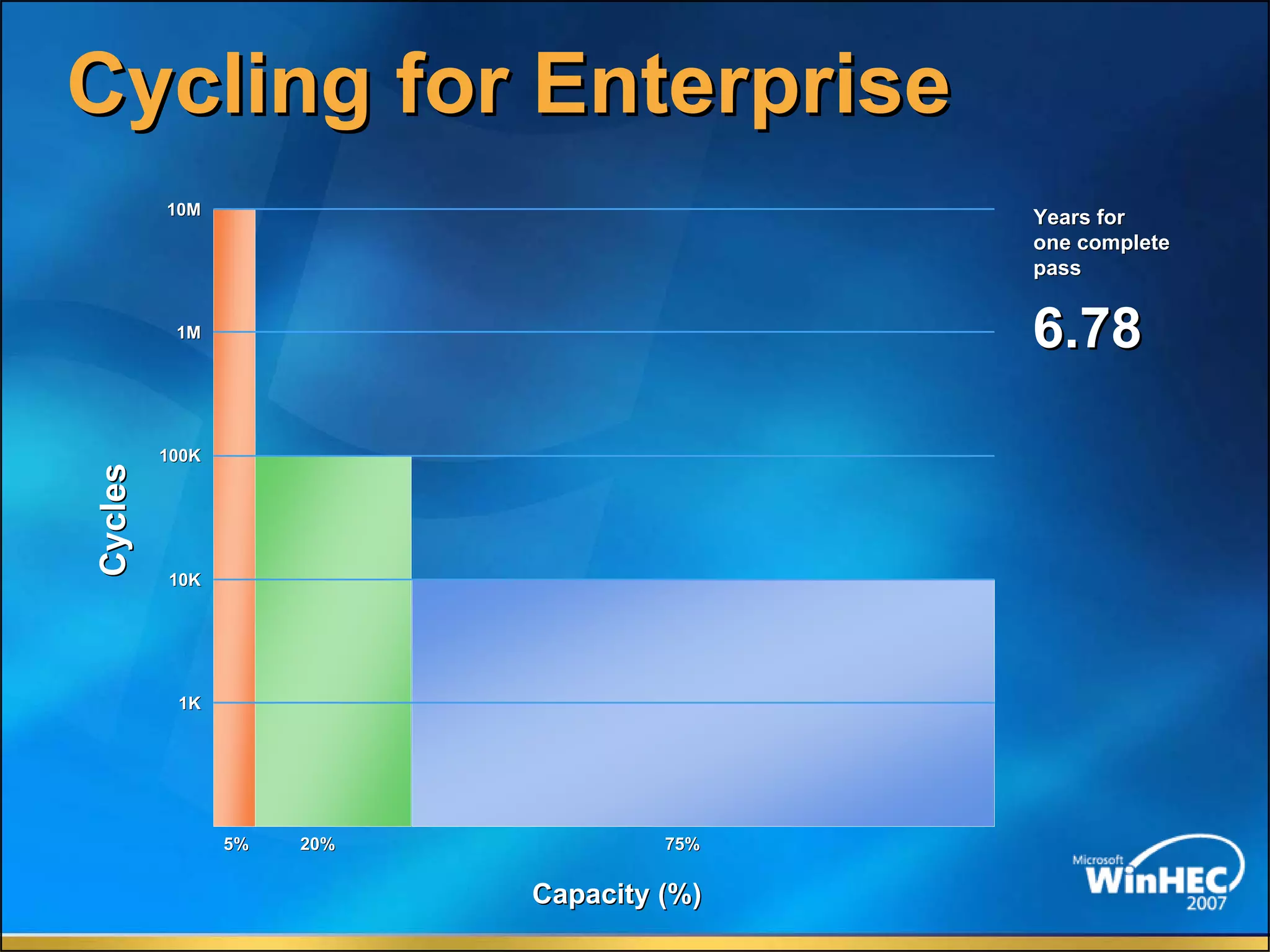

The document discusses trends in memory and storage technology, highlighting the growing performance and latency challenges between the CPU and memory and between memory and storage. It notes the declining cost per bit of DRAM and NAND, and stresses that solid-state drives (SSDs) need their performance and reliability managed differently from traditional hard disk drives (HDDs). It also outlines opportunities for innovation in memory and storage to address these challenges.