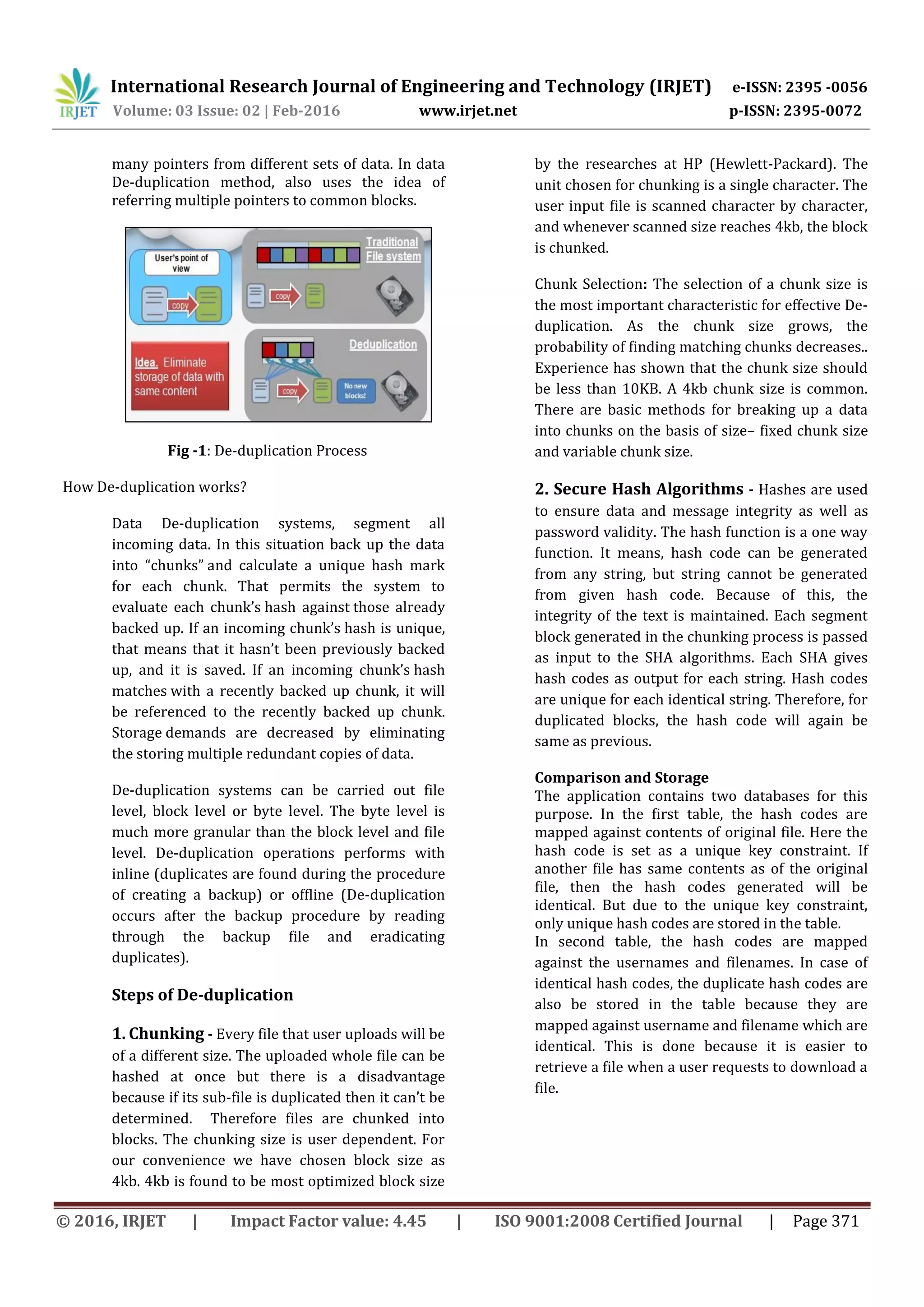

This document summarizes a research paper that proposes a method for optimizing cloud storage through authorized de-duplication. De-duplication saves space by storing each distinct piece of data only once. The key steps are splitting files into blocks, applying a secure hash algorithm such as SHA-512 to compute a digest for each block, and comparing digests so that a duplicate block is recorded as a reference to the copy already stored rather than stored again. The paper also discusses cryptographic techniques such as ciphertext-policy attribute-based encryption for authentication and security on public clouds. The proposed approach aims to optimize storage while providing authorized de-duplication.
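The chunk, hash, and compare steps can be illustrated with a minimal Python sketch. It is not the paper's implementation: the fixed-size chunking, the 4-byte block size, the in-memory dictionary, and the names `chunk` and `DedupStore` are all assumptions chosen for illustration, and the encryption and authorization layer is left out entirely.

```python
import hashlib
from typing import Dict, List

CHUNK_SIZE = 4  # bytes; deliberately tiny for illustration (assumed value)


def chunk(data: bytes, size: int = CHUNK_SIZE) -> List[bytes]:
    """Split data into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + size] for i in range(0, len(data), size)]


class DedupStore:
    """Hypothetical in-memory block store keyed by SHA-512 digest."""

    def __init__(self) -> None:
        self.blocks: Dict[str, bytes] = {}  # hex digest -> block contents

    def put(self, data: bytes, size: int = CHUNK_SIZE) -> List[str]:
        """Store data and return its recipe: the ordered list of block digests."""
        recipe = []
        for block in chunk(data, size):
            digest = hashlib.sha512(block).hexdigest()
            # Store the block only if this digest has not been seen before;
            # a duplicate block is represented by its digest alone.
            if digest not in self.blocks:
                self.blocks[digest] = block
            recipe.append(digest)
        return recipe

    def get(self, recipe: List[str]) -> bytes:
        """Rebuild the original data from its recipe."""
        return b"".join(self.blocks[d] for d in recipe)


store = DedupStore()
recipe = store.put(b"ABCDABCDEFGH")   # blocks: ABCD, ABCD, EFGH
restored = store.get(recipe)
```

Here the recipe has three entries but the store holds only two blocks, because the repeated `ABCD` block is referenced rather than stored twice. Treating equal digests as equal blocks relies on SHA-512 collisions being computationally infeasible to find, not on digests being strictly unique.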