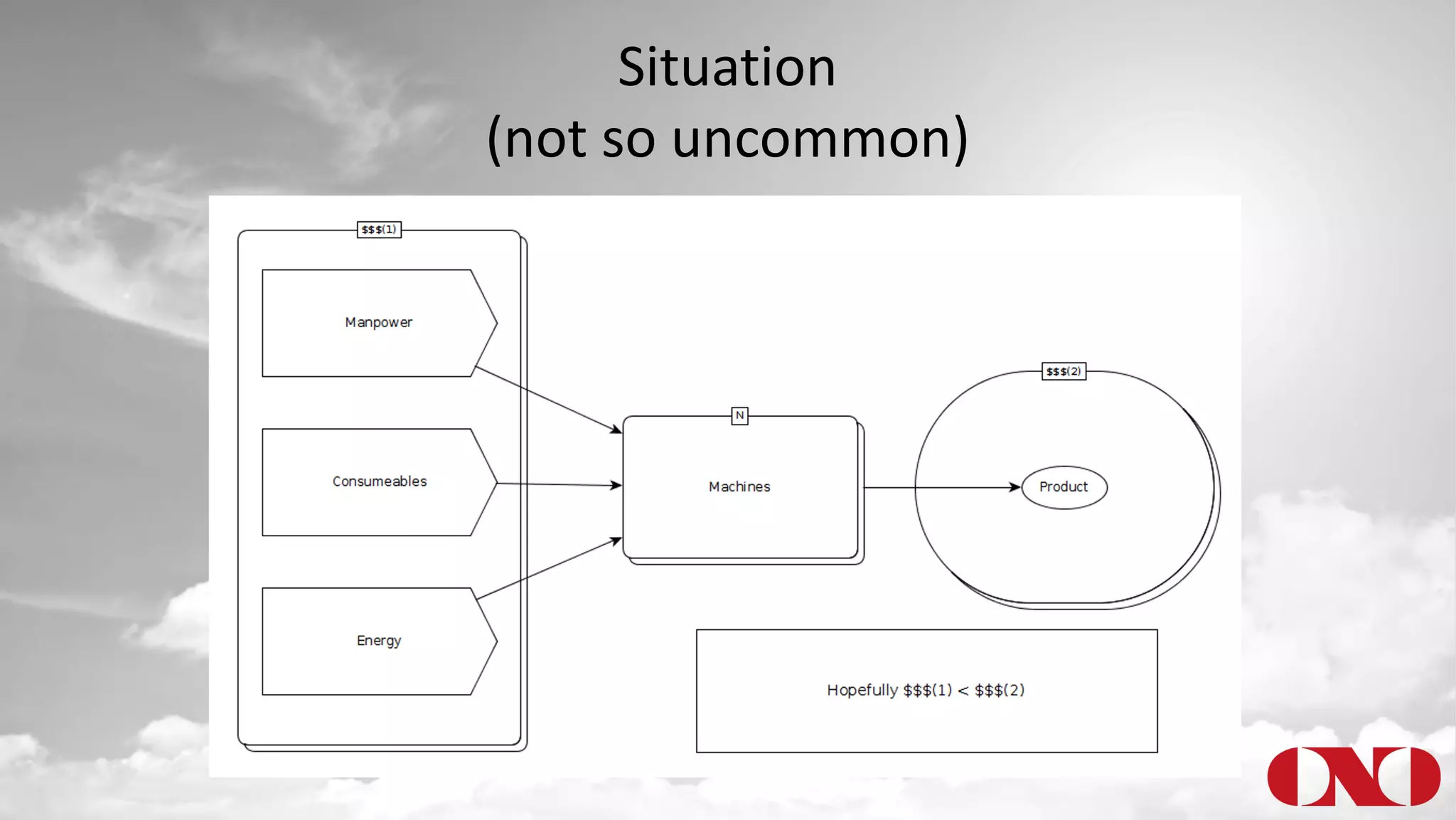

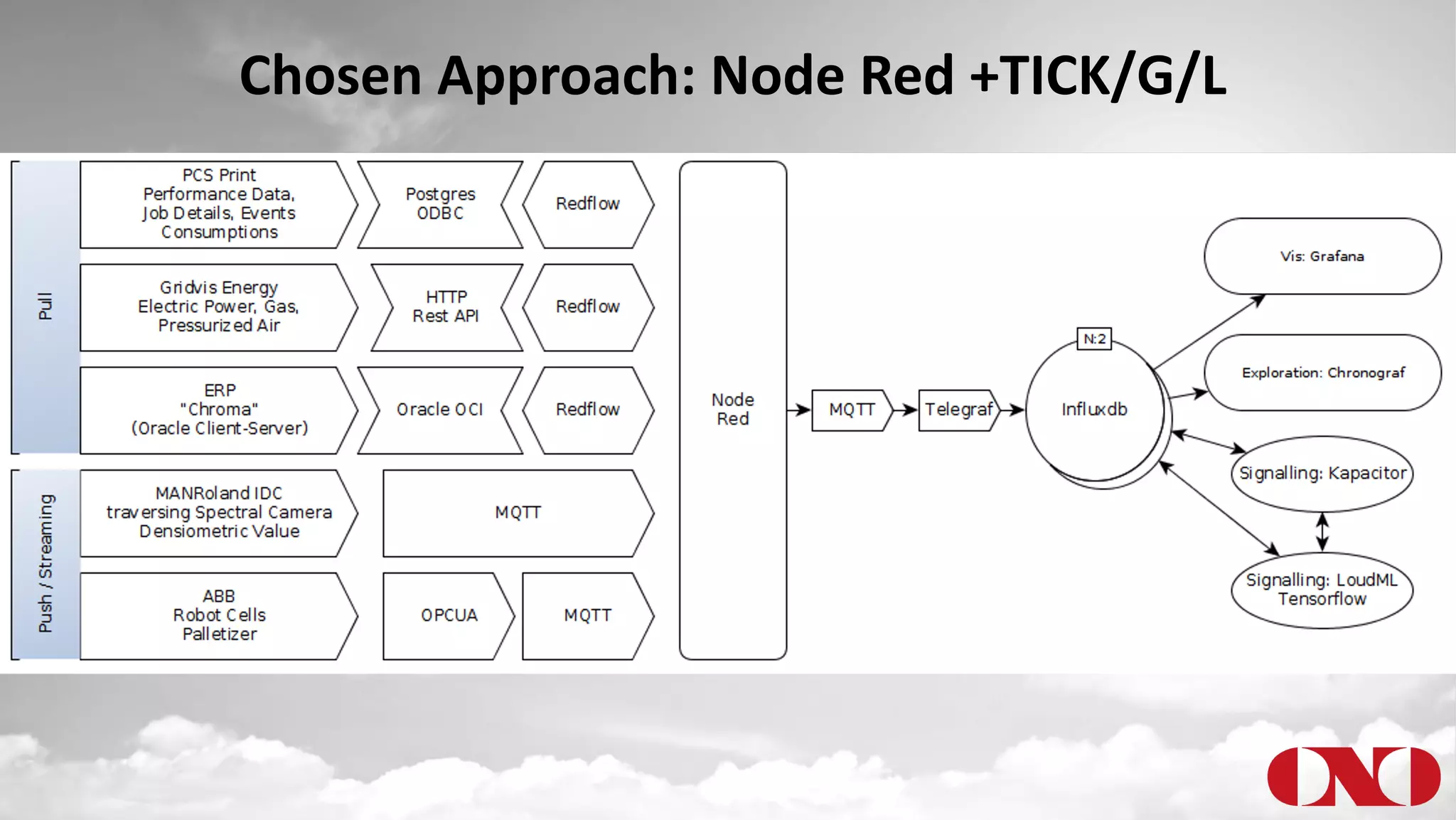

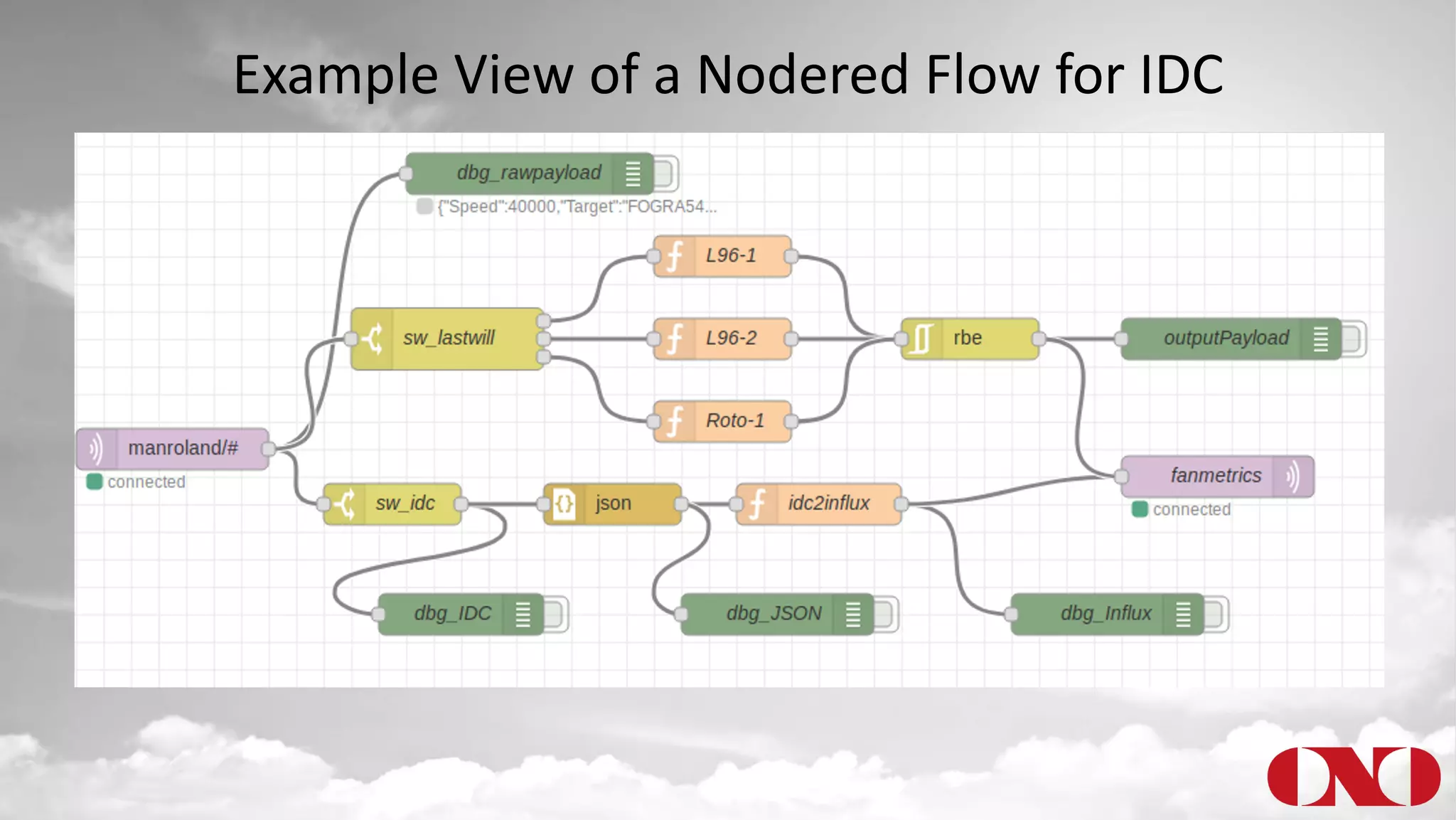

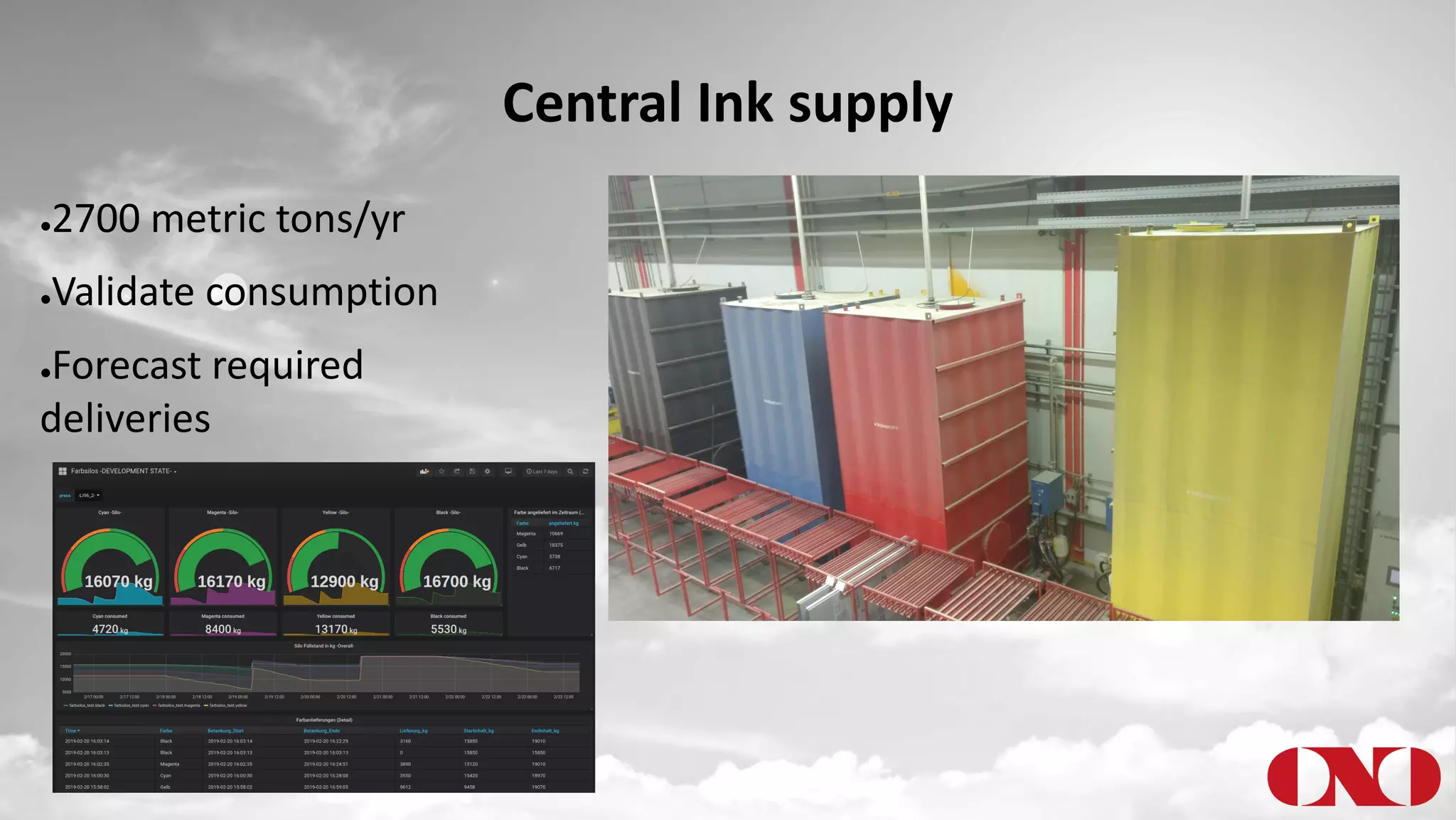

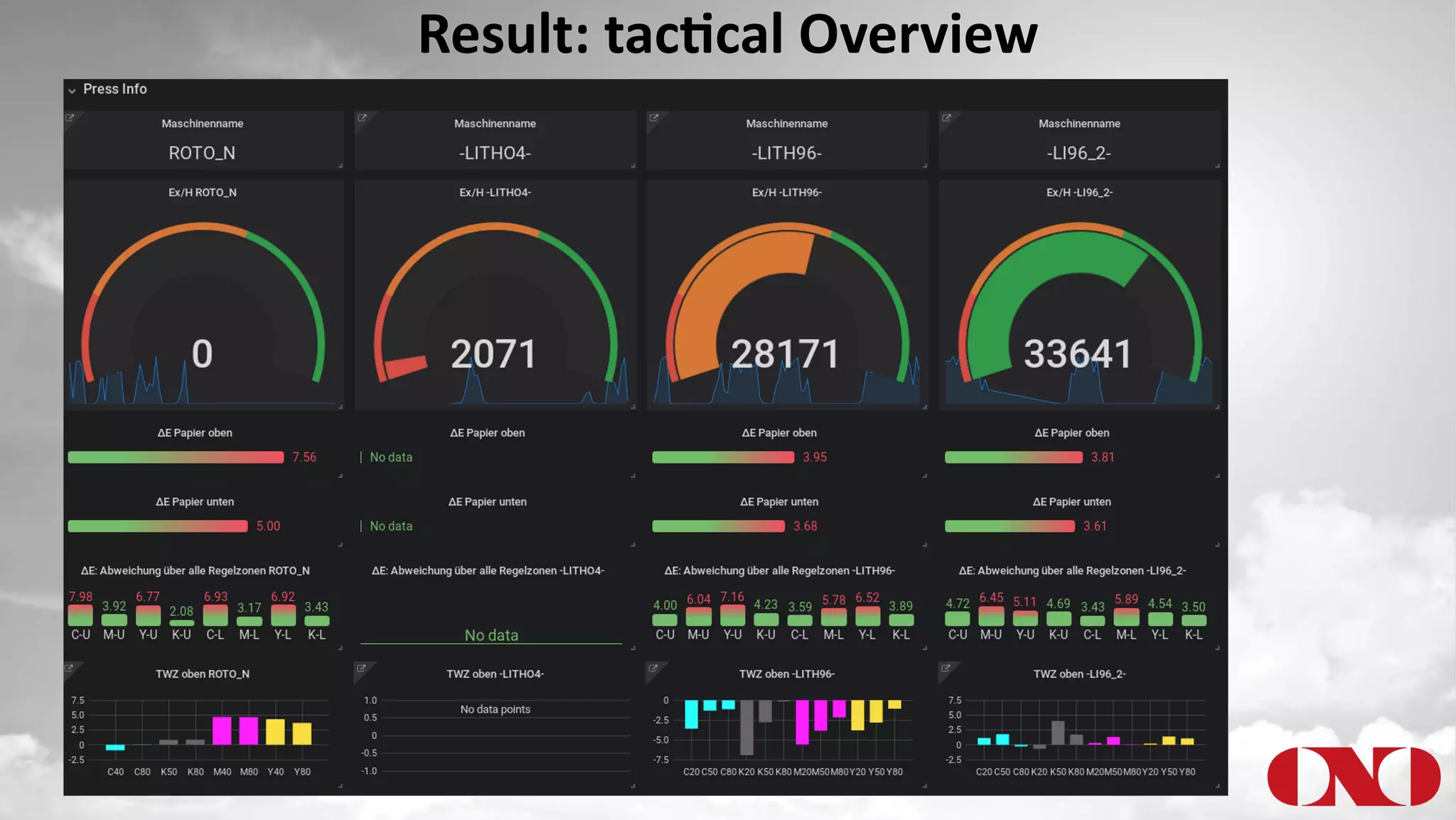

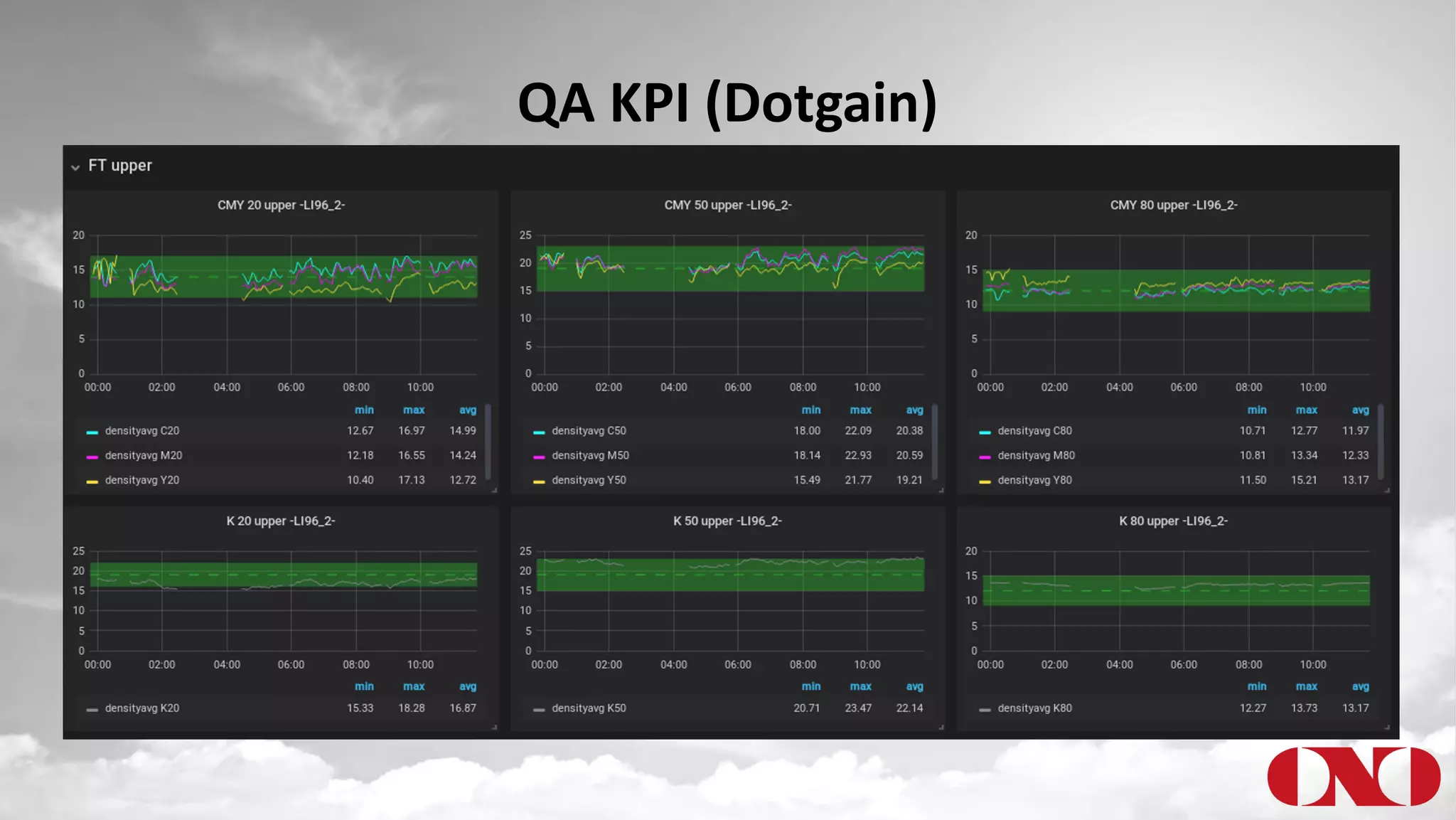

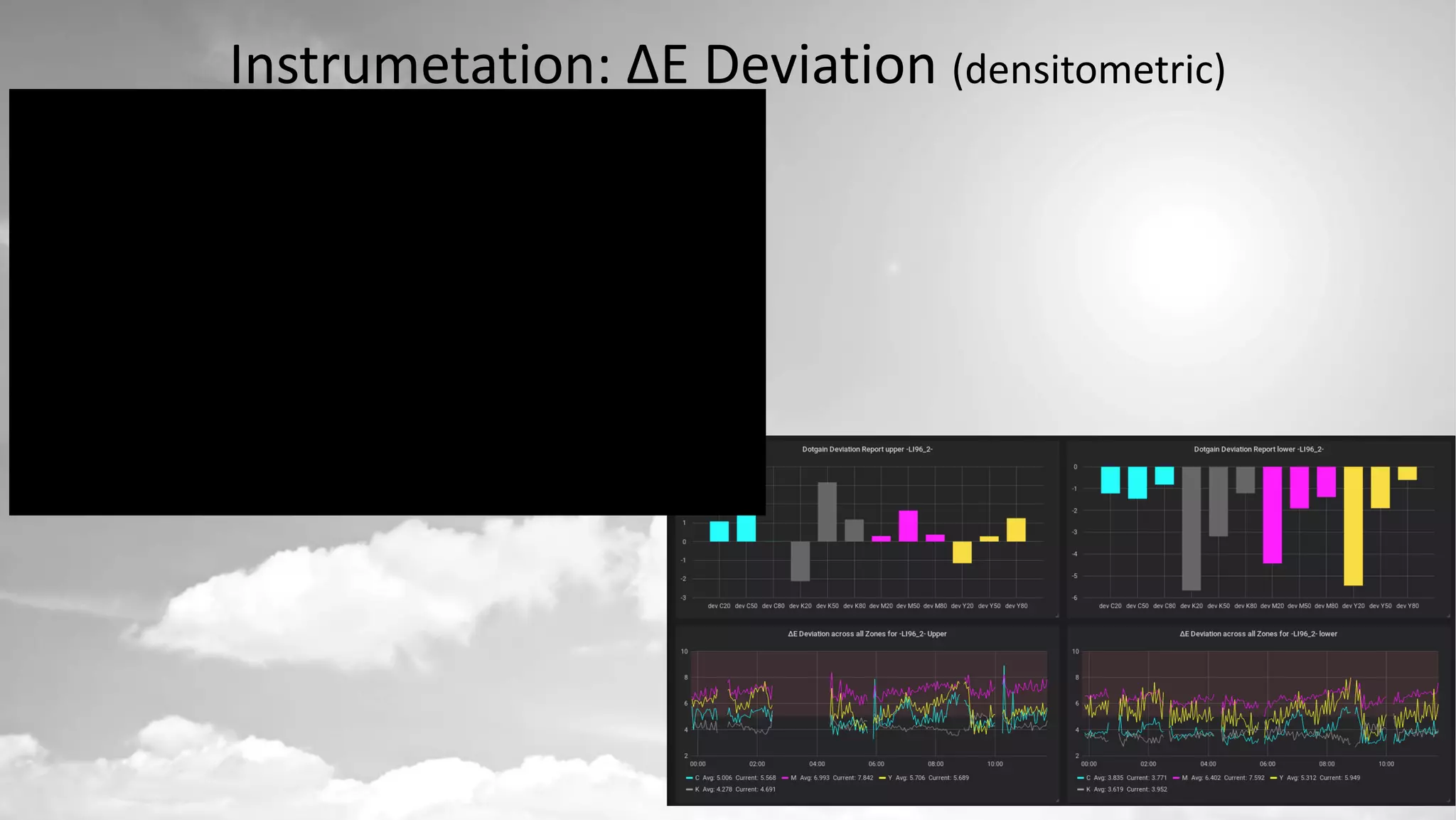

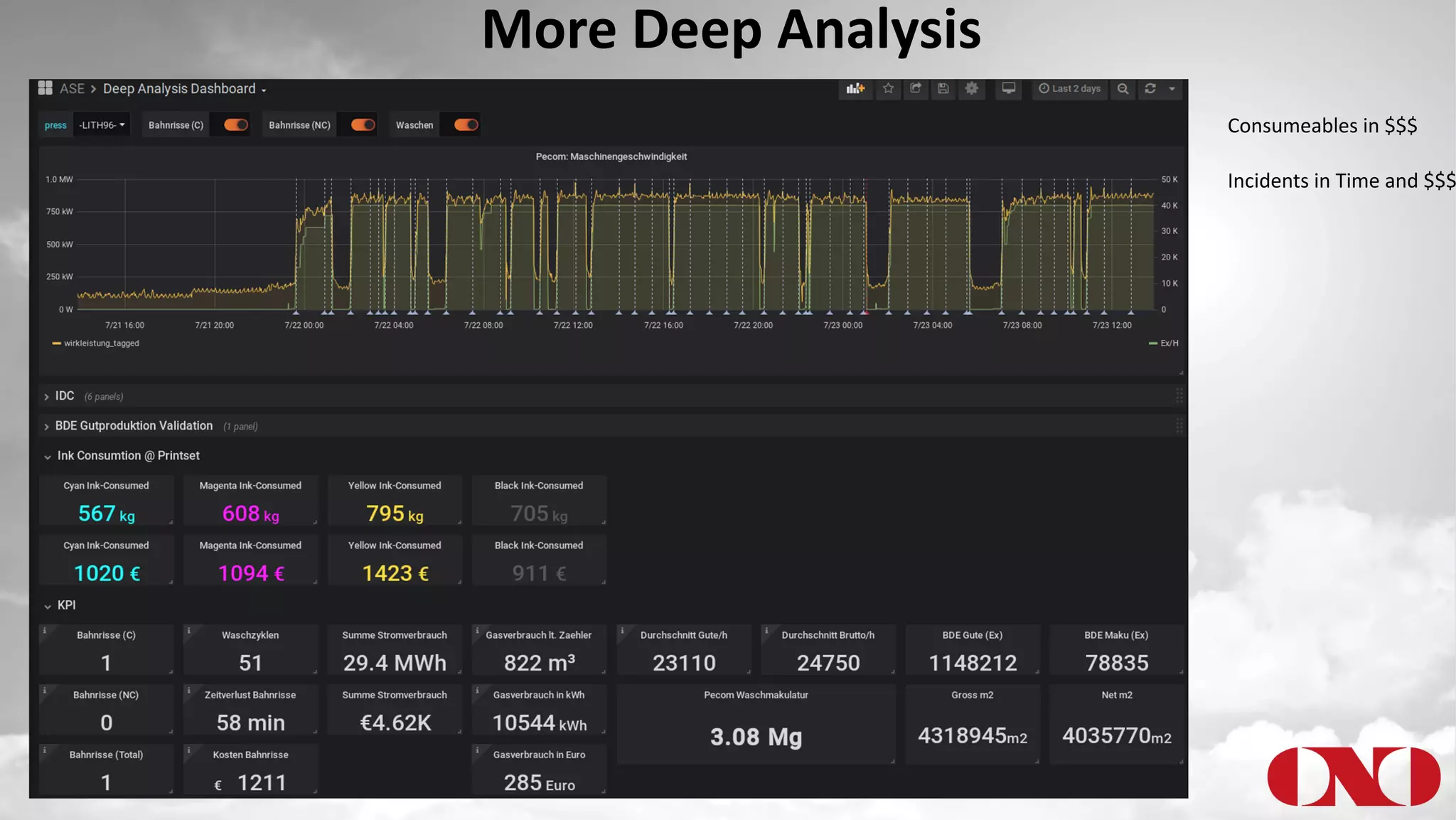

The document details a project by Fr. Ant Niedermayr GmbH & Co. KG that implements telemetry-driven production to improve visibility and efficiency in the company's printing operations. The key goals were process control, outcome prediction, and cost savings. The main challenges were heterogeneous data sources and outdated technologies. The approach, built on the TICK stack, has produced significant energy savings and more accurate data, and the company plans to extend instrumentation and metric analysis further.