How to correlate by gkraft

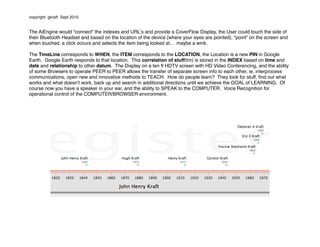

- 1. copyright gkraft Sept 2010 The Correlation of Stuff(tm) by gkraft. The AiEngine would “connect” the indexes and URLs and provide a CoverFlow display. The user could touch the side of their Bluetooth headset and, based on the location of the device (where your eyes are pointed), “point” on the screen; when touched, a click occurs and selects the item being looked at... maybe a wink. The TimeLine corresponds to WHEN, the ITEM corresponds to the LOCATION, and the Location is a new PIN in Google Earth. Google Earth responds to that location. This correlation of stuff(tm) is stored in the INDEX based on time and date and relationship to other data.
The display on a ten-foot HDTV screen with HD video conferencing, and the ability of some browsers to operate PEER to PEER, which allows the transfer of separate screen info to each other (i.e. interprocess communications), open new and innovative methods to TEACH. How do people learn? They look for stuff, find out what works and what doesn't work, back up and search in additional directions until they achieve the GOAL of LEARNING. Of course now you have a speaker in your ear, and the ability to SPEAK to the COMPUTER: voice recognition for operational control of the COMPUTER/BROWSER environment.
Learn at the Speed of Sight: if information or a video is rapid-retention oriented, it is as simple as the presentation of related items. One's desire to learn is the force of change; we learn what fascinates us. Our children are bored with less than PlayStation 3 or Xbox video speed... God gave us a brain to think with. Broadband is dialup: empower the homes with wideband and everything will change. When we wait we frustrate; an IBM S/360 had a wait light! We make selections using YouTube that way... oh, that looks interesting...
Rolodex or CoverFlow: a rotational stack of related items, indexed via voice addendum and existing metatags. (Good, better, best) match logic provides a pathway; once taken, it may be the next link, or a backtrack. Repetition helps remember stuff... by timeline or event point in time. How did I get here? Where was I at? Dang browser, should be a snap back to my place I was focused on...
Link = trigger; decision = why did you change direction, what was the datum for your decision? This becomes a pattern of behavior based on likes and dislikes, or your DNAid. Links and the content within said link become a neural network of your thinking process... Google is working this now. "id" is the why we take the paths we take in learning and life.
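
The INDEX described on this slide, which ties an ITEM to a WHEN and a LOCATION and relates it to other entries, could be sketched roughly as below. This is only an illustration under stated assumptions: the `Entry` and `CorrelationIndex` names, the use of shared metatags as the "relationship", and the one-hour time window are all hypothetical and are not taken from the AiEngine itself.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Entry:
    """One indexed item (hypothetical structure, not the AiEngine's own)."""
    item: str                 # the ITEM, e.g. a URL
    when: datetime            # the WHEN on the timeline
    lat: float                # the LOCATION, usable as a map pin
    lon: float
    tags: set = field(default_factory=set)  # metatags / voice addenda


class CorrelationIndex:
    """Keeps entries and ranks which ones relate to a given entry."""

    def __init__(self):
        self.entries = []

    def add(self, entry):
        self.entries.append(entry)

    def related(self, entry, window=timedelta(hours=1)):
        """Return other entries sharing a tag or close in time.

        Entries matching on both counts rank ahead of entries matching
        on one; ties are ordered by time. The scoring is an assumption.
        """
        scored = []
        for other in self.entries:
            if other is entry:
                continue
            score = 0
            if entry.tags & other.tags:
                score += 1
            if abs(other.when - entry.when) <= window:
                score += 1
            if score:
                scored.append((score, other))
        scored.sort(key=lambda pair: (-pair[0], pair[1].when))
        return [other for _, other in scored]
```

The ranked list returned by `related` is the kind of "rotational stack of related items" a CoverFlow display could page through.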

- 2. Daniel and Chris did not do this in a vacuum; I told them what I wanted them to do... I was paying for everything out of my pocket. I used Chris, then my brother (Sykes dumped both of my brothers); I needed to get them working and try to make us some income...
Using a web site to control the library gives certain advantages. We envision the AiDigital Library as containing a variety of different displays placed at various locations in it. Using the web in this fashion allows the user to control any number of library Internet devices with the same interface and on the different displays. As such, the web site becomes a universal controller for the user.
QuickTime VR viewing and control: If the user arranges the displays in a half-circle facing him, he would have an excellent viewer for QuickTime VR images. The displays arranged like this could give up to about a 120-degree wraparound screen. Viewing a QuickTime VR image, e.g. of the AiDigital Library web site, would be impressive. The touch screens would allow the user to sweep his hand across the display and literally pull the graphics to where he wants.
Distance Learning: Capturing live videos of lectures to a server will allow students in remote classrooms, distant cities and other far parts of the world to attend classes at secondary schools, colleges and universities at their convenience. The server allows not only distance learning but also 'time' learning, in the sense that they can view the lessons at a time of their choosing. This allows company employees working on undergraduate or graduate degrees, who must travel on company business or work during critical times, to learn their lessons even while accomplishing their regular company business. Another market is in corporate training, military training, or any other application where important information needs to be retrieved when the viewer has available time.
Methods
Feature: Moveable Display Screens
Description: The ability of a user to place the displays in almost any position or orientation desired. This is a fundamental component of the Portable Library. The Portable Library has three main LCD panels for its displays. By positioning the displays in various configurations, the user of the Portable Library can use it as a wide screen system, a wraparound QTVR viewer, or an out-the-window view such as is used for flight and car simulators (these configurations are described separately as individual features). In addition, it also allows the user to use the entire space around him for the Windows Desktop. With this a user can, for example, place file icons for movie clips above his head, and sound clips next to his left ear.
Written with the tracker's SDK, a program on the Portable Library computer first initializes the tracker and then monitors it through the serial port. The Portable Library can then access the tracker data from the serial acquisition program. The access can be done in one of two ways, either by using Windows event messages or by using a shared memory location. The conventional method is to use Windows event messages. However, if this is not feasible we will resort to a shared memory location. In either case, any program that requires positional information, such as a Java Applet activated from the browser, must make use of the tracker's SDK to access this information.
For the out-the-window simulators, the program will change the display mode to one viewport per display. The different views can then be produced either by configuring the software for this arrangement or by running multiple instances of the executable with different viewing positions (e.g. left, right, and front views). For the QTVR and Panoramic viewer, the program will change the display mode to one large viewport with each display mapping into a different portion of the view.
This configuration will also be used to enlarge the Desktop to surround the user.
Feature: Panoramic Viewer
Description: The displays are placed side by side for viewing videos with wide aspect ratios such as Panoramic movies. Unlike the QTVR displays, the images are not arranged in a semi-circle but as one large flat display.
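
The two display modes described above (one large viewport mapped across the displays, versus one viewport per display for out-the-window simulators) can be illustrated with a small sketch. The function names, the pixel sizes, and the 40-degree field of view per display are assumptions for illustration only; the 40-degree figure is simply the roughly 120-degree wraparound divided across three panels.

```python
def split_viewport(total_width, height, displays=3):
    """Map one large viewport onto several displays.

    Returns one (x, y, width, height) source rectangle per display,
    ordered left to right. Leftover pixels go to the leftmost displays.
    """
    base, extra = divmod(total_width, displays)
    rects = []
    x = 0
    for i in range(displays):
        w = base + (1 if i < extra else 0)
        rects.append((x, 0, w, height))
        x += w
    return rects


def window_view_yaws(fov_per_display_deg, displays=3):
    """Yaw offsets in degrees for 'one viewport per display' mode.

    The middle display looks straight ahead (the front view); the
    others are rotated left (negative) or right (positive).
    """
    mid = (displays - 1) / 2
    return [(i - mid) * fov_per_display_deg for i in range(displays)]


if __name__ == "__main__":
    # Panoramic / QTVR mode: a 3072x768 image spread over three panels.
    print(split_viewport(3072, 768))
    # Simulator mode: left, front, and right views, 40 degrees apart.
    print(window_view_yaws(40))
```

In simulator mode each yaw could be passed to a separate instance of the executable, matching the "multiple instances with different viewing positions" option in the text.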

- 3. Procedure: Although this is a simple concept, achieving a workable view will be difficult. When watching movies and looking at images, one should not have a border along each portion of the image mapped by a display. Yet just about all displays have a border along all edges of the display, somewhat like a picture frame (in the case of an LCD panel, this is probably the display circuit board). The ideal solution is to find a display with no borders along at least one dimension. Without this, we may be able to achieve the same effect with real-time optical correction and some type of mirror arrangement. Please see the Addendum: Concerning making a flat display with no gaps (Gordon, I think we might have some patents here).
Regardless of how it is achieved, speech, hand motion, and the touch screen will control the Panoramic Viewer. As with the QTVR viewer, it will make use of the IR position sensors on the library's three display support arms. A program will be written that changes the display mode to that of one large viewport with each display mapping into a different portion of the view. Again as with the QTVR viewer, the size and aspect ratio of each viewport will be changed to match the FOV of the combined monitors, and a system message will be added that reminds the user to reposition the displays when he is viewing a QTVR image.
A user will be able to use the Panoramic Viewer for editing and interacting with video (such as is done with enhanced DVDs). While watching a news clip the user could say "stop" followed by "save as example one", which would result in a clip of video being saved onto the hard drive. He could also use hand motions or the touch screen to change the speed of the clip. The user will also be able to manipulate static images. He will be able to save an image to disk by using his finger to trace around a frozen video segment (as is done with a mouse in MS Photo Editor).
Feature: Hand Motion Control
Description: The user can control various aspects of the Library by using hand gestures. These are the following: the QTVR viewer, video, photographic images, and music. Hopefully this will be one of the more natural ways of controlling the library. With this a person could sweep his hands across a QuickTime VR image and have it rotate around him. He could point to a display icon and launch it by literally making a throwing motion or other such gesture. With photos or other graphics, a user can use his hands to pan an image larger than the viewport. For video, the user can use gestures for editing commands such as stop, fast forward, and reverse. For music, the user can interact with any of the numerous programs available for creating, conducting and playing music. For example, a user could change tempo, switch or add instruments, change the rhythm, or 'turn' a page while playing.
Procedure: Software from Reality Fusion will be used for recognizing gestures. This software does a real-time analysis of the video input so as to respond to specific hand and body gestures by the user. To use it, a small lightweight video camera facing the user will be attached to each display panel. Using the Software Developer's Kit (SDK) that has been provided by Reality Fusion, we will write software to interface it with the Library. The software will send the appropriate user I/O (most likely emulating ASCII from the keyboard) when it senses a command gesture. Reality Fusion will be used for the following: QuickTime VR Viewer, DrumBox (shareware), Microsoft Photo Editor (GIF, JPG and others), QuickTime Viewer (MOV), and Microsoft Active Player (MPG).
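
The idea of turning a recognized command gesture into emulated keyboard input can be sketched as a simple lookup. Nothing here uses the Reality Fusion SDK: the gesture names, the application names, and the key assignments are hypothetical, and `send` stands in for whatever routine would actually inject the keystroke.

```python
# Hypothetical table: (application, gesture) -> ASCII key to emulate.
GESTURE_KEYS = {
    ("video", "palm_forward"): "s",   # stop
    ("video", "sweep_right"): "f",    # fast forward
    ("video", "sweep_left"): "r",     # reverse
    ("photo", "sweep_left"): "h",     # pan left
    ("photo", "sweep_right"): "l",    # pan right
}


def translate(app, gesture):
    """Return the key to emulate for a gesture, or None if unmapped."""
    return GESTURE_KEYS.get((app, gesture))


def dispatch(app, gesture, send):
    """Send the emulated key through `send`; report whether one was sent."""
    key = translate(app, gesture)
    if key is None:
        return False
    send(key)
    return True


if __name__ == "__main__":
    sent = []
    dispatch("video", "sweep_right", sent.append)
    dispatch("video", "wave", sent.append)  # unmapped gesture is ignored
    print(sent)
```

Keeping the mapping in a table per application means the same gesture can mean different things in the video player and the photo editor, as the description above implies.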

- 4. Feature: Speech Control
Description: The user controls various functions of the Library by voice. Voice commands will be used for the following: Windows commands, the web browser, the Library web pages, display settings (brightness, aspect ratio, contrast, etc.), video (editing, stop, start, rewind, etc.), and music (loudness, bass, treble, etc.).
Procedure: The software ViaVoice will be used for speech control. Like most voice recognition systems, it comes with a command set for controlling Windows (such as file commands) and also procedures for setting up command sets for programs. With this we can give the user control over the display settings, since they are adjustable by the operating system. Command sets will be written for the other functions. The first command set will be written for the browser, since it will be the primary user interface. By doing so we add voice commands to the Library's web pages. To add voice capability for video, music and the display mode, additional command sets will be set up for the most commonly used video and music editor programs, as well as for changing the display. The programs that will have command sets written are the following: Microsoft Photo Editor, QuickTime Viewer, QuickTime VR Viewer, and Microsoft Active Player. Additional command sets will also be written for the audio player and other multimedia programs that were provided with the computer when purchased. For show demonstrations we need to make sure we can use voice-independent commands for at least a subset of those needed. If ViaVoice does not supply this, then we will obtain an additional software package that does, to be used in conjunction with ViaVoice.
Feature: Touch Screen Control
Description: The user can control the Library using touch screens on its three displays. Using the software typically supplied with the screens, the user will be able to select icons, move scroll bars, etc. just as if done by a mouse.
Procedure: The touch screen will use an active optical matrix keypad and interface with the Library computer through the serial port. These work by placing infrared emitters and detectors around the perimeter of the display. Unlike the pressure-sensitive kind, they do not obscure the image. We will also have fewer problems with vibration because they do not require an actual press on the screen. We may have to use the pressure-sensitive kind if the optical screen's infrared system interferes with the tracking system that is used for display and other positional information. If this is the case, we will then resort to a touch membrane interface.
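
As a rough illustration of how an optical matrix touch screen can turn interrupted infrared beams into a screen position, the sketch below takes the center of the blocked beams on each axis. The beam counts, the boolean input format, and the function name are assumptions; a real controller would report this over the serial port in its own format.

```python
def locate_touch(blocked_cols, blocked_rows, width_px, height_px):
    """Estimate the touch position in pixels from blocked IR beams.

    blocked_cols / blocked_rows are lists of booleans, one per beam,
    True where the beam is interrupted. Returns (x, y) in pixels, or
    None if no beam is blocked on either axis.
    """
    cols = [i for i, blocked in enumerate(blocked_cols) if blocked]
    rows = [i for i, blocked in enumerate(blocked_rows) if blocked]
    if not cols or not rows:
        return None
    # Center of the blocked beams; +0.5 moves to the middle of a beam cell.
    cx = sum(cols) / len(cols) + 0.5
    cy = sum(rows) / len(rows) + 0.5
    x = round(cx * width_px / len(blocked_cols))
    y = round(cy * height_px / len(blocked_rows))
    return (x, y)


if __name__ == "__main__":
    cols = [False, False, False, True, True, False, False, False]
    rows = [False, False, True, False, False, False]
    print(locate_touch(cols, rows, 800, 600))
```

Because a finger only has to interrupt the beams, no press on the glass is needed, which is the vibration advantage noted above.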

- 5. Touch screens typically come with software so that a user can control the screen as if it were a mouse. We will use this for most of the functions in the library that will make use of the touch screen (those that are mouse-like). If the library is using the displays in a group (such as with QTVR), the touch screen will be active across all three screens and with one shared viewport.
Feature: Three-Camera Viewport
Description: Each of the three LCD displays has a lightweight video camera attached to it. Used with a good user interface, this feature could be one of the more marketable aspects of the library. The cameras have the added benefit of providing three viewpoints of the participants in a conference. In addition, because a user of the Portable Library can reposition the displays and cameras, they can move them so as to interact with other participants. We could greatly enhance the effectiveness of this type of videoconferencing by designing utilities that help the user use the three-viewport arrangement. These could include such things as a 3D global display of all participants in one picture, or a tactile response when remote displays 'bump' into each other. Another thing we could do is stereoscopic video conferencing. This is possible in the Portable Library because all the displays are 3D capable. If the user positions two of the cameras/displays towards a view, the two images would be used to produce stereoscopic pairs that result in a three-dimensional view of the visual scene. These enhanced video features are well suited for distance learning and teleoperations such as remote surgery.
Feature: Three-dimensional volumetric reconstruction
Description: The user of the library can use the cameras to reconstruct a 3D volumetric image of whatever he places in front of the library. If he does this to himself, he is also provided with a 3D analogue suitable for use as an Avatar.
Procedure: Several commercial software companies make products that construct a 3D object from pictures of the object taken from several viewpoints. There is one company (TBN) that makes software to wrap a picture of a person intelligibly on a generic 3D model of a head. We will obtain these products, and hopefully SDKs, so as to do this in real time in the library.
Daniel worked on or saw an SGI CAVE and we discussed using it as an immersive work and learning environment. I researched SGI Octane workstations and an Onyx for rendering; we needed $2.5M to go to the next level, and Jeff was working on his own project, MarinaRes, on my nickel... I had to close Capitola Beach, and Krystle and I discussed this with Jeff in Dallas during a StarPower BOD meeting break. I had Jeff come to Dallas to meet with us as one last try to turn some of our investment into reality, but no sales effort was evident... I put Jamie in charge since he did most of the work... I expected everyone to be as productive as Jamie, and he was surfing half the time... I even met with several Naples Internet developers from the Gulf Coast Venture Group to try to find a way to commercialize, but I could not fund it any longer... I have used the old stuff for over ten years to tell my story of why we need to build it... it's all still in my head, because until I have Work For Hire developers and funding, it will stay there.