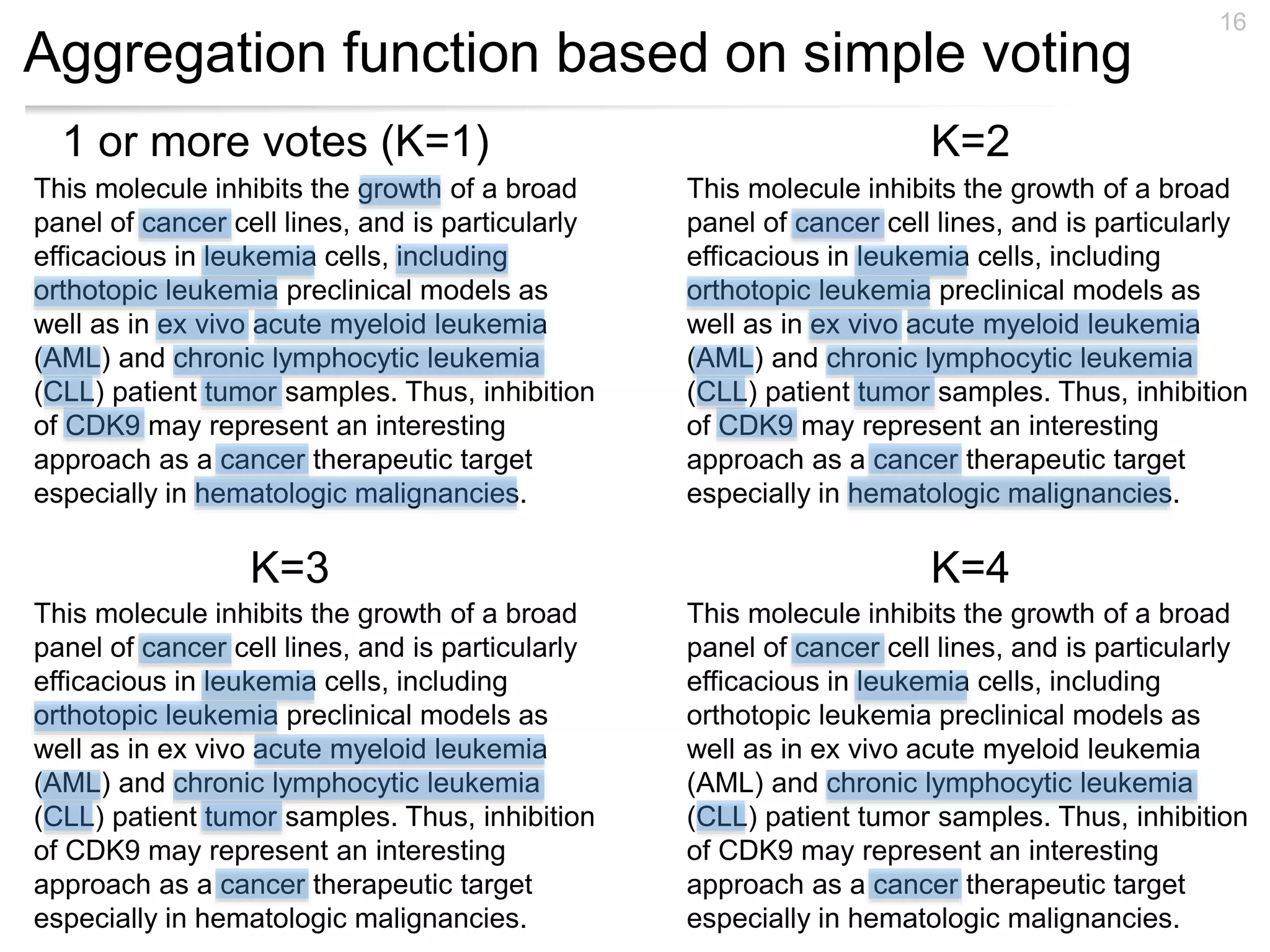

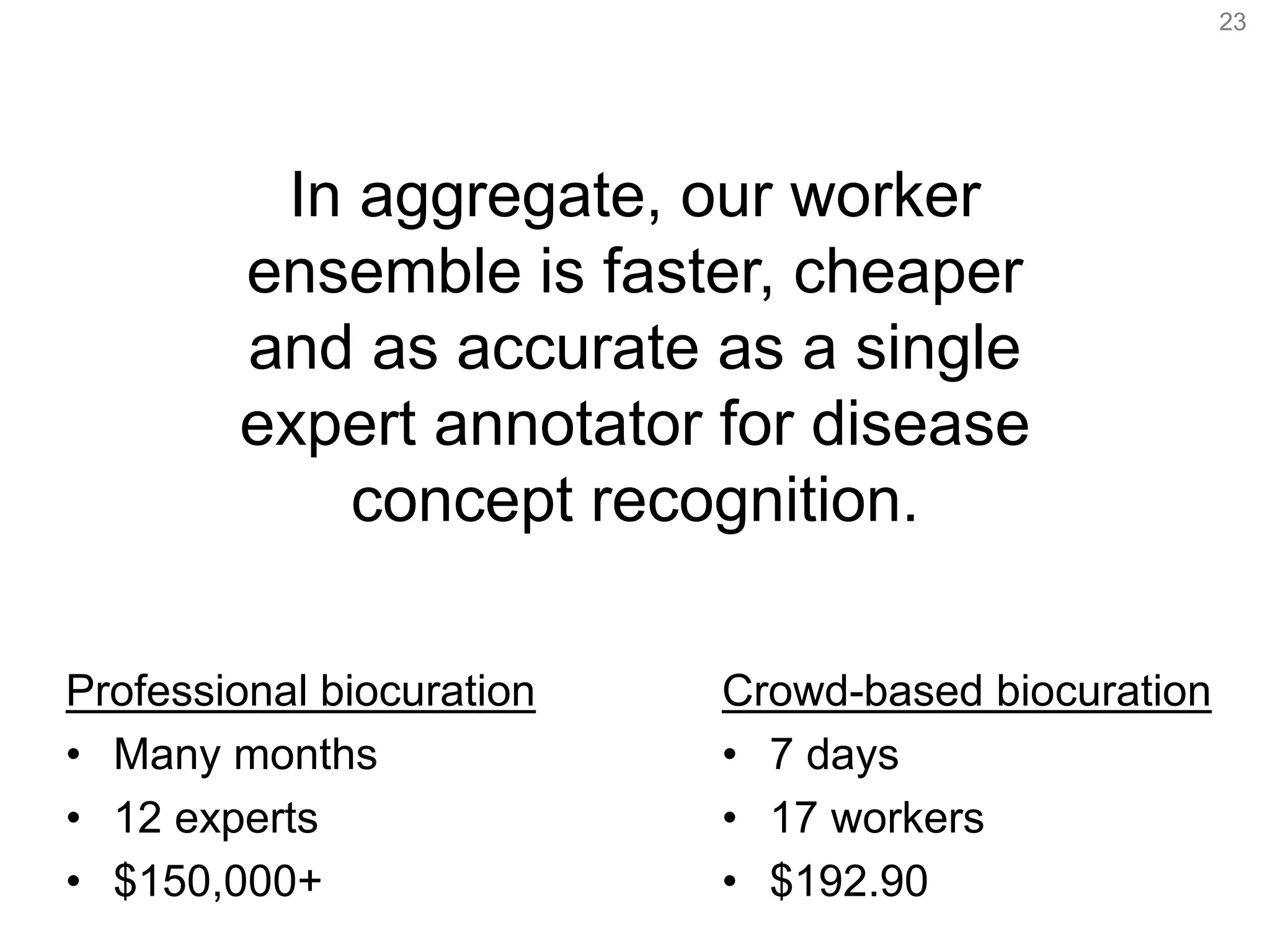

This document describes a microtask crowdsourcing approach in which non-scientists, recruited via Amazon Mechanical Turk, annotate disease mentions in PubMed abstracts. The findings show that the crowd's annotations are as accurate as those of expert annotators while being significantly cheaper and faster to produce, reaching an F-score of 0.81 for disease mention recognition. The document emphasizes the potential of crowdsourcing for biomedical information extraction and biocuration.
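To make the reported metric concrete, the following is a minimal sketch of how an F-score for mention recognition can be computed by comparing crowd annotations against an expert gold standard. It assumes exact span matching, with each mention represented as a hypothetical `(abstract_id, start, end)` tuple. The function name `mention_f_score` and the toy data are illustrative only; they are not taken from the study, and the study's own matching criteria may differ.

```python
def mention_f_score(predicted, gold):
    """Return (precision, recall, F-score) under exact span matching.

    Both arguments are iterables of (abstract_id, start, end) tuples.
    This representation is an assumption made for illustration.
    """
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)  # spans found in both sets

    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0

    # F-score is the harmonic mean of precision and recall.
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


# Toy data (invented): four expert mentions, four crowd mentions, three shared.
gold_mentions = {(0, 10, 15), (0, 40, 52), (1, 3, 9), (1, 20, 31)}
crowd_mentions = {(0, 10, 15), (0, 40, 52), (1, 3, 9), (1, 50, 58)}

precision, recall, f_score = mention_f_score(crowd_mentions, gold_mentions)
print(f"precision={precision:.2f} recall={recall:.2f} F={f_score:.2f}")
```

In this toy case three of the four crowd spans match the gold standard, so precision, recall, and F-score all equal 0.75. An F-score of 0.81, as reported above, reflects a similar balance between spurious and missed mentions.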