CNN-LeNet Explained

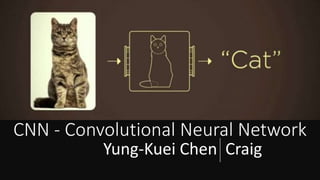

- 1. CNN - Convolutional Neural Network. Yung-Kuei (Craig) Chen

- 4. Summary: Why do we need a Convolutional Neural Network? (problems, solutions); LeNet overview (origin, result); LeNet techniques (structure)

- 5. Why do we need a Convolutional Neural Network?

- 8. Problems. Source: Volvo autopilot

- 9. Solution: [Figure: the camera image as the input of a function $f(\cdot)$]. Source: Volvo autopilot

- 11. Introduction: Yann LeCun. Director of AI Research at Facebook. His main research interest is machine learning, particularly how it applies to perception, and more particularly to visual perception. LeNet paper: Gradient-Based Learning Applied to Document Recognition. Source: Yann LeCun, http://yann.lecun.com/

- 12. Introduction

- 13. Introduction

- 14. K-nearest neighbors vs. convolutional NN

- 15. Introduction. Revolutionary: even without the traditional machine learning approach of hand-designed features, the result* (error rate: 0.95%) was the best among the machine learning methods compared here. *LeNet-5. Source: Yann LeCun, http://yann.lecun.com/exdb/mnist/

- 16. Introduction. [Chart: test error rate (%) on MNIST, lower is better, for a linear classifier (1-layer NN); K-nearest-neighbors, Euclidean (L2); a 2-layer NN with 300 hidden units, MSE; an SVM with a Gaussian kernel; and the convolutional net LeNet-5]

- 17. Overview. Source: [LeCun et al., 1998], Gradient-Based Learning Applied to Document Recognition, p. 7

- 18. Input. Source: [LeCun et al., 1998], Gradient-Based Learning Applied to Document Recognition, p. 7

- 19. Input Layer. Data: MNIST handwritten digits (training set: 60,000 examples; test set: 10,000 examples). Source: http://yann.lecun.com/exdb/mnist/

- 21. Input Layer. Data: MNIST handwritten digits (training set: 60,000 examples; test set: 10,000 examples). Size: 28x28. Color: grayscale. Range: 0~255. Source: http://yann.lecun.com/exdb/mnist/

- 23. Input Layer – Constant (Zero) Padding. 1. To make the input fit the network's structure: LeNet-5 takes 32x32 inputs, so each 28x28 digit gets 2 pixels of zeros on every side. 2. To give edge pixels more chances to be covered by the filter. Source: http://xrds.acm.org/blog/2016/06/convolutional-neural-networks-cnns-illustrated-explanation/
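
A minimal plain-Python sketch of zero padding, assuming a single-channel image stored as a list of rows; `zero_pad` is a hypothetical helper name, not from the slides. It shows 2 pixels of padding turning a 28x28 MNIST-sized image into 32x32.

```python
def zero_pad(image, pad):
    """Return a copy of a 2-D image surrounded by `pad` rows/columns of zeros."""
    width = len(image[0]) + 2 * pad
    padded = [[0] * width for _ in range(pad)]            # top border
    for row in image:
        padded.append([0] * pad + list(row) + [0] * pad)  # left border + row + right border
    padded.extend([0] * width for _ in range(pad))        # bottom border
    return padded


# A blank 28x28 MNIST-sized image padded by 2 pixels on each side.
digit = [[0] * 28 for _ in range(28)]
padded = zero_pad(digit, 2)
print(len(padded), len(padded[0]))  # 32 32
```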

- 24. Without padding vs. with padding

- 25. Convolutional Layer. Source: [LeCun et al., 1998], Gradient-Based Learning Applied to Document Recognition, p. 7

- 26. Convolutional Layer – Function: extract features from the input image. Source: An Intuitive Explanation of Convolutional Neural Networks, https://ujjwalkarn.me/2016/08/11/intuitive-explanation-convnets/

- 27. Convolution. Convolution is a mathematical operation on two functions that produces a third function, typically viewed as a modified version of one of the original functions.
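
For a discrete 2-D image $I$ and kernel $K$, the textbook convolution and the cross-correlation that most CNN libraries actually compute (the kernel is not flipped) can be written as:

$$(I * K)[i, j] = \sum_{m}\sum_{n} I[m, n]\, K[i - m,\, j - n]$$

$$S[i, j] = \sum_{m}\sum_{n} I[i + m,\, j + n]\, K[m, n]$$

The second form is the "multiply, then sum" operation of the convolutional layer. Because the kernel values are learned, the missing flip makes no practical difference.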

- 28. Convolutional Layer Overview: convolutional layer = multiply (element-wise) + sum. [Figure: layer input, kernel, layer output] Source: https://mlnotebook.github.io/post/CNN1/

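- 29. Convolutional Layer – Kernel. Example 3x3 kernel: $\begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 1 \end{bmatrix}$. Kernels can vary in 1. size, 2. shape, 3. values, and 4. number (how many kernels a layer uses).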

- 30. Convolutional Layer – Computation: multiply, then sum. Source: https://cambridgespark.com/content/tutorials/convolutional-neural-networks-with-keras/index.html

- 31. Convolutional Layer – Computation. [Figure: layer input, kernel, layer output] Source: https://mlnotebook.github.io/post/CNN1/
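
A minimal plain-Python sketch of the multiply-and-sum computation, assuming a single-channel image stored as nested lists and no padding. `conv2d` is a hypothetical name; the 5x5 image is an illustrative input and the kernel is the 3x3 example kernel of slide 29.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding), multiply the overlapping
    entries element-wise and sum them. As in most CNN libraries, the kernel
    is not flipped (strictly speaking, this is cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for m in range(kh):  # multiply each overlapping pair and accumulate the sum
                for n in range(kw):
                    total += image[i * stride + m][j * stride + n] * kernel[m][n]
            row.append(total)
        output.append(row)
    return output


image = [[1, 1, 1, 0, 0],
         [0, 1, 1, 1, 0],
         [0, 0, 1, 1, 1],
         [0, 0, 1, 1, 0],
         [0, 1, 1, 0, 0]]
kernel = [[1, 0, 1],
          [0, 1, 0],
          [1, 0, 1]]

print(conv2d(image, kernel))            # [[4, 3, 4], [2, 4, 3], [2, 3, 4]]
print(conv2d(image, kernel, stride=2))  # [[4, 4], [2, 4]]
```

The 5x5 input shrinks to 3x3 with stride 1, and to 2x2 with stride 2.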

- 32. Convolutional Layer – Computation: 3x3 kernel, padding = 0, stride = 1, so the output shrinks. Source: https://leonardoaraujosantos.gitbooks.io/artificial-inteligence/content/convolutional_neural_networks.html

- 33. Convolutional Layer – Stride: stride = 1 vs. stride = 2. Source: Theano website

- 34. Convolutional Layer – Computation: 3x3 kernel, padding = 1, stride = 1, so the output keeps the same size. Source: Theano website
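
The three cases above follow the standard output-size relation $O = \lfloor (W - K + 2P) / S \rfloor + 1$ for input size $W$, kernel size $K$, padding $P$ and stride $S$. A small sketch, assuming square inputs and kernels; `conv_output_size` is a hypothetical helper, and the 5x5 input is only an illustrative size.

```python
def conv_output_size(input_size, kernel_size, padding=0, stride=1):
    """Spatial output size of a convolution along one dimension."""
    return (input_size - kernel_size + 2 * padding) // stride + 1


print(conv_output_size(5, 3, padding=0, stride=1))  # 3: shrunk output
print(conv_output_size(5, 3, padding=1, stride=1))  # 5: same size output
print(conv_output_size(5, 3, padding=0, stride=2))  # 2: larger stride, smaller output
```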

- 35. Convolutional Layer Overview. [Figure: layer input, kernel, layer output] Source: https://mlnotebook.github.io/post/CNN1/

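- 36. X filter $\begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix}$ and Y filter $\begin{bmatrix} 1 & 2 & 1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix}$ (the Sobel edge filters). [Figure: filtered result]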
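
A plain-Python sketch of what these two filters detect, assuming a tiny synthetic 5x5 image with one vertical edge (dark left, bright right); `filter2d` is a hypothetical helper using the same no-flip multiply-and-sum as a convolutional layer.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
SOBEL_Y = [[1, 2, 1], [0, 0, 0], [-1, -2, -1]]   # responds to horizontal edges


def filter2d(image, kernel):
    """Valid (no padding), stride-1 multiply-and-sum of a square kernel."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [[sum(image[i + m][j + n] * kernel[m][n]
                 for m in range(k) for n in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


# Dark left part (0) and bright right part (10): one vertical edge.
image = [[0, 0, 10, 10, 10] for _ in range(5)]
gx = filter2d(image, SOBEL_X)
gy = filter2d(image, SOBEL_Y)
# Combined edge strength at each position.
magnitude = [[math.hypot(x, y) for x, y in zip(rx, ry)] for rx, ry in zip(gx, gy)]
print(gx[0])         # [40, 40, 0]
print(gy[0])         # [0, 0, 0]
print(magnitude[0])  # [40.0, 40.0, 0.0]
```

The X filter fires where the windows straddle the edge and gives 0 in the flat bright region; the Y filter gives 0 everywhere because nothing changes from top to bottom.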

- 39. Convolutional Layer – Result: stacked layers extract low-level, mid-level, and high-level features. Source: Deep Learning in a Nutshell: Core Concepts, Nvidia, https://devblogs.nvidia.com/parallelforall/deep-learning-nutshell-core-concepts/

- 40. Pooling Layer (Subsampling). Source: [LeCun et al., 1998], Gradient-Based Learning Applied to Document Recognition, p. 7

- 41. Pooling Layer – Function: reduces the dimensionality of each feature map but retains the most important information. Source: An Intuitive Explanation of Convolutional Neural Networks, https://ujjwalkarn.me/2016/08/11/intuitive-explanation-convnets/

- 42. Pooling Layer Overview. Source: Using Convolutional Neural Networks for Image Recognition, https://www.embedded-vision.com/platinum-members/cadence/embedded-vision-training/documents/pages/neuralnetworksimagerecognition#3

- 43. Pooling Layer – Max Pooling. Source: Stanford CS231n, http://cs231n.github.io/convolutional-networks/

- 44. Pooling Layer – Max Pooling: kernel 2x2, stride 2, padding 0. Source: Tensorflow Day9: Convolutional Neural Network (CNN) Analysis (2) - Filter, ReLU, MaxPolling, https://ithelp.ithome.com.tw/articles/10187424
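
A minimal sketch of 2x2 max pooling with stride 2 and no padding, assuming a single feature map stored as nested lists; `max_pool` is a hypothetical name and the 4x4 map holds illustrative values.

```python
def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [[max(feature_map[i * stride + m][j * stride + n]
                 for m in range(size) for n in range(size))
             for j in range(out_w)]
            for i in range(out_h)]


fm = [[1, 1, 2, 4],
      [5, 6, 7, 8],
      [3, 2, 1, 0],
      [1, 2, 3, 4]]
print(max_pool(fm))  # [[6, 8], [3, 4]]
```

Each output keeps only the strongest response in its window and the map shrinks from 4x4 to 2x2. Note that the original LeNet-5 subsampling layers (S2, S4) sum each 2x2 window and scale it with a trainable coefficient and bias, rather than taking the max.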

- 46. Pooling Layer – Examples

- 47. Fully Connected Layer. Source: [LeCun et al., 1998], Gradient-Based Learning Applied to Document Recognition, p. 7

- 48. Fully Connected Layer – Function 1. Flatten the high-dimensional input.
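
A minimal sketch of flattening, assuming feature maps stored as nested lists; `flatten` is a hypothetical helper and the values are dummies. It uses the shape of LeNet-5's S4 output (16 feature maps of 5x5), which flattens to 400 values. In the paper the next layer, C5, is formally convolutional, but because its 5x5 kernels cover the whole 5x5 input it behaves like a fully connected layer on this vector.

```python
def flatten(feature_maps):
    """Turn a stack of 2-D feature maps into one flat list, map by map, row by row."""
    return [value for fmap in feature_maps for row in fmap for value in row]


# 16 feature maps of 5x5, the output size of LeNet-5's S4 layer (dummy values).
s4_output = [[[channel] * 5 for _ in range(5)] for channel in range(16)]
vector = flatten(s4_output)
print(len(vector))  # 400
```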

- 49. Fully Connected Layer – Function 2. Learn non-linear combinations of these features.

- 50. Fully Connected Layer Overview. "Fully connected" means that every neuron in one layer is connected to every neuron in the next layer.

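- 51. How does a neural network work? Input $(1, -1)$: $y_1 = \sigma\big((1 \times 1) + (-1 \times -2) + 1\big) = \sigma(4) \approx 0.98$ and $y_2 = \sigma\big((1 \times -1) + (-1 \times 1) + 0\big) = \sigma(-2) \approx 0.12$, where $\sigma$ is the sigmoid function. Source: Professor Hung-yi Lee, Deep Learning slides, p. 12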
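
The same two-neuron computation as a plain-Python sketch, assuming the weights and biases read off the slide's example; `dense` and `sigmoid` are hypothetical helper names.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation=sigmoid):
    """Fully connected layer: every output neuron sees every input."""
    return [activation(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]


x = [1, -1]
W = [[1, -2],   # weights into y1
     [-1, 1]]   # weights into y2
b = [1, 0]      # biases of y1 and y2
y = dense(x, W, b)
print([round(v, 2) for v in y])  # [0.98, 0.12]
```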

- 54. Output. Source: [LeCun et al., 1998], Gradient-Based Learning Applied to Document Recognition, p. 7

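- 55. Output – Loss Function (Least Squared Error). Output: $y_i = \sum_{j} (x_j - w_{ij})^2$, the squared Euclidean distance between the input vector $X$ and the parameter vector $W_i$ of class $i$. Loss function (cost function): evaluates the difference between the predicted value and the answer.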
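
A minimal sketch of this output and a least-squares loss built on it. As we read the LeNet paper, its simplest criterion averages, over the training samples, the output $y_i$ of the correct class; here the feature vectors and class vectors are tiny hypothetical values (LeNet-5's real F6 layer has 84 units), and `rbf_outputs` and `squared_error_loss` are hypothetical names.

```python
def rbf_outputs(x, class_vectors):
    """y_i = sum_j (x_j - w_ij)^2 for every class i."""
    return [sum((xj - wij) ** 2 for xj, wij in zip(x, w_i)) for w_i in class_vectors]


def squared_error_loss(batch, labels, class_vectors):
    """Mean, over the samples, of the output for the correct class."""
    total = sum(rbf_outputs(x, class_vectors)[label] for x, label in zip(batch, labels))
    return total / len(batch)


# Hypothetical 3-value feature vectors and two hypothetical class vectors.
class_vectors = [[1, -1, 1], [-1, 1, 1]]
print(rbf_outputs([1, -1, 0], class_vectors))                               # [1, 9]
print(squared_error_loss([[1, -1, 0], [-1, 1, 1]], [0, 1], class_vectors))  # 0.5
```

A smaller output means the input is closer to that class, so training pushes the correct class's output toward 0.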

- 56. Output – One-hot encoding. Label: 9. Goal: make sure the difference between any pair of digits is the same.

- 57. Output – One-hot encoding. With plain numeric labels, 9 - 8 = 1 (closer!) but 9 - 5 = 4 (farther!), so some digits would look more alike than others. One-hot encoding makes sure the difference between any pair of digits is the same.

- 58. Output – One-hot encoding: each digit 0 to 9 is represented by a 10-value vector with a single 1 at that digit's position.

- 59. Output – One-hot encoding: the codes of any two different digits differ in exactly two positions, so the squared distance between them is $1^2 + 1^2 = 2$ (distance between two dots: $\sqrt{2}$) for every pair.
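
A quick check of this claim in plain Python, assuming codes are lists of 0/1 values; `one_hot` and `squared_distance` are hypothetical helper names.

```python
from itertools import combinations


def one_hot(digit, num_classes=10):
    """Vector of zeros with a single 1 at position `digit`."""
    return [1 if i == digit else 0 for i in range(num_classes)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


codes = [one_hot(d) for d in range(10)]
pair_distances = {squared_distance(codes[a], codes[b]) for a, b in combinations(range(10), 2)}
print(one_hot(9))      # [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
print(pair_distances)  # {2}: every pair of digits is equally far apart

# Plain numeric labels would not be: |9 - 8| = 1, but |9 - 5| = 4.
print(abs(9 - 8), abs(9 - 5))  # 1 4
```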

- 60. Output – How can we estimate the result? [Figure: the one-hot codes for 0 to 9]

- 61. Output: the network's output is compared with the codes for 0 to 9, and the result is 9. P.S.: The digits in the matrix are only for illustration, not the real calculation.
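
A minimal sketch of one way to read off the result, assuming the prediction is the code with the smallest squared Euclidean distance to the network's output. `predict` is a hypothetical name and the output vector is made up for illustration.

```python
def predict(output, codes):
    """Index of the code closest (squared Euclidean distance) to `output`."""
    distances = [sum((o - c) ** 2 for o, c in zip(output, code)) for code in codes]
    return min(range(len(codes)), key=lambda k: distances[k])


codes = [[1 if i == d else 0 for i in range(10)] for d in range(10)]
# Hypothetical network output that is strongest at position 9.
output = [0.1, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.1, 0.0, 0.9]
print(predict(output, codes))  # 9
```

With one-hot codes the closest code is always the position of the largest output value, so this is equivalent to taking the argmax.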

- 62. Overview. Source: [LeCun et al., 1998], Gradient-Based Learning Applied to Document Recognition, p. 7
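
To tie the pieces together, a sketch that traces feature-map sizes through LeNet-5 using the output-size relation. The layer list (32x32 input, 5x5 convolutions, 2x2 subsampling with stride 2, then 120, 84 and 10 units) follows the LeNet-5 architecture in the paper; `out_size` and `trace_shapes` are hypothetical helpers, and C3's partial connections to S2 are not modeled.

```python
def out_size(size, kernel, stride=1, padding=0):
    return (size - kernel + 2 * padding) // stride + 1


# (layer name, kernel size, stride, number of feature maps)
LENET5_FEATURE_LAYERS = [
    ("C1", 5, 1, 6),    # convolution
    ("S2", 2, 2, 6),    # subsampling
    ("C3", 5, 1, 16),   # convolution
    ("S4", 2, 2, 16),   # subsampling
    ("C5", 5, 1, 120),  # convolution with a 1x1 output, so it acts as fully connected
]


def trace_shapes(input_size=32):
    size = input_size
    shapes = [("INPUT", size, size, 1)]
    for name, kernel, stride, maps in LENET5_FEATURE_LAYERS:
        size = out_size(size, kernel, stride)
        shapes.append((name, size, size, maps))
    shapes += [("F6", 1, 1, 84), ("OUTPUT", 1, 1, 10)]  # fully connected layer, then 10 outputs
    return shapes


for shape in trace_shapes():
    print(shape)
```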

- 63. Demo

- 64. Thank you. Yung-Kuei (Craig) Chen

Editor's Notes

- Most of the features from convolutional and pooling layers may be good for the classification task, but combinations of those features might be even better