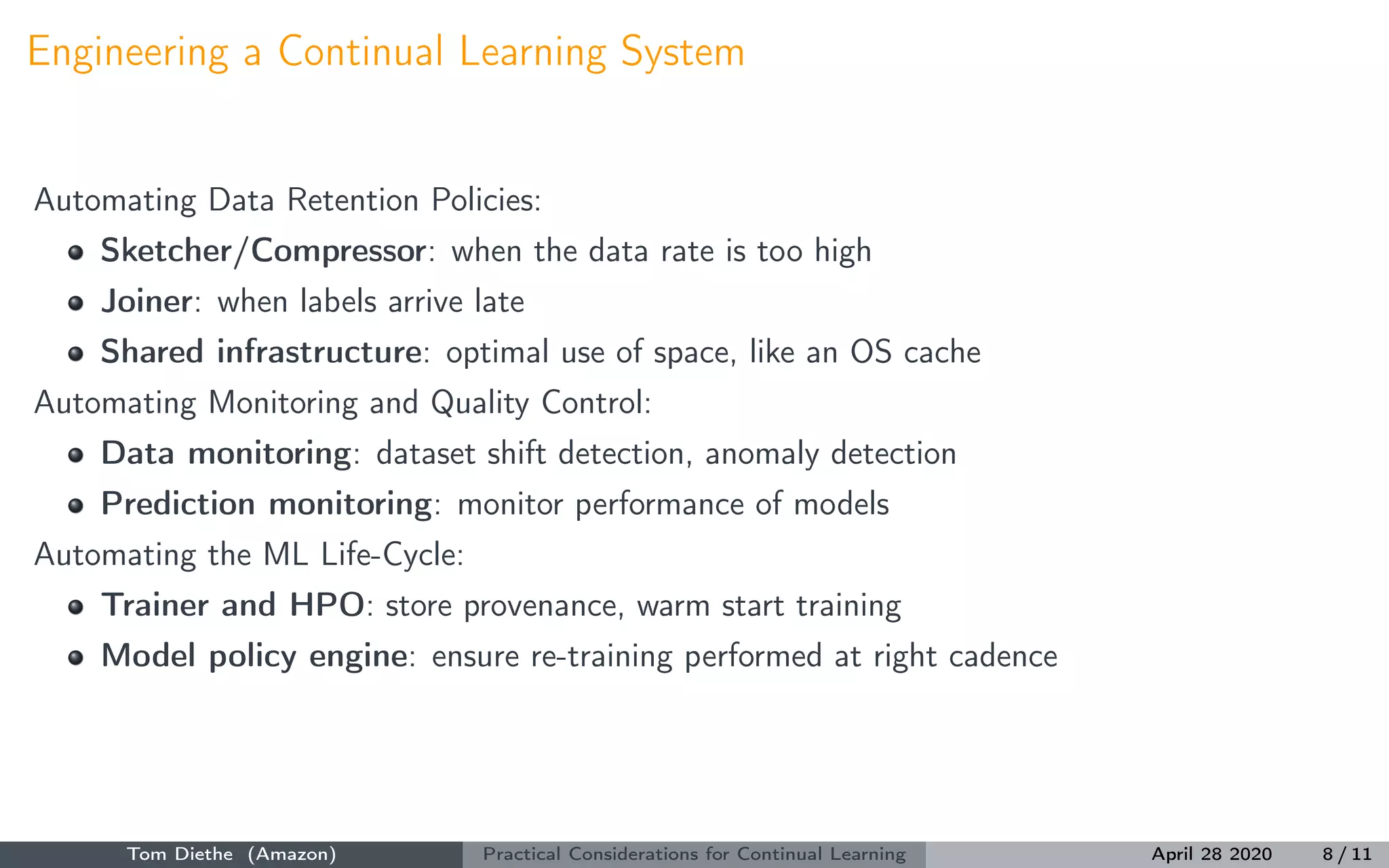

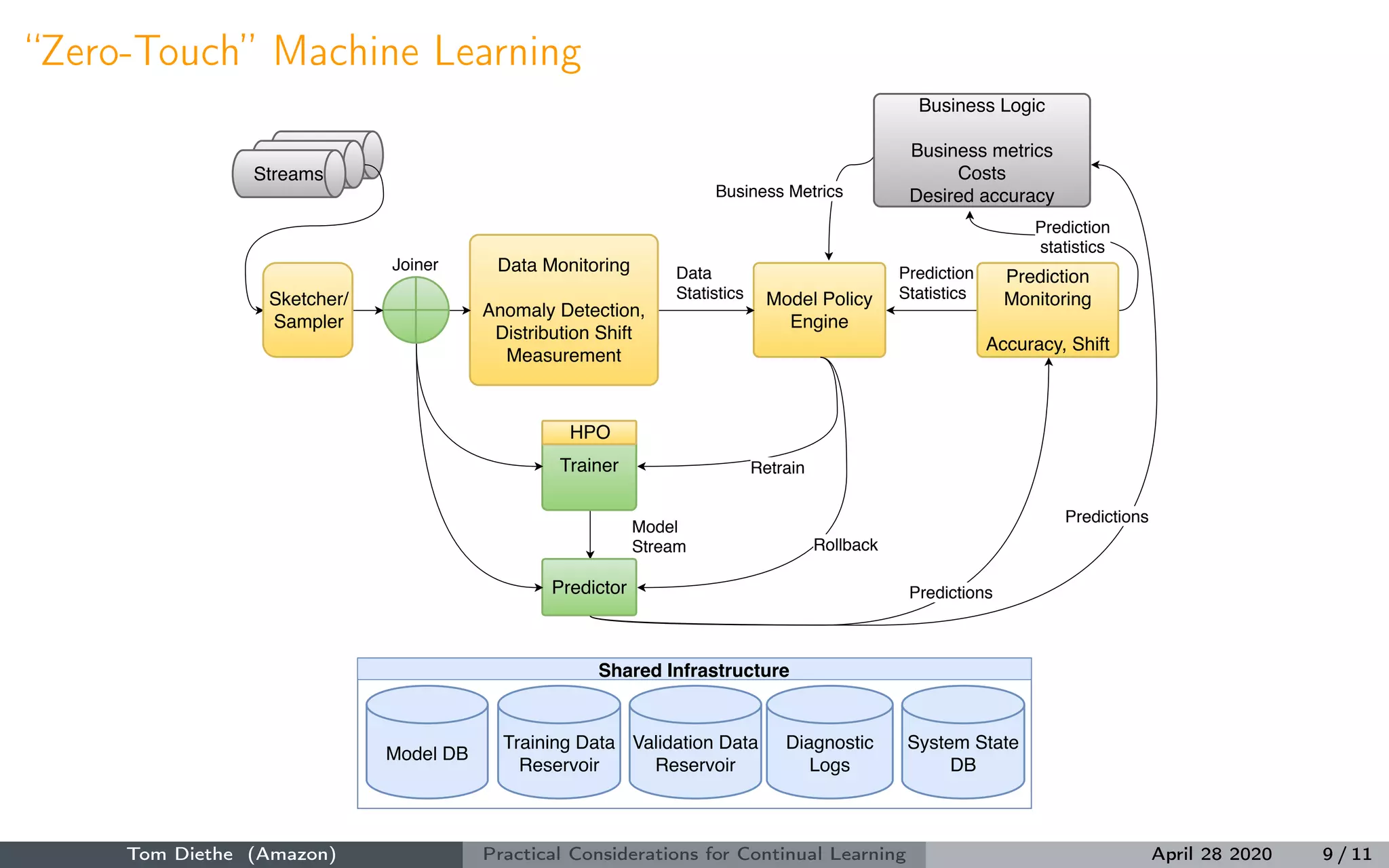

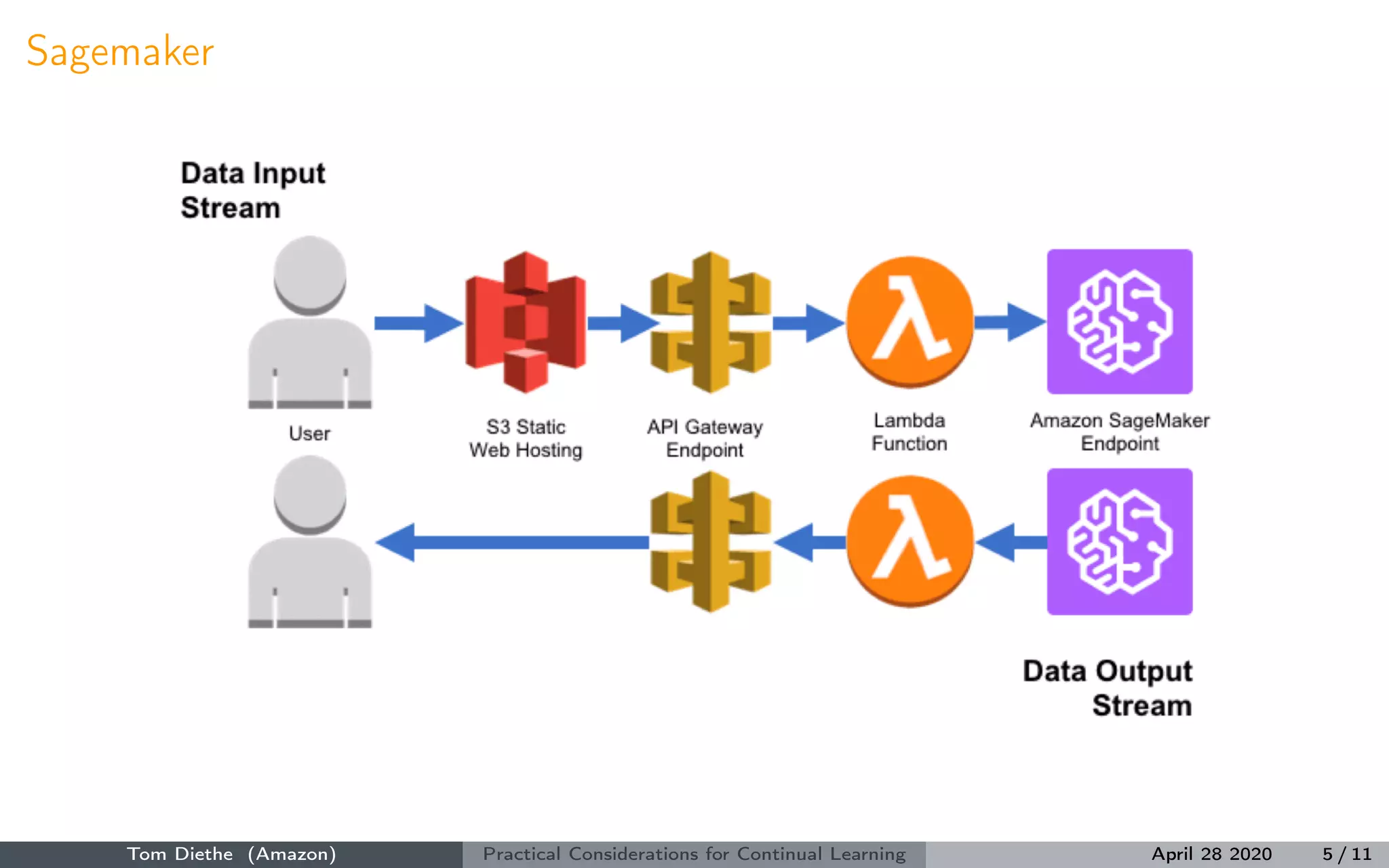

The document discusses practical considerations for implementing continual learning in AI systems, emphasizing the importance of robustness and transparency as data continuously evolves. It highlights specific challenges such as dataset shifts and the need for efficient data management and model training systems. Lastly, it addresses the application of Bayesian methods in continual learning and the current limitations in their integration with deep learning technologies.

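![Slide: Bayesian Continual Learning [Nguyen 2018]](https://image.slidesharecdn.com/continual-ai-meetup-200429200303/75/Practical-Considerations-for-Continual-Learning-16-2048.jpg)

Bayesian Continual Learning [Nguyen 2018]

Given, for example, the data in task $t$ as $\mathcal{D}_t = \{(x_t^{(n_t)}, y_t^{(n_t)})\}_{n_t=1}^{N_t}$ and parameters $\theta$ (e.g. BLR, BNN, GP, ...):

$$
\begin{aligned}
p(\theta \mid \mathcal{D}_{1:T}) &\propto p(\theta)\, p(\mathcal{D}_{1:T} \mid \theta) \\
&= p(\theta) \prod_{t=1}^{T} \prod_{n_t=1}^{N_t} p\big(y_t^{(n_t)} \mid \theta, x_t^{(n_t)}\big) \\
&\propto p(\theta \mid \mathcal{D}_{1:T-1})\, p(\mathcal{D}_T \mid \theta).
\end{aligned}
$$

Natural recursive algorithm!

Tom Diethe (Amazon), Practical Considerations for Continual Learning, April 28 2020, slide 7 of 11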
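The recursion on this slide, where the posterior after tasks 1 to T-1 serves as the prior for task T, can be illustrated with a minimal sketch. It assumes the simplest BLR case: a single scalar weight `w` in `y = w * x + noise`, a Gaussian prior, and a known noise precision. The `Gaussian` class, the `update` function, the noise precision of 4.0, and the toy task data are all hypothetical choices made for illustration and do not come from the talk. In this conjugate setting, updating task by task should give the same posterior as fitting all the data at once.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian:
    """A 1-D Gaussian belief over the weight w, stored as mean and precision."""
    mean: float
    precision: float  # inverse variance


def update(prior, xs, ys, noise_precision=4.0):
    """Exact posterior over w for y = w * x + noise, given a Gaussian prior.

    noise_precision is an assumed, known inverse noise variance.
    """
    precision = prior.precision + noise_precision * sum(x * x for x in xs)
    mean = (
        prior.precision * prior.mean
        + noise_precision * sum(x * y for x, y in zip(xs, ys))
    ) / precision
    return Gaussian(mean, precision)


# Hypothetical toy tasks: each is a pair (inputs, targets).
tasks = [
    ([0.5, 1.0, 1.5], [1.1, 1.9, 3.2]),
    ([2.0, 2.5], [3.9, 5.1]),
    ([-1.0, 3.0, 0.2], [-2.1, 6.2, 0.3]),
]

prior = Gaussian(mean=0.0, precision=1.0)

# Continual (recursive) update: yesterday's posterior is today's prior.
posterior = prior
for xs, ys in tasks:
    posterior = update(posterior, xs, ys)

# Batch update on all data at once, for comparison.
all_x = [x for xs, _ in tasks for x in xs]
all_y = [y for _, ys in tasks for y in ys]
batch = update(prior, all_x, all_y)

# The two routes agree up to floating-point error.
assert math.isclose(posterior.mean, batch.mean)
assert math.isclose(posterior.precision, batch.precision)
```

The equivalence is exact here only because the model is conjugate. For models such as BNNs the posterior has no closed form, so each recursive step has to be approximated, and the approximation errors can accumulate across tasks.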
