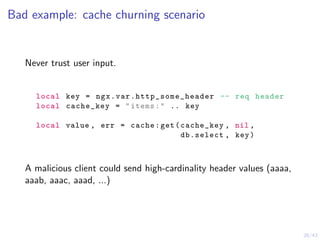

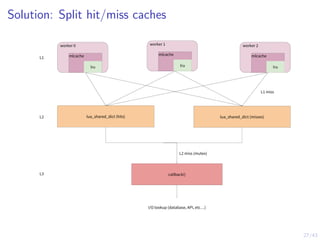

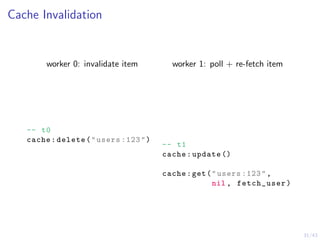

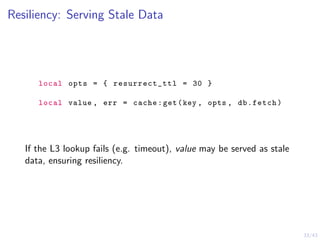

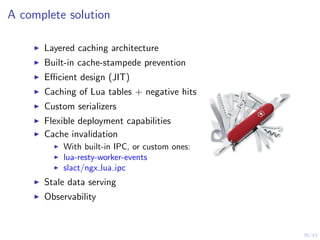

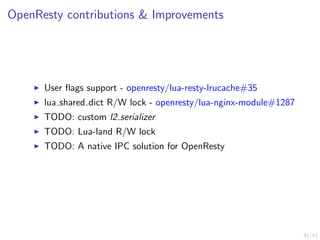

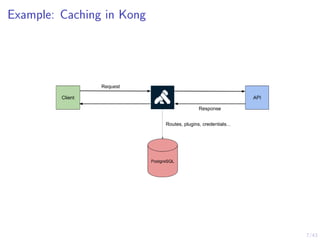

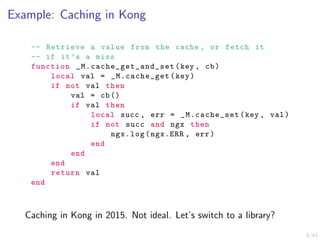

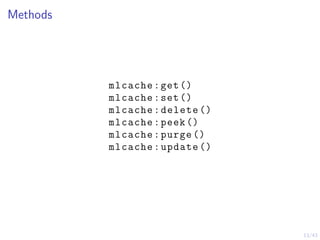

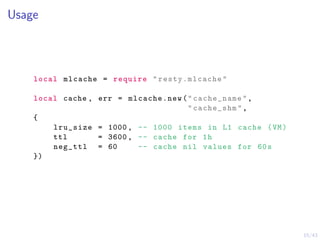

The document discusses the implementation of a layered caching architecture in OpenResty, focusing on the challenges of Lua-land caching and the introduction of a new caching library named 'lua-resty-mlcache'. It covers various caching methods, practical examples, and features such as cache invalidation, error handling, and observability metrics. The library has been utilized in Kong and has received contributions from Cloudflare and Kong Inc., showcasing its effectiveness and stability across different OpenResty versions.
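To make the idea of a layered lookup concrete, here is a minimal sketch in plain Lua. It is a toy model under stated assumptions, not lua-resty-mlcache's implementation: `l1` stands in for a per-worker Lua table, `l2` for a store shared by all workers (a `lua_shared_dict` in OpenResty), and the callback for the slow source. The names `new_layered_cache`, `fetch_user`, and `l3_calls` are hypothetical.

```lua
-- Toy model of a layered lookup; NOT lua-resty-mlcache's implementation.
-- l1: private to one worker, l2: shared between workers, callback: slow source.

local function new_layered_cache(l2)
    local l1 = {}                      -- private to this "worker"
    local self = { l3_calls = 0 }      -- counts how often the callback ran

    function self.get(key, callback, ...)
        local value = l1[key]
        if value ~= nil then
            return value, nil, 1       -- found in level 1
        end

        value = l2[key]
        if value ~= nil then
            l1[key] = value            -- promote to level 1 for next time
            return value, nil, 2       -- found in level 2
        end

        self.l3_calls = self.l3_calls + 1
        local err
        value, err = callback(...)
        if err then
            return nil, err            -- failures are not stored in this sketch
        end

        l2[key] = value                -- populate both layers on the way back
        l1[key] = value
        return value, nil, 3           -- fetched by the callback
    end

    return self
end

-- Two simulated workers sharing one level-2 store.
local shared = {}
local worker_a = new_layered_cache(shared)
local worker_b = new_layered_cache(shared)

local function fetch_user(id)          -- hypothetical slow lookup
    return "user:" .. id
end

local v1, _, lvl1 = worker_a.get("u42", fetch_user, 42)  -- misses both layers
local v2, _, lvl2 = worker_a.get("u42", fetch_user, 42)  -- served from level 1
local v3, _, lvl3 = worker_b.get("u42", fetch_user, 42)  -- served from level 2
print(v1, lvl1, lvl2, lvl3)
```

The second worker never runs the callback because the first one already filled the shared layer, which is the main payoff of placing a shared store between the per-worker cache and the slow source.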

![Slide 22/43: Example: DNS records caching](https://image.slidesharecdn.com/layeredcachinginopenresty-181206003447/85/Layered-caching-in-OpenResty-OpenResty-Con-2018-24-320.jpg)

Example: DNS records caching

```lua
local resolver = require "resty.dns.resolver"

-- lua-resty-dns constructs resolvers with ":" and returns (resolver, err)
local r, err = resolver:new({ nameservers = { "1.1.1.1" } })

local function resolve(name)
    local answers, err = r:query(name)
    if not answers then
        return nil, err -- surface the failure to the cache:get() caller
    end

    local ip = answers[1]
    local ttl = answers[1].ttl

    return ip, nil, ttl -- provide TTL from result
end

local host = "openresty.org"

-- `cache` is assumed to be an existing mlcache instance
local answers, err = cache:get(host, nil, resolve,
                               host) -- callback arg
```
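The point of the slide is that the callback's third return value sets the entry's TTL, so a DNS answer is cached for as long as the record itself allows. The sketch below models that behavior in plain Lua so it can run outside OpenResty. It is an illustration under assumptions, not the library's code: `new_ttl_cache`, `fake_resolve`, and the injected `now` clock are hypothetical names, and the address and TTL are made-up sample values.

```lua
-- Toy model of a callback-supplied TTL; NOT lua-resty-mlcache's code.
-- The clock is injected so the sketch does not depend on ngx.now().

local function new_ttl_cache(default_ttl, now)
    local store = {}
    local self = {}

    function self.get(key, callback, ...)
        local entry = store[key]
        if entry and now() < entry.expires_at then
            return entry.value         -- still fresh, skip the callback
        end

        local value, err, ttl = callback(...)
        if err then
            return nil, err            -- errors are not cached in this sketch
        end

        -- a TTL returned by the callback overrides the cache-wide default
        store[key] = {
            value = value,
            expires_at = now() + (ttl or default_ttl),
        }
        return value
    end

    return self
end

local clock = 0                        -- simulated time, in seconds
local lookups = 0                      -- how many times the callback ran

local function fake_resolve(name)      -- stands in for a real DNS query
    lookups = lookups + 1
    local record = { name = name, address = "192.0.2.1", ttl = 30 }
    return record, nil, record.ttl
end

local cache = new_ttl_cache(3600, function() return clock end)

cache.get("example.test", fake_resolve, "example.test")  -- miss: runs callback
clock = 29
cache.get("example.test", fake_resolve, "example.test")  -- fresh: no lookup
clock = 30
cache.get("example.test", fake_resolve, "example.test")  -- expired at 30 s, not 3600 s
print(lookups)
```

Letting the callback choose the TTL keeps the expiry policy next to the data source, which is usually the only place that knows how long a value remains valid.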