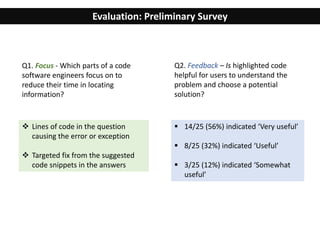

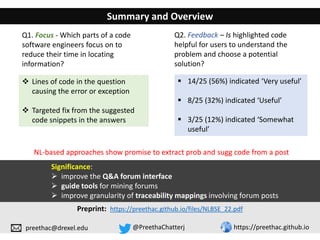

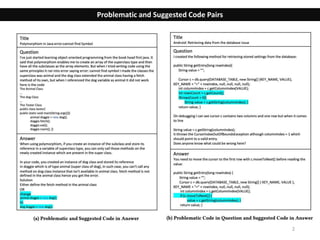

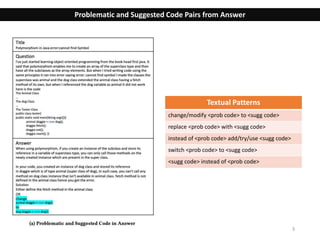

The document discusses the extraction of informative code from Stack Overflow posts, focusing on problematic and suggested code pairs that can enhance software engineers' understanding. A preliminary survey indicates a significant portion of users found highlighted code helpful for problem-solving. The research aims to improve user experience in Q&A forums and enhance tools for code navigation and extraction.

![Stack Overflow Usage: Challenges](https://image.slidesharecdn.com/nlbse-221013211328-8b2704e9/85/Automatic-Identification-of-Informative-Code-in-Stack-Overflow-Posts-2-320.jpg)

Information Overload

- The top 5 SO questions for the query “Differences Hashmap Hashtable Java” consist of 51 answers with a total of 6,771 words [Xu et al. 2017].
- Software engineers focus on only 27% of code in a post to understand and apply the relevant information to their context [Chatterjee et al. 2020].

We aim to: extract informative code (problematic and suggested code pairs) within a Stack Overflow post.

- Reformulation of queries [Li et al. ’16, Nie et al. ’16]
- High-quality post detection [Ponzanelli et al. ’14, Yao et al. ’15]
- Automatic tag recommendation [Beyer and Pinzger ’15, Saha et al. ’13]
- Identification of cue sentences for navigation [Nadi and Treude ’20]

![Problematic Code from Question and Suggested Code from Answer](https://image.slidesharecdn.com/nlbse-221013211328-8b2704e9/85/Automatic-Identification-of-Informative-Code-in-Stack-Overflow-Posts-5-320.jpg)

Identification of suggested code from the answer

- DECA [Di Sorbo et al. ’15]
- Identify short imperative sentences that suggest a solution (e.g., “Perform an interactive rebase”).

Identification of problematic code from the question

- Extract code elements from the suggested code.
- Determine the approximate location of the problematic code.
- Measure textual similarity between the problematic code in the question and the suggested code in the answer.
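
The steps for locating problematic code in a question could be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the slides do not say how code elements are extracted or which similarity measure is used, so the identifier regex, the Jaccard measure, and the function names (`code_elements`, `jaccard`, `locate_problematic_code`) are all assumptions made for the example.

```python
from __future__ import annotations

import re

# Assumption: a "code element" is any identifier-like token.
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def code_elements(code: str) -> set[str]:
    """Return the set of identifier-like tokens found in a code snippet."""
    return set(IDENTIFIER.findall(code))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two token sets (assumed measure, 0.0 if both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def locate_problematic_code(question_lines: list[str], suggested_code: str) -> tuple[int, float]:
    """Return (line index, score) of the question line most similar to the suggested code.

    Returns (-1, 0.0) when the question contains no code lines.
    """
    if not question_lines:
        return -1, 0.0
    suggested = code_elements(suggested_code)
    scores = [jaccard(code_elements(line), suggested) for line in question_lines]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Hypothetical question code and suggested fix, for illustration only.
question = [
    "Map<String, Integer> counts = new Hashtable<>();",
    "for (String w : words) {",
    "    counts.put(w, counts.get(w) + 1);",
    "}",
]
suggested = "counts.put(w, counts.getOrDefault(w, 0) + 1);"

index, score = locate_problematic_code(question, suggested)
print(index, round(score, 2))
```

In this example the third question line shares the most identifiers with the suggested code, so it is reported as the approximate location of the problematic code. A set-based measure ignores token order and frequency; a real pipeline would likely need a language-aware tokenizer and a threshold below which no pair is reported.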