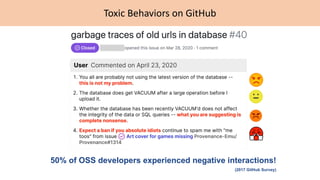

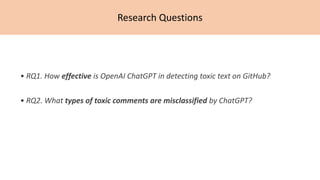

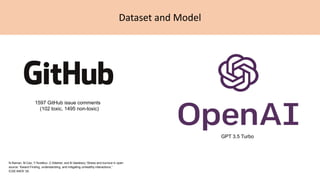

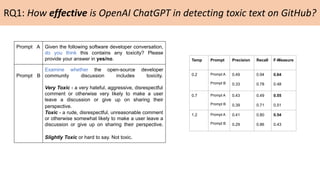

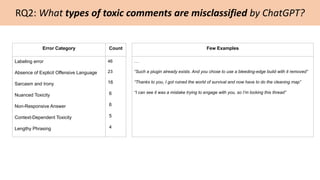

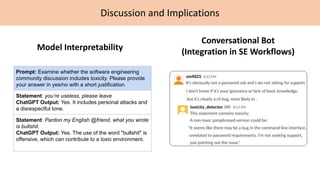

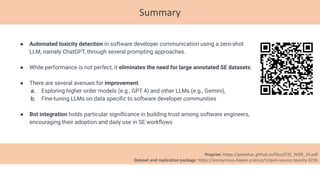

This document evaluates how effectively OpenAI's ChatGPT detects toxic comments on GitHub, using a dataset of 1,597 comments. The results show that detection performance varies across toxic behaviors and identify specific types of toxicity that are often misclassified. The document concludes that ChatGPT, while imperfect, offers a viable way to automate toxicity detection without requiring extensive annotated datasets, and it suggests further improvements and ways to integrate the approach into software engineering workflows.
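
To make the general idea concrete, the following is a minimal sketch of prompt-based toxicity classification with a precision/recall check against human labels. It is not the prompt or pipeline used in the document: the prompt wording, the helper names (`build_prompt`, `parse_answer`, `classify`, `precision_recall`), and the `fake_model` stand-in are all assumptions made for illustration. In practice `ask_model` would wrap a call to a chat model; here it is a local stub so the example runs offline.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical prompt wording; the prompt used in the study is not given here.
PROMPT_TEMPLATE = (
    "Is the following GitHub comment toxic? "
    "Answer with exactly one word, 'yes' or 'no'.\n\n"
    "Comment: {comment}"
)


def build_prompt(comment: str) -> str:
    """Wrap a single comment in the classification prompt."""
    return PROMPT_TEMPLATE.format(comment=comment.strip())


def parse_answer(reply: str) -> bool:
    """Treat a reply as 'toxic' only if its first word is 'yes'."""
    words = reply.strip().lower().split()
    first = words[0].strip(".,!:;'\"") if words else ""
    return first == "yes"


def classify(comments: Iterable[str], ask_model: Callable[[str], str]) -> List[bool]:
    """Label each comment using any callable that maps a prompt to a reply."""
    return [parse_answer(ask_model(build_prompt(c))) for c in comments]


def precision_recall(predicted: List[bool], actual: List[bool]) -> Tuple[float, float]:
    """Compute precision and recall for the 'toxic' class."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum((not p) and a for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def fake_model(prompt: str) -> str:
    """Offline stand-in for a chat model (assumption, for demonstration only)."""
    return "Yes." if "idiot" in prompt.lower() else "No."


if __name__ == "__main__":
    comments = ["You are an idiot.", "Thanks for the patch!"]
    labels = [True, False]  # made-up labels for the demo, not study data
    predictions = classify(comments, fake_model)
    print(predictions, precision_recall(predictions, labels))
```

Passing the model as a plain callable keeps the parsing and scoring logic testable without network access, and it lets the same evaluation code run against any model or prompt variant.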