Introduction to Apache Hadoop Ecosystem

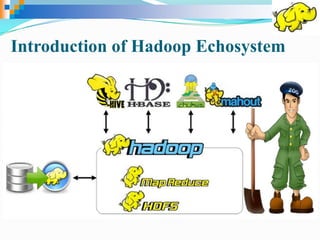

- 1. Introduction to the Hadoop Ecosystem

- 2. Agenda • Hadoop Brief History • What Is Hadoop • Hadoop Distributions • Core Components of Hadoop • Hadoop Base Platform • Hadoop Cluster • Hadoop Distributed File System • Hadoop MapReduce • HBase • Hive • Pig • RHadoop • RHive

- 3. Data! We live in the data age. The size of the “digital universe” was estimated at 0.18 zettabytes in 2006, with a tenfold growth to 1.8 zettabytes forecast by 2011. A zettabyte is 10^21 bytes, or equivalently one thousand exabytes, one million petabytes, or one billion terabytes. This flood of data is coming from many sources. Consider the following: • The New York Stock Exchange generates about one terabyte of new trade data per day. • Facebook hosts approximately 10 billion photos, taking up one petabyte of storage. So there’s a lot of data out there.

- 4. Data Storage and Analysis: One-terabyte drives are the norm, but the transfer speed is around 100 MB/s, so it takes more than two and a half hours to read all the data off the disk. This is a long time to read all the data on a single drive, and writing is even slower. Imagine if we had 100 drives, each holding one hundredth of the data. Working in parallel, we could read the data in under two minutes. The first problem to solve is hardware failure: as soon as you start using many pieces of hardware, the chance that one will fail is fairly high. A common way of avoiding data loss is through replication: the system keeps redundant copies of the data so that, in the event of failure, another copy is available. This is where a distributed filesystem (DFS) comes in. The second problem is combining the data: most analysis tasks need to combine the data in some way, and data read from one disk may need to be combined with data from any of the other 99 disks. Various distributed systems allow data to be combined from multiple sources, but doing this correctly is notoriously challenging. MapReduce provides a programming model that abstracts the problem from disk reads and writes, transforming it into a computation over sets of keys and values.
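The read-time figures above can be checked with a few lines of arithmetic. This sketch assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and that the 100 MB/s rate is sustained for the whole read; `read_time_seconds` is a hypothetical helper, not part of Hadoop.

```python
# Back-of-the-envelope read times (assumes decimal units and a
# sustained 100 MB/s transfer rate per drive).
TERABYTE = 10**12
MEGABYTE = 10**6
RATE = 100 * MEGABYTE  # bytes per second, per drive


def read_time_seconds(total_bytes, drives, rate=RATE):
    """Seconds to read total_bytes spread evenly over `drives` drives
    that are all read in parallel at the same rate."""
    return (total_bytes / drives) / rate


single = read_time_seconds(TERABYTE, drives=1)
parallel = read_time_seconds(TERABYTE, drives=100)

print(f"1 drive:    {single / 3600:.2f} hours")    # 2.78 hours
print(f"100 drives: {parallel / 60:.2f} minutes")  # 1.67 minutes
```

The speedup is linear only because each drive holds an equal share and nothing else is a bottleneck; combining the partial results is the second problem the slide describes.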

- 5. A Brief History of Hadoop • Hadoop has its origins in Apache Nutch, an open source web search engine, itself a part of the Lucene project. Apache Nutch is a project of the Apache Software Foundation; Nutch was started in 2002. However, its developers realized that their architecture wouldn’t scale to the billions of pages on the Web. Help was at hand with the publication of a paper in 2003 that described the architecture of Google’s distributed filesystem, called GFS, which was being used in production at Google. • GFS architecture: a GFS cluster consists of multiple nodes, divided into two types: one Master node and a large number of Chunkservers. • In 2004, the Nutch developers set about writing an open source implementation, the Nutch Distributed Filesystem (NDFS). • Hadoop was created by Doug Cutting and Michael J. Cafarella in 2005. Doug, who was working at Yahoo at the time, named it after his son's toy elephant. It was originally developed to support distribution for the Nutch search engine project.

- 8. What Hadoop is, and what it’s not

- 9. The Core of Hadoop: MapReduce. Created at Google in 2004, the MapReduce framework is the powerhouse behind most of today’s big data processing. In addition to Hadoop, you’ll find MapReduce inside MPP and NoSQL databases, such as Vertica or MongoDB. At its core, Hadoop is an open source MapReduce implementation (February 2006), funded by Yahoo. Its key strength is the ability of MapReduce to distribute computation over multiple servers. HDFS: for that computation to take place, each server must have access to the data. This is the role of HDFS, the Hadoop Distributed File System. HDFS and MapReduce are robust: servers in a Hadoop cluster can fail without aborting the computation process. HDFS ensures data is replicated with redundancy across the cluster. On completion of a calculation, a node writes its results back into HDFS. • Improving programmability: Pig and Hive • Improving data access: HBase, Sqoop and Flume

- 10. The Hadoop Bestiary

| Component | Description |
|---|---|
| Ambari | Deployment, configuration and monitoring |
| Flume | Collection and import of log and event data |
| HBase | Column-oriented database scaling to billions of rows |
| HCatalog | Schema and data type sharing over Pig, Hive and MapReduce |
| HDFS | Distributed redundant file system for Hadoop |
| Hive | Data warehouse with SQL-like access |
| Mahout | Library of machine learning and data mining algorithms |
| MapReduce | Parallel computation on server clusters |
| Pig | High-level programming language for Hadoop computations |
| Oozie | Orchestration and workflow management |
| Sqoop | Imports data from relational databases |
| Whirr | Cloud-agnostic deployment of clusters |
| ZooKeeper | Configuration management and coordination |

- 11. Apache Hadoop: Apache Hadoop is an open-source software framework that supports data-intensive distributed applications, licensed under the Apache v2 license. Hadoop was derived from Google's MapReduce and Google File System (GFS) papers.

- 12. Cloudera: Cloudera : Hadoop :: Red Hat : Linux. CDH (Cloudera's Distribution Including Apache Hadoop) is Cloudera's 100% open-source Hadoop distribution: a packaged set of Hadoop modules that work together, now at CDH4. Cloudera describes CDH as the world's leading Apache Hadoop distribution and itself as the largest contributor of code to Apache Hadoop.

- 13. Hortonworks Data Platform (HDP), Powered by Apache Hadoop: Installations are available for • Windows • Red Hat Linux. HDP can be installed and configured using the Hortonworks Management Center.

- 14. MapR: MapR’s Distribution for Apache Hadoop is a complete distribution that includes HBase™, Pig, Hive, Mahout, Cascading, Sqoop, Flume and more. MapR’s distribution is 100% API compatible with Hadoop (MapReduce, HDFS and HBase™). According to MapR Technologies, it has significantly advanced Hadoop by making it easy, dependable, and fast. On cloud: http://aws.amazon.com/elasticmapreduce/mapr/

- 15. Intel Distribution for Apache Hadoop Software: Open Platform for Next-Gen Analytics. Intel® Distribution for Apache Hadoop* software (Intel® Distribution) is a software platform that provides distributed processing and data management for enterprise applications that analyze massive amounts of diverse data. Intel Distribution is an open source software product that includes Apache Hadoop and other software components along with enhancements and fixes from Intel. According to Intel, it is proven in production at some of the most demanding enterprise deployments in the world and is supported by a worldwide engineering team with expertise in the entire software stack as well as the underlying processor, storage, and networking components. Key features (as stated by Intel): • Up to 30x boost in Hadoop performance with optimizations for Intel® Xeon processors, Intel® SSD storage, and Intel® 10GbE networking • Data confidentiality without a performance penalty, with encryption and decryption in HDFS enhanced by Intel® AES-NI, and role-based access control with cell-level granularity in HBase • Multi-site scalability and adaptive data replication in HBase and HDFS • Up to 3.5x improvement in Hive query performance • Support for statistical analysis with an R connector • Graph analytics with Intel® Graph Builder • Enterprise-grade support and services from Intel

- 16. Apache Hadoop HDInsight Service: HDInsight Service makes Apache Hadoop available as a service in the cloud. It makes the HDFS/MapReduce software framework available in a simpler, more scalable, and cost-efficient environment. It offers Apache Hadoop both in the cloud and on premises, and accommodates both Windows and Linux. The HDInsight Service Dashboard offers: • Interactive Console: run a Pig Latin job from the interactive JavaScript console; create and run a JavaScript MapReduce job; execute a job using Hive • Remote Desktop: the Hadoop command shell; view the JobTracker; view HDFS • Open Port: connect the Excel Hive Add-In to Hadoop on Azure via HiveODBC; FTP data to Hadoop on Azure • Manage Data: import data from Data Market; set up ASV (use your Windows Azure Blob Store account); set up S3 (use your Amazon S3 account)

- 17. Seamless Interoperability with Your Microsoft Tools

- 18. IBM InfoSphere BigInsights Bringing the power of Hadoop to the enterprise

- 20. Creating a Hadoop User: It’s good practice to create a dedicated Hadoop user account to separate the Hadoop installation from other services running on the same machine. For small clusters, some administrators choose to make this user’s home directory an NFS-mounted drive, to aid with SSH key distribution.

- 21. Hadoop Cluster

- 22. Network Topology: A common Hadoop cluster architecture consists of a two-level network topology, as illustrated in Figure 9-1. Typically there are 30 to 40 servers per rack, with a 1 Gb switch for the rack (only three racks are shown in the diagram), and an uplink to a core switch or router (which is normally 1 Gb or better). The salient point is that the aggregate bandwidth between nodes on the same rack is much greater than that between nodes on different racks.
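Hadoop reflects this by modeling the network as a tree and treating the distance between two nodes as the number of hops from each up to their closest common ancestor, so traffic inside a rack counts as "closer" than traffic across racks. A minimal sketch, assuming node locations are written as hypothetical `/datacenter/rack/node` paths (the helper name and path layout are illustrative, not Hadoop's API):

```python
def network_distance(a, b):
    """Number of hops from nodes a and b up to their closest common
    ancestor, with locations written as '/datacenter/rack/node'."""
    pa = a.strip("/").split("/")
    pb = b.strip("/").split("/")
    common = 0
    for x, y in zip(pa, pb):
        if x != y:
            break
        common += 1
    return (len(pa) - common) + (len(pb) - common)


print(network_distance("/d1/r1/n1", "/d1/r1/n1"))  # 0: same node
print(network_distance("/d1/r1/n1", "/d1/r1/n2"))  # 2: same rack
print(network_distance("/d1/r1/n1", "/d1/r2/n3"))  # 4: different racks
print(network_distance("/d1/r1/n1", "/d2/r3/n4"))  # 6: different data centers
```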

- 23. Hadoop Distributed File System • Files are split into 128 MB blocks • Blocks are replicated across several datanodes (usually 3) • A single namenode stores metadata (file names, block locations, etc.) • Optimized for large files and sequential reads • Files are append-only (Figure: a namenode and four datanodes; each of File1's four blocks is stored on three of the datanodes.)
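The splitting and replication described above can be sketched in a few lines. This assumes the 128 MB block size and replication factor of 3 from the slide; the round-robin placement and the helper names are hypothetical simplifications (real HDFS placement is rack-aware).

```python
import math

MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # block size from the slide
REPLICATION = 3        # "usually 3"


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Sizes of the blocks a file occupies; only the last may be smaller."""
    n = math.ceil(file_size / block_size)
    sizes = [block_size] * n
    if n and file_size % block_size:
        sizes[-1] = file_size % block_size
    return sizes


def place_replicas(num_blocks, datanodes, replication=REPLICATION):
    """Assign each block to `replication` distinct datanodes, round-robin.
    (Illustrative only: real HDFS placement is rack-aware.)"""
    if replication > len(datanodes):
        raise ValueError("not enough datanodes for the replication factor")
    return {
        block: [datanodes[(block + i) % len(datanodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


blocks = split_into_blocks(300 * MB)
layout = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
print([b // MB for b in blocks])  # [128, 128, 44]
print(layout[0])                  # ['dn1', 'dn2', 'dn3']
```

A final partial block only occupies as much space as its data, which is why the 44 MB tail is reported separately.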

- 24. Block Replication: The NameNode makes all decisions regarding replication of blocks. It periodically receives a Heartbeat and a Blockreport from each of the DataNodes in the cluster. Receipt of a Heartbeat implies that the DataNode is functioning properly. A Blockreport contains a list of all blocks on a DataNode.
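A toy model of that bookkeeping: heartbeats decide which DataNodes count as alive, and Blockreports say which blocks each one holds, which is enough to spot under-replicated blocks. The `NameNodeSketch` class and its 30-second timeout are hypothetical; real HDFS uses its own configurable intervals and far richer state.

```python
class NameNodeSketch:
    """Toy model of how a namenode could use heartbeats and blockreports."""

    def __init__(self, replication=3, heartbeat_timeout=30.0):
        self.replication = replication
        self.heartbeat_timeout = heartbeat_timeout  # seconds (assumed value)
        self.last_heartbeat = {}  # datanode -> time of its last heartbeat
        self.blocks_on = {}       # datanode -> block IDs from its last blockreport

    def heartbeat(self, datanode, now):
        self.last_heartbeat[datanode] = now

    def blockreport(self, datanode, block_ids):
        self.blocks_on[datanode] = set(block_ids)

    def live_datanodes(self, now):
        return {dn for dn, t in self.last_heartbeat.items()
                if now - t <= self.heartbeat_timeout}

    def under_replicated(self, now):
        """Map block ID -> live replica count, for blocks below the target."""
        live = self.live_datanodes(now)
        all_blocks = set().union(*self.blocks_on.values())
        counts = {b: sum(1 for dn in live if b in self.blocks_on.get(dn, ()))
                  for b in all_blocks}
        return {b: c for b, c in counts.items() if c < self.replication}


nn = NameNodeSketch()
for dn in ("dn1", "dn2", "dn3"):
    nn.heartbeat(dn, now=0)
nn.blockreport("dn1", ["b1", "b2"])
nn.blockreport("dn2", ["b1", "b2"])
nn.blockreport("dn3", ["b1"])
first = nn.under_replicated(now=10)
print(first)  # {'b2': 2}

# dn1 stops sending heartbeats; after the timeout its replicas no longer count.
nn.heartbeat("dn2", now=40)
nn.heartbeat("dn3", now=40)
later = nn.under_replicated(now=45)
print(later)  # b1 now has 2 live replicas, b2 has 1
```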

- 25. Anatomy of a File Write (HDFS): The case we’re going to consider is creating a new file, writing data to it, then closing the file. See the figure. 1. The client creates the file by calling create() on DistributedFileSystem. 2. DistributedFileSystem makes an RPC call to the namenode to create a new file in the filesystem’s namespace. 3. As the client writes data, DFSOutputStream splits it into packets, which it writes to an internal queue called the data queue. The data queue is consumed by the DataStreamer, which asks the namenode to allocate new blocks by picking a list of suitable datanodes to store the replicas. The list of datanodes forms a pipeline; we’ll assume the replication level is three, so there are three nodes in the pipeline. 4. The DataStreamer streams the packets to the first datanode in the pipeline, which stores each packet and forwards it to the second datanode in the pipeline. Similarly, the second datanode stores the packet and forwards it to the third (and last) datanode in the pipeline. 5. DFSOutputStream also maintains an internal queue of packets that are waiting to be acknowledged by datanodes, called the ack queue. A packet is removed from the ack queue only when it has been acknowledged by all the datanodes in the pipeline. (If a datanode fails while data is being written to it, the failed datanode is removed from the pipeline and the remainder of the block’s data is written to the two good datanodes in the pipeline. The namenode notices that the block is under-replicated, and it arranges for a further replica to be created on another node.) 6. When the client has finished writing data, it calls close() on the stream. 7. The close() call flushes all the remaining packets to the datanode pipeline and waits for acknowledgments before contacting the namenode to signal that the file is complete.
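A single-threaded sketch of the data queue / ack queue interplay, including the failure case. It is a simplification under stated assumptions: packets are acknowledged one at a time, and on failure every unacknowledged packet is put back at the front of the data queue and resent to the surviving datanodes. `write_block` and its `fail_at` parameter are hypothetical.

```python
from collections import deque


def write_block(num_packets, pipeline, fail_at=None):
    """Simulate writing packets through a datanode pipeline.

    fail_at: optional (datanode, packet_index); that datanode fails when the
    given packet reaches it. Returns (packets stored per datanode, final pipeline).
    """
    pipeline = list(pipeline)
    stored = {dn: set() for dn in pipeline}
    data_queue = deque(range(num_packets))  # packets waiting to be sent
    ack_queue = deque()                     # sent but not yet acknowledged

    while data_queue:
        idx = data_queue.popleft()
        ack_queue.append(idx)
        failed = None
        for dn in pipeline:              # each datanode stores, then forwards
            if fail_at == (dn, idx):
                failed = dn
                break
            stored[dn].add(idx)
        if failed is not None:
            # Drop the bad datanode and resend every unacknowledged packet
            # to the remaining (good) datanodes.
            pipeline.remove(failed)
            fail_at = None
            while ack_queue:
                data_queue.appendleft(ack_queue.pop())
            continue
        ack_queue.popleft()              # acknowledged by all datanodes


    return stored, pipeline


stored, final = write_block(5, ["dn1", "dn2", "dn3"], fail_at=("dn2", 2))
print(final)           # ['dn1', 'dn3']
print(len(final) < 3)  # True: under-replicated, so the namenode would add a replica
```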

- 26. Anatomy of a File Read (HDFS): To get an idea of how data flows between the client interacting with HDFS, the namenode and the datanodes, consider Figure 3-2, which shows the main sequence of events when reading a file. 1. The client opens the file it wishes to read by calling open() on the DistributedFileSystem object. 2. DistributedFileSystem calls the namenode, using RPC, to determine the locations of the first few blocks in the file, and returns an FSDataInputStream (which wraps a DFSInputStream) to the client. 3. The client then calls read() on the stream. DFSInputStream, which has stored the datanode addresses for the first few blocks in the file, then connects to the first (closest) datanode for the first block in the file. 4. Data is streamed from the datanode back to the client, which calls read() repeatedly on the stream. 5. When the end of the block is reached, DFSInputStream closes the connection to the datanode, then finds the best datanode for the next block. This happens transparently to the client, which from its point of view is just reading a continuous stream. 6. Blocks are read in order, with DFSInputStream opening new connections to datanodes as the client reads through the stream. It also calls the namenode to retrieve the datanode locations for the next batch of blocks as needed. When the client has finished reading, it calls close() on the FSDataInputStream.
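The read loop can be sketched as "for each block in order, pick the replica closest to the client and stream from it". This assumes distances come from the tree model of the network (hops to the closest common ancestor of `/datacenter/rack/node` paths); `read_file` and the in-memory `storage` dictionary standing in for datanodes are hypothetical.

```python
def network_distance(a, b):
    """Hops to the closest common ancestor of two '/dc/rack/node' paths."""
    pa, pb = a.strip("/").split("/"), b.strip("/").split("/")
    common = 0
    for x, y in zip(pa, pb):
        if x != y:
            break
        common += 1
    return (len(pa) - common) + (len(pb) - common)


def read_file(client, block_locations, storage):
    """Read blocks in order, each from the replica closest to the client.

    block_locations: list of (block_id, [datanode, ...]) as given by the namenode
    storage: dict (datanode, block_id) -> bytes, standing in for the datanodes
    """
    chunks, chosen = [], []
    for block_id, replicas in block_locations:
        best = min(replicas, key=lambda dn: network_distance(client, dn))
        chosen.append(best)
        chunks.append(storage[(best, block_id)])
    return b"".join(chunks), chosen


locations = [("b0", ["/d1/r2/dn3", "/d1/r1/dn1"]),
             ("b1", ["/d1/r2/dn4", "/d1/r3/dn5"])]
storage = {("/d1/r1/dn1", "b0"): b"hello ", ("/d1/r2/dn3", "b0"): b"hello ",
           ("/d1/r2/dn4", "b1"): b"world", ("/d1/r3/dn5", "b1"): b"world"}
data, chosen = read_file("/d1/r1/client", locations, storage)
print(data)    # b'hello world'
print(chosen)  # ['/d1/r1/dn1', '/d1/r2/dn4']
```

For `b1` both replicas are off-rack at the same distance, so `min` simply keeps the first one listed.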

- 28. MapReduce Programming Model: Data type: key-value records. Map function: $(K_{in}, V_{in}) \rightarrow \text{list}(K_{inter}, V_{inter})$. Reduce function: $(K_{inter}, \text{list}(V_{inter})) \rightarrow \text{list}(K_{out}, V_{out})$.
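The two signatures can be made concrete with a small, single-process sketch: `run_mapreduce` is a hypothetical helper that applies a map function to each input record, groups the intermediate values by key (the shuffle), and calls the reduce function once per key. It deliberately ignores distribution, partitioning and fault tolerance.

```python
from collections import defaultdict


def run_mapreduce(records, map_fn, reduce_fn):
    """Run map_fn over (k_in, v_in) records, group the intermediate pairs
    by key, then run reduce_fn once per key (keys in sorted order)."""
    intermediate = defaultdict(list)
    for k_in, v_in in records:                     # map phase
        for k_inter, v_inter in map_fn(k_in, v_in):
            intermediate[k_inter].append(v_inter)  # shuffle: group by key
    output = []
    for k_inter in sorted(intermediate):           # sort, then reduce phase
        output.extend(reduce_fn(k_inter, intermediate[k_inter]))
    return output


def map_words(offset, line):
    """Map: (byte offset, line) -> list of (word, 1)."""
    return [(word, 1) for word in line.split()]


def sum_counts(word, ones):
    """Reduce: (word, list of counts) -> list of (word, total)."""
    return [(word, sum(ones))]


records = [(0, "a b a"), (6, "b c")]
print(run_mapreduce(records, map_words, sum_counts))  # [('a', 2), ('b', 2), ('c', 1)]
```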

- 29. MapReduce Operation: (Figure: the data collection is split into split 1, split 2, …, split n to supply multiple processors; each split goes to a Map task, which emits <key, 1> pairs for words such as Cat, Bat, Dog and other words (input size: terabytes); Reduce (Count) tasks write the outputs P-0000, P-0001 and P-0002, containing count1, count2 and count3.) MAP: input data → <key, value> pairs. REDUCE: <key, value> pairs → <result>.

- 30. MapReduce Operation (Cont.): The whole data flow with a single reduce task is illustrated in Figure 2-3. The dotted boxes indicate nodes, the light arrows show data transfers on a node, and the heavy arrows show data transfers between nodes. Imagine the first map produced the output: (1950, 0) (1950, 20) (1950, 10). And the second produced: (1950, 25) (1950, 15). The reduce function would be called with a list of all the values: (1950, [0, 20, 10, 25, 15]), and, since it picks the maximum value, its output would be (1950, 25).
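The same example traced in plain Python: the two map outputs are merged by key, and a maximum-temperature reduce function is applied to the grouped list. The variable and function names are illustrative only.

```python
from collections import defaultdict

# Output of the two map tasks from the example above: (year, temperature).
map_outputs = [
    [(1950, 0), (1950, 20), (1950, 10)],
    [(1950, 25), (1950, 15)],
]

# Shuffle: gather every value for the same key, in map-task order.
grouped = defaultdict(list)
for task_output in map_outputs:
    for year, temp in task_output:
        grouped[year].append(temp)


def max_temperature(year, temps):
    """Reduce function: keep the highest reading for the year."""
    return year, max(temps)


print(dict(grouped))  # {1950: [0, 20, 10, 25, 15]}
results = [max_temperature(year, temps) for year, temps in sorted(grouped.items())]
print(results)        # [(1950, 25)]
```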

- 31. MapReduce Example: Word Count. def mapper(line): for word in line.split(): output(word, 1) · def reducer(key, values): output(key, sum(values))

- 32. Word Count Execution (Input → Map → Shuffle & Sort → Reduce → Output): Input lines: “the quick brown fox”, “the fox ate the mouse”, “how now brown cow”. Map outputs: (the, 1) (quick, 1) (brown, 1) (fox, 1); (the, 1) (fox, 1) (ate, 1) (the, 1) (mouse, 1); (how, 1) (now, 1) (brown, 1) (cow, 1). Reduce outputs: first reducer: brown, 2 · fox, 2 · how, 1 · now, 1 · the, 3; second reducer: ate, 1 · cow, 1 · mouse, 1 · quick, 1.
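A runnable, single-process version of the word-count pseudocode, using generators in place of the slides' `output(...)` calls. The `word_count` driver that performs the shuffle in memory is a hypothetical helper, not Hadoop's API.

```python
from collections import defaultdict


def mapper(line):
    for word in line.split():
        yield word, 1


def reducer(key, values):
    yield key, sum(values)


def word_count(lines):
    groups = defaultdict(list)          # shuffle & sort: values grouped by key
    for line in lines:
        for word, one in mapper(line):
            groups[word].append(one)
    counts = {}
    for key in sorted(groups):
        for word, total in reducer(key, groups[key]):
            counts[word] = total
    return counts


lines = ["the quick brown fox", "the fox ate the mouse", "how now brown cow"]
print(word_count(lines))
# {'ate': 1, 'brown': 2, 'cow': 1, 'fox': 2, 'how': 1, 'mouse': 1, 'now': 1, 'quick': 1, 'the': 3}
```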

- 33. An Optimization: The Combiner • A combiner is a local aggregation function for repeated keys produced by the same map task • Works for associative and commutative functions like sum, count, max • Decreases the size of intermediate data • Example: map-side aggregation for Word Count: def combiner(key, values): output(key, sum(values))

- 34. Word Count with Combiner (Input → Map & Combine → Shuffle & Sort → Reduce → Output): Input lines: “the quick brown fox”, “the fox ate the mouse”, “how now brown cow”. Map & combine outputs: (the, 1) (quick, 1) (brown, 1) (fox, 1); (the, 2) (fox, 1) (ate, 1) (mouse, 1); (how, 1) (now, 1) (brown, 1) (cow, 1). Reduce outputs: first reducer: brown, 2 · fox, 2 · how, 1 · now, 1 · the, 3; second reducer: ate, 1 · cow, 1 · mouse, 1 · quick, 1.
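The effect of the combiner can be measured directly: running the combiner on each map task's output before the shuffle merges the two (the, 1) pairs from the second line, so fewer intermediate pairs cross the network while the final counts are unchanged. `map_and_combine` is a hypothetical helper for this sketch.

```python
from collections import defaultdict


def mapper(line):
    for word in line.split():
        yield word, 1


def combiner(key, values):
    yield key, sum(values)


def map_and_combine(line):
    """Run the mapper, then aggregate repeated keys locally before the shuffle."""
    local = defaultdict(list)
    for word, one in mapper(line):
        local[word].append(one)
    return [pair for word in local for pair in combiner(word, local[word])]


lines = ["the quick brown fox", "the fox ate the mouse", "how now brown cow"]
without = sum(len(list(mapper(line))) for line in lines)
with_combiner = [map_and_combine(line) for line in lines]
print(with_combiner[1])  # [('the', 2), ('fox', 1), ('ate', 1), ('mouse', 1)]
print(without, sum(len(out) for out in with_combiner))  # 13 12
```

This works only because summing is associative and commutative: the reducer gets the same total whether it sees `[1, 1, 1]` or `[1, 2]` for "the".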

- 35. MapReduce Execution Details • A single master controls job execution on multiple slaves • Mappers are preferentially placed on the same node or the same rack as their input block, which minimizes network usage • Mappers save their outputs to local disk before serving them to reducers, which allows recovery if a reducer crashes and allows having more reducers than nodes
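The placement preference can be sketched as a three-tier choice made whenever a node has a free map slot: a task whose input block is on that node, else one whose block is on the same rack, else any task. `pick_map_task` and the sample cluster are hypothetical; the real JobTracker scheduler is more involved.

```python
def pick_map_task(free_node, pending, rack_of):
    """Choose a pending map task for a node with a free slot.

    pending: dict task_id -> list of nodes holding a replica of its input block
    rack_of: dict node -> rack
    Prefers node-local, then rack-local, then any remaining task.
    """
    for task, hosts in pending.items():
        if free_node in hosts:
            return task, "node-local"
    free_rack = rack_of[free_node]
    for task, hosts in pending.items():
        if any(rack_of[h] == free_rack for h in hosts):
            return task, "rack-local"
    for task in pending:
        return task, "off-rack"
    return None, None


rack_of = {"n1": "r1", "n2": "r1", "n3": "r2", "n4": "r2"}
pending = {"m0": ["n3", "n4"], "m1": ["n2"], "m2": ["n1", "n3"]}
print(pick_map_task("n1", pending, rack_of))                                  # ('m2', 'node-local')
print(pick_map_task("n2", {"m0": ["n3", "n4"], "m2": ["n1", "n3"]}, rack_of))  # ('m2', 'rack-local')
print(pick_map_task("n2", {"m0": ["n3", "n4"]}, rack_of))                     # ('m0', 'off-rack')
```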

- 36. Anatomy of a MapReduce Job Run: The client, which submits the MapReduce job, does the following: • Asks the jobtracker for a new job ID by calling getNewJobId() on JobTracker (step 2). • Checks the output specification of the job. For example, if the output directory has not been specified or it already exists, the job is not submitted and an error is thrown to the MapReduce program. • Computes the input splits for the job. If the splits cannot be computed (because the input paths don’t exist, for example), the job is not submitted and an error is thrown to the MapReduce program. • Copies the resources needed to run the job, including the job JAR file, the configuration file, and the computed input splits, to the jobtracker’s filesystem in a directory named after the job ID. The job JAR is copied with a high replication factor (controlled by the mapred.submit.replication property, which defaults to 10) so that there are lots of copies across the cluster for the tasktrackers to access when they run tasks for the job (step 3). • Tells the jobtracker that the job is ready for execution by calling submitJob() on JobTracker (step 4).
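The two pre-submission checks can be sketched as follows, in the order the slide gives them (output specification first, then input paths). The local filesystem stands in for HDFS, and `check_job`, `try_submit` and `JobSubmissionError` are hypothetical names, not Hadoop classes.

```python
import os
import tempfile


class JobSubmissionError(Exception):
    """Raised instead of submitting the job."""


def check_job(input_paths, output_dir):
    """Pre-submission checks described on the slide, using the local
    filesystem as a stand-in for HDFS."""
    if not output_dir:
        raise JobSubmissionError("output directory not specified")
    if os.path.exists(output_dir):
        raise JobSubmissionError(f"output directory {output_dir} already exists")
    missing = [p for p in input_paths if not os.path.exists(p)]
    if missing:
        raise JobSubmissionError(f"input path does not exist: {missing[0]}")


def try_submit(input_paths, output_dir):
    try:
        check_job(input_paths, output_dir)
        return "submitted"
    except JobSubmissionError as err:
        return f"error: {err}"


with tempfile.TemporaryDirectory() as tmp:
    existing_input = os.path.join(tmp, "input")
    os.mkdir(existing_input)
    result_ok = try_submit([existing_input], os.path.join(tmp, "output"))
    result_exists = try_submit([existing_input], existing_input)
    result_missing = try_submit([os.path.join(tmp, "nope")], os.path.join(tmp, "output"))
    result_unset = try_submit([existing_input], "")

print(result_ok)  # submitted
print(result_exists)
print(result_missing)
```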

- 37. Fault Tolerance in MapReduce: 1. If a task crashes: – Retry on another node » OK for a map because it has no dependencies » OK for a reduce because map outputs are on disk – If the same task fails repeatedly, fail the job or ignore that input block (user-controlled). Note: for these fault-tolerance features to work, your map and reduce tasks must be side-effect-free.
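A minimal sketch of "retry on another node, then fail the job", assuming a fixed attempt limit (`max_attempts=4` here is an assumed value) and a hypothetical `flaky_map` task. Retrying blindly like this is only safe because the task is side-effect-free.

```python
def run_with_retries(task, nodes, max_attempts=4):
    """Run task(node), moving to another node after each failure.

    Returns (result, attempts). Raises RuntimeError (i.e. fail the job) if the
    task fails max_attempts times.
    """
    failures = []
    for attempt in range(max_attempts):
        node = nodes[attempt % len(nodes)]
        try:
            return task(node), attempt + 1
        except Exception as exc:  # a crashed task attempt
            failures.append((node, exc))
    raise RuntimeError(f"task failed {max_attempts} times: {failures}")


def flaky_map(node):
    """Hypothetical map task that crashes on a bad node."""
    if node == "bad-node":
        raise IOError("disk error")
    return f"map output from {node}"


recovered = run_with_retries(flaky_map, ["bad-node", "good-node"])
print(recovered)  # ('map output from good-node', 2)

try:
    run_with_retries(flaky_map, ["bad-node"])
    job_failed = False
except RuntimeError:
    job_failed = True
print(job_failed)  # True
```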

- 38. Fault Tolerance in MapReduce: 2. If a node crashes: – Re-launch its current tasks on other nodes – Re-run any maps the node previously ran » Necessary because their output files were lost along with the crashed node
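The rescheduling rule can be sketched as a small function: everything running on the crashed node is relaunched, and completed map tasks from that node are re-run because their output lived on its local disk. Completed reduce tasks are left alone since their output is already in HDFS. `tasks_to_rerun` and the task IDs are hypothetical.

```python
def tasks_to_rerun(crashed_node, running, completed_maps):
    """Tasks to reschedule when `crashed_node` dies.

    running: dict task_id -> node currently running it
    completed_maps: dict map_task_id -> node whose local disk holds its output
    Completed reduce tasks are not listed: their output is already in HDFS.
    """
    relaunch = sorted(t for t, node in running.items() if node == crashed_node)
    rerun_maps = sorted(t for t, node in completed_maps.items() if node == crashed_node)
    return relaunch + rerun_maps


running = {"m7": "n2", "r1": "n2", "m8": "n3"}
completed_maps = {"m1": "n2", "m2": "n1", "m3": "n2"}
print(tasks_to_rerun("n2", running, completed_maps))  # ['m7', 'r1', 'm1', 'm3']
```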

- 39. Fault Tolerance in MapReduce: 3. If a task is going slowly (straggler): – Launch a second copy of the task on another node (“speculative execution”) – Take the output of whichever copy finishes first, and kill the other. This is surprisingly important in large clusters: – Stragglers occur frequently due to failing hardware, software bugs, misconfiguration, etc. – A single straggler may noticeably slow down a job
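Speculative execution in miniature: start an original and a backup attempt at the same time and keep whichever result arrives first. This is a thread-based sketch with a hypothetical `task_attempt` whose sleep simulates a slow node. Python threads cannot be killed, so the losing attempt's result is simply ignored rather than the task being terminated.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def task_attempt(seconds):
    """Hypothetical task attempt; `seconds` simulates how slow its node is."""
    time.sleep(seconds)
    return f"finished after {seconds}s"


def run_speculatively(attempt_delays):
    """Start one attempt per entry at once; return (name, result) of the first to finish."""
    with ThreadPoolExecutor(max_workers=len(attempt_delays)) as pool:
        futures = {pool.submit(task_attempt, d): name for name, d in attempt_delays.items()}
        done, not_done = wait(futures, return_when=FIRST_COMPLETED)
        for f in not_done:
            f.cancel()  # no effect on an attempt that is already running; its result is ignored
        winner = next(iter(done))
        return futures[winner], winner.result()


result = run_speculatively({"original": 0.5, "speculative": 0.05})
print(result)  # ('speculative', 'finished after 0.05s')
```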

- 40. Walkthrough of the MapReduce Web UI: Hadoop comes with a web UI for viewing information about your jobs. It is useful for following a job’s progress while it is running, as well as finding job statistics and logs after the job has completed. You can find the UI at http://jobtracker-host:50030/. (Figure 5-2. Screenshot of the job page)

- 41. Walkthrough of the MapReduce Web UI: the task details page

- 42. Walkthrough of the MapReduce Web UI: Retrieving the Results. Once the job is finished, there are various ways to retrieve the results. Each reducer produces one output file, so this job, which ran with 30 reducers, left 30 part files named part-r-00000 to part-r-00029 in the max-temp directory.
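Because the part files are numbered with zero padding, sorting them by name puts them in reducer order, which makes them easy to concatenate (the `hadoop fs -getmerge` command does something similar for an HDFS directory). A local-filesystem sketch with hypothetical helper names and sample contents:

```python
import os
import tempfile


def part_file_names(num_reducers):
    """One output file per reducer: part-r-00000, part-r-00001, ..."""
    return [f"part-r-{i:05d}" for i in range(num_reducers)]


def merge_parts(directory):
    """Concatenate part files in name order (zero padding makes this reducer order)."""
    parts = sorted(n for n in os.listdir(directory) if n.startswith("part-"))
    chunks = []
    for name in parts:
        with open(os.path.join(directory, name)) as f:
            chunks.append(f.read())
    return "".join(chunks)


names = part_file_names(30)
print(names[0], names[-1])  # part-r-00000 part-r-00029

with tempfile.TemporaryDirectory() as out_dir:
    for i, name in enumerate(part_file_names(3)):
        with open(os.path.join(out_dir, name), "w") as f:
            f.write(f"line from reducer {i}\n")
    merged = merge_parts(out_dir)
print(merged, end="")
```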

- 43. MapReduce

- 44. HBase Architecture • HBase is a distributed column-oriented database built on top of HDFS • HBase is the Hadoop application to use when you require real-time, random read/write access to very large datasets • What if you need the database features that Hive doesn’t provide, like row-level updates, rapid query response times, and transactions?

- 45. HBase Cluster Members • HBase depends on ZooKeeper, and by default it manages a ZooKeeper instance as the authority on cluster state • ZooKeeper hosts vitals such as the location of the root catalog table and the address of the current cluster Master • Regionserver slave nodes are listed in the HBase conf/regionservers file, as you would list datanodes and tasktrackers in the Hadoop conf/slaves file • There are multiple implementations of the filesystem interface (one for the local filesystem, one for the KFS filesystem, Amazon’s S3, and HDFS, the Hadoop Distributed Filesystem), and HBase can persist to any of these implementations • By default, unless told otherwise, HBase writes to the local filesystem

- 46. HBase: To administer your HBase instance, launch the HBase shell by typing hbase shell. The following creates a table named test with a single column family named data:

```
% hbase shell
hbase(main):001:0>
hbase(main):007:0> create 'test', 'data'
0 row(s) in 1.3066 seconds
```

To prove the new table was created successfully, run the list command. This will output all tables in user space:

```
hbase(main):019:0> list
test
1 row(s) in 0.1485 seconds
```

To insert data into three different rows and columns in the data column family, and then list the table content, do the following:

```
hbase(main):021:0> put 'test', 'row1', 'data:1', 'value1'
0 row(s) in 0.0454 seconds
hbase(main):022:0> put 'test', 'row2', 'data:2', 'value2'
0 row(s) in 0.0035 seconds
hbase(main):023:0> put 'test', 'row3', 'data:3', 'value3'
0 row(s) in 0.0090 seconds
hbase(main):024:0> scan 'test'
ROW     COLUMN+CELL
row1    column=data:1, timestamp=1240148026198, value=value1
row2    column=data:2, timestamp=1240148040035, value=value2
row3    column=data:3, timestamp=1240148047497, value=value3
3 row(s) in 0.0825 seconds
```

- 47. HBase

- 48. HBase Stargate: Stargate is the name of the REST server bundled with HBase. Query table list examples:

```
% curl http://localhost:8000/
HTTP/1.1 200 OK
Content-Length: 13
Cache-Control: no-cache
Content-Type: text/plain

test

% curl -H "Accept: text/xml" http://localhost:8000/
HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Type: text/xml
Content-Length: 121

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<TableList><Table name="content"/><Table name="urls"/></TableList>

% curl -H "Accept: application/json" http://localhost:8000/
HTTP/1.1 200 OK
Cache-Control: no-cache
Content-Type: application/json
Transfer-Encoding: chunked

{"Table":[{"name":"test"},{"name":"urls"}]}
```

Set the Accept header to text/plain for plain-text output, text/xml for an XML reply, application/json for a JSON reply, or application/x-protobuf for protobufs.
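The same table-list query can be issued from Python's standard library by setting the Accept header, as with curl. A sketch assuming the server address from the examples above (`localhost:8000`) and the JSON reply format shown; `table_list_request` and `parse_table_list` are hypothetical helpers. The network call itself is left commented out so the sketch runs without a server.

```python
import json
import urllib.request

STARGATE_URL = "http://localhost:8000/"  # host and port from the examples above


def table_list_request(accept="application/json"):
    """Build a GET request for the table list with the given Accept header."""
    return urllib.request.Request(STARGATE_URL, headers={"Accept": accept})


def parse_table_list(body):
    """Extract table names from the JSON reply format shown above."""
    return [table["name"] for table in json.loads(body)["Table"]]


req = table_list_request()
print(req.get_header("Accept"))  # application/json
print(parse_table_list('{"Table":[{"name":"test"},{"name":"urls"}]}'))  # ['test', 'urls']

# Against a running server (not executed here):
# with urllib.request.urlopen(table_list_request()) as resp:
#     print(parse_table_list(resp.read().decode("utf-8")))
```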

- 49. HBase Stargate

- 50. Motivation: Many parallel algorithms can be expressed by a series of MapReduce jobs, but MapReduce is fairly low-level: you must think about keys, values, partitioning, etc. Can we capture common “job building blocks”?

- 51. Apache Oozie: An open-source workflow/coordination service to manage data processing jobs for Hadoop, developed and then open-sourced by Yahoo!. Example workflow application layout:

```
max-temp-workflow/
├── lib/
│   └── hadoop-examples.jar
└── workflow.xml
```

- 52. Apache Oozie: Example 5-14. Oozie workflow definition to run the maximum temperature MapReduce job

```xml
<workflow-app xmlns="uri:oozie:workflow:0.1" name="max-temp-workflow">
  <start to="max-temp-mr"/>
  <action name="max-temp-mr">
    <map-reduce>
      <job-tracker>${jobTracker}</job-tracker>
      <name-node>${nameNode}</name-node>
      <prepare>
        <delete path="${nameNode}/user/${wf:user()}/output"/>
      </prepare>
      <configuration>
        <property>
          <name>mapred.mapper.class</name>
          <value>OldMaxTemperature$OldMaxTemperatureMapper</value>
        </property>
        <property>
          <name>mapred.combiner.class</name>
          <value>OldMaxTemperature$OldMaxTemperatureReducer</value>
        </property>
        <property>
          <name>mapred.reducer.class</name>
          <value>OldMaxTemperature$OldMaxTemperatureReducer</value>
        </property>
        <property>
          <name>mapred.output.key.class</name>
          <value>org.apache.hadoop.io.Text</value>
        </property>
        <property>
```

- 53. Pig: Started at Yahoo! Research; runs about 30% of Yahoo!’s jobs. Features: • Expresses sequences of MapReduce jobs • Data model: nested “bags” of items • Provides relational (SQL) operators (JOIN, GROUP BY, etc.) • Easy to plug in Java functions • Pig Pen development environment for Eclipse

- 54. Pig • Higher-level data flow language • Converts scripts into MapReduce jobs and runs them • Provides good functionality (joins, partitioners) • Very compact! • Compared with Java, Pig is: • Faster to develop • Slower to run

- 55. An Example Problem: Suppose you have user data in one file, page view data in another, and you need to find the top 5 most visited pages by users aged 18 to 25. The data flow: Load Users → Filter by age; Load Pages; then Join on name → Group on url → Count clicks → Order by clicks → Take top 5. Example from http://wiki.apache.org/pig-data/attachments/PigTalksPapers/attachments/ApacheConEurope09.ppt
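To make the data flow concrete, here is the same pipeline over small in-memory lists in plain Python (not Pig, and not distributed). The record layouts and sample data are hypothetical, and user names are assumed to be unique so that the join on name reduces to a set lookup.

```python
from collections import Counter


def top_pages(users, page_views, min_age=18, max_age=25, k=5):
    """users: (name, age) pairs; page_views: (name, url) pairs."""
    # Load Users, then filter by age
    eligible = {name for name, age in users if min_age <= age <= max_age}
    # Join on name, group on url, count clicks
    clicks = Counter(url for name, url in page_views if name in eligible)
    # Order by clicks and take the top k
    return clicks.most_common(k)


users = [("amy", 20), ("bob", 30), ("cat", 18), ("dan", 25), ("eve", 17)]
page_views = [("amy", "a.com"), ("amy", "b.com"), ("bob", "a.com"),
              ("cat", "a.com"), ("cat", "c.com"), ("dan", "b.com"),
              ("eve", "a.com"), ("amy", "a.com")]
print(top_pages(users, page_views, k=2))  # [('a.com', 3), ('b.com', 2)]
```

Views by bob (30) and eve (17) are dropped by the age filter, which is why a.com counts 3 clicks rather than 5.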

- 56. In MapReduce

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.mapred.FileInputFormat;
import org.apache.hadoop.mapred.FileOutputFormat;
import org.apache.hadoop.mapred.JobConf;
import org.apache.hadoop.mapred.KeyValueTextInputFormat;
import org.apache.hadoop.mapred.Mapper;
import org.apache.hadoop.mapred.MapReduceBase;
import org.apache.hadoop.mapred.OutputCollector;
import org.apache.hadoop.mapred.RecordReader;
import org.apache.hadoop.mapred.Reducer;
import org.apache.hadoop.mapred.Reporter;
import org.apache.hadoop.mapred.SequenceFileInputFormat;
import org.apache.hadoop.mapred.SequenceFileOutputFormat;
import org.apache.hadoop.mapred.TextInputFormat;
import org.apache.hadoop.mapred.jobcontrol.Job;
import org.apache.hadoop.mapred.jobcontrol.JobControl;
import org.apache.hadoop.mapred.lib.IdentityMapper;

public class MRExample {
    public static class LoadPages extends MapReduceBase
        implements Mapper<LongWritable, Text, Text, Text> {

        public void map(LongWritable k, Text val,
                OutputCollector<Text, Text> oc,
                Reporter reporter) throws IOException {
            // Pull the key out
            String line = val.toString();
            int firstComma = line.indexOf(',');
            String key = line.substring(0, firstComma);
            String value = line.substring(firstComma + 1);
            Text outKey = new Text(key);
            // Prepend an index to the value so we know which file
            // it came from.
            Text outVal = new Text("1" + value);
            oc.collect(outKey, outVal);
        }
    }
    public static class LoadAndFilterUsers extends MapReduceBase
        implements Mapper<LongWritable, Text, Text, Text> {

        public void map(LongWritable k, Text val,
                OutputCollector<Text, Text> oc,
                Reporter reporter) throws IOException {
            // Pull the key out
            String line = val.toString();
            int firstComma = line.indexOf(',');
            String value = line.substring(firstComma + 1);
            int age = Integer.parseInt(value);
            if (age < 18 || age > 25) return;
            String key = line.substring(0, firstComma);
            Text outKey = new Text(key);
            // Prepend an index to the value so we know which file
            // it came from.
            Text outVal = new Text("2" + value);
            oc.collect(outKey, outVal);
        }
    }
    public static class Join extends MapReduceBase
        implements Reducer<Text, Text, Text, Text> {

        public void reduce(Text key,
                Iterator<Text> iter,
                OutputCollector<Text, Text> oc,
                Reporter reporter) throws IOException {
            // For each value, figure out which file it's from and store it
            // accordingly.
            List<String> first = new ArrayList<String>();
            List<String> second = new ArrayList<String>();

            while (iter.hasNext()) {
                Text t = iter.next();
                String value = t.toString();
                if (value.charAt(0) == '1') first.add(value.substring(1));
                else second.add(value.substring(1));
                reporter.setStatus("OK");
            }

            // Do the cross product and collect the values
            for (String s1 : first) {
                for (String s2 : second) {
                    String outval = key + "," + s1 + "," + s2;
                    oc.collect(null, new Text(outval));
                    reporter.setStatus("OK");
                }
            }
        }
    }
    public static class LoadJoined extends MapReduceBase
        implements Mapper<Text, Text, Text, LongWritable> {

        public void map(Text k, Text val,
                OutputCollector<Text, LongWritable> oc,
                Reporter reporter) throws IOException {
            // Find the url
            String line = val.toString();
            int firstComma = line.indexOf(',');
            int secondComma = line.indexOf(',', firstComma);
            String key = line.substring(firstComma, secondComma);
            // drop the rest of the record, I don't need it anymore,
            // just pass a 1 for the combiner/reducer to sum instead.
            Text outKey = new Text(key);
            oc.collect(outKey, new LongWritable(1L));
        }
    }
    public static class ReduceUrls extends MapReduceBase
        implements Reducer<Text, LongWritable, WritableComparable, Writable> {

        public void reduce(Text key,
                Iterator<LongWritable> iter,
                OutputCollector<WritableComparable, Writable> oc,
                Reporter reporter) throws IOException {
            // Add up all the values we see
            long sum = 0;
            while (iter.hasNext()) {
                sum += iter.next().get();
                reporter.setStatus("OK");
            }
            oc.collect(key, new LongWritable(sum));
        }
    }
    public static class LoadClicks extends MapReduceBase
        implements Mapper<WritableComparable, Writable, LongWritable, Text> {

        public void map(WritableComparable key, Writable val,
                OutputCollector<LongWritable, Text> oc,
                Reporter reporter) throws IOException {
            oc.collect((LongWritable) val, (Text) key);
        }
    }
    public static class LimitClicks extends MapReduceBase
        implements Reducer<LongWritable, Text, LongWritable, Text> {

        int count = 0;
        public void reduce(LongWritable key,
                Iterator<Text> iter,
                OutputCollector<LongWritable, Text> oc,
                Reporter reporter) throws IOException {
            // Only output the first 100 records
            while (count < 100 && iter.hasNext()) {
                oc.collect(key, iter.next());
                count++;
            }
        }
    }
    public static void main(String[] args) throws IOException {
        JobConf lp = new JobConf(MRExample.class);
        lp.setJobName("Load Pages");
        lp.setInputFormat(TextInputFormat.class);
        lp.setOutputKeyClass(Text.class);
        lp.setOutputValueClass(Text.class);
        lp.setMapperClass(LoadPages.class);
        FileInputFormat.addInputPath(lp, new Path("/user/gates/pages"));
        FileOutputFormat.setOutputPath(lp, new Path("/user/gates/tmp/indexed_pages"));
        lp.setNumReduceTasks(0);
        Job loadPages = new Job(lp);

        JobConf lfu = new JobConf(MRExample.class);
        lfu.setJobName("Load and Filter Users");
        lfu.setInputFormat(TextInputFormat.class);
        lfu.setOutputKeyClass(Text.class);
        lfu.setOutputValueClass(Text.class);
        lfu.setMapperClass(LoadAndFilterUsers.class);
        FileInputFormat.addInputPath(lfu, new Path("/user/gates/users"));
        FileOutputFormat.setOutputPath(lfu, new Path("/user/gates/tmp/filtered_users"));
        lfu.setNumReduceTasks(0);
        Job loadUsers = new Job(lfu);

        JobConf join = new JobConf(MRExample.class);
        join.setJobName("Join Users and Pages");
        join.setInputFormat(KeyValueTextInputFormat.class);
        join.setOutputKeyClass(Text.class);
        join.setOutputValueClass(Text.class);
        join.setMapperClass(IdentityMapper.class);
        join.setReducerClass(Join.class);
        FileInputFormat.addInputPath(join, new Path("/user/gates/tmp/indexed_pages"));
        FileInputFormat.addInputPath(join, new Path("/user/gates/tmp/filtered_users"));
        FileOutputFormat.setOutputPath(join, new Path("/user/gates/tmp/joined"));
        join.setNumReduceTasks(50);
        Job joinJob = new Job(join);
        joinJob.addDependingJob(loadPages);
        joinJob.addDependingJob(loadUsers);

        JobConf group = new JobConf(MRExample.class);
        group.setJobName("Group URLs");
        group.setInputFormat(KeyValueTextInputFormat.class);
        group.setOutputKeyClass(Text.class);
        group.setOutputValueClass(LongWritable.class);
        group.setOutputFormat(SequenceFileOutputFormat.class);
        group.setMapperClass(LoadJoined.class);
        group.setCombinerClass(ReduceUrls.class);
        group.setReducerClass(ReduceUrls.class);
        FileInputFormat.addInputPath(group, new Path("/user/gates/tmp/joined"));
        FileOutputFormat.setOutputPath(group, new Path("/user/gates/tmp/grouped"));
        group.setNumReduceTasks(50);
        Job groupJob = new Job(group);
        groupJob.addDependingJob(joinJob);

        JobConf top100 = new JobConf(MRExample.class);
        top100.setJobName("Top 100 sites");
        top100.setInputFormat(SequenceFileInputFormat.class);
        top100.setOutputKeyClass(LongWritable.class);
        top100.setOutputValueClass(Text.class);
        top100.setOutputFormat(SequenceFileOutputFormat.class);
        top100.setMapperClass(LoadClicks.class);
        top100.setCombinerClass(LimitClicks.class);
        top100.setReducerClass(LimitClicks.class);
        FileInputFormat.
```
a d d I n p u t P a t h ( t o p 1 0 P a t h ( " / u s e r / g a t e s / t m p / g r o u p e d " ) ) ; F i l e O u t p u t F o r m a t . s e t O u t p u t P a t h ( t o p 1 0 0 P a t h ( " / u s e r / g a t e s / t o p 1 0 0 s i t e s f o r u s e r s 1 8 t o 2 t o p 1 0 0 . s e t N u m R e d u c e T a s k s ( 1 ) ; J o b l i m i t = n e w J o b ( t o p 1 0 0 ) ; l i m i t . a d d D e p e n d i n g J o b ( g r o u p J o b ) ; J o b C o n t r o l j c = n e w J o b C o n t r o l ( " F i1 0 0 s i t e s f o r 1 8 t o 2 5 " ) ; j c . a d d J o b ( l o a d P a g e s ) ; j c . a d d J o b ( l o a d U s e r s ) ; j c . a d d J o b ( j o i n J o b ) ; j c . a d d J o b ( g r o u p J o b ) ; j c . a d d J o b ( l i m i t ) ; j c . r u n ( ) ; } } Example from http://wiki.apache.org/pig-data/attachments/PigTalksPapers/attachments/ApacheConEurope09.ppt
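The "Group URLs" stage in the Java code wires LoadJoined as the mapper and ReduceUrls as both combiner and reducer: emit (url, 1), pre-sum on the map side, then sum again per key. A minimal in-memory Python sketch of that flow, assuming each joined record has already been reduced to a (user, url) pair; the function names, the two input splits and the sample URLs are hypothetical, and nothing here calls Hadoop:

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def map_clicks(records: Iterable[Tuple[str, str]]) -> List[Tuple[str, int]]:
    """Mapper: drop everything but the URL and emit (url, 1), like LoadJoined."""
    return [(url, 1) for _user, url in records]

def combine(pairs: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Combiner: pre-sum counts inside one map task, like ReduceUrls as combiner."""
    partial: Dict[str, int] = defaultdict(int)
    for url, n in pairs:
        partial[url] += n
    return list(partial.items())

def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all values by key, as the framework does before reducing."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for url, n in pairs:
        grouped[url].append(n)
    return grouped

def reduce_urls(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Reducer: add up all the values seen for each URL."""
    return {url: sum(counts) for url, counts in grouped.items()}

# Two hypothetical map tasks, each processing its own split of joined records.
split_a = [("alice", "cnn.com"), ("bob", "cnn.com"), ("alice", "bbc.co.uk")]
split_b = [("carol", "cnn.com"), ("dave", "bbc.co.uk")]

map_outputs = [combine(map_clicks(split)) for split in (split_a, split_b)]
clicks = reduce_urls(shuffle(pair for out in map_outputs for pair in out))
print(sorted(clicks.items()))  # [('bbc.co.uk', 2), ('cnn.com', 3)]
```

Reusing the reducer as the combiner works here because addition is associative and commutative, so summing partial sums gives the same total as summing the raw 1s.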

- 57. In Pig Latin
Users = load 'users' as (name, age);
Filtered = filter Users by age >= 18 and age <= 25;
Pages = load 'pages' as (user, url);
Joined = join Filtered by name, Pages by user;
Grouped = group Joined by url;
Summed = foreach Grouped generate group, COUNT(Joined) as clicks;
Sorted = order Summed by clicks desc;
Top5 = limit Sorted 5;
store Top5 into 'top5sites';
Example from http://wiki.apache.org/pig-data/attachments/PigTalksPapers/attachments/ApacheConEurope09.ppt
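To make the semantics of each statement concrete, the function below replays the Pig Latin script on small in-memory lists in plain Python. It illustrates what the script computes, not how Pig compiles it into MapReduce jobs; the `top_sites` name and the sample users and pages are hypothetical, and ties in click counts are broken by URL only so that the output is deterministic:

```python
from collections import Counter
from typing import List, Tuple

def top_sites(users: List[Tuple[str, int]],
              pages: List[Tuple[str, str]],
              n: int = 5) -> List[Tuple[str, int]]:
    # Filtered = filter Users by age >= 18 and age <= 25;
    filtered = [(name, age) for name, age in users if 18 <= age <= 25]
    # Joined = join Filtered by name, Pages by user;  (nested-loop equi-join)
    joined = [(name, age, user, url)
              for name, age in filtered
              for user, url in pages
              if name == user]
    # Grouped = group Joined by url;
    # Summed = foreach Grouped generate group, COUNT(Joined) as clicks;
    summed = Counter(url for _name, _age, _user, url in joined)
    # Sorted = order Summed by clicks desc;  Top5 = limit Sorted 5;
    return sorted(summed.items(), key=lambda kv: (-kv[1], kv[0]))[:n]

users = [("alice", 22), ("bob", 17), ("carol", 25), ("dave", 40)]
pages = [("alice", "cnn.com"), ("alice", "bbc.co.uk"), ("bob", "cnn.com"),
         ("carol", "cnn.com"), ("carol", "wired.com"), ("dave", "espn.com")]
print(top_sites(users, pages))  # [('cnn.com', 2), ('bbc.co.uk', 1), ('wired.com', 1)]
```

Each comment quotes the Pig statement the following line stands in for, which is the same one-to-one correspondence the next slides point out.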


- 59. Ease of Translation
Notice how naturally the components of the job translate into Pig Latin:
• Load Users → Users = load …
• Load Pages → Pages = load …
• Filter by age → Filtered = filter …
• Join on name → Joined = join …
• Group on url → Grouped = group …
• Count clicks → Summed = … COUNT() …
• Order by clicks → Sorted = order …
• Take top 5 → Top5 = limit …
On the slide, these steps are grouped into three MapReduce jobs: Job 1, Job 2 and Job 3.
Example from http://wiki.apache.org/pig-data/attachments/PigTalksPapers/attachments/ApacheConEurope09.ppt

- 61. Hive
• Developed at Facebook and used for the majority of Facebook's jobs
• A "relational database" built on Hadoop that maintains a list of table schemas
• SQL-like query language (HQL) that can call Hadoop Streaming scripts
• Supports table partitioning, clustering, complex data types and some optimizations
• Translates SQL into MapReduce jobs, so you can write
select count(*) from users where user_id=56
and Hive will translate it into MapReduce jobs.
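Conceptually, a query such as `select count(*) from users where user_id=56` needs a single MapReduce job: each mapper applies the WHERE filter to its split and emits a partial count, and one reducer adds the partials into the final `count(*)`. A hedged Python sketch of that plan, assuming rows are plain dicts and splits are plain lists standing in for HDFS blocks (both hypothetical); the plan Hive's compiler actually generates may differ in detail:

```python
from typing import Dict, Iterable, List

Row = Dict[str, int]

def map_task(split: Iterable[Row], user_id: int) -> int:
    """Map side: apply the WHERE clause and emit one partial count per split."""
    return sum(1 for row in split if row["user_id"] == user_id)

def reduce_task(partials: List[int]) -> int:
    """Reduce side: a single reducer sums the partial counts into count(*)."""
    return sum(partials)

splits = [
    [{"user_id": 56}, {"user_id": 7}],
    [{"user_id": 56}, {"user_id": 56}, {"user_id": 12}],
]
partials = [map_task(s, user_id=56) for s in splits]
print(partials, reduce_task(partials))  # [1, 2] 3
```

Emitting one partial count per mapper, rather than a 1 per matching row, keeps the data shuffled to the reducer tiny.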

- 62. Hive Architecture
Apache Hive is built on top of Hadoop to provide data warehouse services.

- 63. Sample Hive Queries
• Find the top 5 pages visited by users aged 18-25:
SELECT p.url, COUNT(1) AS clicks FROM users u JOIN page_views p ON (u.name = p.user) WHERE u.age >= 18 AND u.age <= 25 GROUP BY p.url ORDER BY clicks DESC LIMIT 5;
• Filter page views through a Python script:
FROM page_views p SELECT TRANSFORM(p.user, p.date) USING 'map_script.py' AS dt, uid CLUSTER BY dt;
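Hive's TRANSFORM clause streams each input row to the script's standard input as one line of tab-separated columns and reads tab-separated lines back from its standard output as result rows. The slide does not show `map_script.py`; a minimal sketch, assuming the script emits the `(user, date)` input columns in the `dt, uid` order named by the query, with a hypothetical filter that drops malformed rows:

```python
import sys
from typing import Iterable, Iterator, TextIO

def transform(lines: Iterable[str]) -> Iterator[str]:
    """Turn 'user<TAB>date' rows into 'date<TAB>user' rows (the dt, uid columns)."""
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) != 2:
            continue  # hypothetical filter: skip rows that are not exactly (user, date)
        user, date = fields
        yield f"{date}\t{user}"

def main(stdin: TextIO = sys.stdin, stdout: TextIO = sys.stdout) -> None:
    # Hive writes one row per line to stdin and parses each output line as a row.
    for row in transform(stdin):
        stdout.write(row + "\n")
```

Saved as `map_script.py`, the file would also need an entry point such as `if __name__ == "__main__": main()`, and would be shipped to the cluster with Hive's `ADD FILE` command before the query runs.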

- 64. Pig

- 65. Hive

- 66. Sqoop • Often, valuable data in an organization is stored in relational database systems (RDBMS) • Sqoop is an open-source tool that allows users to extract data from a relational database into Hadoop for further processing. • It’s even possible to use Sqoop to move data from a relational database into HBase.

- 67. Sqoop: A Sample Import
• After you install Sqoop, you can use it to import data into Hadoop.
• Assuming MySQL is installed, let's log in and create a database.
Example 15-1. Creating a new MySQL database schema
% mysql -u root -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 349
Server version: 5.1.37-1ubuntu5.4 (Ubuntu)
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql> CREATE DATABASE hadoopguide;
Query OK, 1 row affected (0.02 sec)
mysql> GRANT ALL PRIVILEGES ON hadoopguide.* TO '%'@'localhost';
Query OK, 0 rows affected (0.00 sec)
mysql> GRANT ALL PRIVILEGES ON hadoopguide.* TO ''@'localhost';
Query OK, 0 rows affected (0.00 sec)
mysql> quit;
Bye

- 68. Sqoop
• Now let's log back into the database (not as root, but as yourself this time) and create a table to import into HDFS.
Example 15-2. Populating the database
% mysql hadoopguide
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 352
Server version: 5.1.37-1ubuntu5.4 (Ubuntu)
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql> CREATE TABLE widgets(id INT NOT NULL PRIMARY KEY AUTO_INCREMENT,
-> widget_name VARCHAR(64) NOT NULL,
-> price DECIMAL(10,2),
-> design_date DATE,
-> version INT,
-> design_comment VARCHAR(100));
Query OK, 0 rows affected (0.00 sec)
mysql> INSERT INTO widgets VALUES (NULL, 'sprocket', 0.25, '2010-02-10',
-> 1, 'Connects two gizmos');
Query OK, 1 row affected (0.00 sec)
mysql> INSERT INTO widgets VALUES (NULL, 'gizmo', 4.00, '2009-11-30', 4,
-> NULL);
Query OK, 1 row affected (0.00 sec)
mysql> INSERT INTO widgets VALUES (NULL, 'gadget', 99.99, '1983-08-13',
-> 13, 'Our flagship product');
Query OK, 1 row affected (0.00 sec)
mysql> quit;

- 69. Sqoop
• Now let's use Sqoop to import this table into HDFS (-m 1 runs the import with a single map task):
% sqoop import --connect jdbc:mysql://localhost/hadoopguide --table widgets -m 1
10/06/23 14:44:18 INFO tool.CodeGenTool: Beginning code generation
...
10/06/23 14:44:20 INFO mapred.JobClient: Running job: job_201006231439_0002
10/06/23 14:44:21 INFO mapred.JobClient: map 0% reduce 0%
10/06/23 14:44:32 INFO mapred.JobClient: map 100% reduce 0%
10/06/23 14:44:34 INFO mapred.JobClient: Job complete: job_201006231439_0002
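Sqoop writes the imported rows to HDFS as text files, one per map task (so a single file here, since `-m 1` runs one mapper). A small Python sketch that parses such lines back into typed records, assuming Sqoop's default text layout of comma-separated fields, one row per line, and the literal string `null` for SQL NULL; the `Widget` class and `parse_widget` function are hypothetical, and the sample lines are what the three `widgets` rows inserted above would look like under those assumptions, not captured output:

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from typing import Optional

@dataclass
class Widget:
    id: int
    widget_name: str
    price: Optional[Decimal]
    design_date: Optional[date]
    version: Optional[int]
    design_comment: Optional[str]

def parse_widget(line: str) -> Widget:
    """Parse one line of an assumed comma-delimited Sqoop import of `widgets`."""
    # maxsplit=5 lets the last column (design_comment) contain commas; the
    # earlier columns are numbers, dates or short names assumed to be comma-free.
    fields = [None if f == "null" else f for f in line.rstrip("\n").split(",", 5)]
    wid, name, price, ddate, version, comment = fields
    return Widget(
        id=int(wid),
        widget_name=name,
        price=Decimal(price) if price is not None else None,
        design_date=date.fromisoformat(ddate) if ddate is not None else None,
        version=int(version) if version is not None else None,
        design_comment=comment,
    )

lines = [
    "1,sprocket,0.25,2010-02-10,1,Connects two gizmos",
    "2,gizmo,4.00,2009-11-30,4,null",
    "3,gadget,99.99,1983-08-13,13,Our flagship product",
]
widgets = [parse_widget(l) for l in lines]
print(widgets[1].design_comment, widgets[2].price)  # None 99.99
```

Because the default layout does not quote fields, a comma inside any column other than the last would break this parse; Sqoop's output-formatting options exist to choose delimiters that cannot occur in the data.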

- 70. Sqoop

- 73. RHadoop
• RHadoop is an open-source collection of three R packages (rmr2, rhdfs and rhbase), created by Revolution Analytics, that allow users to manage and analyze data with Hadoop from an R environment.
• It allows data scientists familiar with R to combine the enterprise-grade capabilities of the MapR Hadoop distribution directly with the analytic capabilities of R.
• The packages have been implemented and tested on Cloudera's Distribution of Hadoop (CDH3 and CDH4) with R 2.15.0.
• The packages have also been tested with Revolution R 4.3, 5.0 and 6.0. For rmr, see the Compatibility page.

- 74. RHadoop and MapR: Accessing Enterprise-Grade Hadoop from R
• From R, load the rhdfs library and confirm that you can access the MapR cluster file system by listing the root directory:
library(rhdfs)
hdfs.init()
hdfs.ls('/')
q()
• From R, load the rmr2 library and confirm that you can access the Hadoop cluster file system by running a simple MapReduce job:
library("rmr2")
small.ints <- to.dfs(1:1000)
out <- mapreduce(input = small.ints, map = function(k, v) keyval(v, v^2))
df <- as.data.frame(from.dfs(out))
• Start R, load the rhbase library, create an HBase table, display its description, and drop it:
R --save
library(rhbase)
hb.init()
hb.new.table('testtable', 'colfam1')
hb.describe.table('testtable')
hb.delete.table('testtable')
q()
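The rmr2 test job is map-only: for each value v in 1:1000 the map function emits the key/value pair (v, v^2), and `from.dfs` brings those pairs back into R. The same computation in plain Python, as a local check of what the cluster job should return; `square_map` is a hypothetical name and this sketch uses neither rmr2 nor Hadoop:

```python
from typing import List, Tuple

def square_map(values: range) -> List[Tuple[int, int]]:
    # Equivalent of map = function(k, v) keyval(v, v^2): the key is v, the value v squared.
    return [(v, v ** 2) for v in values]

pairs = square_map(range(1, 1001))  # R's 1:1000 includes both endpoints
print(pairs[:3], pairs[-1])  # [(1, 1), (2, 4), (3, 9)] (1000, 1000000)
```

rmr2 passes the map function whole chunks of the input, so in R `keyval(v, v^2)` pairs every element of the vector `v` with its square at once; the Python list comprehension spells out that element-wise pairing.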

- 75. RHadoop

- 76. RHive is an R extension that facilitates distributed computing via Hive queries. It lets you run HQL, Hive's SQL-like query language, from R, and use R objects and functions inside HQL.

- 77. Examples:
library(RHive)  ## load the RHive library into R
rhive.init()
rhive.connect("HiveServer_IP")  ## try to connect to the Hive server
dt <- rhive.query("select * from emp")  ## execute an HQL (Hive) query

- 79. Deployment with Revolution R Enterprise

- 96. Questions?

- 97. Thank you
Mahabubur Rahaman, Sr. Software Engineer
Orion Informatics Ltd, Dhaka, Bangladesh